The appearance of the BERT (Bidirectional Encoder Representations from Transformers) model in 2018 has certainly been an inflection point in the NLP world. "BERT models have demonstrated a very sophisticated knowledge of language, achieving human-level performance on certain tasks" [1]. BERT is a pre-trained model (trained by Google on a huge corpus) that can be fine-tuned and repurposed for different NLP tasks. It was introduced by Google AI Language in 2018 (the paper was published at NAACL in 2019). See [2] for the paper and [3] for Google's announcement of open-sourcing BERT.

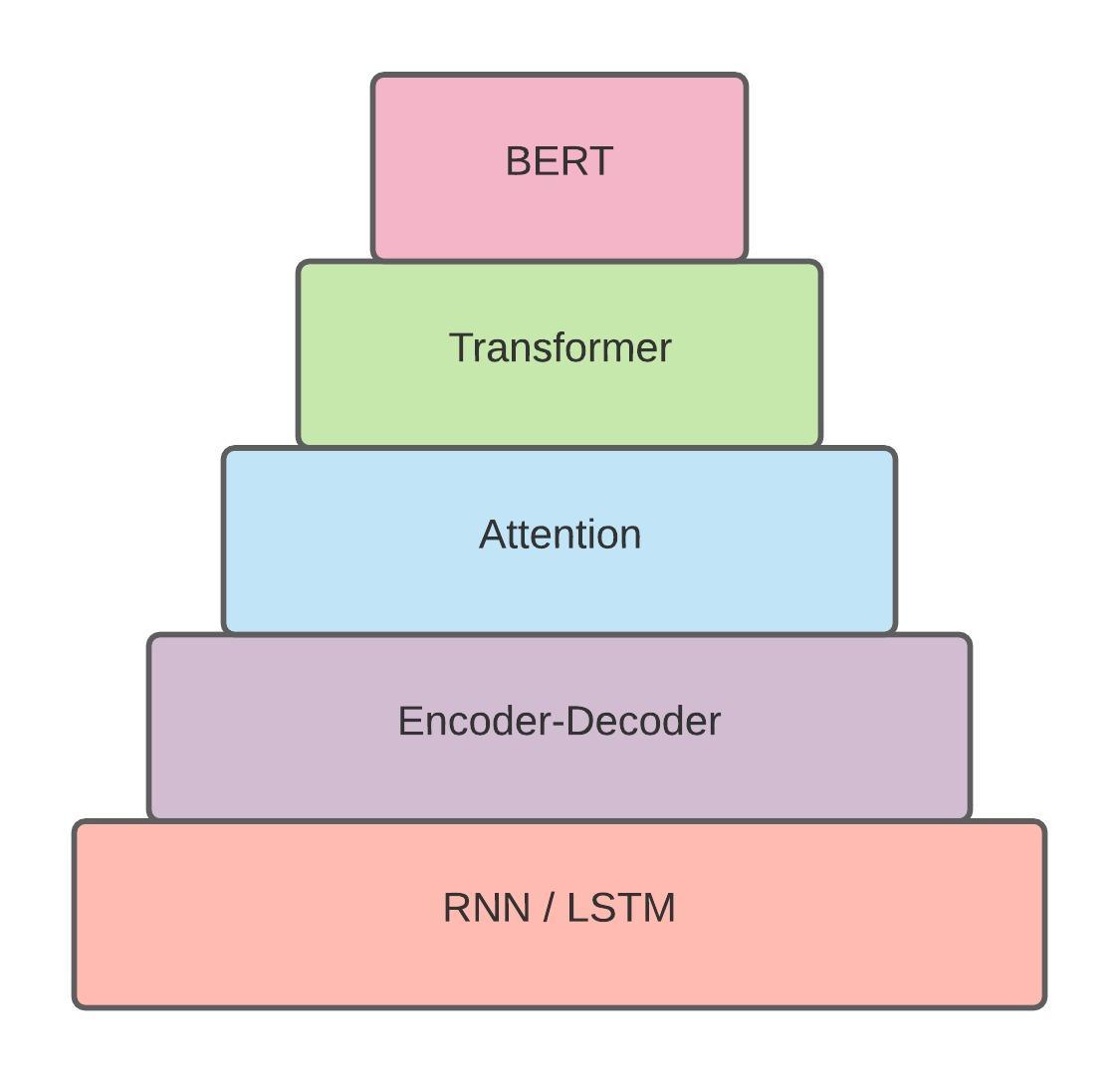

The BERT model is the result of a series of steady advances in applying deep learning models to NLP tasks. Figure 1 shows the hierarchy of advancements leading to BERT. I got the idea for this figure from [4] by Chris McCormick, so the credit should go to him! He called this hierarchy the BERT mountain; however, I'd like to call it the BERT Cake here, as it looks more like a cake to me!

Understanding the lower levels of the BERT Cake, on which BERT was built, seems necessary before diving deep into BERT itself. I highly recommend going over [5] and [6], two blogs that contain amazing illustrations of these concepts, to get an overview of the necessary background.

The Transformer was first proposed in the paper "Attention Is All You Need" [7]. The Harvard NLP group has published a notebook containing a full implementation of the paper [8].

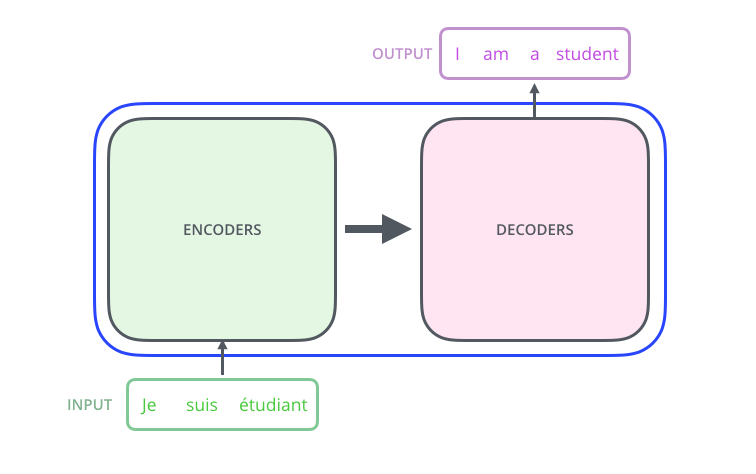

The Transformer is a newer kind of sequence-to-sequence transduction model (e.g., a machine translation model; Fig 2). Prior to the invention of the Transformer, the dominant sequence transduction models were based on recurrent encoder and decoder networks connected via an attention mechanism ([5], [9], [10]).

Fig2: Sequence Transduction Model (source: [6])
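To make "connected via an attention mechanism" more concrete, here is a minimal Python sketch of a single attention step between a recurrent encoder and decoder. It assumes the encoder has already produced one hidden state per source token, and it uses the simple "dot" score described in [10] (Bahdanau et al. [9] use an additive score instead). The RNNs themselves are omitted, and the toy vectors are illustrative assumptions rather than any paper's exact setup.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(decoder_state: Vector, encoder_states: List[Vector]) -> Tuple[Vector, List[float]]:
    """Return the context vector and attention weights for one decoder step."""
    # Score every encoder hidden state against the current decoder state ("dot" score).
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder hidden states.
    dim = len(encoder_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return context, weights


# Toy example: three encoder states (one per source token) and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.0, 2.0]
context, weights = attend(decoder_state, encoder_states)
print([round(w, 3) for w in weights])
```

The decoder state here is most similar to the second and third source states, so they receive most of the weight and dominate the context vector that the decoder uses at this step.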

Using recurrent networks as the encoder and decoder in transduction models leads to some limitations. As we know, the hidden states of an RNN have temporal (time) dependencies on one another: computing h(t) has to wait for h(t-1) to be computed, and so on. This sequential dependency precludes parallelizing the computation within a training example; consequently, training RNN-based transduction models is expensive in both time and memory.

The Transformer, however, dispenses with recurrence and instead relies entirely on an attention mechanism to draw global dependencies between input and output. As mentioned in the main paper [7]: "The Transformer allows for significantly more parallelization and can reach a new state of the art in translation quality after being trained for as little as twelve hours on eight P100 GPUs". As the authors claim: "The Transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output without using sequence-aligned RNNs or convolution".
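The contrast between the two approaches can be sketched in a few lines of Python. The toy scalar RNN below (its weights `w_x` and `w_h` are arbitrary assumptions) has to walk the sequence in order, because each h(t) needs h(t-1). The self-attention function is deliberately simplified, with no learned query/key/value projections and no scaling, but it shows the key property: the output at position i depends only on the inputs, so every position can be computed independently, and therefore in parallel.

```python
import math
from typing import List

Vector = List[float]


def rnn_hidden_states(xs: List[float], w_x: float = 0.5, w_h: float = 0.8) -> List[float]:
    """Toy scalar RNN: h(t) = tanh(w_x * x(t) + w_h * h(t-1)).

    Each step needs the previous hidden state, so the loop over t is inherently sequential.
    """
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)  # depends on h(t-1)
        states.append(h)
    return states


def self_attention_at(i: int, xs: List[Vector]) -> Vector:
    """Simplified dot-product self-attention output for position i.

    Only the inputs xs are used (never another position's output), so all
    positions can be computed independently of one another.
    """
    scores = [sum(a * b for a, b in zip(xs[i], x)) for x in xs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(xs[0])
    return [sum(w * x[d] for w, x in zip(weights, xs)) for d in range(dim)]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Positions can be processed in any order (or concurrently) with identical results.
forward = [self_attention_at(i, tokens) for i in range(len(tokens))]
backward = [self_attention_at(i, tokens) for i in reversed(range(len(tokens)))][::-1]
print(forward == backward)  # True
print(rnn_hidden_states([1.0, 0.0, 1.0]))
```

Note that the RNN's second state is non-zero even though its input is 0.0: the value carried over from h(t-1) is exactly the dependency that forces sequential computation.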

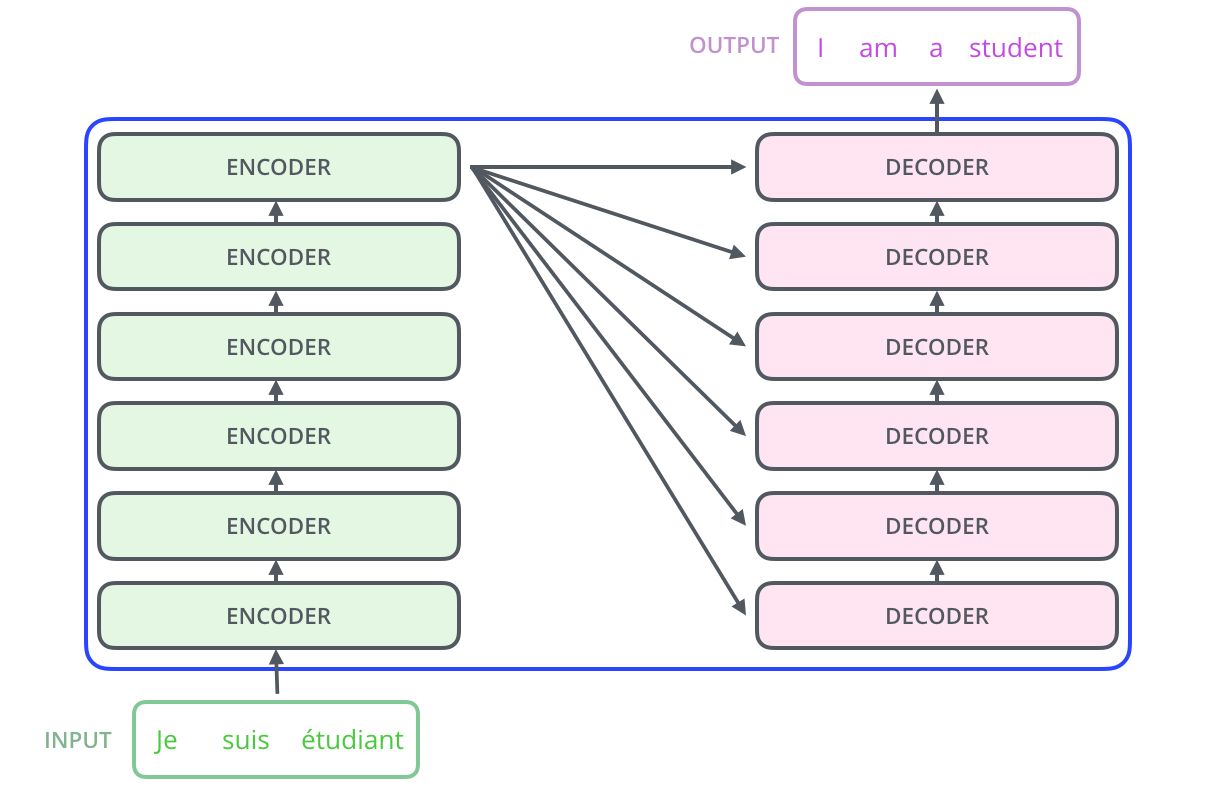

The encoder and decoder components of the Transformer are a stack of six encoders and a stack of six decoders, respectively (Fig 3).

Fig3: The Transformer Decoder and Encoder Stacks (source: [6])
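Structurally, the two stacks can be sketched as plain function composition. This is an illustrative Python sketch, not the paper's code: the toy layers are hypothetical stand-ins, and the detail that every decoder receives the final output of the encoder stack comes from the original paper [7], where the decoders use it in their encoder-decoder attention.

```python
from typing import Callable, List

Vector = List[float]
Tokens = List[Vector]  # one vector per token position
EncoderLayer = Callable[[Tokens], Tokens]
DecoderLayer = Callable[[Tokens, Tokens], Tokens]

N_LAYERS = 6  # six encoders and six decoders, as in Fig 3


def encode(layers: List[EncoderLayer], src: Tokens) -> Tokens:
    x = src
    for layer in layers:
        x = layer(x)  # the output of each encoder is the input to the next one
    return x


def decode(layers: List[DecoderLayer], tgt: Tokens, memory: Tokens) -> Tokens:
    y = tgt
    for layer in layers:
        y = layer(y, memory)  # every decoder also receives the final encoder output
    return y


# Hypothetical toy layers, used only to show how data flows through the stacks.
def toy_encoder_layer(x: Tokens) -> Tokens:
    return [[v + 1.0 for v in vec] for vec in x]


def toy_decoder_layer(y: Tokens, memory: Tokens) -> Tokens:
    # Shift y by the mean of the encoder output, standing in for encoder-decoder attention.
    mean = sum(sum(vec) for vec in memory) / sum(len(vec) for vec in memory)
    return [[v + mean for v in vec] for vec in y]


memory = encode([toy_encoder_layer] * N_LAYERS, [[0.0, 0.0]])
out = decode([toy_decoder_layer] * N_LAYERS, [[0.0]], memory)
print(memory, out)  # [[6.0, 6.0]] [[36.0]]
```

In the real model the six layers in each stack share the same architecture but have their own learned weights; reusing one toy function six times here is only for brevity.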

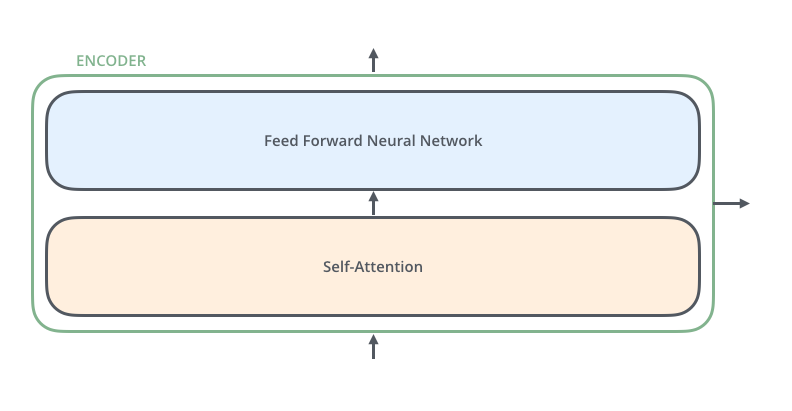

Each of the six encoders in the encoder stack has two sub-layers: the first is a multi-head self-attention mechanism (more on this in the following sections), and the second is a simple, position-wise fully connected feed-forward network (Fig 4).

Fig4: Transformer Encoder (source: [6])
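Below is a small, dependency-free Python sketch of one encoder layer. It assumes the arrangement described in [7], where each sub-layer is wrapped as LayerNorm(x + Sublayer(x)). The weights are random placeholders, and biases, dropout, masking, positional encodings, and the learnable layer-norm gain and bias are all omitted; the toy sizes (d_model = 4, 2 heads, d_ff = 8) stand in for the paper's 512, 8, and 2048.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)  # subtract the max for numerical stability
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def layer_norm(a: Matrix, eps: float = 1e-6) -> Matrix:
    # Normalize each position's vector to zero mean and unit variance (no gain/bias).
    out = []
    for row in a:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def rand_matrix(rng: random.Random, rows: int, cols: int) -> Matrix:
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class EncoderLayer:
    """Multi-head self-attention, then a position-wise feed-forward network,
    each wrapped as LayerNorm(x + sublayer(x))."""

    def __init__(self, d_model: int = 4, n_heads: int = 2, d_ff: int = 8, seed: int = 0):
        assert d_model % n_heads == 0
        rng = random.Random(seed)
        self.n_heads, self.d_k = n_heads, d_model // n_heads
        # Per-head query/key/value projections (d_model x d_k) and the output projection.
        self.w_q = [rand_matrix(rng, d_model, self.d_k) for _ in range(n_heads)]
        self.w_k = [rand_matrix(rng, d_model, self.d_k) for _ in range(n_heads)]
        self.w_v = [rand_matrix(rng, d_model, self.d_k) for _ in range(n_heads)]
        self.w_o = rand_matrix(rng, n_heads * self.d_k, d_model)
        self.w_1 = rand_matrix(rng, d_model, d_ff)
        self.w_2 = rand_matrix(rng, d_ff, d_model)

    def self_attention(self, x: Matrix) -> Matrix:
        heads = []
        for h in range(self.n_heads):
            q, k, v = matmul(x, self.w_q[h]), matmul(x, self.w_k[h]), matmul(x, self.w_v[h])
            # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
            scores = [[s / math.sqrt(self.d_k) for s in row] for row in matmul(q, transpose(k))]
            heads.append(matmul(softmax_rows(scores), v))
        # Concatenate the heads for each position, then project back to d_model.
        concat = [sum((head[i] for head in heads), []) for i in range(len(x))]
        return matmul(concat, self.w_o)

    def feed_forward(self, x: Matrix) -> Matrix:
        # max(0, x W1) W2, applied to each position independently (biases omitted).
        hidden = [[max(0.0, v) for v in row] for row in matmul(x, self.w_1)]
        return matmul(hidden, self.w_2)

    def __call__(self, x: Matrix) -> Matrix:
        x = layer_norm(add(x, self.self_attention(x)))
        return layer_norm(add(x, self.feed_forward(x)))


# Usage: three tokens with d_model = 4, passed through a stack of six encoders.
x = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
encoders = [EncoderLayer(seed=i) for i in range(6)]
out = x
for encoder in encoders:
    out = encoder(out)
print(len(out), len(out[0]))  # 3 4
```

Each encoder maps a sequence of d_model-sized vectors to another sequence of the same shape, which is what allows the six encoders to be chained one after another.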

[1] Chris McCormick. The Inner Workings of BERT (2020)

[2] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

[3] Open Sourcing BERT: State-of-the-Art Pre-training for Natural Language Processing

[4] BERT Research - Ep. 1 - Key Concepts & Sources

[5] Visualizing A Neural Machine Translation Model (Mechanics of Seq2seq Models With Attention)

[6] The Illustrated Transformer

[7] Attention Is All You Need

[8] The Annotated Transformer

[9] Neural Machine Translation by Jointly Learning to Align and Translate

[10] Effective Approaches to Attention-based Neural Machine Translation