CVPR 2023

- June 20, 2023: Initial release with sample preprocessed data.

- July 3, 2023: Added instructions for processing new videos and all preprocessed data from subject one.

- Python requirements: requirements.txt or requirements_reduce.txt

- MANO model for the hand-only model

- SMPLX model for the hand-and-arm model

- Mesh Transformer for initial hand mesh prediction on new videos

The easiest way to run the code is to use conda.

The code has been tested on Ubuntu 20.04 with Python 3.8 and 3.9.

Installation with Python 3.9

- Create a conda env with Python 3.9:

  conda create -n harp python=3.9 && conda activate harp

- Install the requirements for pytorch3d version 0.6.2:

  conda install pytorch==1.11.0 torchvision==0.12.0 cudatoolkit=11.3 -c pytorch
  conda install -c fvcore -c iopath -c conda-forge fvcore iopath

- Install pytorch3d version 0.6.2:

  conda install pytorch3d=0.6.2 -c pytorch3d

- Install other packages:

  pip install -r requirements_reduce.txt
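Before moving on, it can help to confirm that the pinned versions actually landed in the active environment. The following is a minimal sketch, not part of the HARP repository: it reads package metadata with Python's standard importlib.metadata and compares it with the versions pinned in the steps above. The helper names are hypothetical, and it assumes the packages are registered under the distribution names torch, torchvision and pytorch3d; a conda build that does not expose metadata will simply be reported as missing.

```python
"""Sanity-check the pinned versions from the installation steps (sketch)."""
from importlib import metadata

# Versions pinned in the installation steps (assumed distribution names).
EXPECTED = {
    "torch": "1.11.0",
    "torchvision": "0.12.0",
    "pytorch3d": "0.6.2",
}


def installed_versions(packages):
    """Return {package: version string, or None if not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


def mismatches(installed, expected=EXPECTED):
    """List (package, installed, expected) tuples whose versions differ.

    Local build tags such as "+cu113" are stripped before comparing,
    so "1.11.0+cu113" counts as 1.11.0.
    """
    problems = []
    for name, want in expected.items():
        have = installed.get(name)
        base = have.split("+")[0] if have else None
        if base != want:
            problems.append((name, have, want))
    return problems


if __name__ == "__main__":
    for name, have, want in mismatches(installed_versions(EXPECTED)):
        print(f"{name}: found {have}, expected {want}")
```

Run it inside the harp environment; no output means all three pins match. The build-tag stripping is there because pip wheels of PyTorch usually carry a CUDA suffix while conda builds often do not.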

For other versions of Python and PyTorch, check the good summary by mJones00 here.

NOTE: As the Python requirements for Mesh Transformer and PyTorch3D are different, they need to be installed in separate conda environments.

- Download smplx and put it in ./hand_models/.

- Replace ./hand_models/smplx/smplx/body_models.py and ./hand_models/smplx/smplx/__init__.py with our version in ./hand_models_harp/.

- Download MANO and put it in the root directory: ./mano/ and ./manopth/.

- Download the sample preprocessed sequence from here.

- Put the data in ../data/. The path can be changed in utils/config_utils.py.

- Released data (from one subject, with different appearance variations) can be found here.
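A quick way to catch a misplaced download is to check for the paths named in the two lists above. This is a hypothetical helper, not part of HARP: the path list is taken from this README, relative to the repository root, and assumes the default data location ../data; if you changed the path in utils/config_utils.py, pass your own list.

```python
"""Check that models and data sit where the README expects them (sketch)."""
from pathlib import Path

# Paths from the README, relative to the repository root.
# "../data" is the default data location (configurable in utils/config_utils.py).
EXPECTED_PATHS = [
    "hand_models/smplx/smplx/body_models.py",
    "hand_models/smplx/smplx/__init__.py",
    "hand_models_harp",
    "mano",
    "manopth",
    "../data",
]


def missing_paths(repo_root, expected=EXPECTED_PATHS):
    """Return the expected paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    for rel in missing_paths("."):
        print("missing:", rel)
```

Note that this only checks existence: it cannot tell whether body_models.py and __init__.py have already been replaced with the versions from ./hand_models_harp/.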

To start optimizing the sequence from the coarse initialization, run:

python optmize_sequence.py

The output images are written to the exp folder, as set in config_utils.py.

MeshTransformer Installation

- Install MeshTransformer following the instructions in their repo.

- Copy the files in ./metro_modifications over the following files in ./MeshTransformer/metro:
  - ./MeshTransformer/metro/tools/end2end_inference_handmesh.py
  - ./MeshTransformer/metro/hand_utils/hand_utils.py
  - ./MeshTransformer/metro/utils/renderer.py

- Set SMPLX_PATH in end2end_inference_handmesh.py.

- Set the new sequence path at L150:
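The file-copy step in the MeshTransformer list can be scripted. This is a minimal sketch with a hypothetical helper name, not a script shipped with HARP. The README does not say how ./metro_modifications is laid out, so the sketch assumes only that each target file name occurs exactly once somewhere under it (a flat or a nested layout both work), and it refuses to create files that MeshTransformer has not already installed.

```python
"""Copy HARP's modified METRO files over the MeshTransformer originals (sketch)."""
import shutil
from pathlib import Path

# Files to replace, as listed in the README (relative to the repository root).
TARGETS = [
    "MeshTransformer/metro/tools/end2end_inference_handmesh.py",
    "MeshTransformer/metro/hand_utils/hand_utils.py",
    "MeshTransformer/metro/utils/renderer.py",
]


def copy_metro_modifications(repo_root, src_dir="metro_modifications", targets=TARGETS):
    """Overwrite each target with the same-named file found under src_dir.

    Assumes every file name occurs exactly once under src_dir.
    Returns the list of replaced targets.
    """
    root = Path(repo_root)
    replaced = []
    for rel in targets:
        dest = root / rel
        if not dest.is_file():
            # We only replace files; MeshTransformer must be installed first.
            raise FileNotFoundError(f"{dest} not found; install MeshTransformer first")
        matches = sorted((root / src_dir).rglob(dest.name))
        if len(matches) != 1:
            raise FileNotFoundError(
                f"expected one {dest.name} under {src_dir}, found {len(matches)}"
            )
        shutil.copy2(matches[0], dest)
        replaced.append(rel)
    return replaced


if __name__ == "__main__":
    for rel in copy_metro_modifications("."):
        print("replaced", rel)
```

Failing loudly on a missing or duplicated source file is deliberate: silently keeping the stock METRO file would only surface later as a confusing inference error.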

- Get the hand segmentation mask using Unscreen or RVM or any other tool. We used RVM for the sample sequence from InterHand2.6M and Unscreen in other cases.

- Put them in the same structure as in the sample sequence.

- For output from Unscreen, you can download the .gif file, then use ffmpeg to split both the original video and the .gif into frames:

  SEQ=name
  mkdir ${SEQ}
  mkdir ${SEQ}/image
  mkdir ${SEQ}/unscreen
  ffmpeg -i ${SEQ}.mp4 -vf fps=30 ${SEQ}/image/%04d.png
  ffmpeg -i ${SEQ}.gif -vsync 0 ${SEQ}/unscreen/%04d.png

- The end2end_inference_handmesh script has the option to convert the empty background from Unscreen into a white background with the flag --do_crop.

- The

- Run METRO to get the initial hand mesh:

  python ./metro/tools/end2end_inference_handmesh.py --resume_checkpoint ./models/metro_release/metro_hand_state_dict.bin --image_file_or_path PATH --do_crop

- (Run by default) Fit the hand model to the METRO output. This step is needed because METRO only predicts vertex locations.

- Change the path in utils/config_utils.py and run python optmize_sequence.py.
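The frame-extraction and background steps for a new video can also be driven from Python. The sketch below is an illustration, not code from HARP or METRO, and every helper name in it is hypothetical: extract_frame_commands mirrors the ffmpeg calls listed above, unmatched_frames checks that image/ and unscreen/ line up frame by frame, and composite_on_white shows generic alpha compositing onto white, which is one way to obtain the white background that --do_crop produces (the actual --do_crop code may differ). It assumes ffmpeg is on the PATH and that the extracted Unscreen frames keep their transparency as an alpha channel.

```python
"""Helpers for preparing a new sequence (sketch, not part of HARP)."""
import subprocess
from pathlib import Path

import numpy as np


def extract_frame_commands(seq, fps=30):
    """Return the two ffmpeg calls from the README for sequence `seq`."""
    return [
        # Original video -> seq/image/0001.png, ... resampled to `fps` frames/s.
        ["ffmpeg", "-i", f"{seq}.mp4", "-vf", f"fps={fps}", f"{seq}/image/%04d.png"],
        # Unscreen .gif -> seq/unscreen/0001.png, ... frames passed through as-is.
        ["ffmpeg", "-i", f"{seq}.gif", "-vsync", "0", f"{seq}/unscreen/%04d.png"],
    ]


def extract_frames(seq, fps=30):
    """Create the folders (like the mkdir lines) and run both ffmpeg calls."""
    for sub in ("image", "unscreen"):
        Path(seq, sub).mkdir(parents=True, exist_ok=True)
    for cmd in extract_frame_commands(seq, fps):
        subprocess.run(cmd, check=True)


def unmatched_frames(seq):
    """Frame names that exist in only one of seq/image and seq/unscreen."""
    images = {p.name for p in Path(seq, "image").glob("*.png")}
    masks = {p.name for p in Path(seq, "unscreen").glob("*.png")}
    return sorted(images ^ masks)


def composite_on_white(rgba):
    """Blend an RGBA uint8 frame of shape (H, W, 4) over a white background."""
    rgb = rgba[..., :3].astype(np.float32)
    alpha = rgba[..., 3:4].astype(np.float32) / 255.0
    out = rgb * alpha + 255.0 * (1.0 - alpha)
    return np.round(out).astype(np.uint8)
```

After extract_frames("name"), a non-empty unmatched_frames("name") usually means the two inputs were split at different frame rates: the video is resampled to fps=30, while -vsync 0 keeps the .gif at its own rate.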

@inproceedings{karunratanakul2023harp,

author = {Karunratanakul, Korrawe and Prokudin, Sergey and Hilliges, Otmar and Tang, Siyu},

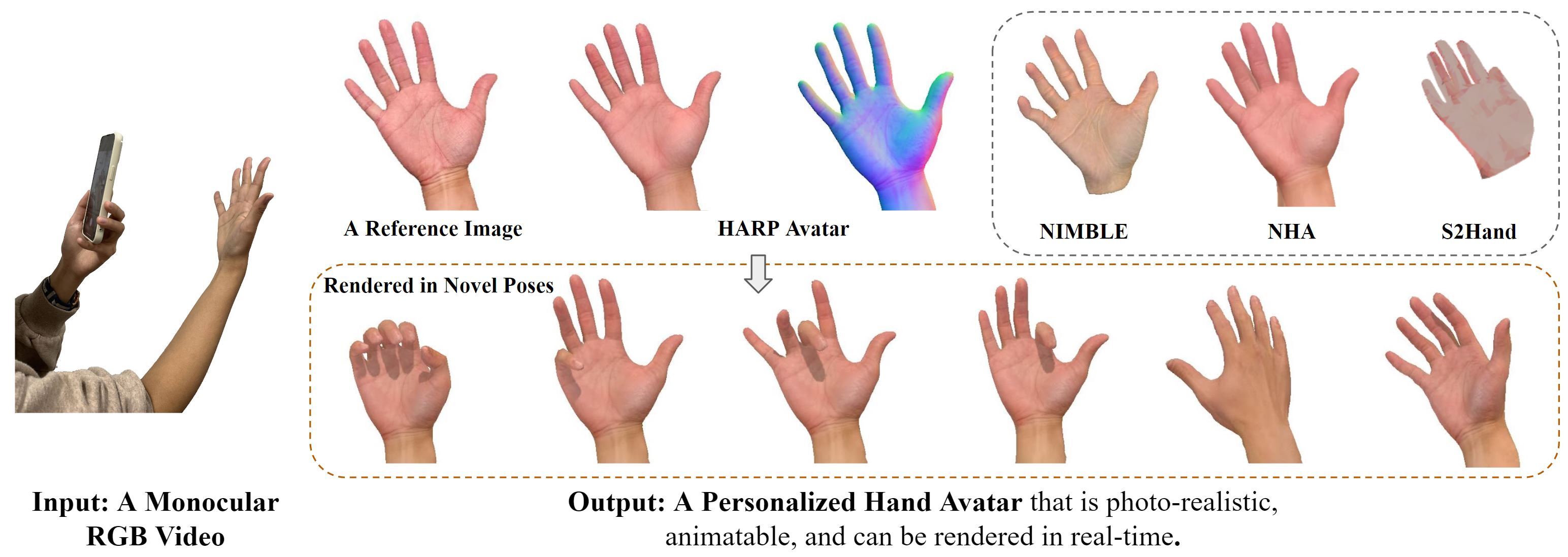

title = {HARP: Personalized Hand Reconstruction from a Monocular RGB Video},

booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2023},

}

Parts of the code are based on the following repositories. Please consider citing them in the relevant context:

- The body_models code is built on top of the base class from SMPLX, which falls under their license.

- The renderer is based on the renderer from PyTorch3D.

- The MANO model implementation from Yana Hasson.

- The hand fitting code from Grasping Field.