Contact Point:

- Alkis Koudounas: alkis.koudounas@polito.it


Thesis Projects on Audio and Speech Processing

- Exploring Deep Learning Techniques to Improve Voice Disorder Diagnoses

- Continual Learning in Spoken Language Understanding scenarios

- Fearless Steps APOLLO

- Emotional Speech Synthesis

- Investigating fairness and bias in E2E SLU Models

- Speech XAI: explaining the reasons behind speech model predictions

- Combining Speech and Text Language Models

- Music Generation

If you are a student at Politecnico di Torino, the LaTeX template for writing the master's thesis is available on Overleaf.

The first step is to create a GitHub Education account and an ad-hoc repository containing all relevant code and information for the master's thesis.

The research work expected during the development of the master's thesis will cover the following steps.

Collect, read, and analyze the most recent and relevant publications in the proposed application field. Related works can be summarized and presented using the Markdown template available here. Publications can be searched using the following services:

Most theses require a data collection or data search step. While exploring the state of the art, the student is asked to collect and organize the data used by each publication. The datasets must be presented in an organized way. If a new dataset is created or parsed, please describe both the data collection procedure and the statistics of the resulting data.

The code must be organized in a GitHub repository, documented, and easy to use. It must also be tested and able to run on a different machine.

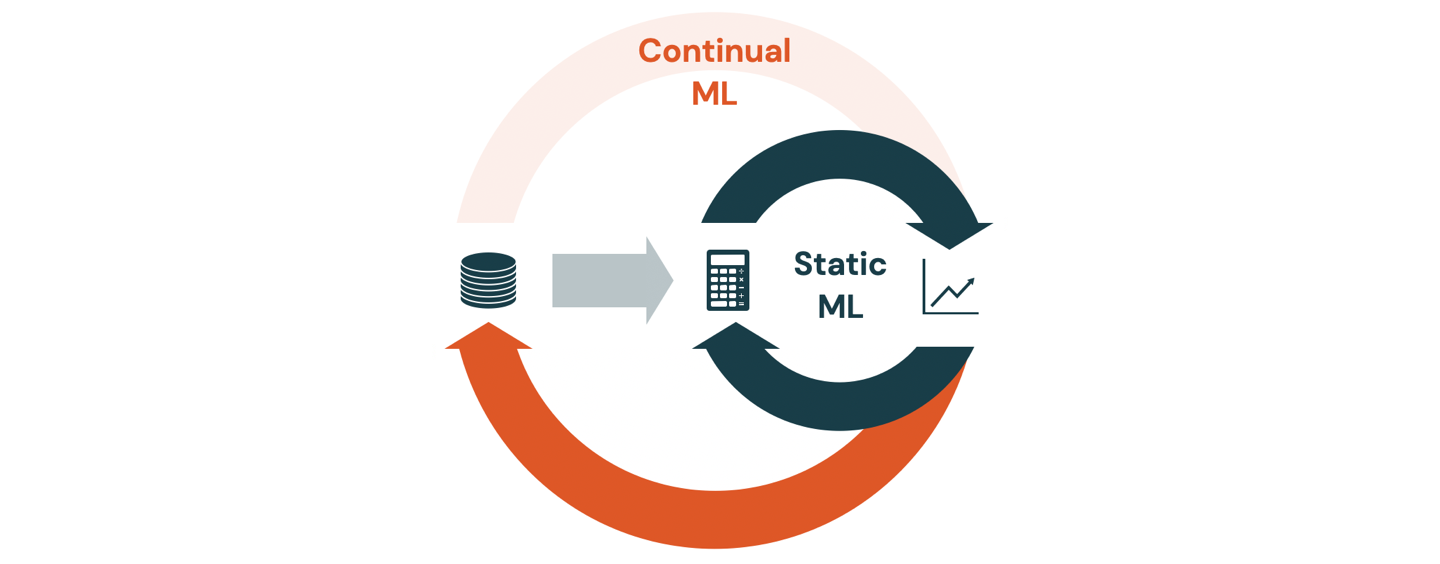

Continual learning (CL) is a way for models to keep learning from new data over time. As the data changes or new data comes in, the model needs to adjust and adapt without forgetting what it has already learned.

Scenario: Spoken Language Understanding (virtual assistants, home devices, etc.)

Problem: there is little to no literature on continual learning for speech-related tasks.

Common Approaches: regularization losses, rehearsal or experience replay, architectural changes.
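Of the common approaches listed, rehearsal (experience replay) is the easiest to sketch. The Python example below is a minimal, framework-agnostic replay memory that keeps a fixed-size, uniformly random sample of all past examples via reservoir sampling, and mixes replayed examples into each new training batch. `ReplayBuffer` and `rehearsal_batch` are hypothetical names, and reservoir sampling is only one possible selection strategy; it is not necessarily the one used in the referenced works.

```python
import random
from typing import Any, List


class ReplayBuffer:
    """Fixed-capacity rehearsal memory filled by reservoir sampling (hypothetical helper).

    After n examples have been seen, each of them is stored with the same
    probability capacity / n, regardless of which task it came from.
    """

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.memory: List[Any] = []
        self.num_seen = 0
        self.rng = random.Random(seed)

    def add(self, example: Any) -> None:
        self.num_seen += 1
        if len(self.memory) < self.capacity:
            # Buffer not full yet: always store the example.
            self.memory.append(example)
        else:
            # Keep the new example with probability capacity / num_seen,
            # overwriting a uniformly chosen slot.
            j = self.rng.randrange(self.num_seen)
            if j < self.capacity:
                self.memory[j] = example

    def sample(self, k: int) -> List[Any]:
        # Draw up to k stored examples without replacement for rehearsal.
        return self.rng.sample(self.memory, min(k, len(self.memory)))


def rehearsal_batch(new_batch: List[Any], buffer: ReplayBuffer, replay_size: int) -> List[Any]:
    """Mix the current task's batch with replayed past examples, then store the new ones."""
    mixed = list(new_batch) + buffer.sample(replay_size)
    for example in new_batch:
        buffer.add(example)
    return mixed
```

In a training loop, each batch of the current task would be passed through `rehearsal_batch` before the (model-specific, not shown) optimization step, so the model keeps seeing examples from earlier tasks while learning the new one.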

The main objectives of this thesis are:

- Analyze the state-of-the-art techniques for Continual Learning.

- Propose a novel approach (architecture, training procedure, etc.) to mitigate forgetting in SLU scenarios.

- Demonstrate the effectiveness of the proposed approach across different datasets and w.r.t. previous methods.

References:

- An Investigation of the Combination of Rehearsal and Knowledge Distillation in Continual Learning for Spoken Language Understanding

- A Progressive Model to Enable Continual Learning for Semantic Slot Filling

- Local-to-global learning for iterative training of production SLU models on new features

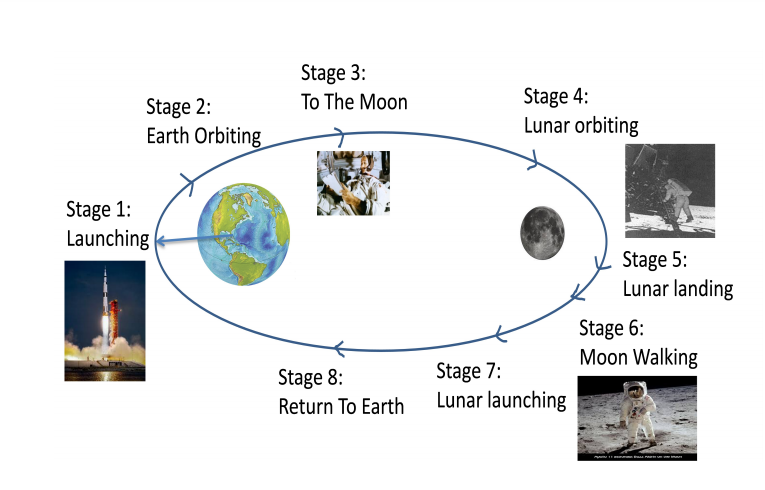

The NASA Apollo program, which placed humans on the Moon, represents one of mankind’s most significant technological challenges. Voice communications played a key role in ensuring a coordinated team effort. The primary objective of this thesis is to explore and address urgent needs within the speech/language community that can advance our field, leveraging the massive naturalistic Fearless Steps APOLLO corpus.

The main objectives of this thesis are:

- Advance the digitization and recovery of APOLLO audio from the tapes, and refine machine learning solutions to be shared as community resources.

- Understand team-based communication dynamics through speech processing.

- Apply the corpus to spoken language technology (SLT) development, including but not limited to automatic speech recognition (ASR), speech activity detection (SAD), speaker recognition, and conversational topic detection.

- Participate in the Fearless Steps APOLLO Challenge (with the possibility of publishing at top speech conferences).
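Speech activity detection is one of the SLT applications listed above. As a point of reference only, and not the method of the cited SAD paper, the sketch below implements a simple energy-threshold detector with NumPy: it splits the signal into overlapping frames, computes per-frame log energy, and labels as speech the frames that exceed an estimated noise floor by a fixed margin. The frame length, hop size, percentile, and margin are assumed values chosen for illustration; degraded, naturalistic APOLLO audio would normally call for a learned model.

```python
import numpy as np


def energy_sad(signal: np.ndarray, sr: int, frame_ms: float = 25.0,
               hop_ms: float = 10.0, margin_db: float = 6.0) -> np.ndarray:
    """Return one boolean speech/non-speech decision per frame (baseline energy SAD).

    A frame is labeled speech if its log energy exceeds an estimated
    noise floor by `margin_db` decibels. All defaults are assumptions.
    """
    frame_len = int(sr * frame_ms / 1000)
    hop = int(sr * hop_ms / 1000)
    if len(signal) < frame_len:
        return np.zeros(0, dtype=bool)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Index matrix of shape (n_frames, frame_len) selecting overlapping frames.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx].astype(np.float64)
    # Per-frame mean energy in dB; the epsilon avoids log10(0) on digital silence.
    energy_db = 10.0 * np.log10(np.mean(frames ** 2, axis=1) + 1e-12)
    # Estimate the noise floor as a low percentile of the frame energies.
    noise_floor = np.percentile(energy_db, 10)
    return energy_db > noise_floor + margin_db
```

Frame-level decisions like these are usually smoothed (e.g., with a minimum segment duration) before being converted into speech segments; that post-processing is omitted here.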

References:

- Fearless Steps APOLLO Workshop

- Fearless Steps APOLLO: Advanced Naturalistic Corpora Development

- Speech Activity Detection for Naturalistic Audio Streams

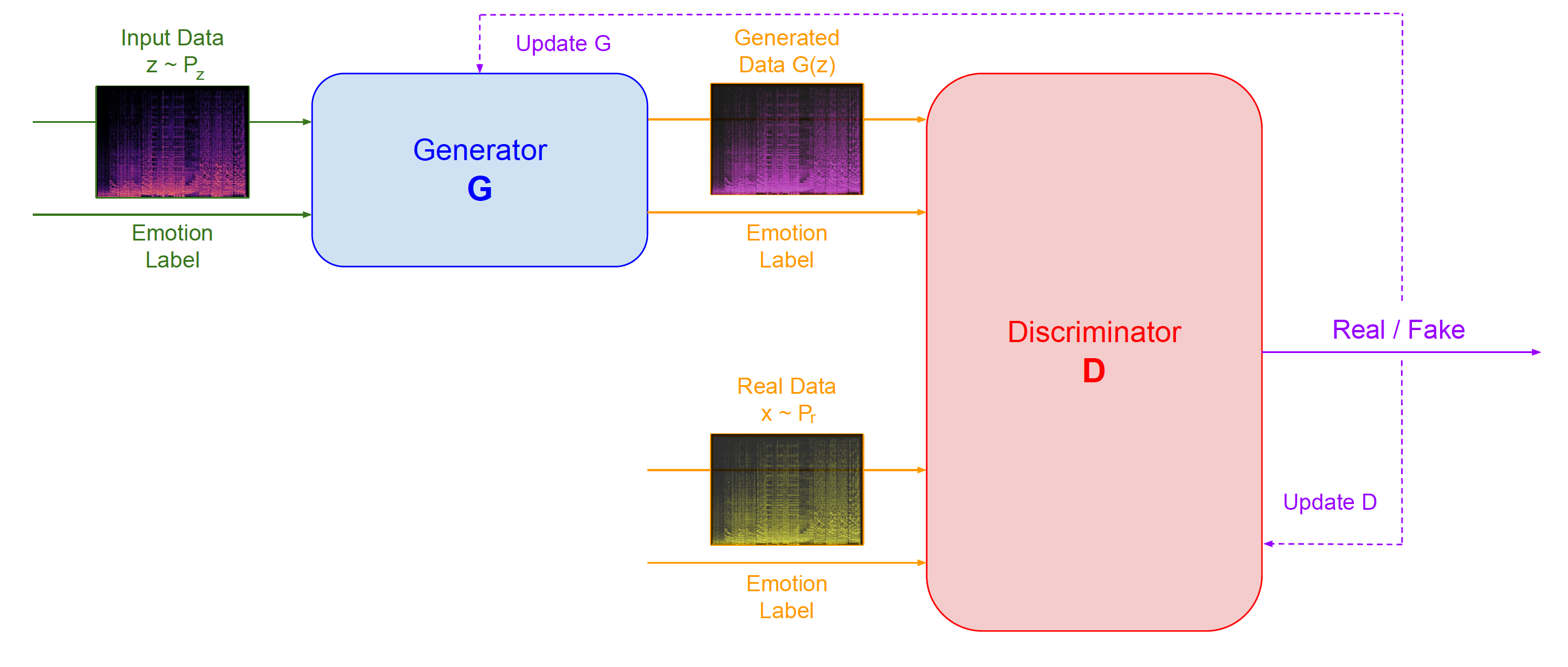

Emotional speech synthesis represents a groundbreaking technology that has the potential to reshape human-machine interaction across various domains. By infusing synthesized speech with different emotions, this technology can enhance the naturalness and effectiveness of machine-generated speech, opening up new frontiers in virtual agents, human-computer interfaces, entertainment, therapy, and assistive technologies. The implications are vast, promising a future where machines can authentically and empathetically communicate emotions, transforming how we interact and engage with artificial systems.

The main objectives of this thesis are:

- Analyze the state-of-the-art techniques for emotional speech synthesis.

- Leverage modern deep learning architectures to design a novel approach for this task.

- Demonstrate the effectiveness of the proposed approach using benchmark data collections (e.g., IEMOCAP).

References:

- Emotional Speech Synthesis: A Review

- Speech Synthesis with Mixed Emotions

- Hume AI

- A List of Voice Conversion Papers & Projects

Voice disorders are difficult to diagnose and require expensive and invasive analysis. Deep Learning techniques can provide early and non-invasive diagnoses by analyzing voice samples. However, the limited amount of medical data is a major obstacle to the development of such systems.

The main objectives of this thesis are:

- Analyze voice samples by means of Deep Learning techniques to provide early and non-invasive diagnoses.

- Explore different types of neural architectures (CNNs and Transformers).

- Explore different transfer-learning and data augmentation strategies to cope with the limited amount of medical data.
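Data augmentation is one way to cope with scarce medical recordings. The NumPy sketch below shows two common waveform-level augmentations: additive white Gaussian noise at a target signal-to-noise ratio (SNR) and a random gain. The function names and the SNR and gain ranges are illustrative assumptions, not settings from this project; with pathological voices, it should also be validated that an augmentation preserves the clinically relevant voice characteristics.

```python
from typing import Tuple

import numpy as np


def add_noise_at_snr(signal: np.ndarray, snr_db: float,
                     rng: np.random.Generator) -> np.ndarray:
    """Add white Gaussian noise so that 10*log10(P_signal / P_noise) equals snr_db."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.standard_normal(signal.shape) * np.sqrt(noise_power)
    return signal + noise


def random_gain(signal: np.ndarray, rng: np.random.Generator,
                min_db: float = -6.0, max_db: float = 6.0) -> np.ndarray:
    """Scale the waveform by a gain drawn uniformly in decibels (assumed range)."""
    gain_db = rng.uniform(min_db, max_db)
    return signal * (10.0 ** (gain_db / 20.0))


def augment(signal: np.ndarray, rng: np.random.Generator,
            snr_range: Tuple[float, float] = (10.0, 30.0)) -> np.ndarray:
    """Produce one augmented copy of a voice sample (hypothetical pipeline)."""
    snr_db = rng.uniform(*snr_range)
    return random_gain(add_noise_at_snr(signal, snr_db, rng), rng)
```

Calling `augment` several times with the same generator yields different noisy, rescaled copies of each recording, which can be added to the training set alongside transfer learning from models pretrained on larger speech corpora.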

Contact Points: Alkis Koudounas, Gabriele Ciravegna.

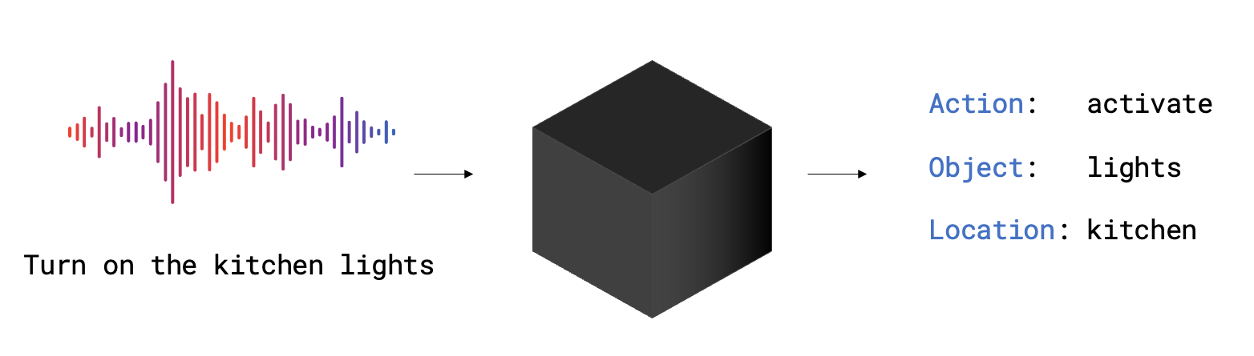

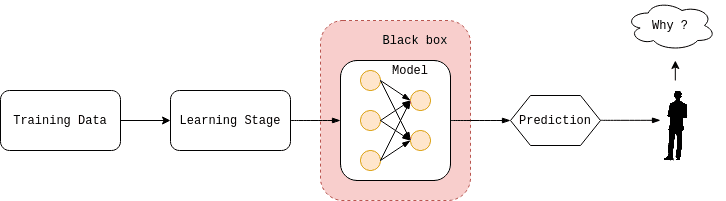

Spoken language understanding (SLU) systems typically chain an automatic speech recognition (ASR) model, which transcribes speech into text, and a natural language understanding (NLU) model, which derives meaning from that text. However, end-to-end (E2E) models offer a direct approach to extracting semantic information from speech signals, which can improve accuracy and reduce complexity. Nonetheless, E2E models are complex black boxes, making it difficult to explain their predictions and interpret their results. Investigating problematic data subgroups is therefore crucial for understanding and debugging AI pipelines and for ensuring model fairness.

This project is in collaboration with Amazon Alexa AI.

The main objectives of this thesis are:

- Analyze the state-of-the-art E2E SLU models.

- Identify model biases and sources of error in different scenarios (e.g., incremental and curriculum learning).

- Demonstrate the effectiveness of the proposed approach across different models, datasets, and tasks.

- (Optional) Propose a novel approach to mitigate the bias and improve the model's performance.
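A natural starting point for identifying biased or error-prone data subgroups is to compare each subgroup's performance with the overall performance. The sketch below enumerates subgroups defined by up to two metadata attribute values, keeps those above a minimum support, and reports their accuracy divergence (subgroup accuracy minus overall accuracy), in the spirit of the subgroup analysis in the first reference. The metadata fields, the use of plain accuracy, and the exhaustive enumeration are assumptions for illustration; the cited work's exact procedure is not reproduced here.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Tuple

Subgroup = Tuple[Tuple[str, str], ...]


def subgroup_divergence(
    metadata: List[Dict[str, str]],
    correct: List[bool],
    max_items: int = 2,
    min_support: float = 0.05,
) -> List[Tuple[Subgroup, float, float]]:
    """Return (subgroup, support, divergence) tuples sorted from worst to best.

    A subgroup is a conjunction of up to `max_items` attribute=value pairs.
    Divergence = subgroup accuracy - overall accuracy, so negative values
    flag subgroups on which the model underperforms.
    """
    n = len(correct)
    overall = sum(correct) / n
    # For each subgroup: [number of correct predictions, number of utterances].
    counts: Dict[Subgroup, List[int]] = defaultdict(lambda: [0, 0])
    for meta, ok in zip(metadata, correct):
        items = sorted(meta.items())
        for k in range(1, max_items + 1):
            for group in combinations(items, k):
                counts[group][0] += int(ok)
                counts[group][1] += 1
    results = []
    for group, (n_ok, n_tot) in counts.items():
        support = n_tot / n
        if support >= min_support:
            results.append((group, support, n_ok / n_tot - overall))
    return sorted(results, key=lambda r: r[2])
```

For example, with hypothetical per-utterance metadata such as `{"gender": "f", "speaking_rate": "fast"}` and a list of correct/incorrect intent predictions, the first entries of the returned list point to the subgroups most worth inspecting; exhaustive enumeration grows quickly with `max_items`, which is where frequent-pattern mining becomes useful.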

References:

- Exploring Subgroup Performance in End-to-End Speech Models

- Toward Fairness in Speech Recognition

- Shedding light on fairness in AI with a new data set

from The AI Summer

Speech XAI focuses on providing insights into the reasons behind predictions made by speech models. This emerging field aims to enhance transparency and interpretability in speech recognition and synthesis systems. By employing techniques such as attention mechanisms, saliency maps, and feature importance analysis, Speech XAI helps users understand why a particular prediction was made. Exposing the decision-making process of speech models fosters trust and accountability, and enables targeted improvements toward more accurate and reliable speech-based applications.
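Feature importance analysis can be illustrated with occlusion, a simple perturbation-based technique chosen here as one option among many (it is not the method of any cited work): split the audio into fixed-length segments, silence one segment at a time, and measure how much the probability of the originally predicted class drops. In the sketch below, `predict_proba` stands for any callable mapping a waveform to a vector of class probabilities; it, and the segment length, are assumptions.

```python
from typing import Callable

import numpy as np


def occlusion_importance(
    waveform: np.ndarray,
    predict_proba: Callable[[np.ndarray], np.ndarray],
    segment_len: int,
) -> np.ndarray:
    """Importance of each segment = drop in predicted-class probability when it is zeroed."""
    base_probs = predict_proba(waveform)
    target = int(np.argmax(base_probs))
    n_segments = int(np.ceil(len(waveform) / segment_len))
    scores = np.zeros(n_segments)
    for i in range(n_segments):
        occluded = waveform.copy()
        occluded[i * segment_len:(i + 1) * segment_len] = 0.0  # silence segment i
        scores[i] = base_probs[target] - predict_proba(occluded)[target]
    return scores
```

Segments with the largest scores are the regions the model relies on most for its prediction; aligning them with word timestamps turns the scores into word-level explanations. Zeroing is only one possible perturbation, and replacing segments with noise or with audio from other utterances may give different attributions.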

The main objectives of this thesis are:

- Analyze the state-of-the-art XAI techniques.

- Design a novel pipeline to analyze and debug speech models and their predictions.

- Demonstrate the effectiveness of the proposed approach using well-known benchmarks (e.g., SUPERB).

References:

- Towards Relatable Explainable AI with the Perceptual Process

- Exploring Subgroup Performance in End-to-End Speech Models

- Towards Measuring Fairness in Speech Recognition

- Interpretable Machine Learning

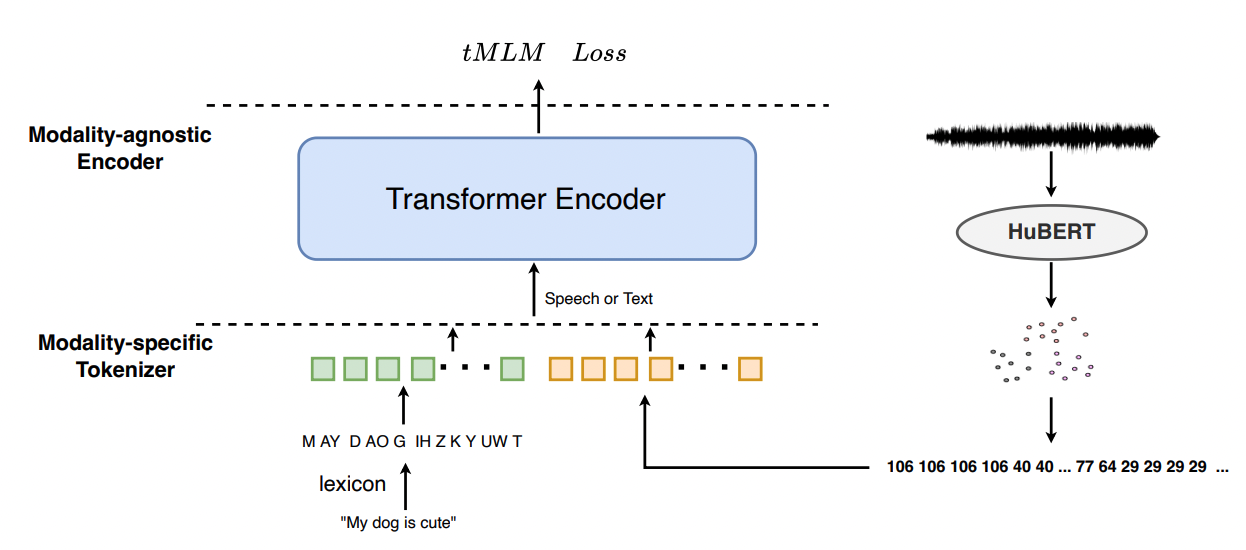

Speech, with elements such as intonation and non-verbal vocalizations, is considered the earliest form of human language. However, existing spoken language understanding systems mostly focus on its textual content, disregarding these additional components. Recent advances in speech language modeling and speech synthesis have enabled the development of speech-based language models called SpeechLMs. Nevertheless, despite the growing prevalence of speech and audio content, text remains the primary mode of communication on the internet. This relative scarcity of speech data hampers the construction of large-scale SpeechLMs comparable to the significant achievements of textual language models (LMs).

The main objectives of this thesis are:

- Analyze the state-of-the-art speech models.

- Propose a novel approach to combine speech and text modalities. Specifically, design a novel architecture capable of leveraging the advantages of both modalities.

- Demonstrate the effectiveness of the proposed approach across different datasets and tasks.
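One simple way to let a single language model consume both modalities is to discretize speech into units (e.g., clusters of self-supervised speech features) and append those unit IDs to the text vocabulary, so that one sequence can interleave text tokens and speech units. The sketch below shows only this token-ID bookkeeping; the vocabulary sizes and the text/speech modality markers are hypothetical, and it is a baseline idea rather than the novel architecture this thesis asks for.

```python
from typing import List, Tuple

TEXT_VOCAB_SIZE = 32000  # hypothetical text tokenizer size
NUM_SPEECH_UNITS = 500   # hypothetical number of discrete speech units
TEXT_MARKER = TEXT_VOCAB_SIZE + NUM_SPEECH_UNITS  # hypothetical "[TEXT]" marker ID
SPEECH_MARKER = TEXT_MARKER + 1                   # hypothetical "[SPEECH]" marker ID
JOINT_VOCAB_SIZE = SPEECH_MARKER + 1


def speech_unit_to_id(unit: int) -> int:
    """Map a discrete speech unit (0..NUM_SPEECH_UNITS-1) into the joint vocabulary."""
    if not 0 <= unit < NUM_SPEECH_UNITS:
        raise ValueError(f"speech unit out of range: {unit}")
    return TEXT_VOCAB_SIZE + unit


def interleave(spans: List[Tuple[str, List[int]]]) -> List[int]:
    """Build one joint token sequence from ("text", token_ids) and ("speech", units) spans."""
    sequence: List[int] = []
    for modality, ids in spans:
        if modality == "text":
            if any(not 0 <= t < TEXT_VOCAB_SIZE for t in ids):
                raise ValueError("text token out of range")
            sequence.append(TEXT_MARKER)
            sequence.extend(ids)
        elif modality == "speech":
            sequence.append(SPEECH_MARKER)
            sequence.extend(speech_unit_to_id(u) for u in ids)
        else:
            raise ValueError(f"unknown modality: {modality}")
    return sequence
```

With this layout, a pretrained text LM only needs its embedding and output layers resized to `JOINT_VOCAB_SIZE` rows, so text knowledge can be reused while speech units are learned.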

References:

from Analytics Vidhya


In recent years, deep music generation has witnessed remarkable advances driven by cutting-edge machine learning techniques. Generating music poses substantial challenges: it requires modeling long-range sequences and generating high-fidelity, coherent audio, while coping with the limited availability of paired audio-text data and substantial computational requirements. Several models have demonstrated impressive text-to-music generation capabilities while differing in their conditioning mechanisms. Nevertheless, they still struggle to produce vocals of satisfactory quality, often yielding unclear and unintelligible outputs. Furthermore, the potential of leveraging lyrics to improve vocal coherence and the overall musical output remains underexplored.

The main objectives of this thesis are:

- Analyze the state-of-the-art music generation models.

- Propose a novel approach to address the problem of lyrics intelligibility during the music generation process.

- Demonstrate the effectiveness of the proposed approach across different objective and subjective metrics.
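One possible objective proxy for lyrics intelligibility is the word error rate (WER) between the intended lyrics and an ASR transcription of the generated vocals. The sketch below computes WER with a word-level Levenshtein distance after lowercasing and whitespace tokenization (a simplifying assumption); obtaining the transcription, and possibly separating the vocals from the accompaniment first, is assumed to happen upstream and is not shown.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference prefix processed so far
    # and the first j hypothesis words (initially the empty reference prefix).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of a hypothesis word
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)
```

Lower WER means the generated vocals are easier to transcribe, and hence likely more intelligible; it complements, rather than replaces, subjective listening tests.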

References:

- 2024

  - Enrico Porcelli: "Evaluation of the impact of the Multi-Head Attention algorithm in Music Source Separation"

- 2023

  - Damiano Bonaccorsi: "Speech-Text Cross-Modal Learning through Self-Attention Mechanisms"

  - Giuseppe Concialdi: "Ainur: Enhancing Vocal Quality through Lyrics-Audio Embeddings in Multimodal Deep Music Generation"