This repository is a minimal version of the pytorch-a2c-ppo-acktr-gail repository. It includes only the PyTorch implementation of Proximal Policy Optimization (PPO) and is designed to be easy to use and flexible for MuJoCo environments.

Differences compared to the original pytorch-a2c-ppo-acktr-gail repository:

- Minimal code for PPO training and simplified installation process

- Using local environments in `envs/` for environment customization

- Support fine-tuning policies (i.e. training starts on the loaded policy)

- Support running enjoy.py without a learned policy (zero actions or random actions)

- The default hyperparameters are adjusted according to the suggestions from the original repository
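
The two policy-free modes in the list above (zero actions and random actions) can be pictured with a small sketch. It is not taken from enjoy.py: the function name `baseline_action`, the `mode` argument, and the explicit `low`/`high` bounds are hypothetical and stand in for whatever the environment's action space provides.

```python
import random
from typing import List, Optional, Sequence


def baseline_action(
    low: Sequence[float],
    high: Sequence[float],
    mode: str = "zero",
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Return an action that needs no learned policy (hypothetical helper)."""
    if len(low) != len(high):
        raise ValueError("low and high must have the same length")
    if mode == "zero":
        # One 0.0 per action dimension.
        return [0.0 for _ in low]
    if mode == "random":
        rng = rng or random.Random()
        # Sample each dimension uniformly within its bounds.
        return [rng.uniform(lo, hi) for lo, hi in zip(low, high)]
    raise ValueError(f"unknown mode: {mode!r}")


# Example: a 6-dimensional action space bounded by [-1, 1] (illustrative values).
low, high = [-1.0] * 6, [1.0] * 6
zero_action = baseline_action(low, high, mode="zero")
random_action = baseline_action(low, high, mode="random", rng=random.Random(0))
```

Rolling out either baseline gives a reference point for judging how much a trained policy actually improves on doing nothing or acting at random.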

pip install -r requirements.txt

# training from scratch

python train.py --env-name HalfCheetah-v2 --num-env-steps 1000000

# fine-tuning

python train.py --env-name HalfCheetah-v2 --num-env-steps 1000000 --load-dir trained_models

# with learned policy

python enjoy.py --env-name HalfCheetah-v2 --load-dir trained_models

# without learned policy (zero actions)

python enjoy.py --env-name HalfCheetah-v2

# without learned policy (random actions)

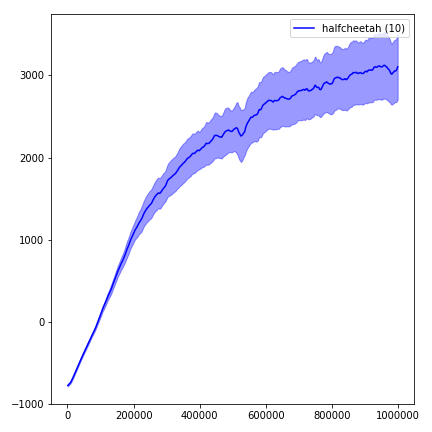

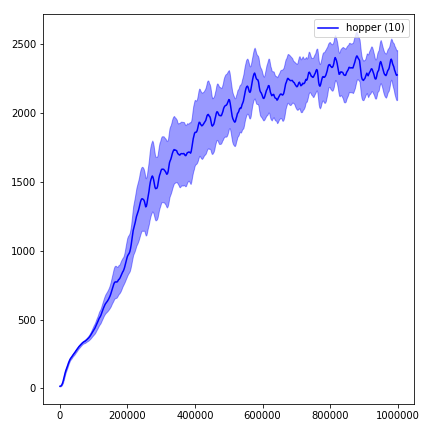

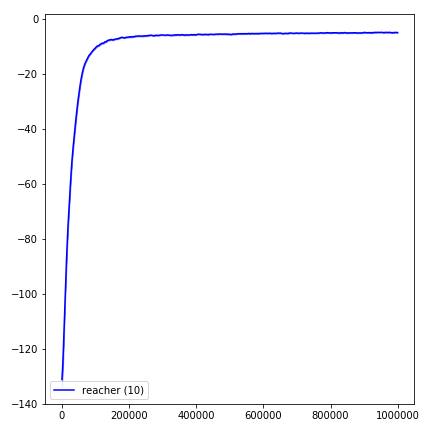

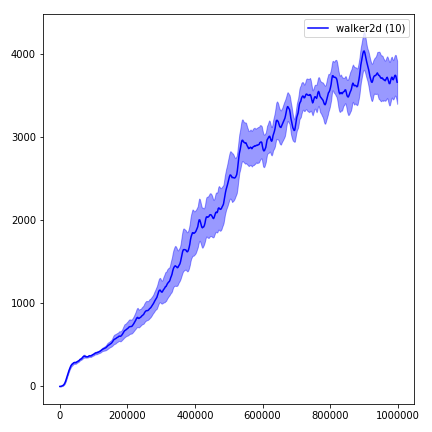

python enjoy.py --env-name HalfCheetah-v2 --randomIn order to visualize the training curves use visualize.ipynb.
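
As a script-based complement to the notebook, the sketch below smooths a series of episode returns with a moving average, a common way to make training curves readable. It assumes the training logs follow a Monitor-style CSV layout (a `#`-prefixed metadata line followed by `r,l,t` columns). That layout, the sample data, and the names `read_returns` and `moving_average` are assumptions for illustration, not something this README specifies.

```python
import csv
import io
from typing import List


def read_returns(text: str) -> List[float]:
    """Parse episode returns (column 'r') from Monitor-style CSV text."""
    # Skip blank lines and metadata lines that start with '#'.
    lines = [ln for ln in text.splitlines() if ln and not ln.startswith("#")]
    reader = csv.DictReader(io.StringIO("\n".join(lines)))
    return [float(row["r"]) for row in reader]


def moving_average(values: List[float], window: int) -> List[float]:
    """Average each value with up to window - 1 preceding values."""
    if window < 1:
        raise ValueError("window must be >= 1")
    out = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            # Drop the value that just left the window.
            running -= values[i - window]
        out.append(running / min(i + 1, window))
    return out


# Made-up sample data, only to exercise the functions.
sample = """#{"t_start": 0.0, "env_id": "HalfCheetah-v2"}
r,l,t
10.0,1000,1.0
20.0,1000,2.0
60.0,1000,3.0
"""
returns = read_returns(sample)
smoothed = moving_average(returns, window=2)
```

The smoothed list has the same length as the input, so it can be plotted against the same episode or timestep axis as the raw returns.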