This repository applies several Generative Adversarial Network (GAN) algorithms to create anime faces. I have applied four GAN algorithms:

- Deep Convolutional Generative Adversarial Networks (DCGAN).

- Wasserstein Generative Adversarial Networks (WGAN).

- StyleGAN by Nvidia Research Labs.

- StyleGAN2 ADA (PyTorch) by Nvidia Research Labs.

The dataset consists of 140,000 high-resolution 512x512 images of anime faces, about 11 GB in total, and can be downloaded here.

The code for each GAN model is provided in its respective directory.

The models were trained on Nvidia Tesla K80 GPUs through the XSEDE portal, except StyleGAN, which was trained on an Nvidia RTX 2070 because the XSEDE subscription had ended. StyleGAN2 ADA was trained on an Nvidia RTX 3060 for about 3 days to improve the results.

A GAN consists of two neural networks, a generator and a discriminator. The generator takes random noise as input (usually sampled with np.random.normal()) and passes it through several transposed convolution layers that create the fake images. The discriminator receives a mix of real and fake images and solves a simple binary classification problem: real or fake.
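As a rough illustration of how a stack of transposed convolutions turns a small feature map into a full image, the sketch below samples a noise batch and computes the side length after each upsampling layer. The latent size of 100, the 4x4 starting map, and the kernel/stride/padding values are assumptions in the style of the DCGAN paper, not values read from this repository.

```python
import numpy as np

def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

rng = np.random.default_rng(0)
latent_dim = 100  # assumed latent size, not taken from this repository
noise = rng.normal(loc=0.0, scale=1.0, size=(16, latent_dim))  # batch of 16 noise vectors

# Assumed 4x4 starting feature map, doubled by each layer until 512x512 is reached
sizes = [4]
while sizes[-1] < 512:
    sizes.append(conv_transpose_out(sizes[-1]))

print(noise.shape)  # (16, 100)
print(sizes)        # [4, 8, 16, 32, 64, 128, 256, 512]
```

With these assumed settings each layer doubles the resolution, so seven transposed convolutions are enough to go from 4x4 to the dataset's 512x512.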

The two models are trained differently, in alternating steps. In the discriminator step, the discriminator is trained on a batch of real and generated images, and the gradients update only the discriminator's weights. In the generator step, noise is passed through the full GAN model (the generator followed by the discriminator); the gradients flow back through the discriminator, but they are used only to update the generator.
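The usual alternating update can be sketched with a deliberately tiny 1-D GAN in NumPy. This is not the training code from this repository: the linear generator `a*z + b`, the logistic discriminator `sigmoid(w*x + c)`, the learning rate, and the target distribution are all hypothetical choices made so the gradients can be written by hand. The point it illustrates is which parameters each step is allowed to change.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def discriminator_step(p, x_real, z, lr=0.05):
    """Update only the discriminator parameters (w, c)."""
    x_fake = p["a"] * z + p["b"]  # generator output, treated as fixed data here
    d_real = sigmoid(p["w"] * x_real + p["c"])
    d_fake = sigmoid(p["w"] * x_fake + p["c"])
    # Gradients of -log D(x_real) - log(1 - D(x_fake)) with respect to w and c
    grad_w = np.mean((d_real - 1.0) * x_real) + np.mean(d_fake * x_fake)
    grad_c = np.mean(d_real - 1.0) + np.mean(d_fake)
    return {**p, "w": p["w"] - lr * grad_w, "c": p["c"] - lr * grad_c}

def generator_step(p, z, lr=0.05):
    """Update only the generator parameters (a, b); the discriminator is frozen."""
    x_fake = p["a"] * z + p["b"]
    d_fake = sigmoid(p["w"] * x_fake + p["c"])
    # Gradient of -log D(G(z)) flows back through the discriminator (the factor w)
    upstream = (d_fake - 1.0) * p["w"]
    grad_a = np.mean(upstream * z)
    grad_b = np.mean(upstream)
    return {**p, "a": p["a"] - lr * grad_a, "b": p["b"] - lr * grad_b}

rng = np.random.default_rng(0)
params = {"a": 1.0, "b": 0.0, "w": 0.1, "c": 0.0}  # hypothetical starting values
for _ in range(200):
    x_real = rng.normal(3.0, 0.5, size=64)  # "real" data: samples around 3.0
    params = discriminator_step(params, x_real, rng.normal(size=64))
    params = generator_step(params, rng.normal(size=64))
print(params)
```

In a framework such as Keras or PyTorch the same separation is typically achieved by freezing the discriminator's weights (or by only stepping the generator's optimizer) during the generator update.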

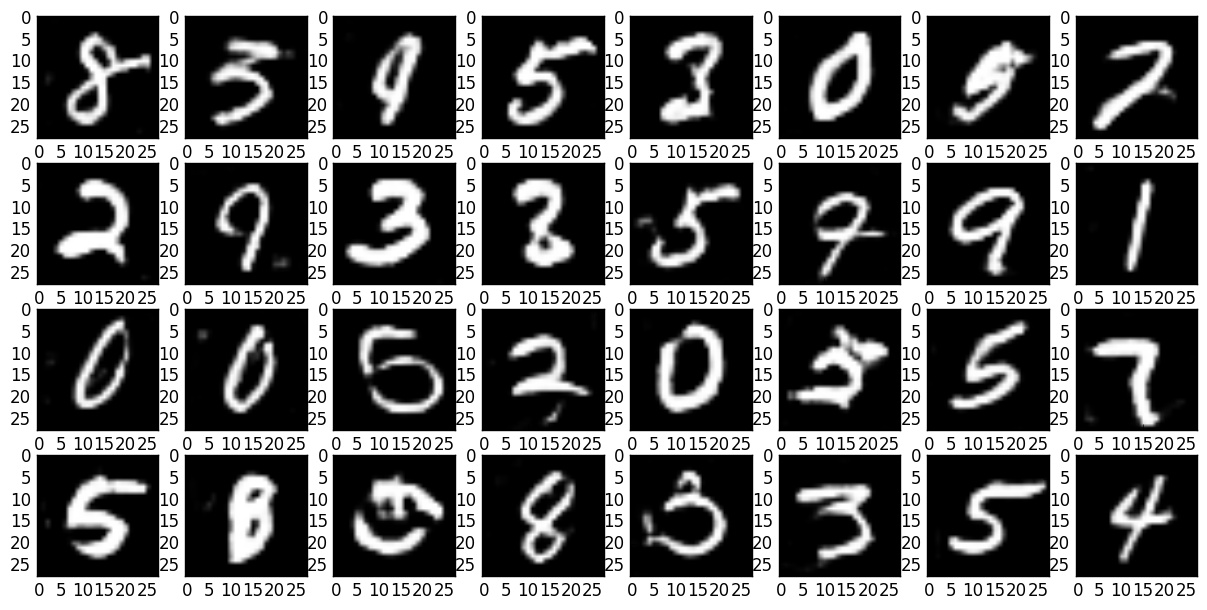

Convergence is difficult when training GANs because two neural networks have to be trained against each other. This makes hyperparameter optimization hard, and it is common for either the generator or the discriminator to collapse, resulting in faulty training. Optimization is simpler for a simpler dataset such as MNIST digits, although the results there would also have been better if the model had been trained for longer. The primary goal of using a simpler dataset was to understand how to balance the generator and discriminator and to understand model collapse.

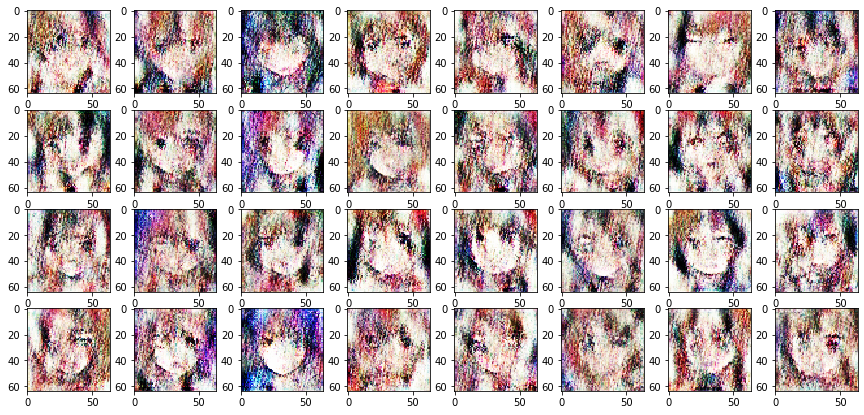

Of all the algorithms, StyleGAN2 ADA generated the best anime faces. The observations are given below.

- StyleGAN2 ADA

- StyleGAN

- DCGAN

- DCGAN for MNIST Digits

- WGAN

The WGAN model frequently suffers from generator collapse, and since StyleGAN outperforms WGAN, I invested more training time in StyleGAN than in WGAN.

Since the output after every epoch looks fascinating, I created timelapse videos and uploaded them to YouTube.

Here are the timelapse videos showing how the generator progresses with the number of epochs.

StyleGAN2 ADA performs better than StyleGAN. It was implemented a year after StyleGAN due to a lack of resources. I tried to train StyleGAN2 ADA on Google Colab, but it needed more memory, so it was trained on a local computer with an RTX 3060. StyleGAN2 ADA also requires less training time: it produced better results in fewer iterations than StyleGAN. StyleGAN2 ADA was trained for over 880 kimg, whereas StyleGAN was trained for over 4421 kimg.
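To put the kimg figures in perspective: in the StyleGAN code bases, kimg counts thousands of real images shown to the discriminator. The short calculation below converts the two training lengths into images seen and approximate passes over the 140,000-image dataset. It treats the "over 880" and "over 4421" figures as exact, so the numbers are rough, and the helper name is only for illustration.

```python
DATASET_SIZE = 140_000  # number of images in the dataset, from the description above

def passes_over_dataset(kimg, dataset_size=DATASET_SIZE):
    """Convert a training length in kimg to approximate passes over the dataset."""
    return kimg * 1000 / dataset_size

training_kimg = {"StyleGAN": 4421, "StyleGAN2 ADA": 880}
for name, kimg in training_kimg.items():
    print(f"{name}: {kimg * 1000:,} images, about {passes_over_dataset(kimg):.1f} passes")

ratio = training_kimg["StyleGAN"] / training_kimg["StyleGAN2 ADA"]
print(f"StyleGAN saw about {ratio:.1f}x as many images as StyleGAN2 ADA")
```

Under these assumptions StyleGAN saw roughly five times as many images as StyleGAN2 ADA (about 31.6 passes versus about 6.3).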

The FID50k metric was used for evaluating the models. StyleGAN achieved an FID score of 119.83 whereas StyleGAN2 ADA achieved an FID score of 14.55.
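For context, FID fits a Gaussian to Inception feature vectors of real images and another to those of generated images, then measures the Fréchet distance between the two; lower is better. The sketch below implements only that distance in NumPy. It is not the evaluation code used for the scores above: the feature extraction step is omitted and the random 8-dimensional features are stand-ins (real FID uses 2048-dimensional Inception-v3 features).

```python
import numpy as np

def feature_stats(features):
    """Mean vector and covariance matrix of an (n_samples, n_features) array."""
    return features.mean(axis=0), np.cov(features, rowvar=False)

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 * (sigma1 @ sigma2)^(1/2))."""
    diff = mu1 - mu2
    # Tr((S1 S2)^(1/2)) equals the sum of the square roots of the eigenvalues of S1 @ S2
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_sqrt)

rng = np.random.default_rng(0)
real_features = rng.normal(0.0, 1.0, size=(2000, 8))  # stand-in for real-image features
fake_features = rng.normal(0.3, 1.2, size=(2000, 8))  # stand-in for generated-image features

mu_r, sigma_r = feature_stats(real_features)
mu_f, sigma_f = feature_stats(fake_features)
print(frechet_distance(mu_r, sigma_r, mu_f, sigma_f))
```

Two identical feature distributions give a distance of zero, which is why the drop from 119.83 to 14.55 indicates that the StyleGAN2 ADA samples are statistically much closer to the real images.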

The trained models can be downloaded using this Link.

The StyleGAN models can be downloaded using this Link.

The StyleGAN2 model can be downloaded using this Link.

The entire repository is released under the MIT open-source license, except StyleGAN, which has its own license included in its directory.

- Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434. DCGAN.

- Arjovsky, M., Chintala, S., & Bottou, L. (2017). Wasserstein GAN. arXiv preprint arXiv:1701.07875. Wasserstein GAN.

- Jin, Y., Zhang, J., Li, M., Tian, Y., Zhu, H., & Fang, Z. (2017). Towards the automatic anime characters creation with generative adversarial networks. arXiv preprint arXiv:1708.05509. Improved version of DCGAN.

- Karras, T., Laine, S., & Aila, T. (2019). A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4401-4410). StyleGAN.

- Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434. Additional paper that creates bedrooms with GAN.

- Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B., & Hochreiter, S. (2017). GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In Advances in neural information processing systems (pp. 6626-6637). Fréchet Inception Distance, which is used for evaluating GANs.