In this lab, we'll continue to formalize our work with gradient descent and once again practice coding some implementations, starting with a review of linear regression. In the upcoming labs, you'll apply similar procedures to implement logistic regression on your own.

You will be able to:

- Create a full gradient descent algorithm

In order to practice gradient descent, lets begin by investigating a simple regression case in which we are looking to minimize the Residual Sum of Squares (RSS) between our predictions and the actual values. Remember that this is referred to Ordinary Least Squares (OLS) regression. Below, is a mock dataset that we will work with. Preview the dataset. Then, we will compare to simplistic models. Finally, we will use gradient descent to improve upon these initial models.

Good luck!

#The dataset

import pandas as pd

df = pd.read_excel('movie_data.xlsx')

df.head().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| budget | domgross | title | |

|---|---|---|---|

| 0 | 13000000 | 25682380 | 21 & Over |

| 1 | 45658735 | 13414714 | Dredd 3D |

| 2 | 20000000 | 53107035 | 12 Years a Slave |

| 3 | 61000000 | 75612460 | 2 Guns |

| 4 | 40000000 | 95020213 | 42 |

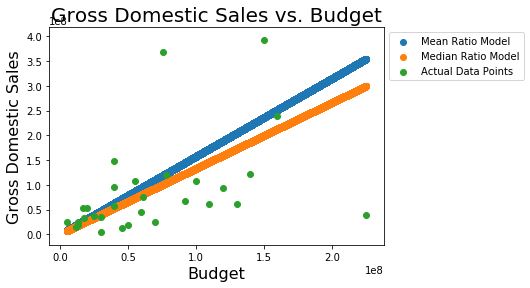

Let's imagine someone is attempting to predict the domestic gross sales of a movie based on the movie's budget, or at least further investigate how these two quantities are related. Two models are suggested, and need to be compared.

The two models are:

Here's a graph of the two models along with the actual data:

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

x = np.linspace(start=df.budget.min(), stop=df.budget.max(), num=10**5)

plt.scatter(x, 1.575*x, label='Mean Ratio Model') #Model 1

plt.scatter(x, 1.331*x, label='Median Ratio Model') #Model 2

plt.scatter(df.budget, df.domgross, label='Actual Data Points')

plt.title('Gross Domestic Sales vs. Budget', fontsize=20)

plt.xlabel('Budget', fontsize=16)

plt.ylabel('Gross Domestic Sales', fontsize=16)

plt.legend(bbox_to_anchor=(1,1))<matplotlib.legend.Legend at 0x112b01198>

In compare the two models (and future ones), we need to define a metric for evaluating and comparing models to each other. Traditionally this is the residual sum of squares. As such we are looking to minimize $ \sum(\hat{y}-y)^2$.

Write a function rss(m) which calculates the residual sum of squares for a simplistic model

def rss(m, X=df.budget, y=df.domgross):

#Your code hereWhich of the two models is better?

#Your code here#Your response hereNow that we have a loss function, we can use numerical methods to find a minimum to the loss function. By minimizing our loss, we have achieved an optimal solution according to our problem formulation. Here's our outline of gradient descent from the previous lesson:

- Define initial parameters:

- pick a starting point

- pick a step size

$\alpha$ (alpha) - choose a maximum number of iterations; the algorithm will terminate after this many iterations if a minimum has yet to be found

- (optionally) define a precision parameter; similar to the maximum number of iterations, this will terminate the algorithm early. For example, one might define a precision parameter of 0.00001, in which case if the change in the loss function were less then 0.00001, the algorithm would terminate. The idea is that we are very close to the bottom and further iterations would make a negligable difference.

- Calculate the gradient at the current point (initially, the starting point)

- Take a step (of size alpha) in the direction of the gradient

- Repeat steps 2 and 3 until the maximum number of iterations is met, or the difference between two points is less then your precision parameter

To start, lets simply visualize our cost function. Plot the cost function output for a range of m values from -3 to 5.

#Your code hereAs you can see, this is a simple cost function. The minimum is clearly around 1. With that, let's try and implement gradient descent in order to find our optimal value for m.

cur_x = #Set a starting point

alpha = #Initialize a step size

precision = 0.0000001 #Initialize a precision

previous_step_size = 1 #Helpful initialization

max_iters = 10000 # maximum number of iterations

iters = 0 #iteration counter

#Create a loop to iterate through the algorithm until either the max_iteration or precision conditions is met

#Your code here; create a loop as described above

#Calculate the gradient. This is often done by hand to reduce computational complexity.

#For here, generate points surrounding your current state, then calculate the rss of these points

#Finally, use the np.gradient() method on this survey region. This code is provided here to ease this portion of the algorithm implementation

x_survey_region = np.linspace(start = cur_x - previous_step_size , stop = cur_x + previous_step_size , num = 101)

rss_survey_region = [np.sqrt(rss(m)) for m in x_survey_region]

gradient = np.gradient(rss_survey_region)[50]

#Update the current x, by taking a "alpha sized" step in the direction of the gradient

#Update the iteration number

#The output for the above will be: ('The local minimum occurs at', 1.1124498053361267)Replot the RSS cost curve as above. Add a red dot for the minimum of this graph using the solution from your gradient descent function above.

#Your code hereIn this lab you coded up a gradient descent algorithm from scratch! In the next lab, you'll apply this to logistic regression in order to create a full implementation yourself!