2023-06 (v0.1): This repository is the official PyTorch implementation of our paper.

2023-02: OVAD has been accepted by CVPR 2023!

2022-11: Dataset visualizer page release.

2022-11: Project page release.

2022-11: arXiv paper release.

- News

- Table of Contents

- Installation

- Prepare datasets

- Open Vocabulary Detection Benchmark

- Acknowledgements

- License

- Citation

- Linux or macOS with Python ≥ 3.6

- PyTorch ≥ 1.8, installed following the instructions at pytorch.org. Check that the PyTorch version matches both the one required by Detectron2 and your CUDA version.

- Detectron2: follow Detectron2 installation instructions.

Originally the code was tested on python=3.8.13, torch=1.10.0, cuda=11.2 and OS Ubuntu 20.04.
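The requirements above can be checked before running anything. The following is a minimal sketch, not part of the repository: the helper names are hypothetical, the package names `torch` and `detectron2` are assumed to be the installed distribution names, and `importlib.metadata` needs Python 3.8 or newer (which matches the conda environment used here).

```python
import re
import sys
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def version_tuple(version):
    """Turn a string such as '1.10.0+cu113' into (1, 10, 0)."""
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version, minimum):
    """True if the version string is at least the given minimum tuple."""
    return version_tuple(version) >= minimum


if __name__ == "__main__":
    python_ok = sys.version_info >= (3, 6)
    print("python", sys.version.split()[0], "ok" if python_ok else "too old")

    torch_version = installed_version("torch")
    if torch_version is None:
        print("torch not installed")
    else:
        status = "ok" if meets_minimum(torch_version, (1, 8)) else "too old"
        print("torch", torch_version, status)

    # Detectron2 has no minimum stated here, so only report its presence.
    print("detectron2", installed_version("detectron2") or "not installed")
```

The script only reports versions; it does not verify that the PyTorch build matches your CUDA installation.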

    conda create --name ovad_env python=3.8 -y
    conda activate ovad_env

    # install PyTorch and Detectron2 from previous section

    # under your working directory
    git clone https://github.com/OVAD-Benchmark/ovad-benchmark-code.git
    cd ovad-benchmark-code
    pip install -r requirements.txt

The basic functions of our code use COCO images and our annotations of the OVAD benchmark.

Download the dataset from the official website and use a symlink to place it under $datasets/.

    datasets/
        coco/
            train2017/
            val2017/
            annotations/
                captions_train2017.json
                instances_train2017.json
                instances_val2017.json
        ovad/
            ovad2000.json
            ovad_evaluator.py
            ovad.py
            README.md
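A quick way to confirm the layout is to list which expected entries are missing. This is a minimal sketch under the assumption that `coco/` and `ovad/` sit directly under `datasets/`; the function name and the path list are illustrative and not part of the repository.

```python
from pathlib import Path

# Entries taken from the layout above (nesting is an assumption).
EXPECTED_PATHS = [
    "coco/train2017",
    "coco/val2017",
    "coco/annotations/captions_train2017.json",
    "coco/annotations/instances_train2017.json",
    "coco/annotations/instances_val2017.json",
    "ovad/ovad2000.json",
    "ovad/ovad_evaluator.py",
    "ovad/ovad.py",
]


def missing_dataset_paths(root="datasets"):
    """Return the expected entries that do not exist under root.

    Path.exists() follows symlinks, so symlinked folders count as present.
    """
    root = Path(root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_dataset_paths()
    if missing:
        print("Missing entries:")
        for rel in missing:
            print("  ", rel)
    else:
        print("Dataset layout looks complete.")
```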

    python tools/make_ovd_json.py --base_novel base --json_path datasets/coco/annotations/instances_train2017.json
    python tools/make_ovd_json.py --base_novel base_novel17 --json_path datasets/coco/annotations/instances_val2017.json

This generates two new annotation JSON files:

- $datasets/coco/annotations/instances_train2017_base.json: training file with only base class (48) annotations
- $datasets/coco/annotations/instances_val2017_base_novel17.json: validation file with base (48) and novel17 (17) class annotations
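To illustrate what restricting a COCO-style annotation file to a class subset involves, here is a minimal sketch. It does not reproduce `tools/make_ovd_json.py`: the function name is hypothetical, the category names in the usage example are toy values, and keeping images that end up with no annotations is an assumption.

```python
def filter_coco_by_categories(coco, keep_names):
    """Return a copy of a COCO-style dict restricted to the named categories.

    Only the "categories" and "annotations" lists are filtered; all other
    keys (for example "images") are passed through unchanged.
    """
    keep_names = set(keep_names)
    categories = [c for c in coco["categories"] if c["name"] in keep_names]
    keep_ids = {c["id"] for c in categories}
    annotations = [a for a in coco["annotations"] if a["category_id"] in keep_ids]
    return {**coco, "categories": categories, "annotations": annotations}


if __name__ == "__main__":
    toy = {
        "images": [{"id": 1}],
        "categories": [{"id": 1, "name": "person"}, {"id": 2, "name": "dog"}],
        "annotations": [
            {"id": 10, "image_id": 1, "category_id": 1},
            {"id": 11, "image_id": 1, "category_id": 2},
        ],
    }
    base_only = filter_coco_by_categories(toy, ["person"])
    print(len(base_only["annotations"]), "annotation(s) kept")
```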

    python tools/extract_obj_boxes.py

This should generate a new folder under $datasets/.

    datasets/
        ovad_box_instances/
            2000_img/
                bb_images/
                bb_labels/
                ovad_4fold_ids.pkl
                ovad_labels.pkl

Compute and save the noun and attribute text features for the OVAD benchmark. Alternatively, download them from the provided link and place them under $datasets/text_representations.

    python tools/dump_attribute_features.py --out_dir datasets/text_representations \
        --save_obj_categories --save_att_categories --fix_space \
        --prompt none \
        --avg_synonyms --not_use_object --prompt_att none

This should generate a new folder under $datasets/.

    datasets/
        text_representations/
            ovad_att_clip-ViT-B32_+catt.npy
            ovad_att_clip-ViT-B32_+catt.pkl
            ovad_obj_cls_clipViT-B32_none+cname.npy
            ovad_obj_cls_clipViT-B32_none+cname.pkl
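As a rough illustration of how text features of this kind are commonly used in CLIP-style zero-shot scoring, the sketch below compares one image-region feature against a set of attribute text features by cosine similarity. It is an assumption about the general technique, not a description of the repository's scripts: the function names and toy vectors are hypothetical, and the contents of the saved `.npy` and `.pkl` files are not modeled here.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def attribute_scores(box_feature, text_features):
    """Cosine similarity between one box feature and each attribute feature.

    text_features maps an attribute name to its text embedding.
    """
    box = l2_normalize(box_feature)
    scores = {}
    for name, feature in text_features.items():
        text = l2_normalize(feature)
        scores[name] = sum(b * t for b, t in zip(box, text))
    return scores


if __name__ == "__main__":
    # Toy 2-D embeddings, purely for illustration.
    scores = attribute_scores([1.0, 0.0], {"red": [2.0, 0.0], "blue": [0.0, 3.0]})
    for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
        print(name, round(score, 3))
```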

Evaluate Vision Language Models using the Box object setup

Clone the respective repositories under $ovamc/: CLIP, Open CLIP, ALBEF, BLIP, BLIP2, and X-VLM.

Run the evaluation commands. The selected flags correspond to the best architecture and prompt configuration for each model.

    # CLIP
    python ovamc/ova_clip.py --model_arch "ViT-B/16" -bs 50 --prompt all

    # Open CLIP
    python ovamc/ova_open_clip.py --model_arch "ViT-B-32" -bs 50 --pretrained laion2b_e16 --prompt a

    # ALBEF
    python ovamc/ova_albef.py --model_arch "cocoGrounding" -bs 50 --prompt the --average_syn

    # BLIP
    python ovamc/ova_blip.py --model_arch "baseRetrieval" -bs 50 --prompt the

    # BLIP 2
    python ovamc/ova_blip2.py --model_arch "base_coco" -bs 50 --prompt the

    # X-VLM
    python ovamc/ova_xvlm.py --model_arch "pretrained16M2" -bs 50 --prompt all --average_syn

Results

| Model | ALL mAP | HEAD | MEDIUM | TAIL |
|---|---|---|---|---|
| CLIP | 16.55 | 43.87 | 18.56 | 4.39 |
| Open CLIP | 16.97 | 44.30 | 18.45 | 5.46 |
| ALBEF | 21.04 | 44.18 | 23.83 | 9.39 |
| BLIP | 24.29 | 50.99 | 28.46 | 9.69 |
| BLIP 2 | 25.5 | 49.75 | 30.45 | 10.84 |
| X-VLM | 27.99 | 49.7 | 34.1 | 12.8 |
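For readers unfamiliar with the metric, the sketch below shows one common way to compute average precision for a single attribute from ranked scores, and to average it over attributes to obtain mAP. It is a non-interpolated variant written for illustration; the official evaluator (`ovad_evaluator.py`) may differ in details such as interpolation or the handling of unlabeled instances, and the function names here are hypothetical.

```python
def average_precision(scores, labels):
    """Non-interpolated AP for one attribute.

    scores: predicted confidences; labels: 1 for positive, 0 for negative.
    Returns None when there are no positives.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    num_positives = sum(1 for _, label in ranked if label == 1)
    if num_positives == 0:
        return None
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this positive
    return precision_sum / num_positives


def mean_average_precision(per_attribute):
    """Mean of per-attribute APs; per_attribute maps name -> (scores, labels)."""
    aps = [
        ap
        for ap in (average_precision(s, l) for s, l in per_attribute.values())
        if ap is not None
    ]
    return sum(aps) / len(aps) if aps else None


if __name__ == "__main__":
    toy = {
        "red": ([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]),
        "wooden": ([0.2, 0.9, 0.4], [0, 1, 0]),
    }
    print(mean_average_precision(toy))
```

Under this scheme, a score for a subset such as head, medium, or tail would be the same mean taken over only the attributes in that subset.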

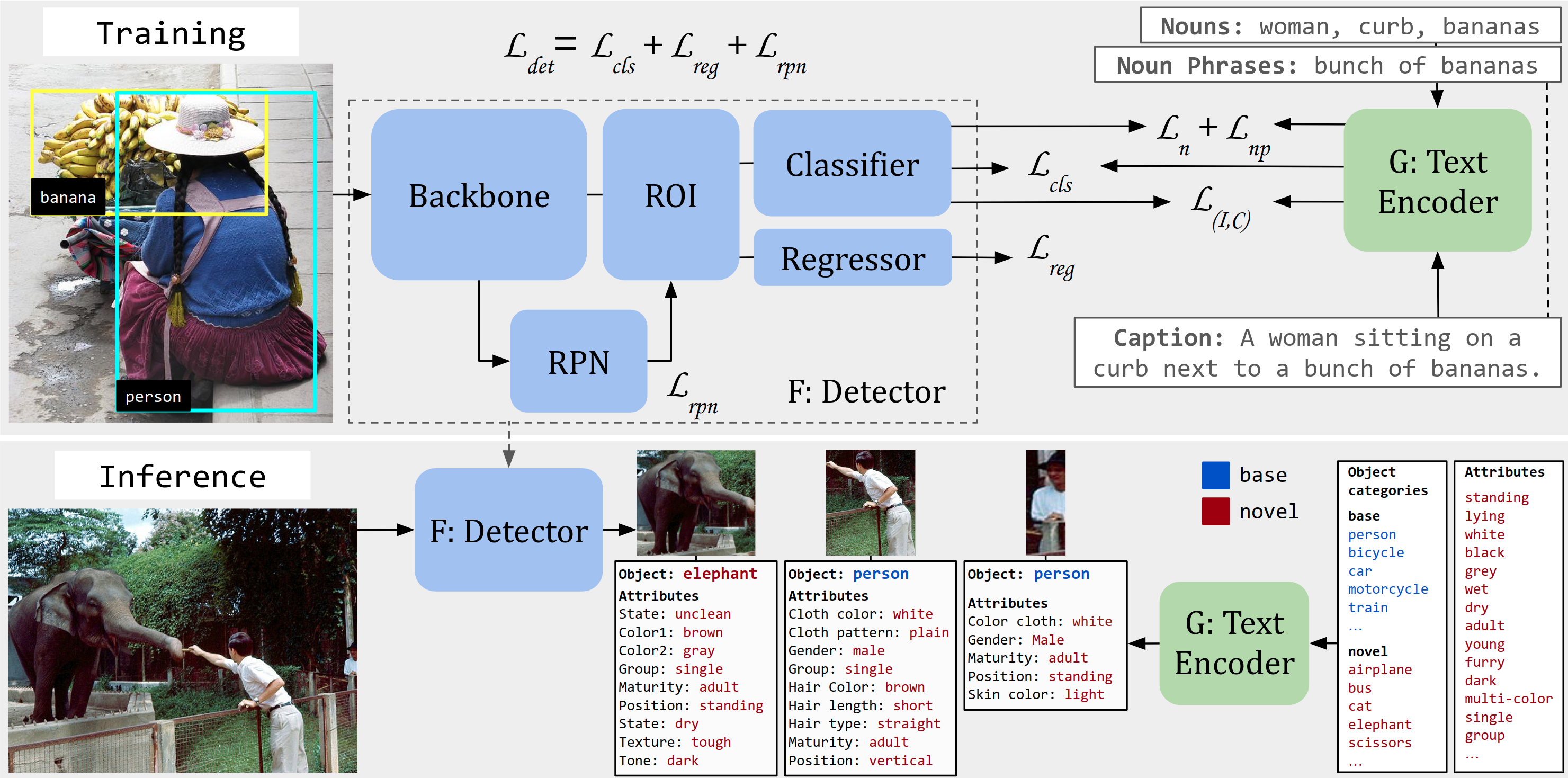

Model Outline

TODO: To be added soon

Download the pretrained OVAD Baseline model and place it under $models/

    models/
        OVAD_baseline.pth

    # OVAD Baseline
    python train_att_net.py --num-gpus 1 --resume \
        --eval-only --config-file configs/Base_OVAD_C4_1x.yaml \
        MODEL.WEIGHTS models/OVAD_baseline.pth

    # OVAD Baseline Box Oracle
    python train_att_net.py --num-gpus 1 --resume \
        --eval-only --config-file configs/BoxAnn_OVAD_C4_1x.yaml \
        MODEL.WEIGHTS models/OVAD_baseline.pth

Attribute results (mAP) for the given model weights

| Model / Att Type | Chance | OVAD Baseline |
|---|---|---|
| all | 8.610 | 20.134 |
| head | 36.005 | 46.577 |
| medium | 7.329 | 24.580 |
| tail | 0.614 | 5.298 |

Object results AP50 Generalized (OVD-80) - 2,000 images

| Model | Novel (32) | Base (48) | All (80) |
|---|---|---|---|
| OVAD Baseline | 24.557 | 50.396 | 40.061 |

Object results AP50 Generalized (OVD) - 4,836 images

| Model | Novel (17) | Base (48) | All (65) |
|---|---|---|---|
| OVAD Baseline | 29.757 | 49.355 | 44.229 |
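The "All" columns in the two object tables appear consistent with a mean over classes, that is, the Novel and Base scores weighted by their class counts. The short check below reproduces this arithmetic from the reported numbers; it is an observation about the tables, not a description of how the evaluator computes them, and the function name is hypothetical.

```python
def class_weighted_mean(groups):
    """Combine group means into an overall per-class mean.

    groups: list of (mean_ap, num_classes) pairs.
    """
    total_classes = sum(count for _, count in groups)
    return sum(ap * count for ap, count in groups) / total_classes


# Numbers copied from the OVD-80 and OVD tables above.
ovd80_all = class_weighted_mean([(24.557, 32), (50.396, 48)])
ovd_all = class_weighted_mean([(29.757, 17), (49.355, 48)])

print(f"OVD-80 All: {ovd80_all:.3f}")
print(f"OVD All:    {ovd_all:.3f}")
```

Both values agree with the reported 40.061 and 44.229 to within the rounding of the inputs.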

For the full references see our paper. We especially thank the creators of the following github repositories for providing helpful code:

- Zhou et al. for their open vocabulary model and code. Our code is largely based on their repository: Detic

- Zareian et al. for their open-vocabulary setup and code: OVR-CNN

- Radford et al. for their CLIP model and code.

If you use the OVAD dataset or code in your research or applications, please cite it using this BibTeX entry:

    @InProceedings{Bravo_2023_CVPR,
        author    = {Bravo, Mar{\'\i}a A. and Mittal, Sudhanshu and Ging, Simon and Brox, Thomas},
        title     = {Open-Vocabulary Attribute Detection},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2023},
        pages     = {7041-7050}
    }