This repository contains the code for ModelDiff, a framework for feature-based comparisons of ML models trained with two different learning algorithms:

ModelDiff: A Framework for Comparing Learning Algorithms

Harshay Shah*, Sung Min Park*, Andrew Ilyas*, Aleksander Madry

Paper: https://arxiv.org/abs/2211.12491

Blog post: http://gradientscience.org/modeldiff/

@inproceedings{shah2022modeldiff,

title={ModelDiff: A Framework for Comparing Learning Algorithms},

author = {Harshay Shah and Sung Min Park and Andrew Ilyas and Aleksander Madry},

booktitle = {ArXiv preprint arXiv:2211.12491},

year = {2022}

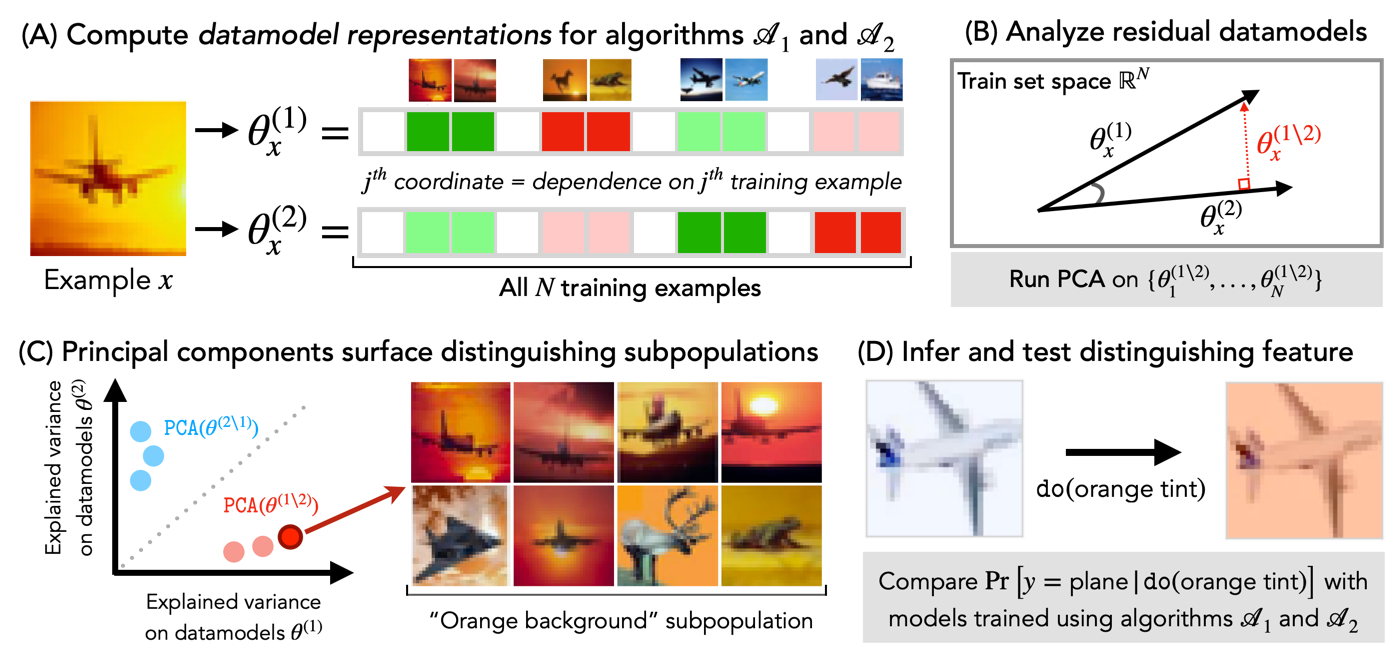

}The figure above summarizes our algorithm comparisons framework, ModelDiff.

- First, our method computes datamodel representations for each algorithm (part A) and then computes residual datamodels (part B) to identify directions (in training set space) that are specific to each algorithm.

- Then, we run PCA on the residual datamodels (part C) to find a set of distinguishing training directions, i.e., weighted combinations of training examples that disparately impact the predictions of models trained with different algorithms. Each distinguishing direction surfaces a distinguishing subpopulation, from which we infer a testable distinguishing transformation (part D) that significantly impacts the predictions of models trained with one algorithm but not the other.
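The residual and PCA steps (parts B and C) can be sketched in a few lines of NumPy. This is a minimal illustration, not the repository's implementation: it assumes each algorithm's datamodels are stored as a dense array of shape `(num_test, num_train)` (one row per test example), it assumes one plausible residual definition (normalize each row, then project out the matching row of the other algorithm's datamodels), and all function names are hypothetical. See the paper for the exact formulation.

```python
import numpy as np


def normalize_rows(theta: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each row (one test example's datamodel) to unit L2 norm."""
    norms = np.linalg.norm(theta, axis=1, keepdims=True)
    return theta / np.maximum(norms, eps)


def residual_datamodels(theta_a: np.ndarray, theta_b: np.ndarray) -> np.ndarray:
    """Assumed residual definition: remove from each normalized A-datamodel
    its component along the matching normalized B-datamodel."""
    a = normalize_rows(theta_a)
    b = normalize_rows(theta_b)
    # Per-row inner products <a_i, b_i>, kept as a column for broadcasting.
    coef = np.sum(a * b, axis=1, keepdims=True)
    return a - coef * b


def distinguishing_directions(residuals: np.ndarray, k: int) -> np.ndarray:
    """Top-k principal components of the residual matrix (standard PCA with
    mean-centering, computed via SVD). Each row is a training-set direction."""
    centered = residuals - residuals.mean(axis=0, keepdims=True)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:k]


def top_training_examples(direction: np.ndarray, m: int) -> np.ndarray:
    """Indices of the m training examples with the largest weight along a
    direction, i.e., a candidate distinguishing subpopulation."""
    return np.argsort(direction)[::-1][:m]


# Usage on random stand-in datamodels (not real ModelDiff data).
rng = np.random.default_rng(0)
num_test, num_train = 200, 1000
theta_a = rng.normal(size=(num_test, num_train))
theta_b = rng.normal(size=(num_test, num_train))

res = residual_datamodels(theta_a, theta_b)
dirs = distinguishing_directions(res, k=5)
idx = top_training_examples(dirs[0], m=10)
print(dirs.shape)  # (5, 1000)
```

Because principal components are only defined up to sign, it is worth inspecting both the most positive and the most negative training examples along each direction.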

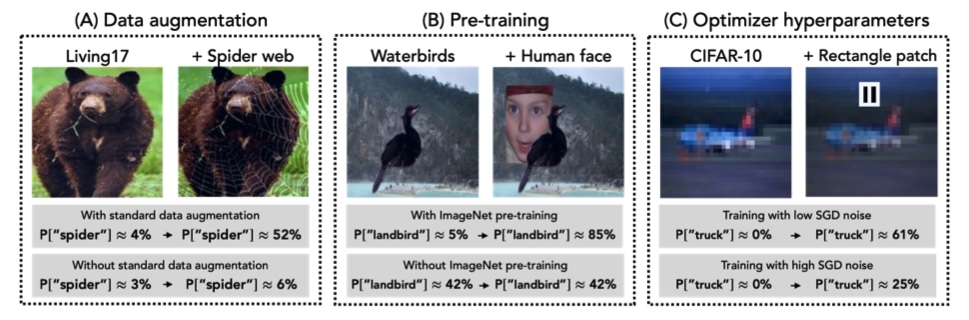

In our paper, we apply ModelDiff to three case studies that compare models trained with/without standard data augmentation, with/without ImageNet pre-training, and with different SGD hyperparameters. As shown below, in all three cases, our framework allows us to pinpoint concrete ways in which the two algorithms being compared differ:

To get started:

- Clone the repo: `git clone git@github.com:MadryLab/modeldiff.git`

- Our code relies on the FFCV library. To install it along with the other dependencies (including PyTorch), run the following commands:
  - `conda create -n ffcv python=3.9 cupy pkg-config compilers libjpeg-turbo opencv pytorch torchvision cudatoolkit=11.3 numba -c pytorch -c conda-forge`
  - `conda activate ffcv`
  - `cd <REPO-DIR>`
  - `pip install -r requirements.txt`

- Set up the datasets. We use CIFAR-10 (torchvision), Waterbirds (WILDS), and Living17 (BREEDS). Also, change the `DATA_DIR` path in `src/data/datasets.py` to the parent directory of your ImageNet data.

- Our framework uses datamodel representations to identify distinguishing features. Download the pre-computed datamodels for all three case studies from here and unzip them into `datamodels/`.

That's it! You can now run the notebooks in `analysis/` (one per case study), or look at the scripts in `counterfactuals/` that evaluate the average treatment effect of distinguishing feature transformations.
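To make "average treatment effect" concrete: for a transformation applied to test inputs, one simple estimate is the mean change in some model output (for example, correct-class probability) between transformed and original inputs, averaged over test examples and over models trained with a given algorithm. A transformation distinguishes two algorithms when their estimates differ substantially. The sketch below assumes that definition; `average_treatment_effect` is a hypothetical helper, not the interface of the scripts in `counterfactuals/`, which may use a different output (such as the margin) or estimator.

```python
import numpy as np


def average_treatment_effect(original: np.ndarray, transformed: np.ndarray) -> float:
    """Mean change in a model output caused by a transformation.

    Both arrays have shape (num_models, num_examples): entry [m, i] is the
    output of model m on example i, without / with the transformation.
    """
    original = np.asarray(original, dtype=float)
    transformed = np.asarray(transformed, dtype=float)
    if original.shape != transformed.shape:
        raise ValueError("original and transformed outputs must have the same shape")
    # Average the per-(model, example) effect over both axes.
    return float(np.mean(transformed - original))


# Hypothetical correct-class probabilities for two models per algorithm.
orig_a = np.array([[0.9, 0.8], [0.7, 0.6]])
trans_a = np.array([[0.5, 0.4], [0.3, 0.2]])
orig_b = np.array([[0.9, 0.8], [0.7, 0.6]])
trans_b = np.array([[0.85, 0.8], [0.7, 0.55]])

ate_a = average_treatment_effect(orig_a, trans_a)  # ≈ -0.4
ate_b = average_treatment_effect(orig_b, trans_b)  # ≈ -0.025
```

In this toy example the transformation strongly hurts algorithm A's models but barely affects algorithm B's, which is the kind of gap a distinguishing transformation is meant to expose.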