Instructor: Prof. Yen-Yu Lin

- You can download the notebooks and data, put them all in the same folder, and run them in your local environment or on Google Colab.

- You need to unzip the data first.

- You might need to install some packages if you don't already have them.

In this coding assignment, you need to implement linear regression using only NumPy, then train your implemented model with gradient descent on the provided dataset and test its performance on the testing data.

Find the questions here.
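As a rough illustration of the training loop, here is a minimal sketch of linear regression fitted by batch gradient descent on the mean squared error, using only NumPy. The function names, learning rate, epoch count, and the synthetic data in the usage lines are assumptions for illustration, not part of the provided notebook.

```python
import numpy as np


def fit_linear_regression(X, y, lr=0.1, epochs=1000):
    """Fit y ~ X @ w + b by batch gradient descent on the MSE.

    X: (n_samples, n_features) array, y: (n_samples,) array.
    lr and epochs are illustrative defaults (assumptions).
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    losses = []
    for _ in range(epochs):
        residual = X @ w + b - y                 # prediction error per sample
        losses.append(np.mean(residual ** 2))    # MSE before this update
        # Gradients of mean((Xw + b - y)^2) with respect to w and b
        grad_w = 2.0 / n * (X.T @ residual)
        grad_b = 2.0 * residual.mean()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


# Usage on synthetic data (not the course dataset): y = 3x + 0.5 + noise
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 1))
y = 3.0 * X[:, 0] + 0.5 + rng.normal(scale=0.1, size=200)
w, b, losses = fit_linear_regression(X, y)
print("weights:", w, "intercept:", b, "MSE:", mse(y, X @ w + b))
```

The `losses` list records the training MSE at every epoch, so plotting it against the epoch index gives a learning curve.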

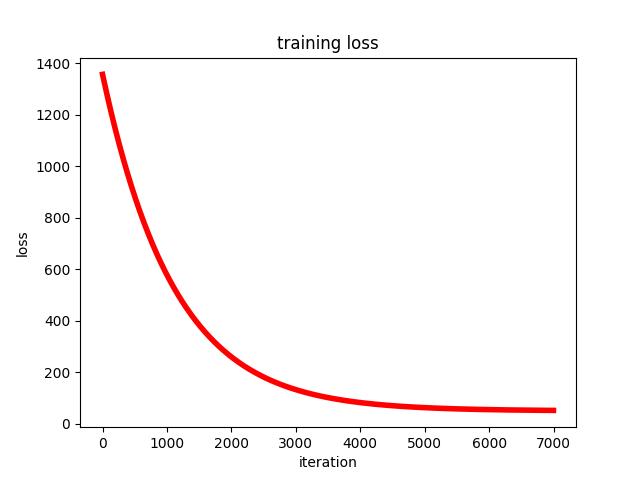

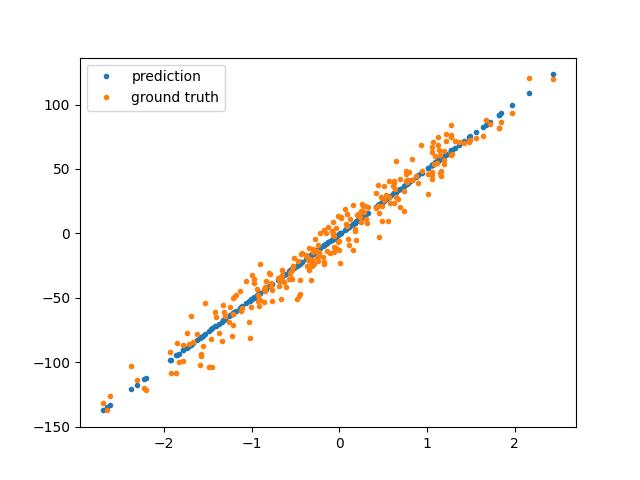

- LinearRegression: MSE is 54.2685, intercept is [-0.4061], and weights are [50.7277].

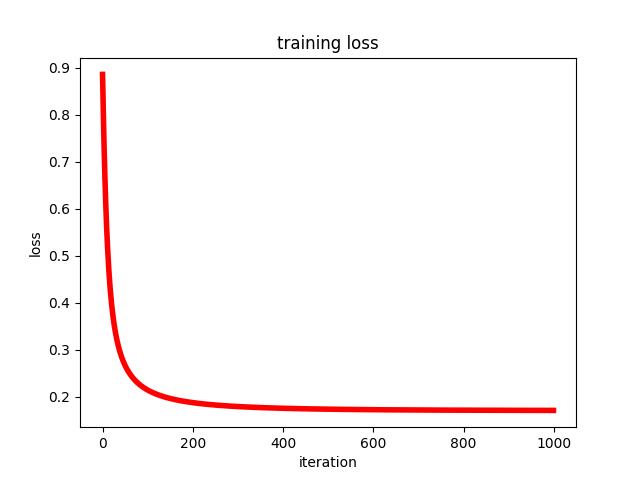

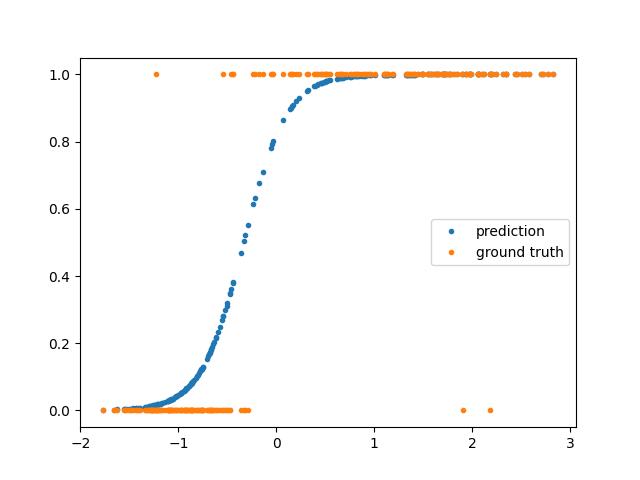

- LogisticRegression: cross-entropy error is 0.1854, intercept is [4.5803], and weights are [1.5370].
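The logistic regression result is reported as a cross-entropy error. A minimal NumPy sketch of binary logistic regression trained by gradient descent on the mean binary cross-entropy is shown below; it assumes labels in {0, 1}, and the function names, hyperparameters, and synthetic data are illustrative assumptions rather than the notebook's code.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cross_entropy(y, p, eps=1e-12):
    """Mean binary cross-entropy; p is clipped to avoid log(0)."""
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Gradient descent on mean binary cross-entropy (labels 0/1 assumed)."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    losses = []
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        losses.append(cross_entropy(y, p))
        # Gradient of the mean cross-entropy: X^T (p - y) / n and mean(p - y)
        grad_w = X.T @ (p - y) / n
        grad_b = np.mean(p - y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


# Usage on synthetic data (not the course dataset)
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 1))
y = (X[:, 0] + 0.5 + rng.normal(scale=0.3, size=300) > 0).astype(float)
w, b, losses = fit_logistic_regression(X, y)
accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == y)
print("weights:", w, "intercept:", b, "accuracy:", accuracy)
```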

| Method | Learning Curve | Result |
|---|---|---|
| Logistic Regression | | |
| Linear Regression | | |

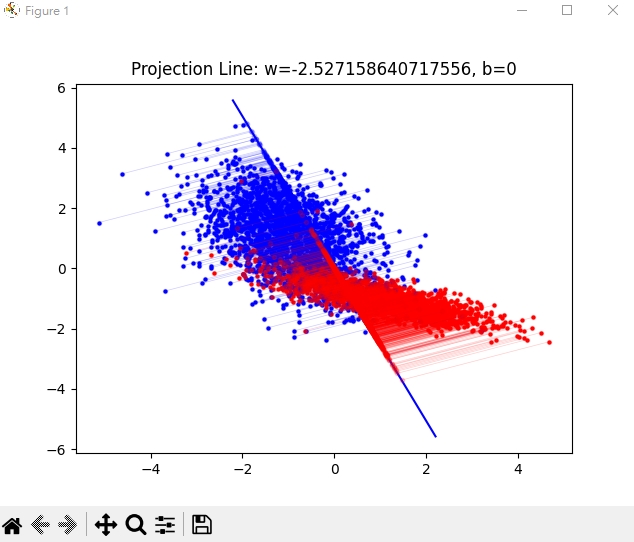

In HW2, you need to implement Fisher’s linear discriminant using only NumPy, then train your implemented model on the provided dataset and test its performance on the testing data.

Please note that only NumPy can be used to implement your model; you will get no points by simply calling sklearn.discriminant_analysis.LinearDiscriminantAnalysis.

Find the questions at https://docs.google.com/document/d/1T7JLWuDtzOgEQ_OPSgsSiQdp5pd-nS5bKTdU3RR48Z4/edit?usp=sharing
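For the two-class case, Fisher's linear discriminant projects the data onto w ∝ S_W⁻¹(m₁ − m₀), where m₀ and m₁ are the class means and S_W is the within-class scatter matrix. The sketch below shows this with NumPy only. It assumes labels 0 and 1 and classifies by thresholding at the midpoint of the projected class means; that decision rule, the function names, and the synthetic data are assumptions, and the assignment may specify a different rule (for example, nearest neighbors on the projected values).

```python
import numpy as np


def fit_fisher_lda(X, y):
    """Two-class Fisher's linear discriminant (labels 0/1 assumed).

    Returns a unit projection vector w proportional to S_W^{-1} (m1 - m0)
    and a threshold halfway between the projected class means.
    """
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: sum over classes of (x - m_k)(x - m_k)^T
    S_w = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    # Solve S_W w = (m1 - m0) rather than forming the inverse explicitly
    w = np.linalg.solve(S_w, m1 - m0)
    w /= np.linalg.norm(w)
    threshold = 0.5 * (m0 @ w + m1 @ w)   # assumed decision rule
    return w, threshold


def predict_fisher_lda(X, w, threshold):
    # Class 1 projects above class 0 because S_W^{-1} is positive definite
    return (X @ w > threshold).astype(int)


# Usage on two synthetic Gaussian blobs (not the course dataset)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(100, 2)),
               rng.normal(3.0, 1.0, size=(100, 2))])
y = np.array([0] * 100 + [1] * 100)
w, threshold = fit_fisher_lda(X, y)
accuracy = np.mean(predict_fisher_lda(X, w, threshold) == y)
print("projection:", w, "threshold:", threshold, "accuracy:", accuracy)
```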

- For HW3 to HW5, see the results in `report.pdf`.

In this coding assignment, you need to implement the Decision Tree, AdaBoost, and Random Forest algorithms using only NumPy, then train your implemented models on the provided dataset and test their performance on the testing data. Find the sample code and data on the GitHub page.
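The core step of growing a decision tree is choosing the split that most reduces impurity. The minimal sketch below computes Gini impurity and entropy and exhaustively searches axis-aligned thresholds. It assumes non-negative integer class labels, and the names `gini`, `entropy`, and `best_split` are hypothetical, not taken from the provided sample code.

```python
import numpy as np


def gini(y):
    """Gini impurity 1 - sum_k p_k^2 of a non-negative integer label array."""
    if y.size == 0:
        return 0.0
    p = np.bincount(y) / y.size
    return float(1.0 - np.sum(p ** 2))


def entropy(y):
    """Entropy -sum_k p_k log2 p_k; classes with zero count are skipped."""
    if y.size == 0:
        return 0.0
    p = np.bincount(y) / y.size
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def best_split(X, y, criterion=gini):
    """Return (feature, threshold, score) minimizing weighted child impurity."""
    n, d = X.shape
    best = (None, None, np.inf)
    for j in range(d):
        # Candidate thresholds: midpoints between consecutive distinct values
        values = np.unique(X[:, j])
        for t in (values[:-1] + values[1:]) / 2:
            left = X[:, j] <= t
            score = (left.sum() * criterion(y[left])
                     + (~left).sum() * criterion(y[~left])) / n
            if score < best[2]:
                best = (j, t, score)
    return best


# Usage: feature 1 separates the two classes perfectly
X = np.array([[0.0, 5.0], [1.0, 5.0], [0.0, 6.0], [1.0, 6.0]])
y = np.array([0, 0, 1, 1])
print(best_split(X, y))
```

A full tree would apply `best_split` recursively to the left and right subsets until it reaches a depth limit or a pure node; a random forest trains several such trees on bootstrap samples (typically with random feature subsets) and takes a majority vote.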

Please note that only NumPy can be used to implement your model; you will get no points by simply calling sklearn.tree.DecisionTreeClassifier.

Find the questions in this document.
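AdaBoost reweights the training samples so that each new weak learner concentrates on the previous learners' mistakes. Below is a minimal sketch of discrete AdaBoost using decision stumps as the weak learner, assuming labels in {-1, +1}. The stump learner, the function names, and the synthetic data are assumptions for illustration; the assignment may ask for a different weak learner, such as a depth-limited decision tree.

```python
import numpy as np


def stump_predict(X, j, t, polarity):
    """Predict +1 where polarity * (x_j - t) > 0, else -1."""
    return np.where(polarity * (X[:, j] - t) > 0, 1, -1)


def fit_stump(X, y, w):
    """Weighted decision stump: returns (feature, threshold, polarity, error)."""
    best = (0, 0.0, 1, np.inf)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = np.sum(w[stump_predict(X, j, t, polarity) != y])
                if err < best[3]:
                    best = (j, t, polarity, err)
    return best


def fit_adaboost(X, y, n_estimators=10):
    """Discrete AdaBoost; y must contain labels -1 and +1."""
    n = X.shape[0]
    w = np.full(n, 1.0 / n)                     # uniform initial sample weights
    ensemble = []
    for _ in range(n_estimators):
        j, t, polarity, err = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)    # avoid log(0) and division by 0
        alpha = 0.5 * np.log((1 - err) / err)   # vote weight of this stump
        pred = stump_predict(X, j, t, polarity)
        # Up-weight misclassified samples, down-weight correct ones, renormalize
        w *= np.exp(-alpha * y * pred)
        w /= w.sum()
        ensemble.append((alpha, j, t, polarity))
    return ensemble


def predict_adaboost(X, ensemble):
    score = sum(alpha * stump_predict(X, j, t, p) for alpha, j, t, p in ensemble)
    return np.where(score >= 0, 1, -1)


# Usage on synthetic data (not the course dataset)
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(100, 2))
y = np.where(X[:, 1] > 0.2, 1, -1)
ensemble = fit_adaboost(X, y, n_estimators=5)
accuracy = np.mean(predict_adaboost(X, ensemble) == y)
print("training accuracy:", accuracy)
```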

In this coding assignment, you need to implement cross-validation and grid search using only NumPy, then train the SVM model from scikit-learn on the provided dataset and test its performance on the testing data.

Please note that only NumPy can be used to implement cross-validation and grid search; you will get no points by simply calling sklearn.model_selection.GridSearchCV.

Find the questions in this document.
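K-fold cross-validation splits the training data into k folds and validates on each fold in turn; grid search repeats this for every hyperparameter combination and keeps the one with the best mean validation score. The sketch below uses only NumPy and the standard library. The names `k_fold_indices`, `grid_search`, and the `fit_and_score` callback are hypothetical, and the callback design is an assumption chosen to keep the search independent of the model.

```python
import itertools

import numpy as np


def k_fold_indices(n_samples, k=5, seed=0):
    """Return a list of (train_idx, val_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    folds = np.array_split(indices, k)        # fold sizes differ by at most one
    splits = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        splits.append((train_idx, val_idx))
    return splits


def grid_search(X, y, param_grid, fit_and_score, k=5, seed=0):
    """Try every parameter combination with k-fold CV.

    fit_and_score(X_tr, y_tr, X_va, y_va, **params) must return a score
    where higher is better. Returns (best_params, results), where results
    maps each tuple of values (in sorted parameter-name order) to its mean score.
    """
    splits = k_fold_indices(len(y), k, seed)
    names = sorted(param_grid)
    results = {}
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        scores = [fit_and_score(X[tr], y[tr], X[va], y[va], **params)
                  for tr, va in splits]
        results[values] = float(np.mean(scores))
    best_values = max(results, key=results.get)
    return dict(zip(names, best_values)), results
```

With scikit-learn's SVM, `fit_and_score` could be, for example, `lambda X_tr, y_tr, X_va, y_va, C, gamma: SVC(C=C, gamma=gamma).fit(X_tr, y_tr).score(X_va, y_va)` together with a grid such as `{"C": [0.1, 1, 10], "gamma": [0.01, 0.1, 1]}`; the grid values here are placeholders, not the assignment's.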

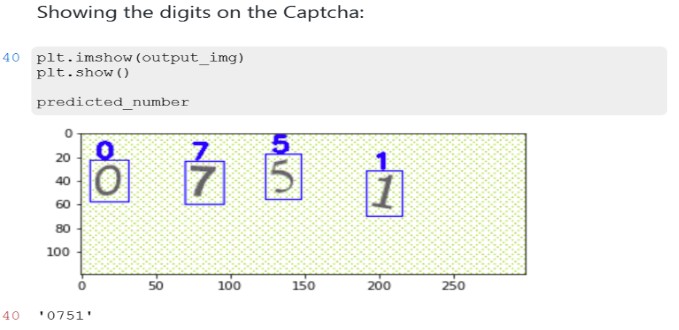

Implement a deep neural network with any deep learning framework (e.g., PyTorch, TensorFlow, or Keras), then train the DNN model on the provided dataset. Find the Kaggle page here.

- Train a model to predict all the digits in the image

- Download the Data Here


- For more information, read `README.md` in the folder `Final_Project`.