Study Friendly Implementation of Pix2Pix in Pytorch

More Information: Original Paper

An identical Tensorflow implementation will be uploaded to HyeongminLEE's Github.

- GAN: [Pytorch][Tensorflow]

- DCGAN: [Pytorch][Tensorflow]

- InfoGAN: [Pytorch][Tensorflow]

- Pix2Pix: [Pytorch][Tensorflow]

- DiscoGAN: [Pytorch][Tensorflow]

- Ubuntu 16.04

- Python 3.6 (Anaconda)

- Pytorch 0.2.0

- Torchvision 0.1.9

- PIL / random

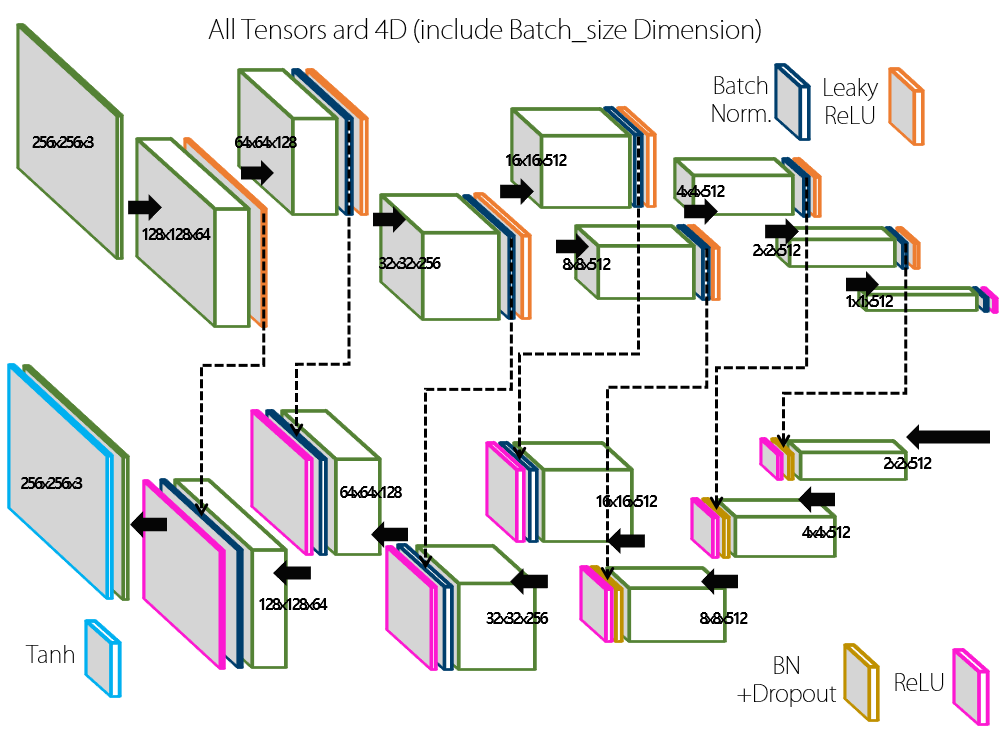

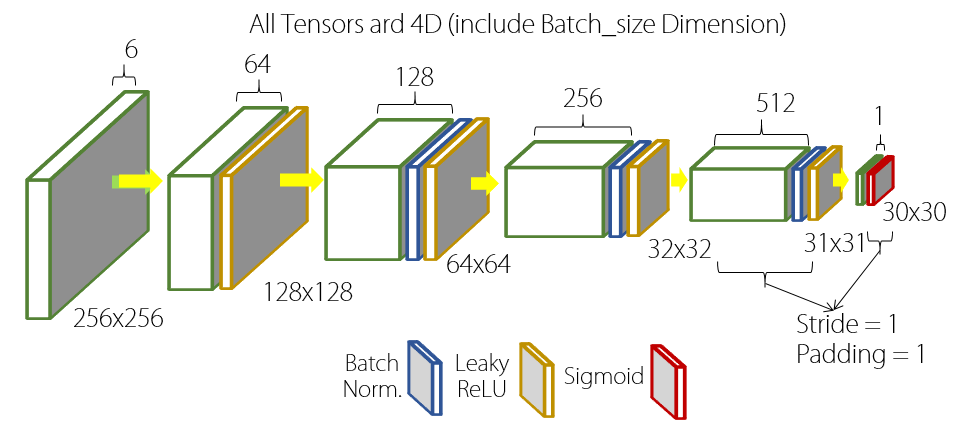

- `pix2pix.py`: Main Code

- `pix2pix_test.py`: Test Code after Training

- `network.py`: Generator and Discriminator

- `db/download.sh`: DB Downloader

- Image Size = 256x256 (Randomly Resized and Cropped)

- Batch Size = 1 or 4

- Learning Rate = 0.0002

- Adam_beta1 = 0.5

- Lambda_A = 100 (Weight of L1-Loss)
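The "Randomly Resized and Cropped" setting above can be sketched as follows. This is a minimal illustration, not necessarily the code in this repository: the 286-pixel intermediate size is borrowed from the original pix2pix paper and is an assumption here, and `sample_jitter` and its parameters are hypothetical names. Because the data is paired, the same crop box and flip decision should be applied to both the input and the target image.

```python
import random


def sample_jitter(crop_size=256, load_size=286, resize_or_crop=True,
                  flip=True, rng=random):
    """Sample one set of augmentation parameters for an image pair.

    load_size=286 is an assumption taken from the pix2pix paper,
    not a value confirmed by this repository.
    """
    if not resize_or_crop:
        # No jitter: the image is used at crop_size directly.
        load_size = crop_size
    max_offset = load_size - crop_size
    left = rng.randint(0, max_offset)
    top = rng.randint(0, max_offset)
    # Horizontal flip with probability 0.5, unless flipping is disabled.
    do_flip = flip and rng.random() < 0.5
    return {
        "load_size": load_size,
        # (left, upper, right, lower), the box layout PIL's crop() expects.
        "box": (left, top, left + crop_size, top + crop_size),
        "flip": do_flip,
    }


params = sample_jitter(rng=random.Random(0))
```

With PIL, the pair would first be resized to `params["load_size"]`, then both images cropped with `params["box"]` and mirrored if `params["flip"]` is true.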

Use Batch Normalization when Batch Size = 4

Use Instance Normalization when Batch Size = 1
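The hyperparameters and the normalization rule above can be collected in a small helper. This is only a sketch under stated assumptions: `HYPERPARAMS`, `choose_norm` and `generator_objective` are hypothetical names, and treating every batch size larger than 1 as the Batch Normalization case is an assumption (this README only lists batch sizes 1 and 4). The objective follows the usual pix2pix form, adversarial loss plus the weighted L1 loss.

```python
# Values copied from the hyperparameter list above.
HYPERPARAMS = {
    "image_size": 256,
    "learning_rate": 0.0002,
    "adam_beta1": 0.5,
    "lambda_a": 100.0,  # weight of the L1 loss
}


def choose_norm(batch_size):
    """Return the normalization type suggested for a batch size."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    # Assumption: anything above 1 is handled like the batch size 4 case.
    return "instance" if batch_size == 1 else "batch"


def generator_objective(adversarial_loss, l1_loss,
                        lambda_a=HYPERPARAMS["lambda_a"]):
    """Combine the generator's two loss terms: adversarial + lambda_a * L1."""
    return adversarial_loss + lambda_a * l1_loss


norm_for_single = choose_norm(1)
norm_for_four = choose_norm(4)
```

In PyTorch, the two cases would correspond to `torch.nn.InstanceNorm2d` and `torch.nn.BatchNorm2d` respectively.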

`./db/download.sh dataset_name`

`dataset_name` can be one of [cityscapes, maps, edges2handbags].

- cityscapes: 256x256, 2975 for Train, 500 for Val

- maps: 600x500, 1096 for Train, 1098 for Val

- edges2handbags: 256x256, 138567 for Train, 200 for Val
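The `--which_direction` option used by the training and test commands decides which half of each sample is the input. The sketch below assumes the downloaded files follow the original pix2pix layout, where domain A and domain B are stored side by side in a single image (A on the left, B on the right). That layout, and the helper name `paired_boxes`, are assumptions rather than something this README states.

```python
def paired_boxes(width, height, which_direction="AtoB"):
    """Return (input_box, target_box) for a side-by-side A|B image.

    Boxes are (left, upper, right, lower) tuples, the form accepted by
    PIL's Image.crop(). Assumes A is the left half and B the right half.
    """
    if which_direction not in ("AtoB", "BtoA"):
        raise ValueError("which_direction must be 'AtoB' or 'BtoA'")
    half = width // 2
    box_a = (0, 0, half, height)
    box_b = (half, 0, 2 * half, height)
    if which_direction == "AtoB":
        return box_a, box_b
    return box_b, box_a


# Example: a 512x256 file holding two 256x256 images.
input_box, target_box = paired_boxes(512, 256, "BtoA")
```

With PIL, `img.crop(input_box)` and `img.crop(target_box)` would then give the generator input and the ground-truth target.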

`python pix2pix.py --dataroot ./datasets/edges2handbags --which_direction AtoB --num_epochs 15 --batchSize 4 --no_resize_or_crop --no_flip`

`python pix2pix.py --dataroot ./datasets/maps --which_direction BtoA --num_epochs 100 --batchSize 1`

`python pix2pix.py --dataroot ./datasets/cityscapes --which_direction BtoA --num_epochs 100 --batchSize 1`

After training finishes, the saved models are in the `./models` directory.
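For reference, the flags that appear in the training commands could be parsed with `argparse` roughly as follows. The flag names come from the commands in this README, but the defaults, help texts and the `build_parser` function are assumptions for illustration and may differ from what `pix2pix.py` actually does.

```python
import argparse


def build_parser():
    """Build a parser for the flags shown in this README (defaults assumed)."""
    parser = argparse.ArgumentParser(description="Pix2Pix options (sketch)")
    parser.add_argument("--dataroot", required=True,
                        help="dataset directory, e.g. ./datasets/maps")
    parser.add_argument("--which_direction", choices=["AtoB", "BtoA"],
                        default="AtoB", help="translation direction")
    parser.add_argument("--num_epochs", type=int, default=100,
                        help="number of training epochs")
    parser.add_argument("--batchSize", type=int, default=1,
                        help="batch size (1 or 4 in this README)")
    parser.add_argument("--no_resize_or_crop", action="store_true",
                        help="disable random resize and crop")
    parser.add_argument("--no_flip", action="store_true",
                        help="disable random flipping")
    return parser


# Parse the maps command from this README instead of sys.argv.
command = ("--dataroot ./datasets/maps --which_direction BtoA "
           "--num_epochs 100 --batchSize 1")
args = build_parser().parse_args(command.split())
```

Both `--no_resize_or_crop` and `--no_flip` are plain switches here, so they are `False` unless given on the command line.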

- The `which_direction`, `no_resize_or_crop` and `no_flip` options must be the same as in training.

- `batchSize` is the number of test samples.

- `num_epochs` determines which saved model is used for testing.

`python pix2pix_test.py --dataroot ./datasets/edges2handbags --which_direction AtoB --num_epochs 15 --batchSize 4 --no_resize_or_crop --no_flip`

`python pix2pix_test.py --dataroot ./datasets/maps --which_direction BtoA --num_epochs 100 --batchSize 1`

`python pix2pix_test.py --dataroot ./datasets/cityscapes --which_direction BtoA --num_epochs 100 --batchSize 1`

Test results will be saved in `./test_result`.

Training for 200 epochs (the setting used in the paper) will give better results.
