💻 Welcome to the "Automated Testing for LLMOps" course! Taught by Rob Zuber, CTO at CircleCI, this course shows you how to build a continuous integration (CI) workflow that evaluates your Large Language Model (LLM) application on every change, enabling faster, safer, and more efficient application development.

Course website: 📚 deeplearning.ai

In this course, you will learn why systematic testing matters in LLM application development and how to implement a continuous integration workflow that catches issues early. Here's what you can expect to learn:

- 📋 Robust LLM Evaluations: Write evaluations that cover common problems such as hallucinations, data drift, and harmful or offensive output.

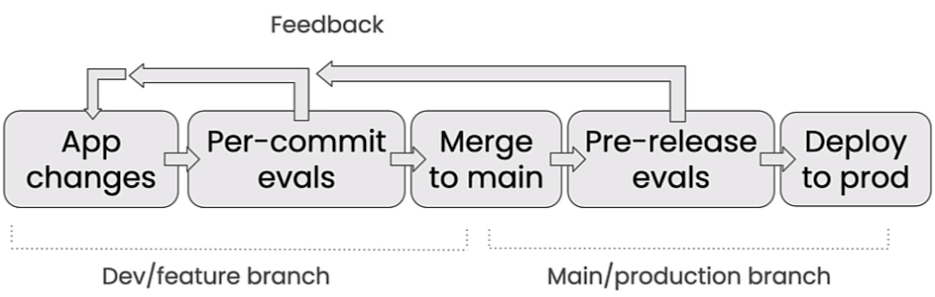

- ⚙️ Continuous Integration Workflow: Build a CI workflow to automatically evaluate every change to your LLM application.

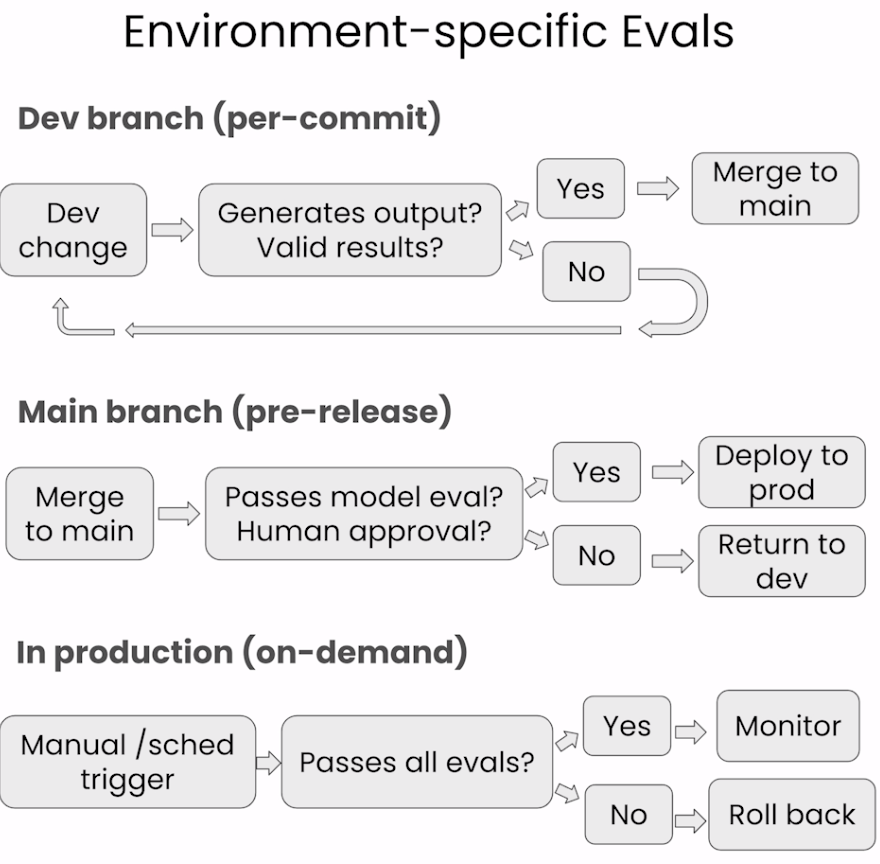

- 🔄 Orchestrating the CI Workflow: Configure your CI workflow to run specific evaluations at different stages of development.

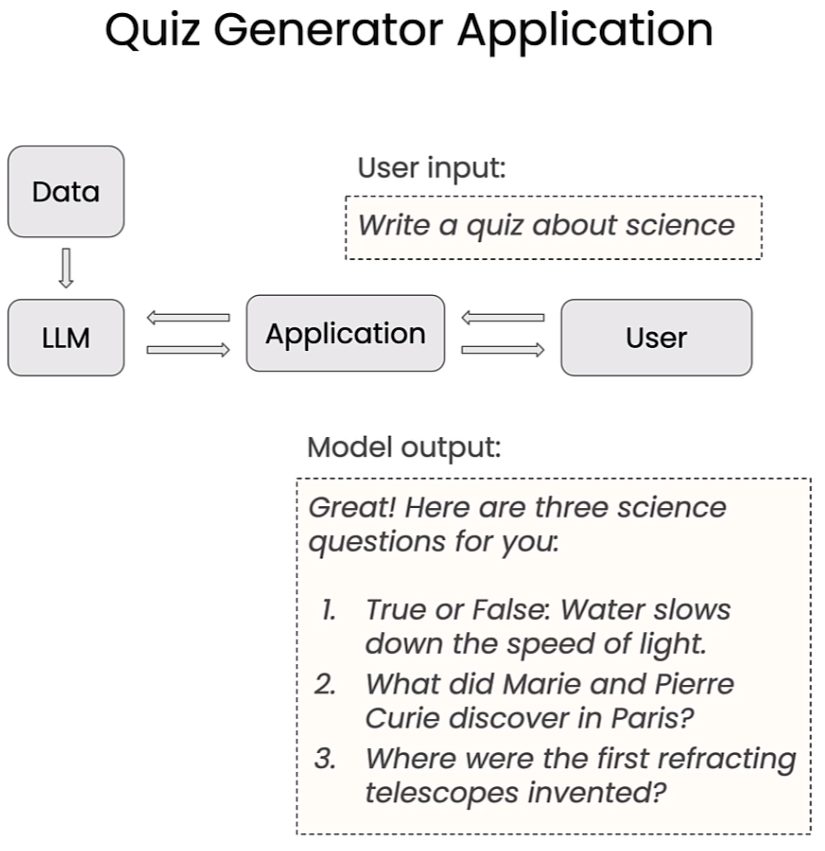

- 🧪 Learn how LLM-based testing differs from traditional software testing and implement rules-based testing to assess your LLM application.

- 📝 Build model-graded evaluations to test your LLM application using an evaluation LLM.

- 🔄 Automate your evaluations (rules-based and model-graded) using continuous integration tools from CircleCI.

🌟 Rob Zuber, CTO at CircleCI, draws on his experience in software development and continuous integration to guide you through automating testing for LLMOps.

🔗 To enroll or learn more, visit deeplearning.ai.