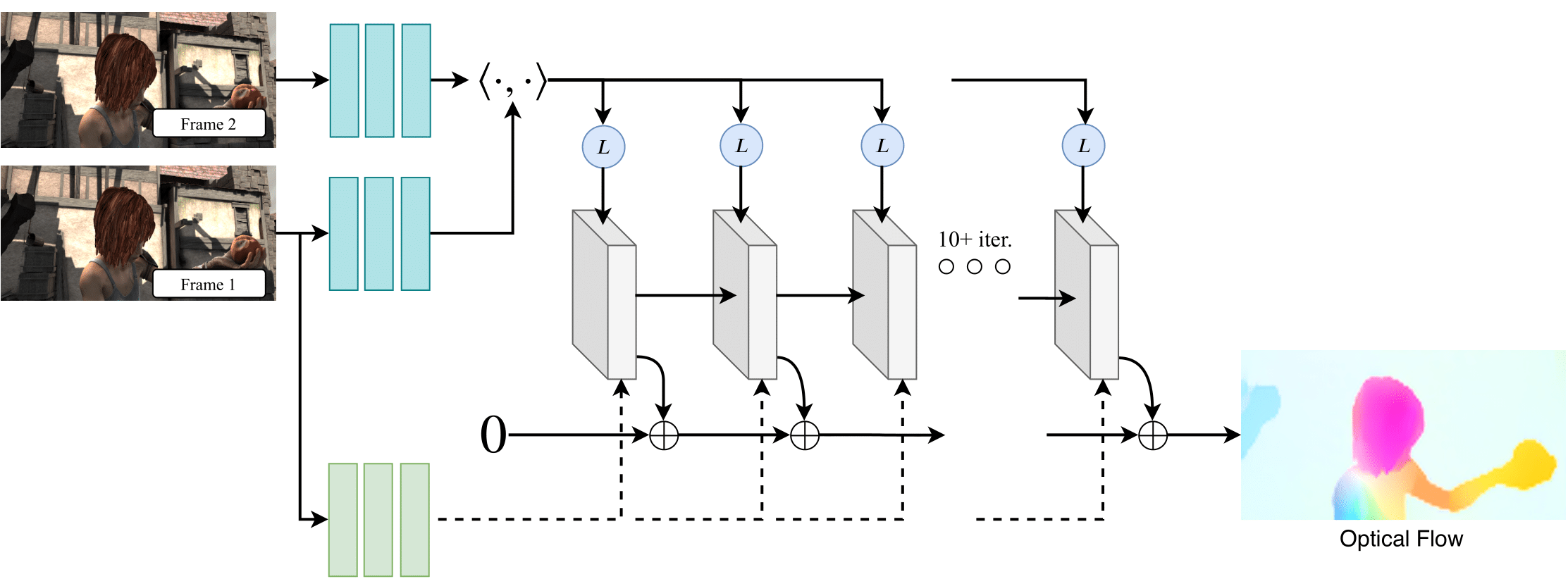

A custom fork of RAFT, the deep-learning framework for dense optical flow described in the following paper:

RAFT: Recurrent All-Pairs Field Transforms for Optical Flow

ECCV 2020

Zachary Teed and Jia Deng

The code has been tested with PyTorch 1.6 and CUDA 10.1.

conda create --name raft

conda activate raft

pip install -e .

Pretrained models can be downloaded by running

./download_models.sh

or downloaded from Google Drive.
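If you load a downloaded checkpoint in your own code rather than through the provided scripts, one thing to watch for (assuming this fork ships the original RAFT weight files) is that the original demo and evaluation scripts wrap the model in `torch.nn.DataParallel`, so the saved keys may start with `module.`. The helper below, `strip_module_prefix`, is a hypothetical sketch and not part of this package. It removes that prefix so the weights can be loaded into an unwrapped model.

```python
from collections import OrderedDict
from typing import Any, Mapping


def strip_module_prefix(state_dict: Mapping[str, Any], prefix: str = "module.") -> "OrderedDict[str, Any]":
    """Return a copy of `state_dict` with a leading `prefix` removed from each key.

    Keys without the prefix are kept unchanged, so the helper is also safe to
    call on checkpoints that were saved without DataParallel.
    """
    return OrderedDict(
        (key[len(prefix):] if key.startswith(prefix) else key, value)
        for key, value in state_dict.items()
    )


# Usage (hypothetical; assumes torch is installed and `model` is an unwrapped RAFT instance):
#   state = torch.load("models/raft-things.pth", map_location="cpu")
#   model.load_state_dict(strip_module_prefix(state))
```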

You can demo a trained model on a sequence of frames:

raft-demo --model=models/raft-things.pth --path=demo-frames

To evaluate or train RAFT, you will need to download the required datasets:

- FlyingChairs

- FlyingThings3D

- Sintel

- KITTI

- HD1K (optional)
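Linking the downloads into a local datasets folder can be scripted. The following is a minimal sketch and is not part of this repository. `link_datasets` and `missing_subfolders` are hypothetical helpers, the folder names are copied from the directory layout this README describes, and the download paths in the example are placeholders. Only the four datasets in that layout are handled. The optional HD1K dataset is left out.

```python
from pathlib import Path
from typing import Dict, List

# Folder layout expected under datasets/, copied from this README.
# HD1K is optional and not covered here.
EXPECTED: Dict[str, List[str]] = {
    "Sintel": ["test", "training"],
    "KITTI": ["testing", "training", "devkit"],
    "FlyingChairs_release": ["data"],
    "FlyingThings3D": ["frames_cleanpass", "frames_finalpass", "optical_flow"],
}


def link_datasets(sources: Dict[str, Path], root: Path = Path("datasets")) -> List[Path]:
    """Create root/<name> -> <download dir> symlinks and return the links created.

    `sources` maps an expected folder name (e.g. "Sintel") to the directory the
    dataset was downloaded to. Entries that already exist are left untouched.
    """
    root.mkdir(parents=True, exist_ok=True)
    created = []
    for name, target in sources.items():
        if name not in EXPECTED:
            raise ValueError(f"unexpected dataset folder name: {name}")
        link = root / name
        # is_symlink() also catches dangling links, which exists() reports as missing.
        if link.exists() or link.is_symlink():
            continue
        link.symlink_to(Path(target).resolve(), target_is_directory=True)
        created.append(link)
    return created


def missing_subfolders(root: Path = Path("datasets")) -> List[str]:
    """List expected subfolders (e.g. "KITTI/devkit") that are not present."""
    return [
        f"{name}/{sub}"
        for name, subs in EXPECTED.items()
        for sub in subs
        if not (root / name / sub).is_dir()
    ]


# Example (placeholder paths):
#   link_datasets({"Sintel": Path("/data/MPI-Sintel"), "KITTI": Path("/data/KITTI")})
#   print(missing_subfolders())
```

Checking the subfolders after linking catches a common mistake: linking to a parent directory one level too high or too low.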

By default, datasets.py searches for the datasets in the locations shown below. You can create symbolic links in the datasets folder that point to wherever the datasets were downloaded:

- datasets
  - Sintel
    - test
    - training
  - KITTI
    - testing
    - training
    - devkit
  - FlyingChairs_release
    - data
  - FlyingThings3D
    - frames_cleanpass
    - frames_finalpass
    - optical_flow

You can evaluate a trained model using evaluate.py:

raft-evaluate --model=models/raft-things.pth --dataset=sintel --mixed_precision

The paper used the following training schedule (2 GPUs). Training logs are written to the runs directory and can be visualized with TensorBoard:

train_scripts/train_standard.sh

If you have an RTX GPU, you can speed up training with mixed precision. Results in this setting (1 GPU) should be similar:

train_scripts/train_mixed.sh

You can optionally use the alternate, memory-efficient implementation by running demo.py and evaluate.py with the --alternate_corr flag. The CUDA kernel is compiled automatically just-in-time (JIT), which can take some time on the first run. Note that this implementation is somewhat slower than the all-pairs implementation, but it uses significantly less GPU memory during the forward pass.
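A back-of-envelope estimate helps explain the memory savings. This sketch assumes the setup from the RAFT paper: 256-dimensional features at 1/8 of the input resolution, and a 4-level correlation pyramid built by average-pooling the last two dimensions of the all-pairs volume. It also assumes float32 values and a batch size of 1. Both function names are hypothetical. The figures ignore activations, weights, and any pooled feature copies the alternate path may keep, so the feature-map number is only a lower bound for that path.

```python
import math


def corr_pyramid_bytes(height: int, width: int, num_levels: int = 4, bytes_per_value: int = 4) -> int:
    """Size of the all-pairs correlation pyramid for one image pair.

    The input is assumed to be padded up to a multiple of 8, giving an
    (H8*W8) x (H8*W8) volume. Level k pools the last two dimensions by 2**k,
    flooring like F.avg_pool2d's default.
    """
    h8, w8 = math.ceil(height / 8), math.ceil(width / 8)
    n = h8 * w8
    pooled = sum((h8 // 2**k) * (w8 // 2**k) for k in range(num_levels))
    return n * pooled * bytes_per_value


def feature_map_bytes(height: int, width: int, feature_dim: int = 256, bytes_per_value: int = 4) -> int:
    """Memory for the two 1/8-resolution feature maps that correlations are computed from."""
    h8, w8 = math.ceil(height / 8), math.ceil(width / 8)
    return 2 * h8 * w8 * feature_dim * bytes_per_value


if __name__ == "__main__":
    h, w = 436, 1024  # Sintel frame size
    mib = 2**20
    print(f"all-pairs pyramid: {corr_pyramid_bytes(h, w) / mib:.1f} MiB")
    print(f"feature maps:      {feature_map_bytes(h, w) / mib:.1f} MiB")
```

For a 1024×436 Sintel frame, this gives about 249 MiB for the all-pairs pyramid versus about 14 MiB for the two feature maps. The volume grows with the square of the pixel count, so the gap widens quickly at higher resolutions. This is the memory that on-the-fly correlation trades for extra compute.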

Changes in this fork:

- Added pyproject.toml so the code can be installed as a Python library and used as a third-party dependency in other projects

- Removed unnecessary submodule levels from the Python package structure, making it a little more human-friendly

- Replaced the setuptools script for compiling the custom CUDA kernel with just-in-time (JIT) compilation

- Reworked the RAFT model initialization parameters: the model can now be created without a Namespace object of implicit parameters

- Minor changes that do not affect model training or inference