This is the source code for the paper "C-LLM: Learn to Check Chinese Spelling Errors Character by Character" (https://arxiv.org/pdf/2406.16536).

[2024.9.20] Our paper was accepted to EMNLP 2024 (Main)!

- Python: 3.8

- Cuda: 12.0 (NVIDIA A100-SXM4-40GB)

- Packages: pip install -r requirements.txt

- Data for Continued Pre-training: Tiger-pretrain-zh (https://huggingface.co/datasets/TigerResearch/pretrain_zh)

- Data for Supervised Fine-tuning: see /dataset/train_date/

Test data (see /dataset/test_date/):

- General Dataset: CSCD-NS (test)

- Multi-Domain Dataset: LEMON (https://github.com/gingasan/lemon/tree/main/lemon_v2)

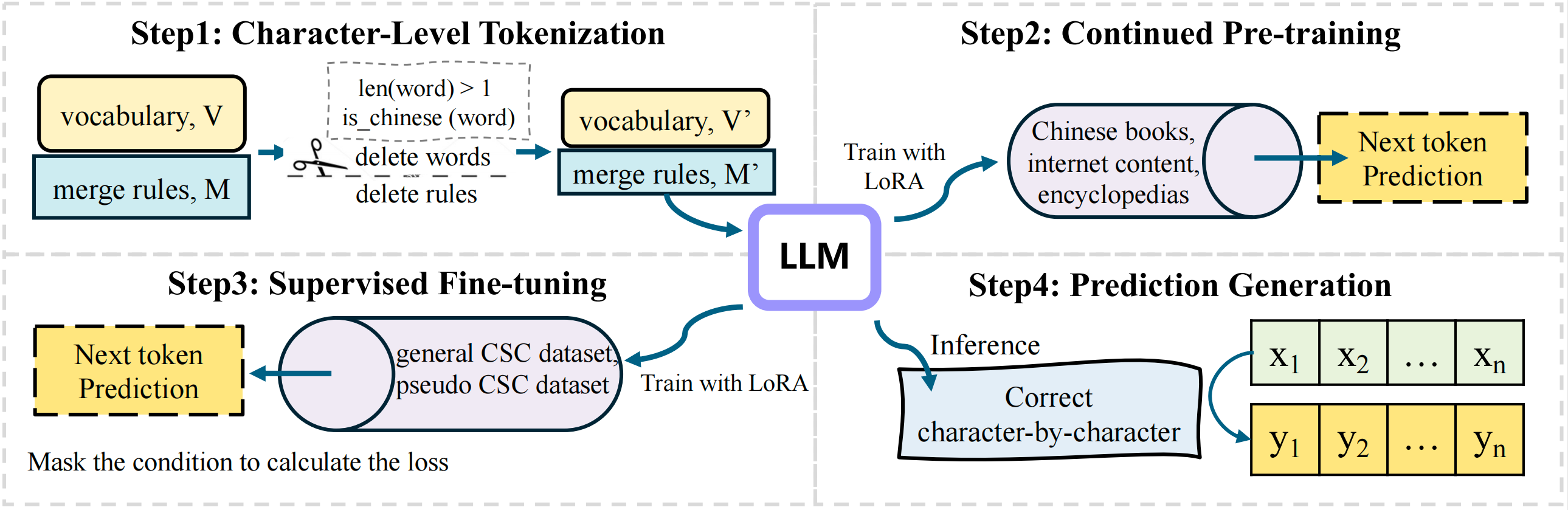

First, run tokenizer_prune_qwen.py to trim the vocabulary for BPE-based tokenization. Next, run pruner.py to update the model embeddings with the new vocabulary.

python tokenizer_prune_qwen.py

python pruner.py
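The second step can be pictured as selecting rows of the embedding table. The sketch below is only an illustration of that idea in plain Python, not the code in pruner.py: the function name `prune_embeddings`, the toy embedding table, and the choice to keep the surviving tokens in their original order are all assumptions made for the example.

```python
from typing import Dict, List, Sequence, Tuple


def prune_embeddings(
    embeddings: Sequence[Sequence[float]],
    kept_token_ids: Sequence[int],
) -> Tuple[List[List[float]], Dict[int, int]]:
    """Keep only the embedding rows of tokens that survive vocabulary pruning.

    Returns the smaller embedding table and a mapping old_id -> new_id.
    (Hypothetical helper for illustration; not taken from pruner.py.)
    """
    # Deduplicate and sort so that new ids preserve the original ordering.
    ordered_ids = sorted(set(kept_token_ids))
    old_to_new = {old_id: new_id for new_id, old_id in enumerate(ordered_ids)}
    # Copy each kept row so the pruned table does not alias the original.
    pruned = [list(embeddings[old_id]) for old_id in ordered_ids]
    return pruned, old_to_new


# Toy example: a 5-token vocabulary with 2-dimensional embeddings.
toy_embeddings = [[0.0, 0.1], [1.0, 1.1], [2.0, 2.1], [3.0, 3.1], [4.0, 4.1]]
pruned, old_to_new = prune_embeddings(toy_embeddings, kept_token_ids=[0, 2, 4])
print(pruned)
print(old_to_new)
```

In a real model the same row selection would have to be applied consistently to every weight matrix indexed by token id (typically the input embeddings and the output projection), and the tokenizer would have to use the same old-to-new id mapping.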

The training data comprises approximately 19B tokens, of which we covered only about 2B by training for 30,000 steps. The backbone model is Qwen1.5.
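For a sense of scale, the figures above imply the following rough per-step budget. This is back-of-the-envelope arithmetic on the approximate numbers quoted in this README, not a setting read from the training scripts.

```python
# Approximate figures quoted in this README.
total_tokens = 19e9      # size of the training data
trained_tokens = 2e9     # tokens actually covered
steps = 30_000           # training steps

tokens_per_step = trained_tokens / steps
fraction_seen = trained_tokens / total_tokens

print(f"{tokens_per_step:,.0f} tokens per step")
print(f"{fraction_seen:.1%} of the data covered")
```

So each step processes on the order of 67K tokens, and training covers roughly a tenth of the available data.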

After the above steps are completed, run train.sh for fine-tuning.

sh train.sh

After fine-tuning, run test.sh for inference. Before running it, update the parameter path in the script to match the location where you saved the parameters.

bash test.sh

We designed two methods for handling sentences of unequal length: one based on CheRRANT and the other on difflib. In the paper, we adopted the CheRRANT-based method. For evaluation, CheRRANT must first be downloaded to the specified directory.
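To give an idea of what a difflib-based approach can look like, here is a minimal sketch that forces a model output back to the length of its source sentence. It is not the script shipped in this repository: the function name `align_to_source_length` and the fallback policy (drop inserted characters, restore deleted ones, ignore replacements that change length) are assumptions chosen for illustration.

```python
import difflib


def align_to_source_length(source: str, prediction: str) -> str:
    """Return a version of `prediction` that has the same length as `source`.

    Same-length replacements are taken from the prediction; insertions,
    deletions, and length-changing replacements fall back to the source.
    (Hypothetical helper for illustration only.)
    """
    matcher = difflib.SequenceMatcher(None, source, prediction, autojunk=False)
    pieces = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            pieces.append(source[i1:i2])
        elif tag == "replace" and (i2 - i1) == (j2 - j1):
            # A character-for-character substitution: accept the correction.
            pieces.append(prediction[j1:j2])
        elif tag == "insert":
            # Extra characters in the prediction are dropped.
            continue
        else:
            # "delete" or a length-changing "replace": keep the source span.
            pieces.append(source[i1:i2])
    return "".join(pieces)


substituted = align_to_source_length("我喜欢吃平果", "我喜欢吃苹果")
inserted = align_to_source_length("今天天气很好", "今天的天气很好")
deleted = align_to_source_length("他们在公园里玩", "他们在公园玩")
print(substituted, inserted, deleted)
```

Because every branch contributes exactly as many characters as the source span it covers, the result always has the same length as the source, which is what a character-by-character comparison needs.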

Run evaluate_result.py for evaluation:

python evaluate_result.py

The script for calculating metrics is adapted from CSCD-NS.
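As a rough illustration of the kind of metric involved, the sketch below computes sentence-level correction precision, recall, and F1 under one common definition. It is an assumption-laden example, not the adapted CSCD-NS script: the function name `sentence_level_correction_prf` and the exact counting rules are illustrative, and the real script may differ.

```python
from typing import Iterable, Tuple


def sentence_level_correction_prf(
    triples: Iterable[Tuple[str, str, str]],
) -> Tuple[float, float, float]:
    """Compute sentence-level correction P/R/F1 from (source, target, prediction).

    Assumed definition: a sentence is a gold positive if source != target,
    a predicted positive if prediction != source, and a true positive if the
    model changed the sentence and the result equals the target exactly.
    """
    true_positive = predicted = gold = 0
    for source, target, prediction in triples:
        if source != target:
            gold += 1
        if prediction != source:
            predicted += 1
            if prediction == target:
                true_positive += 1
    precision = true_positive / predicted if predicted else 0.0
    recall = true_positive / gold if gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


examples = [
    ("我喜欢吃平果", "我喜欢吃苹果", "我喜欢吃苹果"),  # corrected properly
    ("今天天气很好", "今天天气很好", "今天天气很好"),  # correct, left untouched
    ("他在公圆散步", "他在公园散步", "他在公圆散步"),  # error missed
    ("她很高兴", "她很高兴", "他很高兴"),              # over-correction
]
precision, recall, f1 = sentence_level_correction_prf(examples)
print(precision, recall, f1)
```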

If you find this work useful for your research, please cite the following paper: C-LLM: Learn to Check Chinese Spelling Errors Character by Character (https://arxiv.org/pdf/2406.16536)

@article{li2024c,

title={C-LLM: Learn to Check Chinese Spelling Errors Character by Character},

author={Li, Kunting and Hu, Yong and He, Liang and Meng, Fandong and Zhou, Jie},

journal={arXiv preprint arXiv:2406.16536},

year={2024}

}