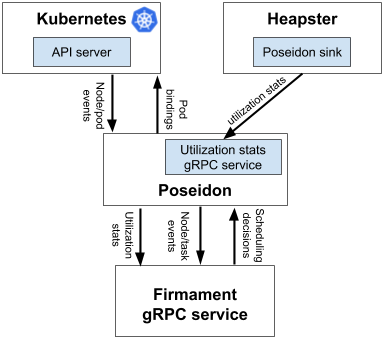

The Poseidon/Firmament scheduler incubation project integrates the Firmament scheduler, described in the Firmament OSDI paper, into Kubernetes. At a very high level, the Poseidon/Firmament scheduler augments the current Kubernetes scheduling capabilities by adding novel flow-network-graph-based scheduling alongside the default Kubernetes scheduler. Firmament models workloads on a cluster as flow networks and runs min-cost flow optimizations over these networks to make scheduling decisions.

Because rescheduling is inherent to this approach, the new scheduler can produce globally optimal scheduling for a given policy and keeps refining workload placements as the cluster changes.
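To make the flow-network idea concrete, here is a minimal toy sketch in Python. It is not Firmament's cost model or solver: the graph layout (one source, one sink, a single "unscheduled" node), the `schedule` helper, the per-pod costs, and the `unscheduled_cost` penalty are all assumptions chosen for illustration. Each pod pushes one unit of flow either through a node with a free slot or through the expensive "unscheduled" node, and a simple successive-shortest-path min-cost flow picks the cheapest overall assignment.

```python
from collections import deque


class MinCostFlow:
    """Toy min-cost flow via successive shortest paths (illustration only)."""

    def __init__(self, n):
        self.n = n
        # Each edge is a list: [to, residual_capacity, cost, index_of_reverse_edge]
        self.graph = [[] for _ in range(n)]

    def add_edge(self, u, v, cap, cost):
        self.graph[u].append([v, cap, cost, len(self.graph[v])])
        self.graph[v].append([u, 0, -cost, len(self.graph[u]) - 1])

    def solve(self, s, t):
        total_flow = total_cost = 0
        while True:
            # Queue-based Bellman-Ford: residual edges may have negative cost.
            dist = [float("inf")] * self.n
            prev = [None] * self.n
            queued = [False] * self.n
            dist[s] = 0
            queue = deque([s])
            while queue:
                u = queue.popleft()
                queued[u] = False
                for i, (v, cap, cost, _) in enumerate(self.graph[u]):
                    if cap > 0 and dist[u] + cost < dist[v]:
                        dist[v] = dist[u] + cost
                        prev[v] = (u, i)
                        if not queued[v]:
                            queued[v] = True
                            queue.append(v)
            if prev[t] is None:  # no augmenting path left
                return total_flow, total_cost
            # Find the bottleneck capacity along the path, then augment.
            push, v = float("inf"), t
            while v != s:
                u, i = prev[v]
                push = min(push, self.graph[u][i][1])
                v = u
            v = t
            while v != s:
                u, i = prev[v]
                edge = self.graph[u][i]
                edge[1] -= push
                self.graph[v][edge[3]][1] += push
                v = u
            total_flow += push
            total_cost += push * dist[t]


def schedule(costs, slots, unscheduled_cost=1000):
    """costs[pod][node] is a placement cost; slots[node] is free capacity.

    Returns (placement, total_cost); placement[pod] is a node name or None.
    All names and cost values here are hypothetical.
    """
    pods, nodes = sorted(costs), sorted(slots)
    source, sink, unscheduled = 0, 1, 2
    pod_id = {p: 3 + i for i, p in enumerate(pods)}
    node_id = {n: 3 + len(pods) + j for j, n in enumerate(nodes)}
    name_of = {v: k for k, v in node_id.items()}

    net = MinCostFlow(3 + len(pods) + len(nodes))
    for p in pods:
        net.add_edge(source, pod_id[p], 1, 0)
        net.add_edge(pod_id[p], unscheduled, 1, unscheduled_cost)
        for n, cost in costs[p].items():
            net.add_edge(pod_id[p], node_id[n], 1, cost)
    for n in nodes:
        net.add_edge(node_id[n], sink, slots[n], 0)
    net.add_edge(unscheduled, sink, len(pods), 0)

    _, total_cost = net.solve(source, sink)

    placement = {p: None for p in pods}
    for p in pods:
        for v, cap, _, _ in net.graph[pod_id[p]]:
            if v in name_of and cap == 0:  # saturated pod->node arc
                placement[p] = name_of[v]
    return placement, total_cost
```

For example, with three pods competing for two single-slot nodes, `schedule({"pod-a": {"node-1": 2, "node-2": 5}, "pod-b": {"node-1": 1, "node-2": 4}, "pod-c": {"node-1": 3, "node-2": 3}}, {"node-1": 1, "node-2": 1})` places `pod-b` on `node-1` and `pod-c` on `node-2`, and leaves `pod-a` unscheduled. The decision is made over all pods at once rather than pod by pod, which is the property the flow formulation is meant to provide.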

With Kubernetes multiple-scheduler support, each new pod is typically scheduled by the default scheduler. However, Kubernetes can be instructed to use another scheduler by specifying the name of a custom scheduler at pod deployment time (in our case, by setting 'schedulerName' to Poseidon in the pod template). The default scheduler then ignores that Pod and lets the Poseidon scheduler place it on a suitable node.
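As a minimal sketch of that opt-in, the following Python snippet builds a Pod manifest whose `spec.schedulerName` points at Poseidon and prints it as JSON, which `kubectl apply -f` also accepts. The helper name `poseidon_pod_manifest`, the pod name, and the image are hypothetical, and the lowercase value `"poseidon"` is an assumption about how the scheduler is registered in your cluster; adjust it to match your deployment.

```python
import json


def poseidon_pod_manifest(name, image, scheduler_name="poseidon"):
    """Return a Pod manifest that asks a custom scheduler to place the pod.

    `scheduler_name` is assumed to be "poseidon"; use whatever name your
    Poseidon deployment actually registers.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pods with a non-default schedulerName are skipped by the
            # default scheduler and left for the named scheduler.
            "schedulerName": scheduler_name,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, for illustration only.
    print(json.dumps(poseidon_pod_manifest("nginx-demo", "nginx"), indent=2))
```

Pods created without `schedulerName` continue to be handled by the default scheduler, so both schedulers can coexist in the same cluster.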

Flow graph scheduling provides the following:

- Support for high-volume workload placement.

- Complex rule constraints.

- Globally optimal scheduling for a given policy.

- Extremely high scalability.

NOTE: It is also important to highlight that Firmament scales much better than the default scheduler as the number of nodes in a cluster increases.

Alpha Release

Poseidon/Firmament Integration architecture

For more details about the design of this project, see the design document.

For in-cluster installation of Poseidon, please start here.

Developers, please refer here.

For details of the coordinated release process between the Firmament and Poseidon repos, refer here.

Please refer to the link for detailed throughput performance comparison results between the Poseidon/Firmament scheduler and the Kubernetes default scheduler.

- Release 0.9 onwards:

  - Provide High Availability/Failover for in-memory Firmament/Poseidon processes.

  - Scheduling support for “Dynamic Persistent Volume Provisioning”.

  - Optimizations for reducing the number of arcs by limiting the number of eligible nodes in a cluster.

  - CPU/Memory combination optimizations.

  - Transition to the Metrics Server API. Upstreaming our new Heapster sink is no longer possible because Heapster is being deprecated.

  - Continuously running scheduling loop versus scheduling intervals mechanism.

  - Priority Pre-emption support.

  - Priority based scheduling.

- Release 0.8 – Target Date 15th February, 2019:

  - Pod Affinity/Anti-Affinity optimization in 'Firmament' code.

- Release 0.7 – Target Date 19th November, 2018:

  - Support for Max. Pods per Node.

  - Co-Existence with Default Scheduler.

  - Node Prefer/Avoid pods priority function.

- Release 0.6 – Target Date 12th November, 2018:

  - Gang Scheduling.

- Release 0.5 – Released on 25th October, 2018:

  - Support for Ephemeral Storage, in addition to CPU/Memory.

  - Implementation for Success/Failure of scheduling events.

  - Scheduling support for “Pre-bound Persistent Volume Provisioning”.

- Release 0.4 – Released on 18th August, 2018:

  - Taints & Tolerations.

  - Support for Pod anti-affinity symmetry.

  - Throughput Performance Optimizations.

- Release 0.3 – Released on 21st June, 2018:

  - Pod level Affinity and Anti-Affinity implementation using multi-round scheduling.

- Release 0.2 – Released on 27th May, 2018:

  - Node level Affinity and Anti-Affinity implementation.

- Release 0.1 – Released on 3rd May, 2018:

  - Baseline Poseidon/Firmament scheduling capabilities using a new multi-dimensional CPU/Memory cost model are part of this release. This release does not include node and pod level affinity/anti-affinity capabilities; these are built out in the later releases listed above.

  - The entire test-infra BOT automation jobs are in place as part of this release.