This repository implements the gem exchange (except for the gold tokens) and more closely resembles the real Splendor environment than the original repository.

Based on the superb repo https://github.com/suragnair/alpha-zero-general, but with support for games with more than 2 players, proper handling of invalid actions, and a 25-100x speed improvement. You can test it quickly in your browser at https://github.com/cestpasphoto/cestpasphoto.github.io (playing only, no training).

- Added Dirichlet Noise as per original DeepMind paper, using this pull request

- Compute policy gradients properly when some actions are invalid, based on A Closer Look at Invalid Action Masking in Policy Gradient Algorithms and its repo

- Support games with more than 2 players

- Speed optimized

- Reaching about 3000 rollouts/sec on 1 CPU core without batching and without GPU, meaning 1 full game in 30 seconds when using 1600 rollouts for each move. All in all, that is a 25x to 100x speed improvement compared to initial repo, see details here.

- Neural Network inference speed and especially latency improved, thanks to ONNX

- MCTS and logic optimized thanks to Numba; according to profilers, NN inference now accounts for >80% of the time spent during self-play

- Memory optimized with minimal performance impact

- use of in-memory compression

- regularly clean old nodes in MCTS tree

- Algorithm improvements based on Accelerating Self-Play Learning in Go

- Playout Cap Randomization

- Forced Playouts and Policy Target Pruning

- Global Pooling

- Auxiliary Policy Targets

- Score Targets
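The first two items of the list above (Dirichlet noise at the root and invalid-action masking) can be illustrated with a small sketch. It is not the repository's code: the function names, the example values of `alpha` and `epsilon`, and the choice to restrict the noise to valid actions are assumptions made for illustration. Masking is done by giving invalid actions zero weight in the softmax, which is equivalent to setting their logits to minus infinity.

```python
import math
import random


def masked_softmax(logits, valid):
    """Softmax restricted to valid actions; invalid ones get probability 0."""
    # Equivalent to replacing invalid logits by -inf before the softmax.
    top = max(x for x, ok in zip(logits, valid) if ok)
    exps = [math.exp(x - top) if ok else 0.0 for x, ok in zip(logits, valid)]
    total = sum(exps)
    return [e / total for e in exps]


def add_dirichlet_noise(priors, valid, alpha, epsilon, rng):
    """Mix Dirichlet(alpha) noise into the root priors of valid actions."""
    # A Dirichlet sample is a vector of normalized Gamma(alpha, 1) draws.
    # Assumption: noise is only drawn for valid actions.
    draws = [rng.gammavariate(alpha, 1.0) if ok else 0.0 for ok in valid]
    total = sum(draws)
    noise = [d / total for d in draws]
    return [(1.0 - epsilon) * p + epsilon * n for p, n in zip(priors, noise)]


rng = random.Random(0)
logits = [2.0, 0.5, -1.0, 3.0]          # raw network outputs (made up)
valid = [True, True, False, True]       # third action is not allowed
priors = masked_softmax(logits, valid)
noisy_priors = add_dirichlet_noise(priors, valid, alpha=0.3, epsilon=0.25, rng=rng)
print(priors)
print(noisy_priors)
```

Both vectors still sum to 1 and keep a probability of exactly 0 on the invalid action, so the search never explores it and no gradient flows through it.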

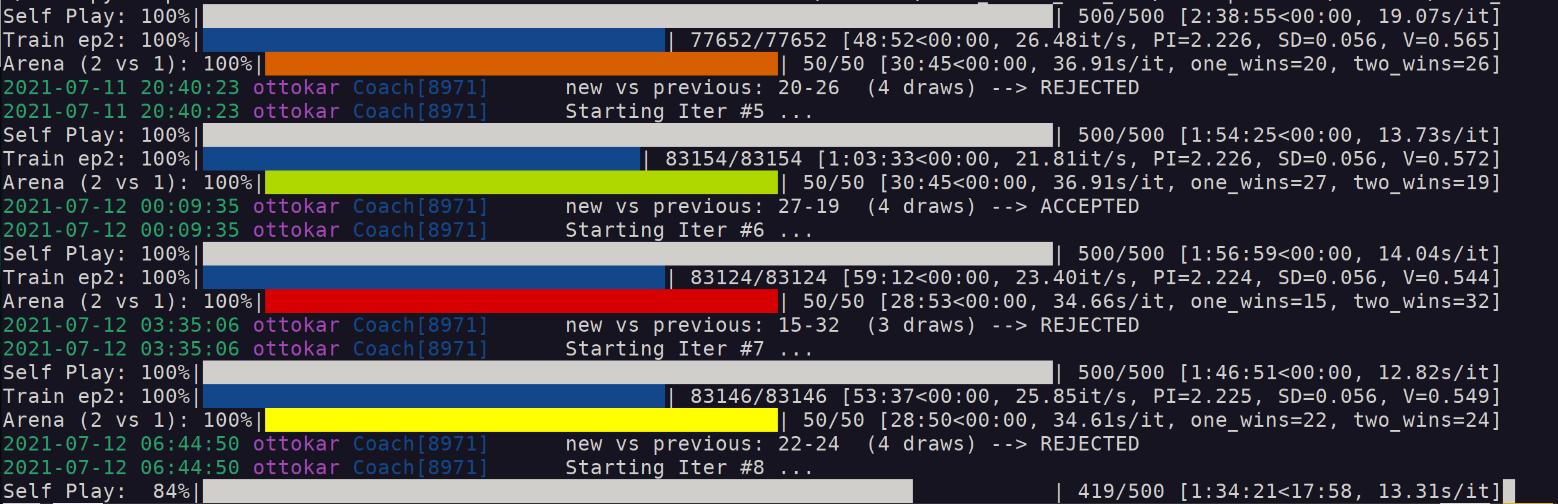

Other changes: improved prints (logging, tqdm, colored bars depending on current Arena results), and parameters can be set on the command line (new parameters were added, like a time limit). Still to do: set up hyperparameter optimization (like Hyperband or Population-Based Training) and an ELO-like ranking.

Supported games: Splendor, The Little Prince - Make me a planet, Machi Koro (Minivilles), Santorini with basic gods

- Support of Splendor game with 2 players

- Support of 3-4 players (just change NUMBER_PLAYERS in main.py)

- Proper MCTS handling of "chance" factor when revealing new deck card

- Optimized implementation of Splendor, thanks to Numba

- Explore various architectures

- Added pretrained models for 2-3-4 players
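To illustrate the "chance" handling mentioned in the list above: one common way to treat a random card reveal during search is to redraw the outcome at every simulation instead of freezing the first draw, so that the action's value converges to an average over possible cards. The sketch below shows only that idea; it is not necessarily how this repository implements it, and the function name, card labels, probabilities and values are all hypothetical.

```python
import random


def evaluate_chance_action(outcomes, value_of, n_simulations, rng):
    """Estimate the value of an action whose result depends on a random draw.

    outcomes: list of (outcome, probability) pairs
    value_of: function mapping an outcome to a value in [-1, 1]
    """
    items = [outcome for outcome, _ in outcomes]
    weights = [probability for _, probability in outcomes]
    visits = {}
    total = 0.0
    for _ in range(n_simulations):
        # Redraw the revealed card at each simulation rather than caching it.
        drawn = rng.choices(items, weights=weights, k=1)[0]
        visits[drawn] = visits.get(drawn, 0) + 1
        total += value_of(drawn)
    return total / n_simulations, visits


rng = random.Random(42)
outcomes = [("useful card", 0.25), ("useless card", 0.75)]
card_values = {"useful card": 1.0, "useless card": -1.0}
mean_value, visits = evaluate_chance_action(
    outcomes, card_values.get, n_simulations=20000, rng=rng
)
print(mean_value, visits)
```

With these made-up numbers the estimate approaches the expected value 0.25 * 1 + 0.75 * (-1) = -0.5, whereas caching a single draw would report either +1 or -1.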

There are some limitations: the implemented logic doesn't allow you to both take gems from the bank and give some back in the same turn (whereas the real rules allow it); you can either take 1-2-3 gems or give back 1-2 gems.

- Quick implementation of Minivilles, with a handful of limitations

- Quick implementation of The Little Prince, with limitations. Main ones are:

- No support of 2 players, only 3-5 players are supported

- When market is empty, current player doesn't decide card type, it is randomly chosen.

- Grey sheep are displayed on the console using a grey wolf emoji, and brown sheep are displayed using a brown goat.

- Own implementation of Santorini, policy for initial status is user switchable (predefined, random or chosen by players)

- Optimized implementation, thanks to Numba again

- Support of god powers (basic ones only)

- Explore various architectures, max pooling in addition to 2d convolutions seems to help

About 70% win rate against Ai Ai and 90+% win rate against BoardSpace AI. See more details here

Click here for details about training, running or playing

pip3 install onnxruntime numba tqdm colorama coloredlogs

and

pip3 install torch --extra-index-url https://download.pytorch.org/whl/cpu

Contrary to before, the latest versions of onnxruntime and pytorch now give the best performance; see GenericNNetWrapper.py line 255

./pit.py -p splendor/pretrained_2players.pt -P human -n 1

Swap the -p and -P options if the human wants to be the first player. You can also make 2 networks fight each other.

Contrary to the baseline version, pit.py automatically retrieves the training settings and loads them (numMCTSSims, num_channels, ...), although you can override them if you want; you may even select 2 different architectures to compare them!

Compared to the initial version, I target a smaller network but more MCTS simulations, which lets the search see further ahead: this approach is less efficient on GPU, but similar on CPU, and it allows a stronger AI.

main.py -m 1600 -v 15 -T 30 -e 500 -i 10 -p 2 -d 0.50 -b 32 -l 0.0003 --updateThreshold 0.55 -C ../results/mytest:

- Start by defining the proper number of players in SplendorGame.py and disabling card reserve actions in the first lines of splendor/SplendorLogicNumba.py

- -v 15: defines the weight of the value loss relative to the policy loss; a higher value gives more weight to the value loss. The suragnair value of 1 led to very bad performance; I had good results with -v 30 during the first iterations, then decreasing it down to -v 5

- -b 32 -l 0.0003 -p 2: define batch size, learning rate and number of epochs. A larger number of epochs degrades performance, as do larger batch sizes

- --updateThreshold 0.55: the result of an iteration is kept if the winning ratio in self-play is above this threshold. The suragnair value of 60% wins seems too high to me
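As a rough illustration of what the -v weight does, here is a minimal sketch assuming the total loss has the form policy loss + weight × value loss. The exact loss used by this repository is not shown in this document, so this formula, the function names and the sample numbers are assumptions.

```python
import math


def policy_loss(target_pi, predicted_pi):
    """Cross-entropy between the MCTS policy target and the predicted policy."""
    return -sum(t * math.log(p) for t, p in zip(target_pi, predicted_pi) if t > 0)


def value_loss(target_v, predicted_v):
    """Squared error between the game outcome and the predicted value."""
    return (target_v - predicted_v) ** 2


def total_loss(target_pi, predicted_pi, target_v, predicted_v, value_weight):
    """Assumed form of the combined loss: policy + value_weight * value."""
    return policy_loss(target_pi, predicted_pi) + value_weight * value_loss(
        target_v, predicted_v
    )


sample = ([1.0, 0.0], [0.5, 0.5], 1.0, 0.5)  # made-up targets and predictions
loss_v1 = total_loss(*sample, value_weight=1)
loss_v15 = total_loss(*sample, value_weight=15)
print(loss_v1, loss_v15)
```

With a weight of 15, the same value error contributes 15 times more to the total, so gradient updates favor improving the value head over the policy head.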

The option -V allows you to switch between different NN architectures. If you specify a previous checkpoint that uses a different architecture, it will still try to load as many weights as possible. This lets me start training with small/fast networks and then experiment with larger ones. I also usually run several trainings in parallel; you can evaluate the results obtained in the last 24 hours by using this command (execute it as many times as you have threads): ./pit.py -A 24 -T 8

I usually stop training when the 5 last iterations (or -i value) were rejected.
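The acceptance rule behind --updateThreshold and the stopping habit described above can be sketched as follows. The function names are hypothetical, and two details are assumptions: draws are ignored when computing the winning ratio, and a ratio exactly equal to the threshold is accepted.

```python
def accept_iteration(new_wins, old_wins, update_threshold=0.55):
    """Keep the new network if its share of decided games reaches the threshold."""
    decided = new_wins + old_wins  # assumption: draws are not counted
    if decided == 0:
        return False
    return new_wins / decided >= update_threshold


def should_stop(accepted_history, patience=5):
    """Stop once the last `patience` iterations were all rejected."""
    if len(accepted_history) < patience:
        return False
    return not any(accepted_history[-patience:])


history = [True, True, False, False, False, False, False]
print(accept_iteration(12, 8))   # 60% of decided games won by the new network
print(accept_iteration(10, 10))  # 50% of decided games
print(should_stop(history))      # the last 5 iterations were rejected
```

A lower threshold such as 0.55 accepts new networks more often than 0.60, which matters when arena results are noisy and only a few games are played per iteration.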

Using forced rollouts, surprise weight, a cyclic learning rate, or tuning the cpuct value has not led to any significant improvement.

It is possible to use multiple threads by changing intra_op_num_threads and inter_op_num_threads values in GenericNNetWrapper.py (inference) and torch.set_num_threads() (training).

I tried to parallelize the code into multiple threads or multiple processes by running parallel and independent games, but I always had poor results (6 processes resulted in only 2x speedup at best): the cause could be that computations are limited by memory bandwidth, not by CPU speed.