Terraform module to provision an S3 bucket to store the `terraform.tfstate` file and a DynamoDB table to lock the state file to prevent concurrent modifications and state corruption.

The module supports the following:

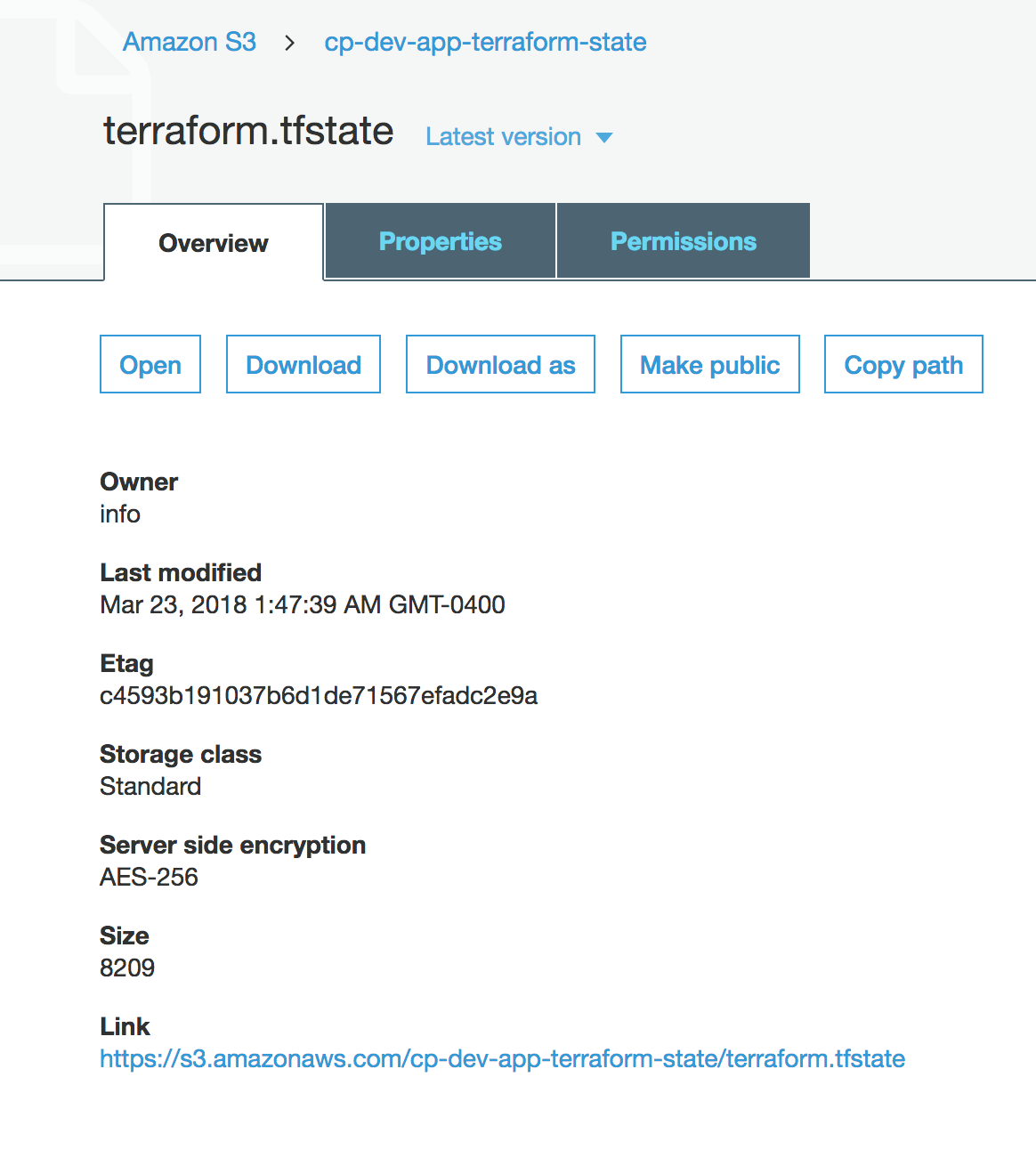

- Forced server-side encryption at rest for the S3 bucket

- S3 bucket versioning to allow for Terraform state recovery in the case of accidental deletions and human errors

- State locking and consistency checking via a DynamoDB table to prevent concurrent operations

- DynamoDB server-side encryption

For details, see the Terraform S3 backend documentation: https://www.terraform.io/docs/backends/types/s3.html

NOTE: The operators of the module (IAM users) must have permissions to create S3 buckets and DynamoDB tables when running `terraform plan` and `terraform apply`.
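As a rough starting point, those operator permissions could be granted with a policy like the one below. This is a coarse sketch, not a vetted least-privilege policy: it allows all S3 and DynamoDB actions on resources whose names start with `eg-test-terraform-state`, which assumes the `namespace = "eg"`, `stage = "test"`, `name = "terraform"`, `attributes = ["state"]` and `region = "us-east-1"` values from the usage example in this README plus the default `-` delimiter. The policy name `tfstate-backend-operator` is hypothetical.

```hcl
# Hypothetical, coarse policy for operators running `terraform plan` / `apply`
# against this module. Scoped by name prefix only; tighten before real use.
data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "tfstate_backend_operator" {
  # State bucket and the objects inside it
  statement {
    sid     = "ManageStateBucket"
    actions = ["s3:*"]
    resources = [
      "arn:aws:s3:::eg-test-terraform-state*",
      "arn:aws:s3:::eg-test-terraform-state*/*",
    ]
  }

  # Lock table (wildcard because the exact table name is not assumed here)
  statement {
    sid     = "ManageLockTable"
    actions = ["dynamodb:*"]
    resources = [
      "arn:aws:dynamodb:us-east-1:${data.aws_caller_identity.current.account_id}:table/eg-test-terraform-state*",
    ]
  }
}

resource "aws_iam_policy" "tfstate_backend_operator" {
  name   = "tfstate-backend-operator" # hypothetical policy name
  policy = data.aws_iam_policy_document.tfstate_backend_operator.json
}
```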

NOTE: This module cannot be used to apply changes to the `mfa_delete` feature of the bucket. Changes to `mfa_delete` can only be made manually, using the root credentials (with MFA) of the AWS account where the bucket resides. Please see: hashicorp/terraform-provider-aws#62

This project is part of our comprehensive "SweetOps" approach towards DevOps.

It's 100% Open Source and licensed under the Apache License 2.0.

We literally have hundreds of Terraform modules that are Open Source and well-maintained. Check them out!

IMPORTANT: The `master` branch is used in `source` just as an example. In your code, do not pin to `master`, because there may be breaking changes between releases. Instead, pin to the release tag (e.g. `?ref=tags/x.y.z`) of one of our latest releases.
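For example, a pinned `source` might look like the following. Here `x.y.z` is the same placeholder used in the note above and must be replaced with an actual release tag; `region` is set because it is the only required input.

```hcl
module "terraform_state_backend" {
  # Pin to a release tag instead of master; x.y.z is a placeholder.
  source = "git::https://github.com/cloudposse/terraform-aws-tfstate-backend.git?ref=tags/x.y.z"
  region = "us-east-1" # the only required input
}
```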

- Define the module in your `.tf` file using local state:

  ```hcl
  module "terraform_state_backend" {
    source     = "git::https://github.com/cloudposse/terraform-aws-tfstate-backend.git?ref=master"
    namespace  = "eg"
    stage      = "test"
    name       = "terraform"
    attributes = ["state"]
    region     = "us-east-1"
  }
  ```

- Run `terraform init`.

- Run `terraform apply`. This will create the state bucket and locking table.

- Then add a `backend` that uses the new bucket and table:

  ```hcl
  terraform {
    backend "s3" {
      region         = "us-east-1"
      bucket         = "< the name of the S3 bucket >"
      key            = "terraform.tfstate"
      dynamodb_table = "< the name of the DynamoDB table >"
      encrypt        = true
    }
  }

  module "another_module" {
    source = "....."
  }
  ```

- Run `terraform init` again. Terraform will detect that you're trying to move your state into S3 and ask, "Do you want to copy existing state to the new backend?" Enter "yes". The state is now stored in the bucket, and the DynamoDB table will be used to lock it to prevent concurrent modifications.
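The `bucket` and `dynamodb_table` placeholders in the backend block have to be filled in with the names the module generated. One way to read them, sketched here as an assumption rather than the module's documented workflow, is to re-export the module's `s3_bucket_id` and `dynamodb_table_name` outputs from the root module. The output names `tfstate_bucket` and `tfstate_lock_table` are hypothetical.

```hcl
# Hypothetical root-module outputs that surface the generated names.
# For an S3 bucket, the resource ID is the bucket name.
output "tfstate_bucket" {
  value = module.terraform_state_backend.s3_bucket_id
}

output "tfstate_lock_table" {
  value = module.terraform_state_backend.dynamodb_table_name
}
```

After `terraform apply`, `terraform output tfstate_bucket` and `terraform output tfstate_lock_table` print the values to copy into the `backend "s3"` block. They have to be copied in literally, because a `backend` block cannot reference variables or module outputs.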

Available `make` targets:

- `help`: Help screen
- `help/all`: Display help for all targets
- `help/short`: This help short screen
- `lint`: Lint terraform code

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| acl | The canned ACL to apply to the S3 bucket | string | `private` | no |
| additional_tag_map | Additional tags for appending to each tag map | map(string) | `<map>` | no |
| attributes | Additional attributes (e.g. `state`) | list(string) | `<list>` | no |
| block_public_acls | Whether Amazon S3 should block public ACLs for this bucket | bool | `true` | no |
| block_public_policy | Whether Amazon S3 should block public bucket policies for this bucket | string | `true` | no |
| context | Default context to use for passing state between label invocations | object | `<map>` | no |
| delimiter | Delimiter to be used between namespace, environment, stage, name and attributes | string | `-` | no |
| enable_server_side_encryption | Enable DynamoDB server-side encryption | bool | `true` | no |
| environment | Environment, e.g. 'prod', 'staging', 'dev', 'pre-prod', 'UAT' | string | `""` | no |
| force_destroy | Whether the S3 bucket can be destroyed even if it contains objects. These objects are not recoverable | bool | `false` | no |
| ignore_public_acls | Whether Amazon S3 should ignore public ACLs for this bucket | bool | `true` | no |
| label_order | The naming order of the id output and Name tag | list(string) | `<list>` | no |
| mfa_delete | Whether versions of S3 objects can only be deleted with MFA (Terraform cannot apply changes to this value; see hashicorp/terraform-provider-aws#629) | bool | `false` | no |
| name | Solution name, e.g. 'app' or 'jenkins' | string | `terraform` | no |
| namespace | Namespace, which could be your organization name or abbreviation, e.g. 'eg' or 'cp' | string | `""` | no |
| prevent_unencrypted_uploads | Prevent uploads of unencrypted objects to S3 | bool | `true` | no |
| profile | AWS profile name as set in the shared credentials file | string | `""` | no |
| read_capacity | DynamoDB read capacity units | string | `5` | no |
| regex_replace_chars | Regex to replace chars with empty string in namespace, environment, stage and name. By default only hyphens, letters and digits are allowed; all other chars are removed | string | `/[^a-zA-Z0-9-]/` | no |
| region | AWS Region the S3 bucket should reside in | string | n/a | yes |
| restrict_public_buckets | Whether Amazon S3 should restrict public bucket policies for this bucket | bool | `true` | no |
| role_arn | The role to be assumed | string | `""` | no |
| stage | Stage, e.g. 'prod', 'staging', 'dev', OR 'source', 'build', 'test', 'deploy', 'release' | string | `""` | no |
| tags | Additional tags (e.g. `map('BusinessUnit','XYZ')`) | map(string) | `<map>` | no |
| terraform_backend_config_file_name | Name of the Terraform backend config file | string | `terraform.tf` | no |
| terraform_backend_config_file_path | The path to the Terraform project directory | string | `""` | no |
| terraform_state_file | The path to the state file inside the bucket | string | `terraform.tfstate` | no |
| terraform_version | The minimum required Terraform version | string | `0.12.2` | no |
| write_capacity | DynamoDB write capacity units | string | `5` | no |
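The security-related inputs above already default to the hardened setting. Some teams prefer to spell them out so that a change in a future module default cannot silently alter the bucket or table. The sketch below does that; every value shown is simply the documented default from the table, and `x.y.z` is a placeholder for a real release tag.

```hcl
module "terraform_state_backend" {
  source     = "git::https://github.com/cloudposse/terraform-aws-tfstate-backend.git?ref=tags/x.y.z"
  namespace  = "eg"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]
  region     = "us-east-1"

  # S3 bucket protections (documented defaults, set explicitly)
  acl                         = "private"
  prevent_unencrypted_uploads = true
  block_public_acls           = true
  block_public_policy         = true
  ignore_public_acls          = true
  restrict_public_buckets     = true
  force_destroy               = false # destroying a non-empty bucket fails

  # DynamoDB lock table
  enable_server_side_encryption = true
  read_capacity                 = 5
  write_capacity                = 5
}
```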

| Name | Description |
|---|---|
| dynamodb_table_arn | DynamoDB table ARN |
| dynamodb_table_id | DynamoDB table ID |
| dynamodb_table_name | DynamoDB table name |
| s3_bucket_arn | S3 bucket ARN |
| s3_bucket_domain_name | S3 bucket domain name |
| s3_bucket_id | S3 bucket ID |
| terraform_backend_config | Rendered Terraform backend config file |
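The `terraform_backend_config` output holds the rendered backend configuration, and the `terraform_backend_config_file_path` / `terraform_backend_config_file_name` inputs control where a backend config file goes. A minimal sketch, under two assumptions: that setting a non-empty path makes the module write the file to `<path>/<file name>` (check the module source for the release you pin), and that you also want to print the rendered config from the root module under the hypothetical output name `backend_config`.

```hcl
module "terraform_state_backend" {
  source     = "git::https://github.com/cloudposse/terraform-aws-tfstate-backend.git?ref=tags/x.y.z"
  namespace  = "eg"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]
  region     = "us-east-1"

  # Assumption: with a non-empty path, the module writes the rendered
  # backend config to <path>/<file name> (file name defaults to terraform.tf).
  terraform_backend_config_file_path = "."
  terraform_backend_config_file_name = "backend.tf"
}

# Hypothetical root-module output that prints the rendered backend config.
output "backend_config" {
  value = module.terraform_state_backend.terraform_backend_config
}
```

After `terraform apply`, `terraform output backend_config` shows the rendered text, which can be compared against the hand-written `backend "s3"` block.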

Like this project? Please give it a ★ on our GitHub! (it helps us a lot)

Are you using this project or any of our other projects? Consider leaving a testimonial. =)

Check out these related projects.

- terraform-aws-dynamodb - Terraform module that implements AWS DynamoDB with support for AutoScaling

- terraform-aws-dynamodb-autoscaler - Terraform module to provision DynamoDB autoscaler

Got a question?

File a GitHub issue, send us an email or join our Slack Community.

Work directly with our team of DevOps experts via email, slack, and video conferencing.

We provide commercial support for all of our Open Source projects. As a Dedicated Support customer, you have access to our team of subject matter experts at a fraction of the cost of a full-time engineer.

- Questions. We'll use a Shared Slack channel between your team and ours.

- Troubleshooting. We'll help you triage why things aren't working.

- Code Reviews. We'll review your Pull Requests and provide constructive feedback.

- Bug Fixes. We'll rapidly work to fix any bugs in our projects.

- Build New Terraform Modules. We'll develop original modules to provision infrastructure.

- Cloud Architecture. We'll assist with your cloud strategy and design.

- Implementation. We'll provide hands-on support to implement our reference architectures.

Are you interested in custom Terraform module development? Submit your inquiry using our form today and we'll get back to you ASAP.

Join our Open Source Community on Slack. It's FREE for everyone! Our "SweetOps" community is where you get to talk with others who share a similar vision for how to roll out and manage infrastructure. This is the best place to talk shop, ask questions, solicit feedback, and work together as a community to build totally sweet infrastructure.

Sign up for our newsletter that covers everything on our technology radar. Receive updates on what we're up to on GitHub as well as awesome new projects we discover.

Please use the issue tracker to report any bugs or file feature requests.

If you are interested in being a contributor and want to get involved in developing this project or help out with our other projects, we would love to hear from you! Shoot us an email.

In general, PRs are welcome. We follow the typical "fork-and-pull" Git workflow.

- Fork the repo on GitHub

- Clone the project to your own machine

- Commit changes to your own branch

- Push your work back up to your fork

- Submit a Pull Request so that we can review your changes

NOTE: Be sure to merge the latest changes from "upstream" before making a pull request!

Copyright © 2017-2019 Cloud Posse, LLC

See LICENSE for full details.

Licensed to the Apache Software Foundation (ASF) under one or more contributor license agreements. See the NOTICE file distributed with this work for additional information regarding copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

https://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

All other trademarks referenced herein are the property of their respective owners.

This project is maintained and funded by Cloud Posse, LLC. Like it? Please let us know by leaving a testimonial!

We're a DevOps Professional Services company based in Los Angeles, CA. We ❤️ Open Source Software.

We offer paid support on all of our projects.

Check out our other projects, follow us on Twitter, apply for a job, or hire us to help with your cloud strategy and implementation.

Contributors:

- Andriy Knysh
- Erik Osterman
- Maarten van der Hoef
- Vladimir