Apache Spark Cluster supporting Patient/Donor stem cell matching

This project builds an Apache Spark cluster in standalone mode, with a JupyterLab interface, on top of Docker. Learn Apache Spark through its Scala, Python (PySpark) and R (SparkR) APIs by running the Jupyter notebooks, which include examples of how to read, process and write data. This work is based on (and forked from) the original project by André Perez - dekoperez - andre.marcos.perez@gmail.com.

My extension of this work applies Apache Spark/GraphX and Hadoop to N-locus haplotype imputation over haplotype graphs, in which nodes represent sub-haplotype components and the edges connecting them are generated from public haplotype frequencies. This will be used to provide a scalable patient/donor matching solution for bone marrow transplants.
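The graph idea above can be illustrated with a minimal, single-machine sketch in plain Python. It is not the project's Spark/GraphX implementation and not the GRIMM algorithm: the locus names (`A`, `B`, `C`), the allele labels and the frequencies are hypothetical toy values, and `build_graph` and `impute` are illustrative names. As a modeling assumption, each full N-locus haplotype is linked to every one of its sub-haplotype nodes by an edge weighted with the haplotype's frequency; a partially typed sample is then imputed by normalizing the weights of the edges leaving its sub-haplotype node.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical locus order for the toy example.
LOCI = ("A", "B", "C")


def sub_haplotypes(haplotype):
    """Yield every proper, non-empty sub-haplotype as (locus, allele) pairs in locus order."""
    pairs = tuple(zip(LOCI, haplotype))
    for size in range(1, len(pairs)):
        yield from combinations(pairs, size)


def build_graph(frequencies):
    """Connect each sub-haplotype node to the full haplotypes that contain it.

    Edge weights are the full haplotypes' frequencies (assumed input format:
    {full_haplotype_tuple: frequency}).
    """
    edges = defaultdict(dict)
    for haplotype, freq in frequencies.items():
        for sub in sub_haplotypes(haplotype):
            edges[sub][haplotype] = freq
    return edges


def impute(edges, typing):
    """Return P(full haplotype | partial typing) from the normalized edge weights."""
    # Order the typed loci the same way sub_haplotypes() does, so the node keys match.
    node = tuple(sorted(typing.items(), key=lambda kv: LOCI.index(kv[0])))
    candidates = edges.get(node, {})
    total = sum(candidates.values())
    if total == 0:
        return {}
    return {hap: freq / total for hap, freq in candidates.items()}


if __name__ == "__main__":
    # Toy frequencies for illustration only, not real population data.
    toy = {
        ("a1", "b1", "c1"): 0.3,
        ("a1", "b2", "c1"): 0.1,
        ("a2", "b1", "c2"): 0.2,
    }
    graph = build_graph(toy)
    print(impute(graph, {"A": "a1", "C": "c1"}))
```

Typing only loci `A` and `C` leaves two candidate full haplotypes, weighted 0.75 and 0.25. The Python dictionary only keeps the example self-contained; in the project the graph is meant to be held and traversed with Spark/GraphX.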

TL;DR

```bash
curl -LO https://raw.githubusercontent.com/kunau/spark-standalone-cluster-on-docker/master/docker-compose.yml
docker-compose up
```

Quick Start

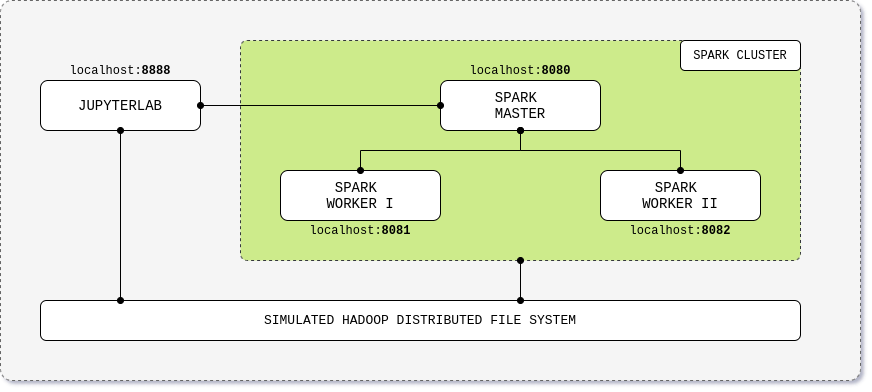

Cluster overview

| Application | URL | Description |
|---|---|---|
| JupyterLab | localhost:8888 | Cluster interface with built-in Jupyter notebooks |
| Apache Spark Master | localhost:8080 | Spark Master node |
| Apache Spark Worker I | localhost:8081 | Spark Worker node with 1 core and 512m of memory (default) |
| Apache Spark Worker II | localhost:8082 | Spark Worker node with 1 core and 512m of memory (default) |
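With the default settings in the table above, the two workers together offer 2 cores and 1024 MB of executor memory, but an executor must fit on a single worker. The sketch below is a simplified Python model, not Spark's scheduler: it assumes each worker hosts as many executors as fit in both its cores and its memory, accepts only the `m` and `g` size suffixes, and ignores other applications already holding resources. The `Worker` type and the function names are hypothetical. It shows why, on the default cluster, an application that requests more than 512m of executor memory gets no executors.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    cores: int
    memory_mb: int


def parse_memory_mb(value):
    """Convert a Spark-style size such as '512m' or '1g' to megabytes (m/g suffixes only)."""
    units = {"m": 1, "g": 1024}
    value = value.strip().lower()
    return int(value[:-1]) * units[value[-1]]


def executors_that_fit(workers, executor_cores, executor_memory):
    """Count executors that fit in a simplified model of a standalone cluster."""
    need_mb = parse_memory_mb(executor_memory)
    total = 0
    for worker in workers:
        # An executor cannot span workers: it needs its cores and memory on one node.
        total += min(worker.cores // executor_cores, worker.memory_mb // need_mb)
    return total


# Default cluster from the overview table: two workers with 1 core and 512m each.
DEFAULT_CLUSTER = [Worker(cores=1, memory_mb=512), Worker(cores=1, memory_mb=512)]

if __name__ == "__main__":
    print(executors_that_fit(DEFAULT_CLUSTER, 1, "512m"))  # → 2
    print(executors_that_fit(DEFAULT_CLUSTER, 1, "1g"))    # → 0
```

In the second case no worker can host the executor, so in practice the application would wait for resources rather than fail immediately.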

Prerequisites

- Install Docker and Docker Compose; check the supported infrastructure versions in the Tech Stack section.

Build from Docker Hub

- Download the source code or clone the repository;

- Edit the Docker Compose file with your preferred tech stack versions; check the supported application versions in the Tech Stack section;

- Build the cluster;

```bash
docker-compose up
```

- Run Apache Spark code using the provided Jupyter notebooks with Scala, PySpark and SparkR examples;

- Stop the cluster by pressing `ctrl+c`.

Build from your local machine

Note: local builds are currently supported only on Linux distributions.

- Download the source code or clone the repository;

- Move to the build directory;

```bash
cd build
```

- Edit the build.yml file with your preferred tech stack versions;

- Match those versions in the Docker Compose file;

- Build the images;

```bash
chmod +x build.sh ; ./build.sh
```

- Build the cluster;

```bash
docker-compose up
```

- Run Apache Spark code using the provided Jupyter notebooks with Scala, PySpark and SparkR examples;

- Stop the cluster by pressing `ctrl+c`.
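Matching the versions from build.yml against the Docker Compose file is an easy step to get wrong. The following is a minimal Python sketch of a consistency check, assuming the compose file references images through unquoted `image:` lines whose tags follow the patterns in the Tech Stack table (`<spark-version>-hadoop-2.7` and `<jupyterlab-version>-spark-<spark-version>`). The function names and the image repositories in the sample are hypothetical, and it uses a regular expression instead of a YAML parser so it needs only the standard library.

```python
import re

# Tag patterns taken from the Tech Stack table:
#   Spark images:     <spark-version>-hadoop-2.7
#   JupyterLab image: <jupyterlab-version>-spark-<spark-version>
SPARK_TAG = re.compile(r"^\s*image:\s*\S+:(\d+\.\d+\.\d+)-hadoop-2\.7\s*$", re.MULTILINE)
JUPYTER_TAG = re.compile(r"^\s*image:\s*\S+:\d+\.\d+\.\d+-spark-(\d+\.\d+\.\d+)\s*$", re.MULTILINE)


def spark_versions_in_compose(compose_text):
    """Collect every Spark version referenced by an unquoted `image:` tag."""
    return set(SPARK_TAG.findall(compose_text)) | set(JUPYTER_TAG.findall(compose_text))


def check_compose(compose_text, expected_spark):
    """Raise ValueError unless all matched image tags use `expected_spark`."""
    found = spark_versions_in_compose(compose_text)
    if found != {expected_spark}:
        raise ValueError(f"expected Spark {expected_spark}, found {sorted(found)}")


if __name__ == "__main__":
    # Hypothetical compose excerpt; the image repositories are placeholders.
    sample = """
services:
  jupyterlab:
    image: example/jupyterlab:2.1.4-spark-3.0.0
  spark-master:
    image: example/spark-master:3.0.0-hadoop-2.7
"""
    check_compose(sample, "3.0.0")  # passes silently when versions agree
    print(sorted(spark_versions_in_compose(sample)))
```

Running a check like this before `docker-compose up` catches a worker or notebook image left on a different Spark version than the master.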

Tech Stack

- Infrastructure

| Component | Version |
|---|---|
| Docker Engine | 1.13.0+ |
| Docker Compose | 1.10.0+ |
| Python | 3.7.3 |
| Scala | 2.12.11 |
| R | 3.5.2 |

- Jupyter Kernels

| Component | Version | Provider |
|---|---|---|
| Python | 2.1.4 | Jupyter |
| Scala | 0.10.0 | Almond |
| R | 1.1.1 | IRkernel |

- Applications

| Component | Version | Docker Tag |
|---|---|---|
| Apache Spark | 2.4.0, 2.4.4, 3.0.0 | `<spark-version>-hadoop-2.7` |
| JupyterLab | 2.1.4 | `<jupyterlab-version>-spark-<spark-version>` |

Apache Spark R API (SparkR) is only supported on Spark version 2.4.4. The full list can be found here.
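The Docker tag conventions in the Applications table can be expressed as two small functions. This Python sketch is an illustration under assumptions: the supported Spark versions are copied from the table, the Spark tag is assumed to apply to the Spark master and worker images, the helper names are hypothetical, and it only builds tag strings; it does not query Docker Hub or know the image repository names.

```python
SUPPORTED_SPARK = ("2.4.0", "2.4.4", "3.0.0")
SPARKR_SPARK = "2.4.4"  # SparkR is only supported on this Spark version


def _require_supported(spark_version):
    """Reject Spark versions that are not listed in the Applications table."""
    if spark_version not in SUPPORTED_SPARK:
        raise ValueError(f"unsupported Spark version: {spark_version}")


def spark_image_tag(spark_version):
    """Tag for the Spark images: <spark-version>-hadoop-2.7."""
    _require_supported(spark_version)
    return f"{spark_version}-hadoop-2.7"


def jupyterlab_image_tag(jupyterlab_version, spark_version):
    """Tag for the JupyterLab image: <jupyterlab-version>-spark-<spark-version>."""
    _require_supported(spark_version)
    return f"{jupyterlab_version}-spark-{spark_version}"


def sparkr_available(spark_version):
    """True only for the Spark version on which the SparkR notebooks are supported."""
    return spark_version == SPARKR_SPARK


if __name__ == "__main__":
    print(spark_image_tag("3.0.0"))                # → 3.0.0-hadoop-2.7
    print(jupyterlab_image_tag("2.1.4", "2.4.4"))  # → 2.1.4-spark-2.4.4
    print(sparkr_available("3.0.0"))               # → False
```

Choosing Spark 2.4.4 is the only way, per the note above, to keep the SparkR examples usable.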


Contributing

We'd love some help. To contribute, please read this file.

Starring us on GitHub is also an awesome way to show your support ⭐

Contributors

- André Perez - dekoperez - andre.marcos.perez@gmail.com

- NMDP/GRIMM - https://github.com/nmdp-bioinformatics/grimm