This is a PyTorch implementation of a speaker embedding model (d-vector) trained with the GE2E loss. The original paper on the GE2E loss can be found here: Generalized End-to-End Loss for Speaker Verification
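For reference, the GE2E softmax loss described in the paper can be sketched in NumPy. This is a minimal sketch, not this repository's code (the repository credits cvqluu/GE2E-Loss for its loss module). It assumes a batch of N speakers by M utterances (M ≥ 2) of D-dimensional embeddings and keeps the scale `w` and bias `b` fixed, whereas the paper learns them (initialized to 10 and -5, with `w` kept positive). `ge2e_softmax_loss` is a hypothetical name.

```python
import numpy as np


def _unit(x: np.ndarray) -> np.ndarray:
    """L2-normalize along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def ge2e_softmax_loss(emb: np.ndarray, w: float = 10.0, b: float = -5.0) -> float:
    """GE2E softmax loss for a batch shaped (N speakers, M utterances, D).

    Sketch only: w and b are fixed here, learnable in the paper. Requires M >= 2.
    """
    n_spk, n_utt, _ = emb.shape
    e = _unit(emb)

    # Centroid of each speaker over all of its utterances: (N, D)
    centroids = _unit(e.mean(axis=1))
    # Leave-one-out centroid of each utterance's own speaker: (N, M, D)
    loo = _unit((e.sum(axis=1, keepdims=True) - e) / (n_utt - 1))

    # Cosine similarity of every utterance to every speaker centroid: (N, M, N)
    cos = np.einsum("jmd,kd->jmk", e, centroids)
    # The own-speaker entry uses the leave-one-out centroid instead
    spk = np.arange(n_spk)[:, None]  # (N, 1)
    utt = np.arange(n_utt)[None, :]  # (1, M)
    cos[spk, utt, spk] = np.sum(e * loo, axis=-1)

    sim = w * cos + b          # S_{ji,k}
    own = sim[spk, utt, spk]   # S_{ji,j}, shape (N, M)
    # Numerically stable log-sum-exp over speakers k
    peak = sim.max(axis=-1, keepdims=True)
    lse = peak[..., 0] + np.log(np.exp(sim - peak).sum(axis=-1))
    # L(e_ji) = -S_{ji,j} + log sum_k exp(S_{ji,k}), summed over all j, i
    return float(np.sum(lse - own))
```

Using the leave-one-out centroid for the utterance's own speaker prevents an utterance from being compared against a centroid it contributed to. Averaging instead of summing over utterances only rescales the loss.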

You can download the pretrained models from the Wiki: Pretrained Models.

Since the models are compiled with TorchScript, you can simply load and use a pretrained d-vector anywhere.

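```python
import torch

from modules import AudioToolkit

audio_path = "example.wav"      # placeholder: path to the utterance to embed
checkpoint_path = "dvector.pt"  # placeholder: path to a pretrained TorchScript d-vector

wav = AudioToolkit.preprocess_wav(audio_path)
mel = AudioToolkit.wav_to_logmel(wav)
mel = torch.FloatTensor(mel)

with torch.no_grad():
    dvector = torch.jit.load(checkpoint_path).eval()
    emb = dvector.embed_utterance(mel)
```

To use the scripts provided here, you have to organize your raw data as follows: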

- all utterances from a speaker should be placed under one directory (the speaker directory)

- all speaker directories should be placed under one directory (the root directory)

- a speaker directory may contain subdirectories, and utterances may be placed inside them
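As an illustration of the layout rules above, the following sketch groups utterance files by speaker directory across one or more root directories. It is not the logic of `preprocess.py`: `collect_utterances` and `AUDIO_EXTS` are hypothetical names, and the set of audio extensions is an assumption.

```python
from collections import defaultdict
from pathlib import Path

# Assumption: which file extensions count as utterances; adjust for your data
AUDIO_EXTS = {".wav", ".flac", ".mp3"}


def collect_utterances(root_dirs):
    """Map each speaker directory (a Path) to its sorted utterance paths."""
    speakers = defaultdict(list)
    for root in map(Path, root_dirs):
        # Every direct subdirectory of a root directory is one speaker
        for spk_dir in sorted(p for p in root.iterdir() if p.is_dir()):
            # rglob also descends into nested subdirectories of the speaker
            for path in sorted(spk_dir.rglob("*")):
                if path.is_file() and path.suffix.lower() in AUDIO_EXTS:
                    speakers[spk_dir].append(path)
    return dict(speakers)
```

Keying by the full speaker directory path keeps two speakers that happen to share a directory name under different roots from being merged.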

You can also extract utterances from multiple root directories at once, e.g.

```bash
python preprocess.py VoxCeleb1/dev LibriSpeech/train-clean-360 -o preprocessed
```

To train, you have to specify where to store checkpoints and logs, e.g.

```bash
python train.py preprocessed <model_dir>
```

During training, logs will be put under `<model_dir>/logs` and checkpoints will be placed under `<model_dir>/checkpoints`.

For more details, check the usage with `python train.py -h`.

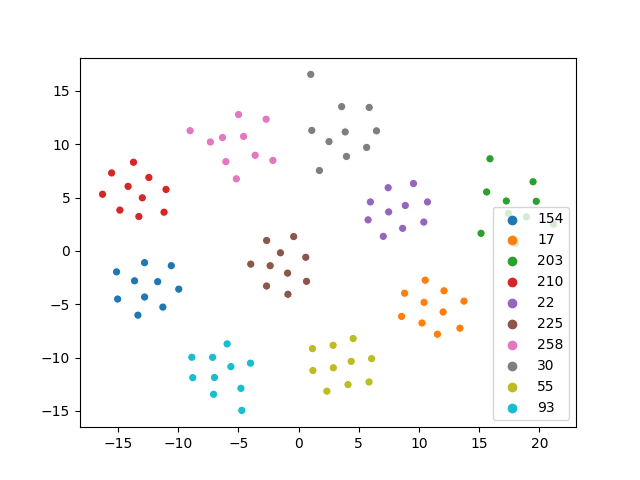

You can visualize speaker embeddings using a trained d-vector. Note that you have to structure speakers' directories in the same way as for preprocessing. e.g.

python visualize.py LibriSpeech/dev-clean -c dvector.pt -o tsne.jpgThe following plot is the dimension reduction result (using t-SNE) of some utterances from LibriSpeech.

Credits:

- GE2E-Loss module: cvqluu/GE2E-Loss