import torch

from audio_encoder import AudioEncoderVita

# Example usage:
if __name__ == "__main__":
    # Assume 2 seconds of audio with 16kHz sample rate
    audio_input = torch.randn(8, 32000)  # batch_size = 8, num_samples = 32000

    model = AudioEncoderVita()
    output = model(audio_input)

    print(output.shape)  # Should output (batch_size, num_tokens, output_dim)

2024.08.12 🌟 We are very proud to launch VITA, the first-ever open-source interactive omni multimodal LLM! All training code, deployment code, and model weights will be released soon. The open-source release process takes some time, so stay tuned!

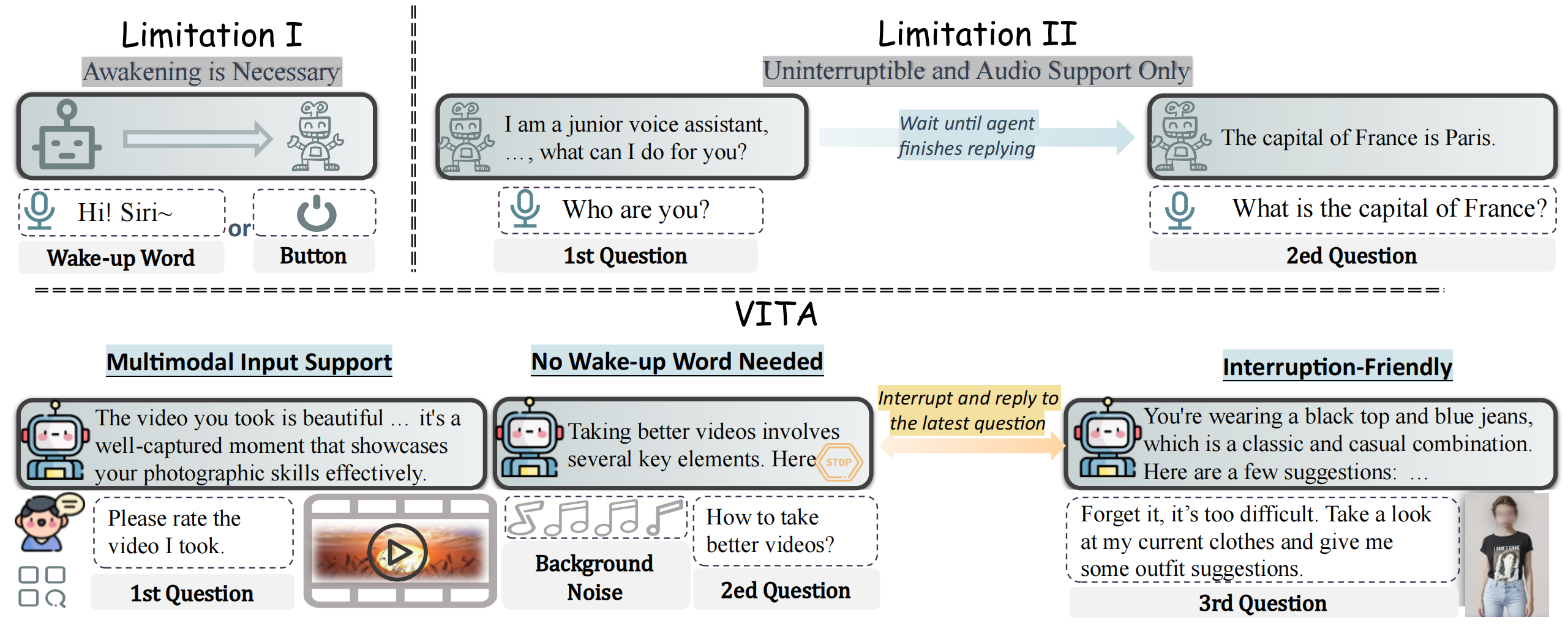

The remarkable multimodal capabilities and interactive experience of GPT-4o underscore their necessity in practical applications, yet open-source models rarely excel in both areas. In this paper, we introduce VITA, the first-ever open-source Multimodal Large Language Model (MLLM) adept at simultaneously processing and analyzing Video, Image, Text, and Audio modalities while also offering an advanced multimodal interactive experience. Our work is distinguished from existing open-source MLLMs by three key features:

- Omni Multimodal Understanding: VITA demonstrates robust foundational capabilities of multilingual, vision, and audio understanding, as evidenced by its strong performance across a range of both unimodal and multimodal benchmarks.

- Non-awakening Interaction: VITA can be activated and respond to user audio questions in the environment without the need for a wake-up word or button.

- Audio Interrupt Interaction: VITA can simultaneously track and filter external queries in real time. This allows users to interrupt the model's generation at any time with new questions, and VITA will respond to the new query accordingly.

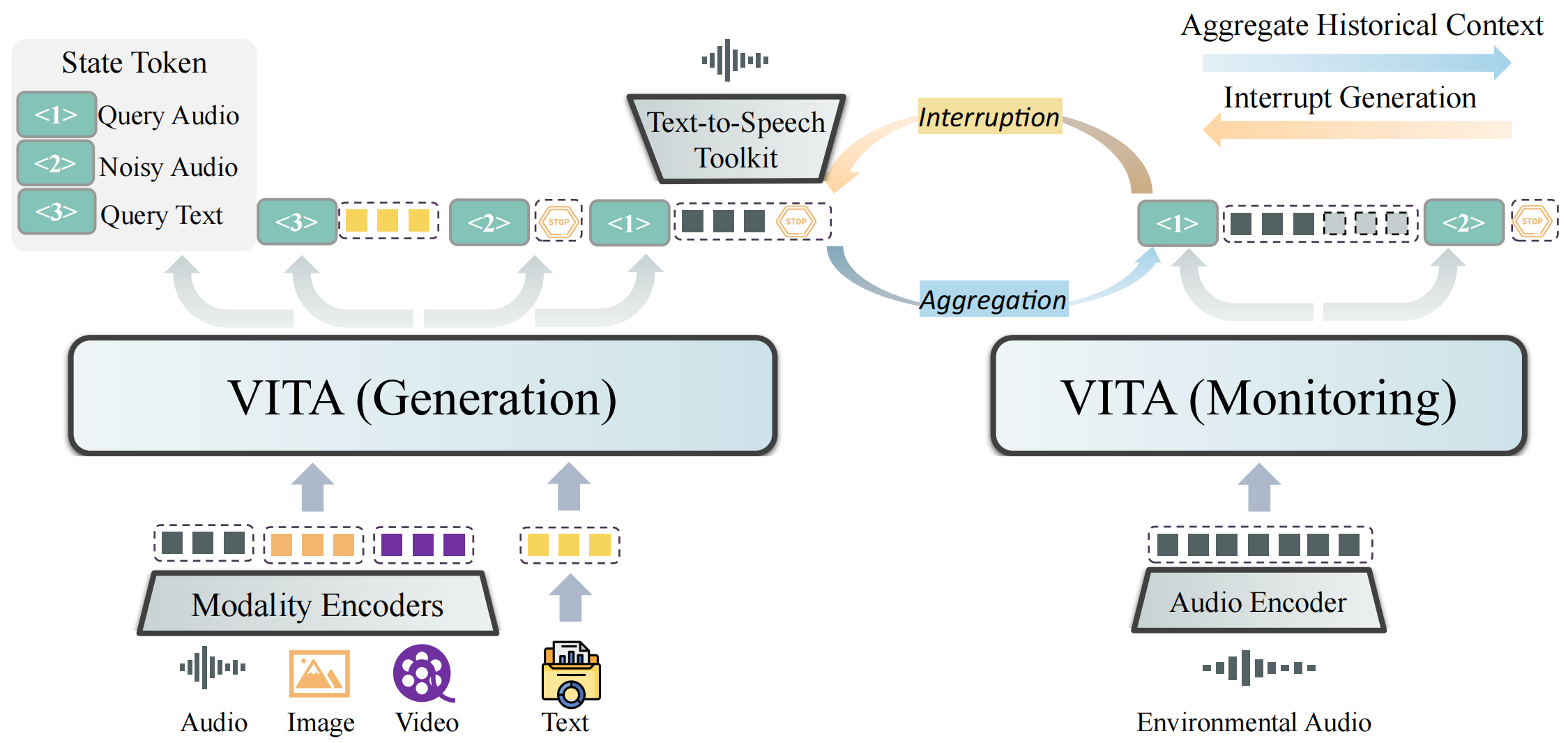

VITA can process inputs in the form of pure text/audio, as well as video/image combined with text/audio. In addition, two key techniques are adopted to advance the multimodal interactive experience:

- State token. We set different state tokens for different query inputs. <1> corresponds to effective query audio, such as “What is the biggest animal in the world?”, for which we expect a response from the model. <2> corresponds to noisy audio, such as someone in the environment calling the user to come and eat, for which we expect the model not to reply. <3> corresponds to query text, i.e., a question given by the user in text form. During the training phase, we teach the model to automatically distinguish between these input queries. During the deployment phase, <2> lets us implement non-awakening interaction.

- Duplex scheme. We further introduce a duplex scheme for audio interrupt interaction. Two models run at the same time, with the generation model responsible for handling user queries. While the generation model is working, the other model monitors the environment. If the user interrupts with another effective audio query, the monitoring model aggregates the historical context to respond to the latest query, while the generation model is paused and switches to monitoring, i.e., the two models swap identities.
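The two techniques above can be illustrated with a small toy sketch. It is not VITA's implementation: the class `DuplexSession`, the function `should_respond`, the constant names, and the slot labels `model_a`/`model_b` are all hypothetical, and the state token is assumed to be already predicted by the model. The sketch only shows the control flow described above: inputs tagged <2> are ignored, and an effective query arriving during generation causes the two model slots to swap roles.

```python
from dataclasses import dataclass, field

# State tokens as described in the text; the constant names are illustrative.
EFFECTIVE_AUDIO = "<1>"  # query audio that expects a response
NOISY_AUDIO = "<2>"      # background audio that should be ignored
TEXT_QUERY = "<3>"       # query given in text form


def should_respond(state_token: str) -> bool:
    """Return True when the predicted state token marks a real query."""
    return state_token in (EFFECTIVE_AUDIO, TEXT_QUERY)


@dataclass
class DuplexSession:
    """Toy model of the duplex scheme: two model slots that swap roles."""

    generator: str = "model_a"  # hypothetical label for the generating model
    monitor: str = "model_b"    # hypothetical label for the monitoring model
    history: list = field(default_factory=list)
    generating: bool = False

    def on_input(self, state_token: str, query: str) -> str:
        if not should_respond(state_token):
            return "ignored"  # noisy audio: no reply, no role change
        interrupted = self.generating
        if interrupted:
            # The monitoring model takes over the new query;
            # the paused generator becomes the monitor.
            self.generator, self.monitor = self.monitor, self.generator
        self.history.append(query)  # context carried over to the new answer
        self.generating = True
        return "interrupted" if interrupted else "started"

    def on_generation_done(self) -> None:
        self.generating = False


session = DuplexSession()
events = [
    session.on_input(EFFECTIVE_AUDIO, "What is the biggest animal in the world?"),
    session.on_input(NOISY_AUDIO, "Dinner is ready!"),
    session.on_input(EFFECTIVE_AUDIO, "And the smallest?"),
]
print(events, session.generator)
```

In this sketch the second input is dropped because it carries <2>, while the third arrives during generation and triggers the role swap, so the slot that was monitoring ends up as the generator.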

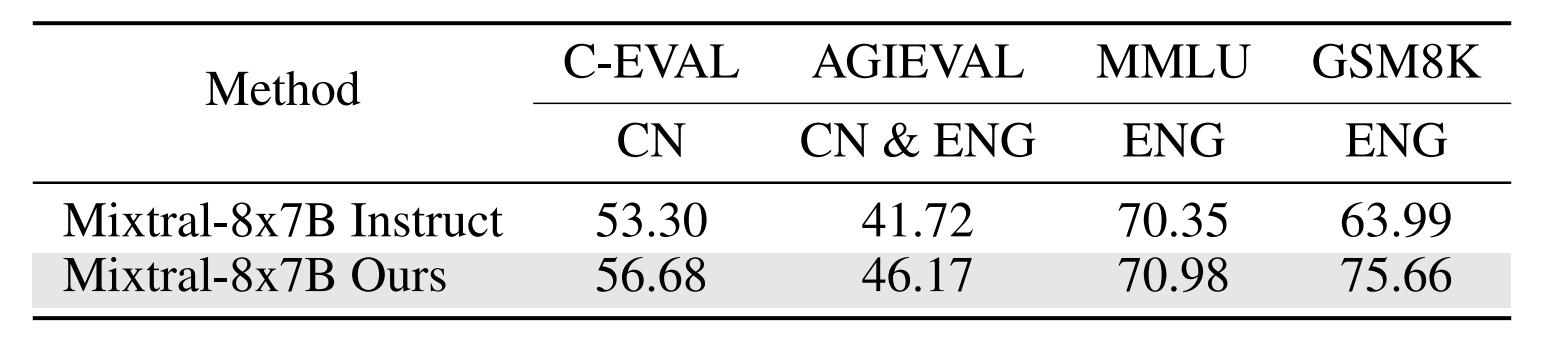

- Comparison of official Mixtral 8x7B Instruct and our trained Mixtral 8x7B

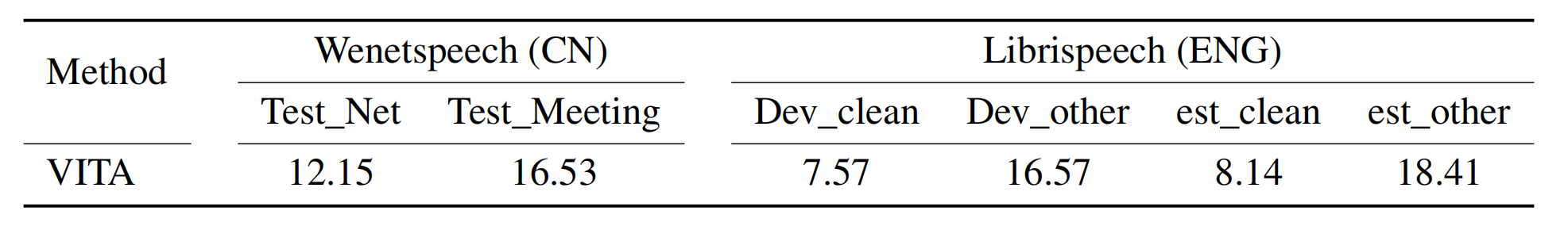

- Evaluation on ASR tasks.

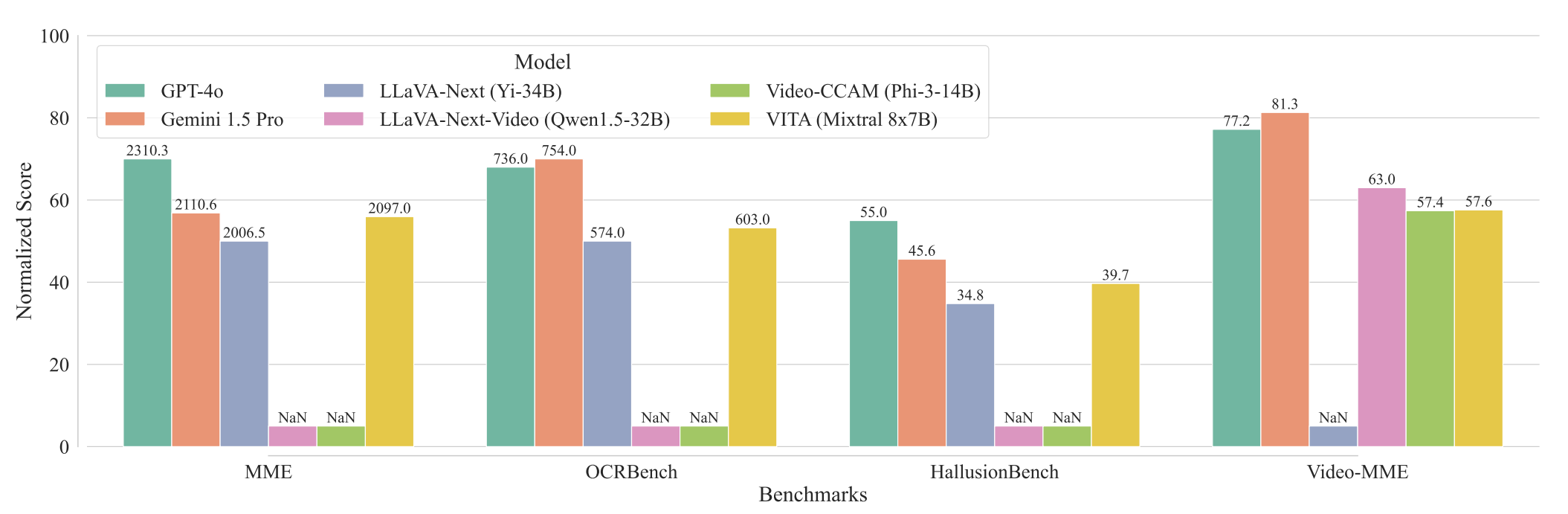

- Evaluation on image and video understanding.

If you find our work helpful for your research, please consider citing our work.

@article{fu2024vita,
  title={VITA: Towards Open-Source Interactive Omni Multimodal LLM},
  author={Fu, Chaoyou and Lin, Haojia and Long, Zuwei and Shen, Yunhang and Zhao, Meng and Zhang, Yifan and Wang, Xiong and Yin, Di and Ma, Long and Zheng, Xiawu and He, Ran and Ji, Rongrong and Wu, Yunsheng and Shan, Caifeng and Sun, Xing},
  journal={arXiv preprint arXiv:2408},
  year={2024}
}

Explore our related research: