- Clickhouse (Database)

- dbt (Transformations)

- Cube (Metrics) + Metabase (Business Intelligence)

- Configure environment

- Install dbt packages

- Configure data sources

- Build staging layer models

- Build intermediate layer models

- Build a data mart

- Set up metrics layer with Cube

- Visualize metrics

-

Install prerequisites:

-

Fork & Clone this repository and open in IDE

-

Initialize ENV variables with

.envfile.envis used to store secrets as environment variables.Copy template file .env.template to

.envfile:cp .env.template .env

Open file in editor and set your own values.

❗️ Never commit secrets to git

-

Spin up Docker containers

All the services are configured via Docker containers.

- devcontainer

- Clickhouse

- Metabase

- Cube

# build dev container devcontainer build . # open dev container devcontainer open .

-

Verify you are in a development container by running commands:

dbt --version

If any of these commands fails printing out used software version then you are probably running it on your local machine not in a dev container!

-

Install modules via packages.yml

dbt clean # clean temp files dbt deps # install dependencies (modules)

We will use dbt-labs/dbt_utils package for this project.

-

Turn on custom schema management

I use generate_schema_name.sql macro to set target schema names:

Renders a schema name given a custom schema name. In production, this macro will render out the overriden schema name for a model. Otherwise, the default schema specified in the active target is used.

Take a look at custom_schema_management.sql macro to find out more.

-

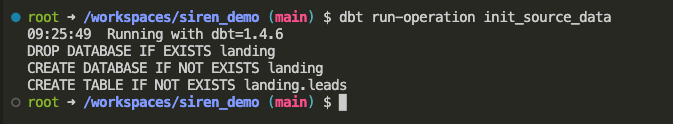

Create table from Parquet file with source data:

dbt run-operation init_source_data

-

Seed .csv files with data (CRM snapshots):

dbt seed

DWH is structured like this for simplicity:

siren_demo:

staging:

+schema: staging

+tags: ['staging']

intermediate:

+schema: intermediate

+tags: ['intermediate']

marts:

+schema: analytics

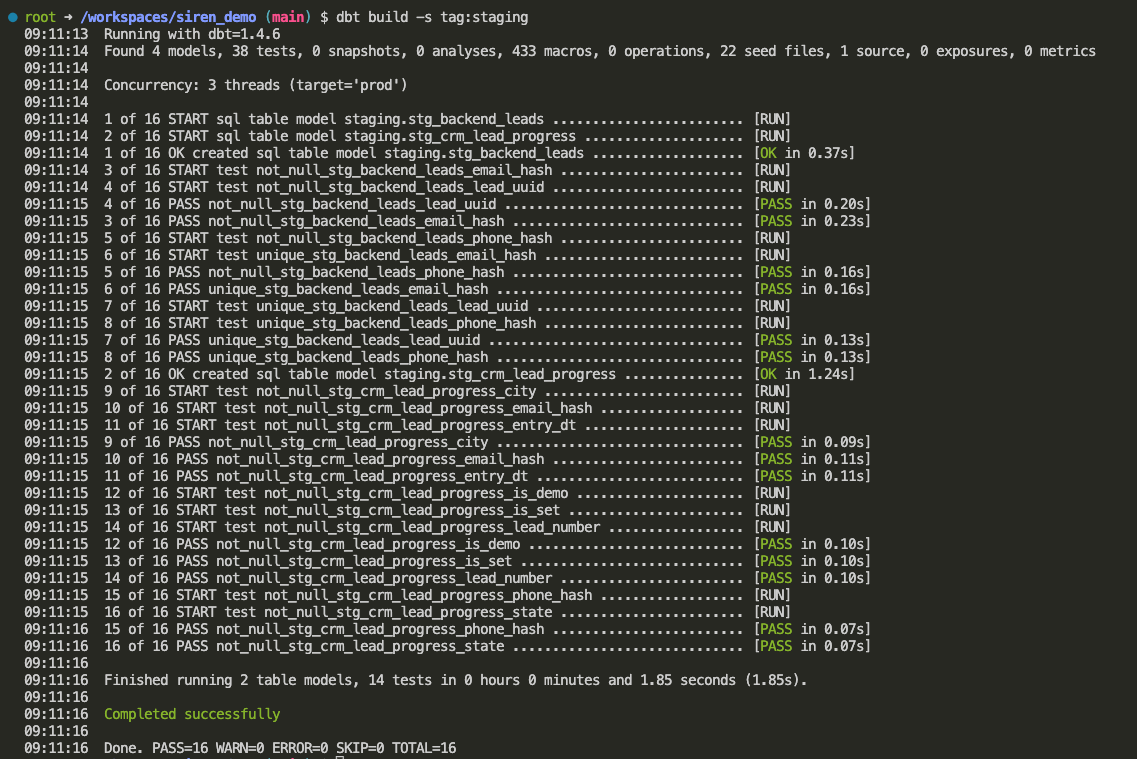

+tags: ['analytics']- Ensure data quality with expectations testing with staging.yml

- Generate surrogate keys

{{ dbt_utils.generate_surrogate_key(['phone_hash', 'email_hash']) }} as lead_hash - Union all .csv data with

dbt_utils.union_relations() - Remove header rows

ENTRYDATE not in ('-----') - Parse timestamps

parseDateTimeBestEffortOrNull(ENTRYDATE) AS entry_dt - Coalesce data

COALESCE(CITY, CityName, trim(splitByChar('|', assumeNotNull(location))[1]))

dbt build -s tag:staging- Set Clickhouse table engine to

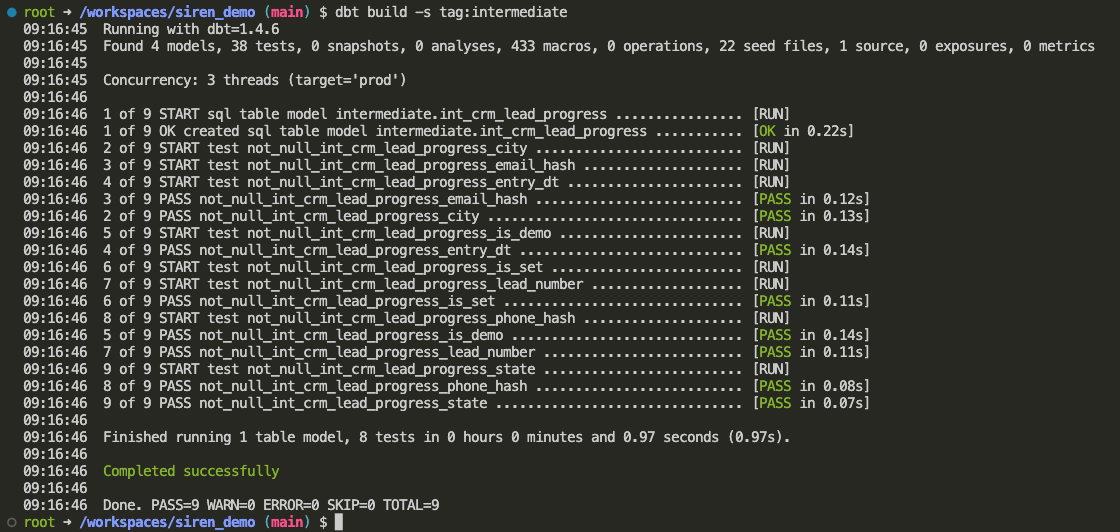

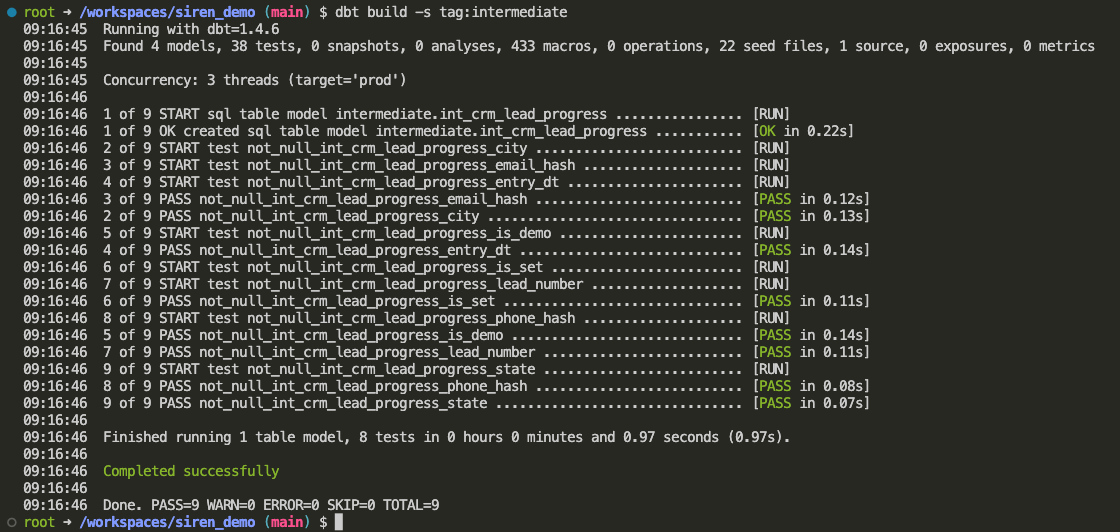

ReplacingMergeTree() - Force deduplicate rows with post-hook

post_hook=['OPTIMIZE TABLE {{ this }} FINAL DEDUPLICATE BY lead_hash, entry_dt, appt_dt'] - Ensure data quality with expectations testing with intermediate.yml

dbt build -s tag:intermediate- Join data from backend and customer CRM

- Ensure data quality with expectations testing with marts.yml

dbt build -s tag:analytics- Define dbt Metrics in f_leads_stats.yml

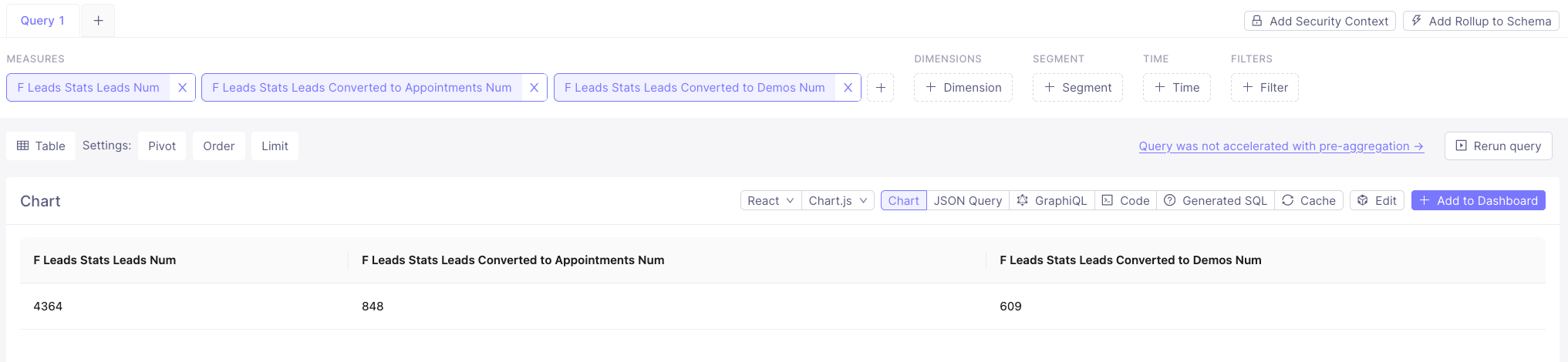

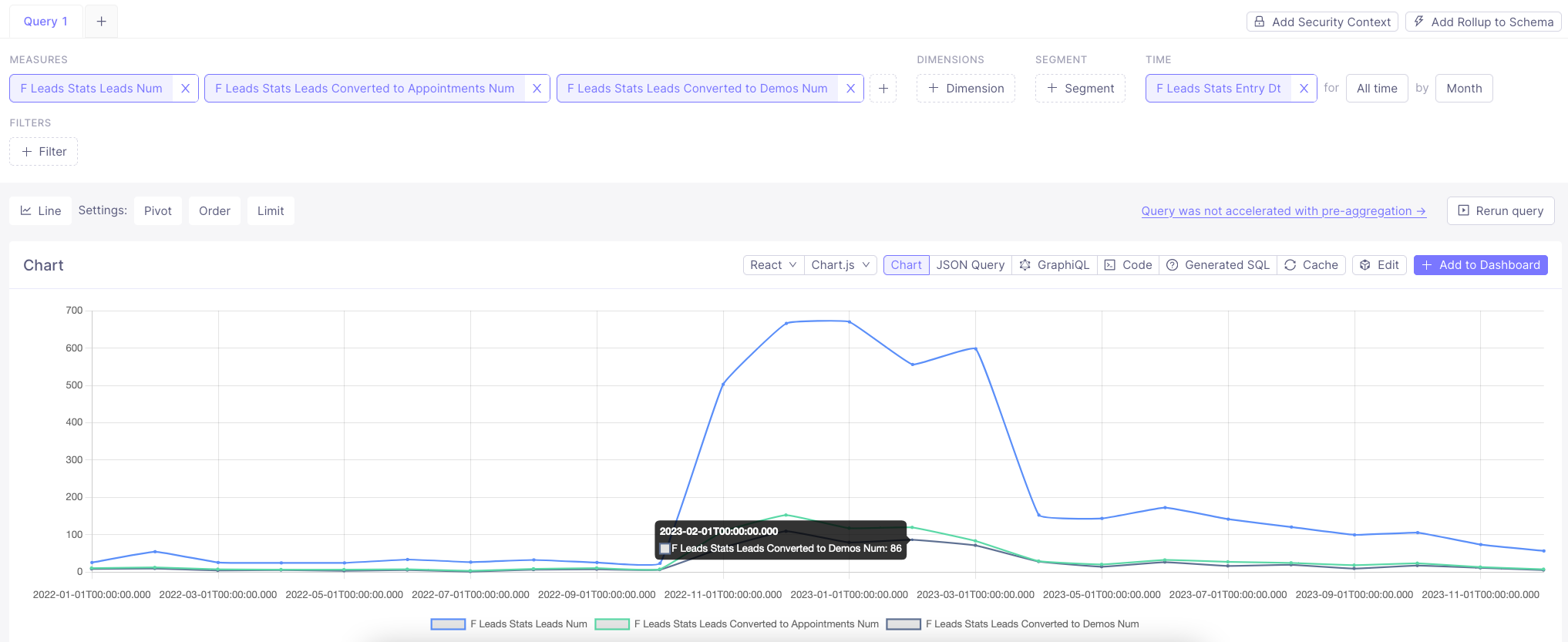

Now we are ready to visualize key metrics on a dashboard.

- Access dbt Metrics through Cube playground or Metabase