This repo collects and reproduces models for question answering and machine reading comprehension.

It is still a work in progress, and more models will be added.

WikiQA, TrecQA, InsuranceQA

cd cQA

bash download.sh

python preprocess_wiki.py

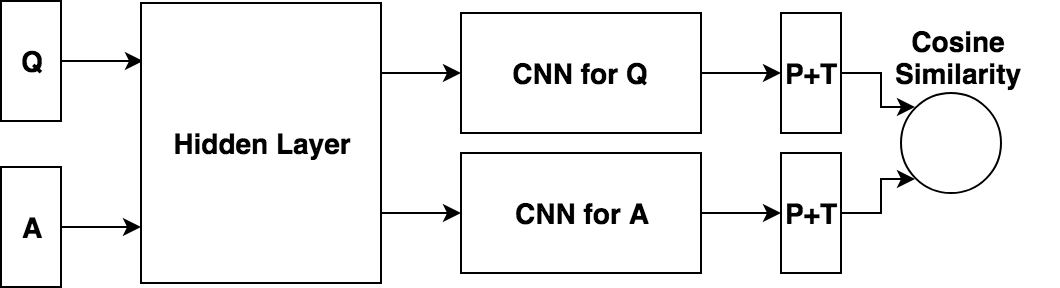

This model is a simple implementation of a Siamese NN QA model trained in a pointwise manner.

python siamese.py --train

python siamese.py --test

This model is a simple implementation of a Siamese CNN QA model trained in a pointwise manner.

python siamese.py --train

python siamese.py --test

This model is a simple implementation of a Siamese RNN/LSTM/GRU QA model trained in a pointwise manner.

python siamese.py --train

python siamese.py --test

All three models above are based on the vanilla Siamese structure. You can easily combine these basic deep learning modules to build your own models.
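As a rough illustration of the vanilla Siamese structure with pointwise training, the sketch below encodes the question and the answer with one shared encoder, scores the pair by cosine similarity, and applies a binary cross-entropy loss to each pair independently. The averaging encoder, the toy embeddings, and all function names are assumptions made for illustration; they are not taken from `siamese.py`.

```python
import math


def encode(tokens, embeddings):
    """Shared encoder (hypothetical): average the word vectors of the tokens.

    A real model would use an NN, CNN, or RNN here; the key point is that
    the same function (same weights) encodes both question and answer.
    """
    dim = len(next(iter(embeddings.values())))
    total = [0.0] * dim
    count = 0
    for tok in tokens:
        vec = embeddings.get(tok)
        if vec is None:  # skip out-of-vocabulary tokens
            continue
        total = [t + v for t, v in zip(total, vec)]
        count += 1
    return [t / count for t in total] if count else total


def cosine(u, v):
    """Cosine similarity between two vectors, 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pointwise_loss(score, label):
    """Binary cross-entropy for one (question, answer) pair.

    The cosine score in [-1, 1] is mapped to a probability in (0, 1);
    label is 1 for a correct answer and 0 for a wrong one.
    """
    p = min(max((score + 1.0) / 2.0, 1e-7), 1.0 - 1e-7)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


# Toy 2-d embeddings, invented for this example only.
toy_embeddings = {
    "capital": [1.0, 0.0],
    "france": [0.8, 0.2],
    "paris": [0.9, 0.1],
    "banana": [0.0, 1.0],
}
question = encode(["capital", "france"], toy_embeddings)
good_score = cosine(question, encode(["paris"], toy_embeddings))
bad_score = cosine(question, encode(["banana"], toy_embeddings))
```

In this toy setup the matching answer gets the higher similarity score, so its loss under label 1 is lower than that of the unrelated answer.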

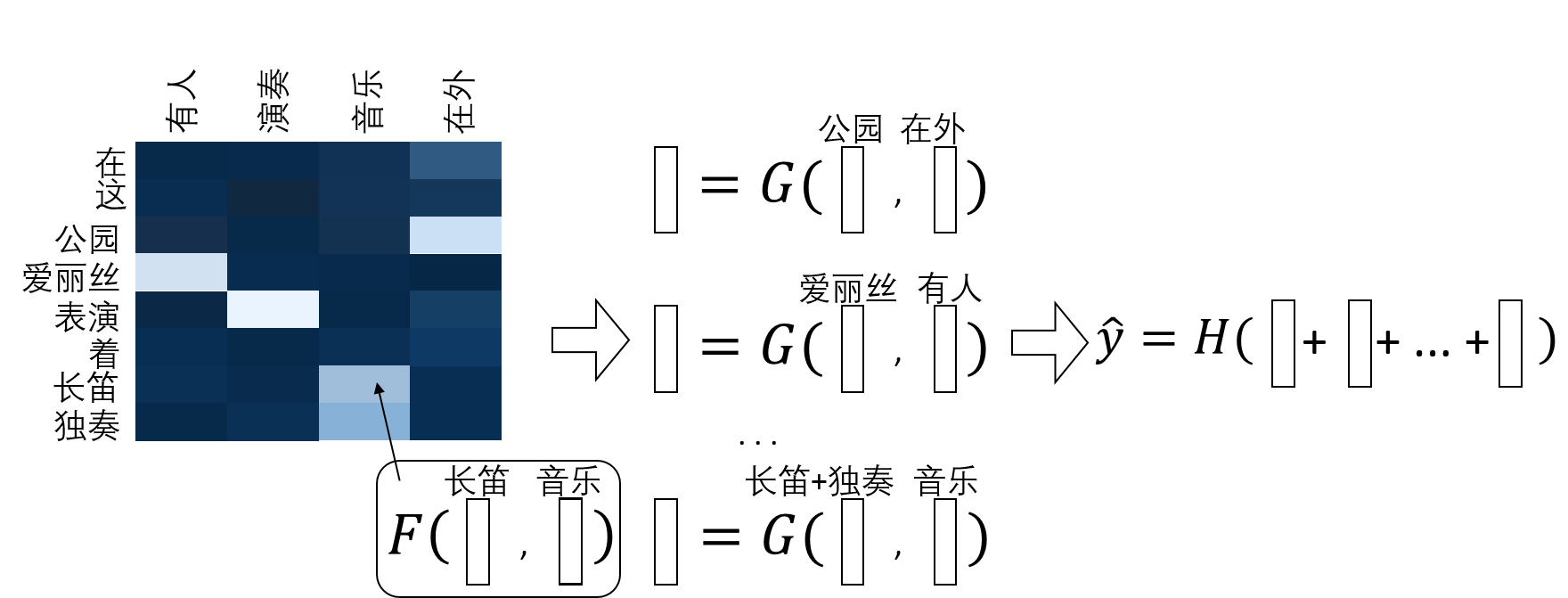

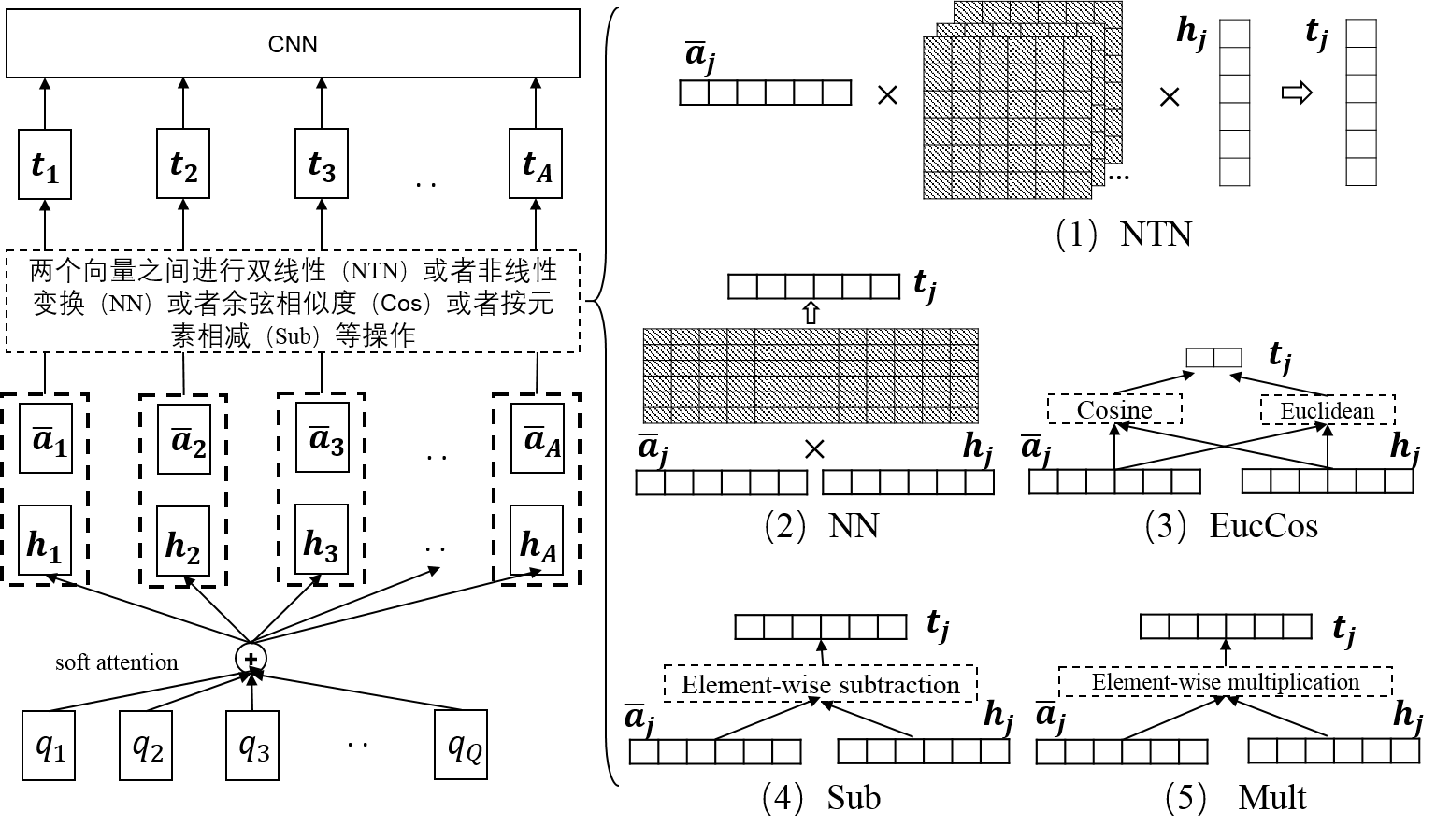

Given a question, a positive answer, and a negative answer, this pairwise model learns to rank the correct answer above the incorrect one.
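One common way to train such a pairwise ranker is a margin-based hinge loss over the two scores. The sketch below assumes that objective; the function names and the margin value of 0.1 are hypothetical and may differ from what `qacnn.py` actually uses.

```python
def pairwise_hinge_loss(pos_score, neg_score, margin=0.1):
    """Hinge loss for one (question, positive, negative) triple.

    The loss is zero once the positive answer outscores the negative
    answer by at least `margin` (an assumed hyperparameter).
    """
    return max(0.0, margin - pos_score + neg_score)


def pairwise_accuracy(score_pairs):
    """Fraction of (pos_score, neg_score) pairs that are ranked correctly."""
    if not score_pairs:
        return 0.0
    correct = sum(1 for pos, neg in score_pairs if pos > neg)
    return correct / len(score_pairs)


# Illustrative scores only; a real model would produce these.
score_pairs = [(0.9, 0.2), (0.3, 0.5), (0.6, 0.55)]
losses = [pairwise_hinge_loss(pos, neg) for pos, neg in score_pairs]
```

The third pair is ranked correctly but still incurs a small loss, because the gap between the two scores is smaller than the margin.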

python qacnn.py --train

python qacnn.py --test

Refer to:

python decomp_att.py --train

python decomp_att.py --test

Refer to:

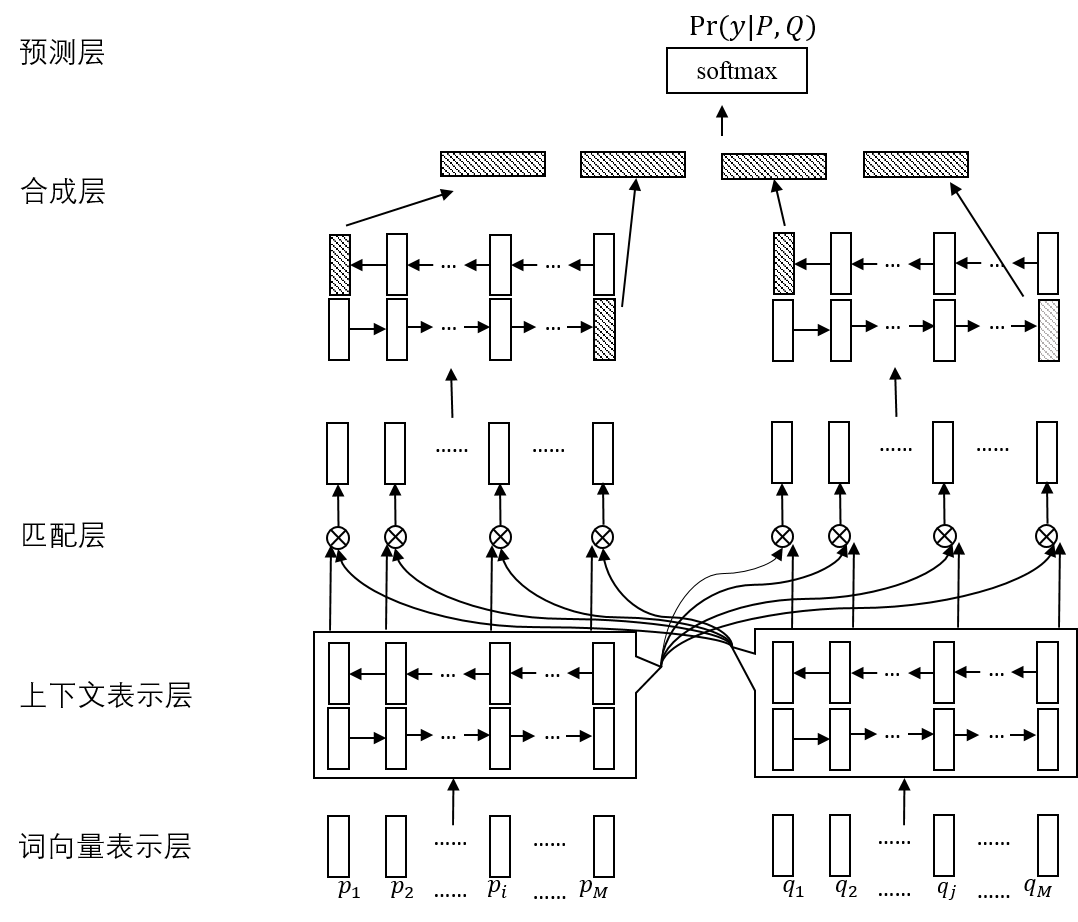

python seq_match_seq.py --train

python seq_match_seq.py --test

Refer to:

python bimpm.py --train

python bimpm.py --test

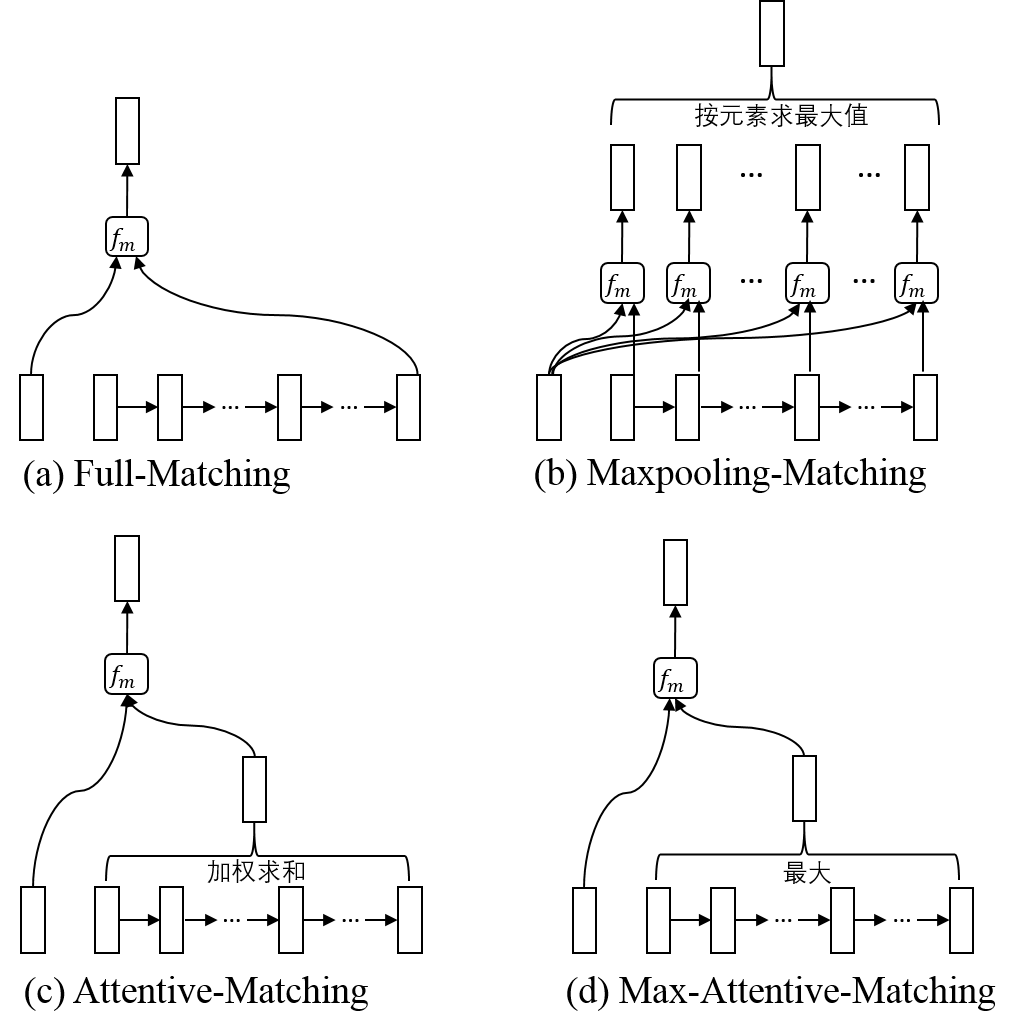

Refer to:

CNN/Daily mail, CBT, SQuAD, MS MARCO, RACE

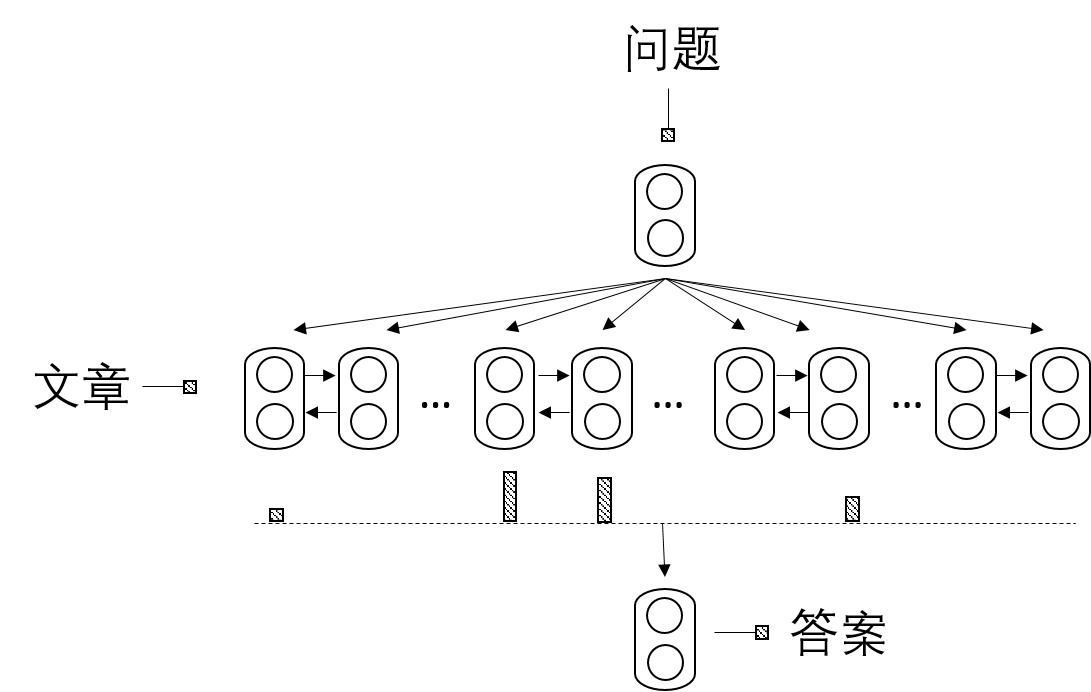

To be done

Refer to:

To be done

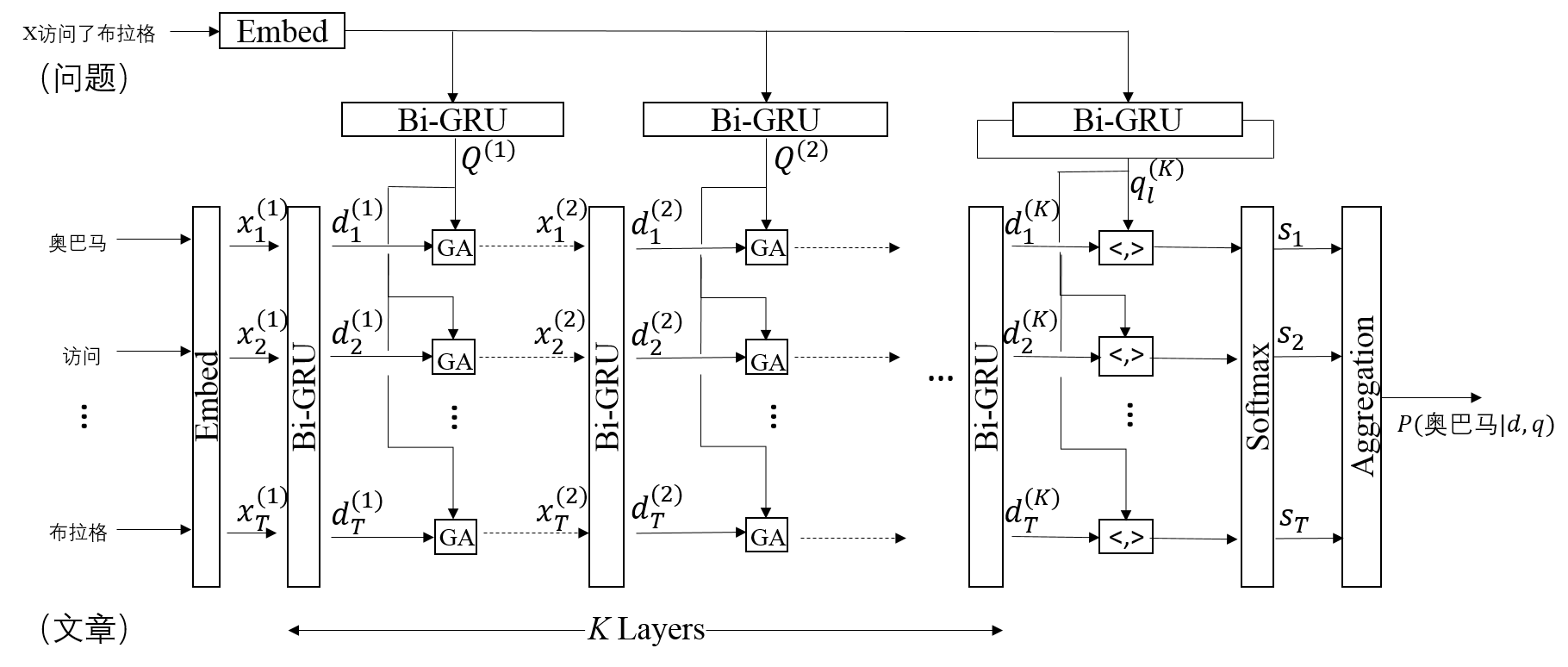

Refer to:

To be done

Refer to:

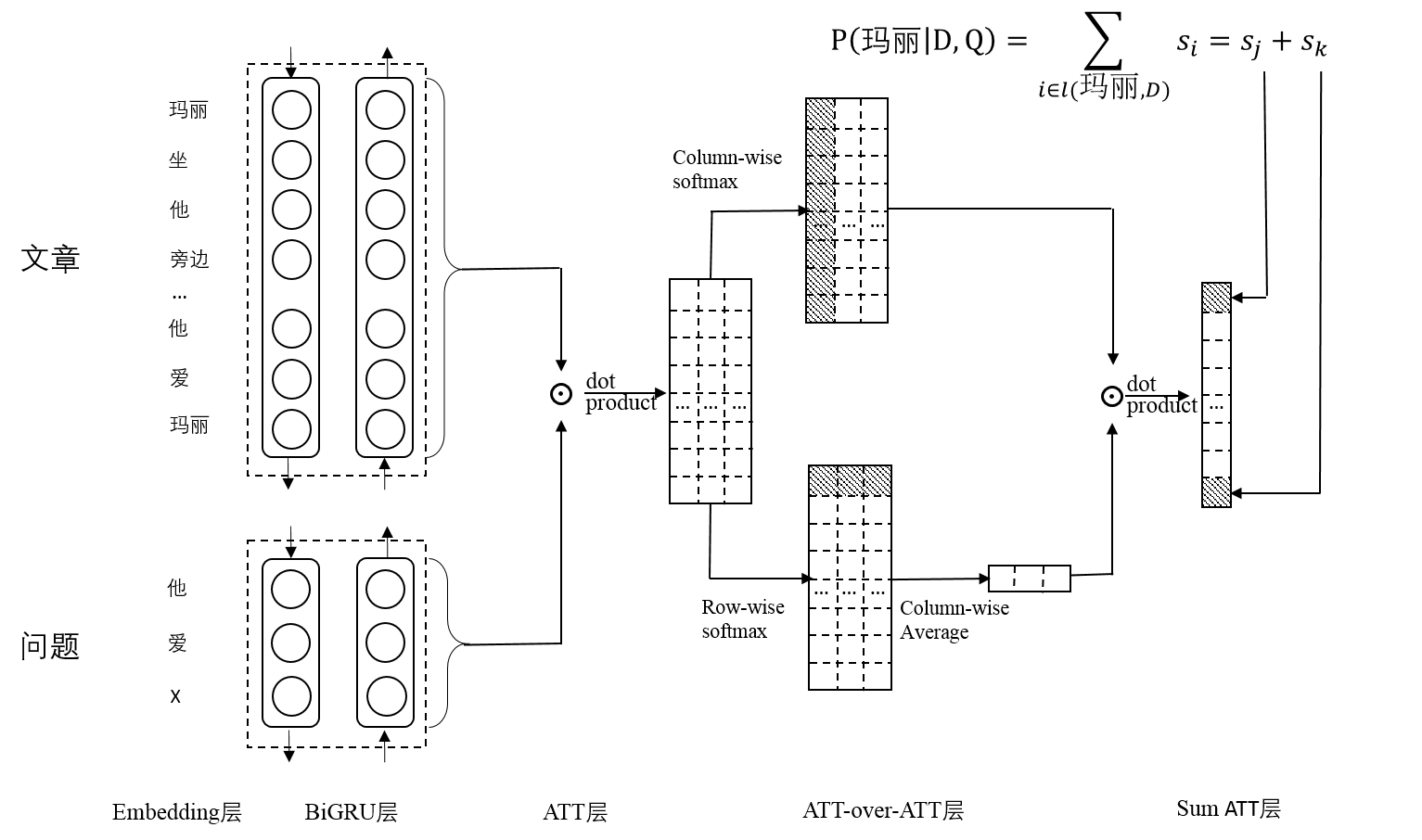

- Attention-over-Attention Neural Networks for Reading Comprehension

Results on the dev set (single model) in my experimental environment:

| training steps | batch size | hidden size | EM (%) | F1 (%) | speed | device |
|---|---|---|---|---|---|---|
| 120k | 32 | 75 | 67.7 | 77.3 | 3.40 it/s | 1 GTX 1080 Ti |
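For readers unfamiliar with the EM and F1 columns, the sketch below shows how these two metrics are usually computed for extractive QA, assuming the SQuAD-style definitions: answers are lowercased, stripped of punctuation and English articles, and then compared either exactly (EM) or by token overlap (F1). The function names are illustrative, and the evaluation script used in this repo may differ in details.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and articles, collapse whitespace."""
    text = text.lower()
    punctuation = set(string.punctuation)
    text = "".join(ch for ch in text if ch not in punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, truth):
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(truth))


def f1_score(prediction, truth):
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


em = exact_match("The Eiffel Tower.", "eiffel tower")
f1 = f1_score("in Paris France", "Paris")
```

Dataset-level scores are typically the average over all questions, taking the maximum over the available reference answers for each question.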

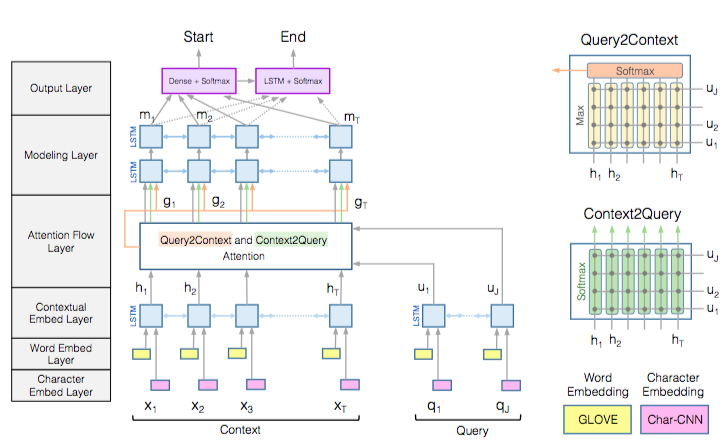

Refer to:

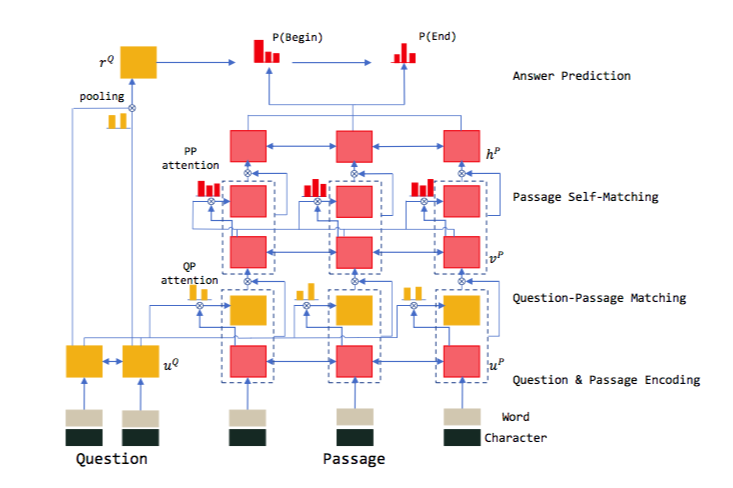

Results on the dev set (single model) in my experimental environment:

| training steps | batch size | hidden size | EM (%) | F1 (%) | speed | device | RNN type |
|---|---|---|---|---|---|---|---|
| 120k | 32 | 75 | 69.1 | 78.2 | 1.35 it/s | 1 GTX 1080 Ti | cuDNNGRU |
| 60k | 64 | 75 | 66.1 | 75.6 | 2.95 s/it | 1 GTX 1080 Ti | SRU |

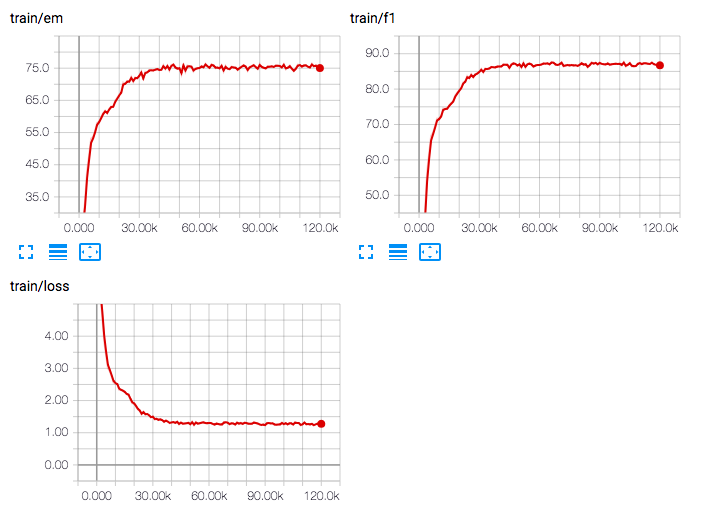

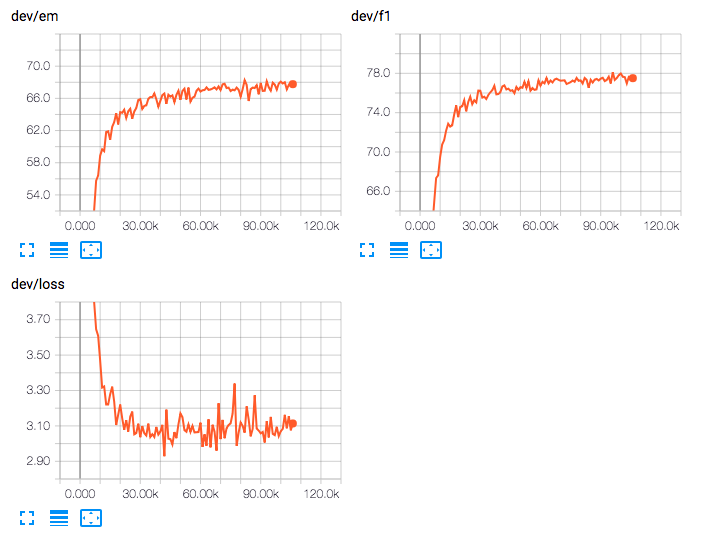

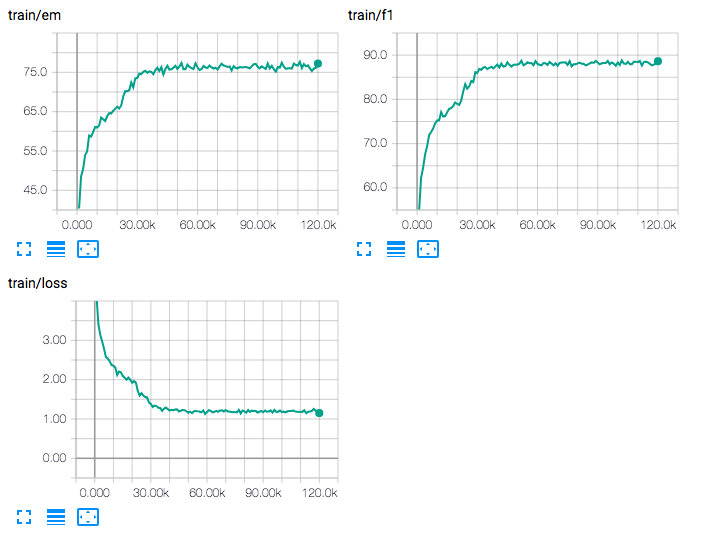

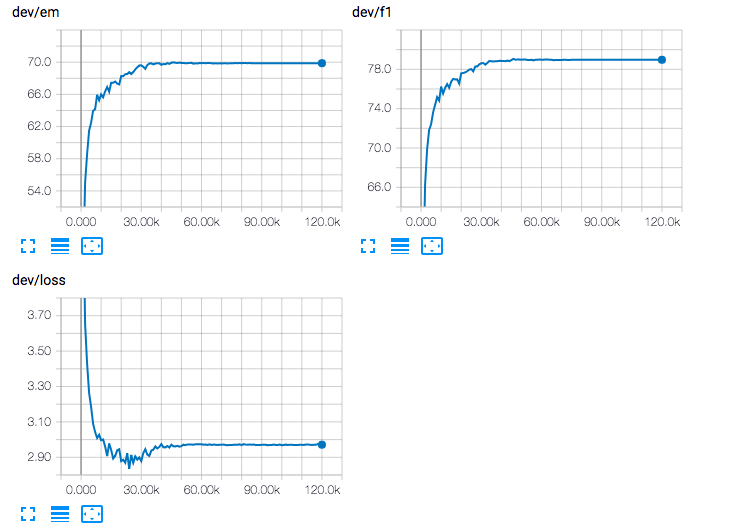

RNet trained with cuDNNGRU:

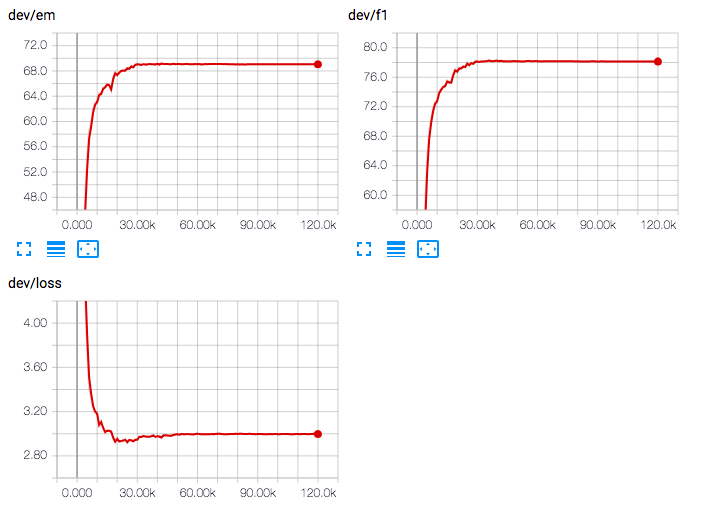

RNet trained with SRU (without optimizing operation efficiency):

Refer to:

Results on the dev set (single model) in my experimental environment:

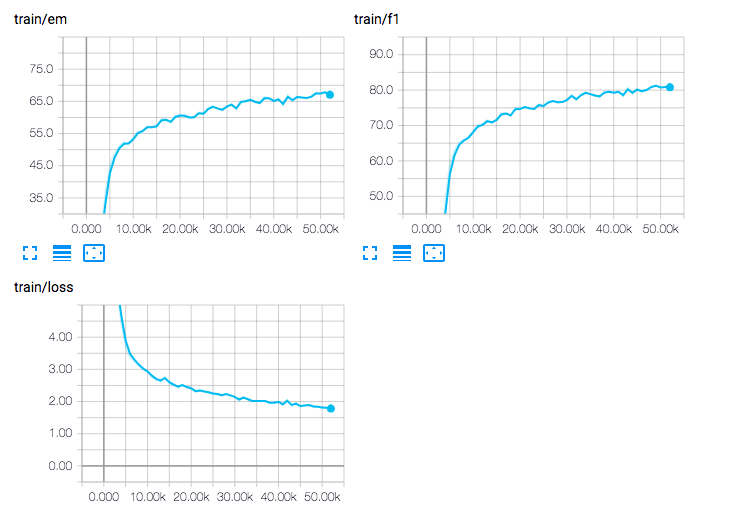

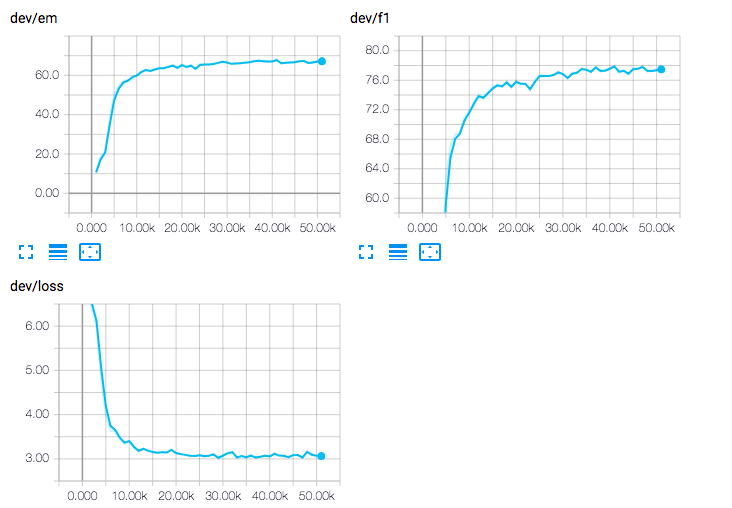

| training steps | batch size | attention heads | hidden size | EM (%) | F1 (%) | speed | device |
|---|---|---|---|---|---|---|---|
| 60k | 32 | 1 | 96 | 70.2 | 79.7 | 2.4 it/s | 1 GTX 1080 Ti |
| 120k | 32 | 1 | 75 | 70.1 | 79.4 | 2.4 it/s | 1 GTX 1080 Ti |

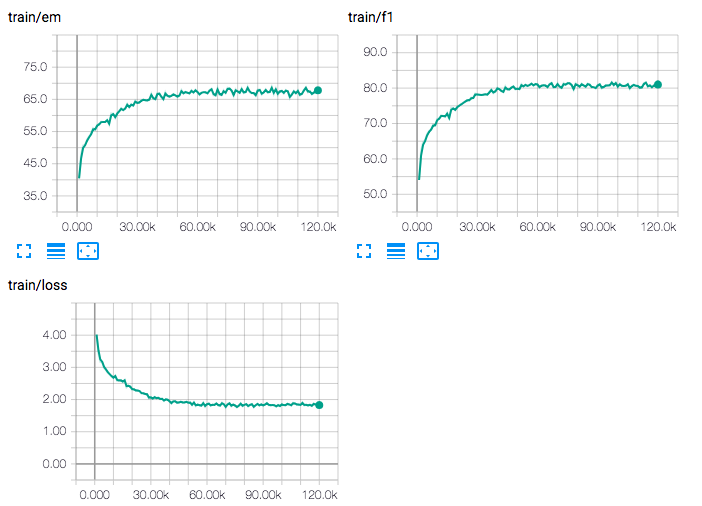

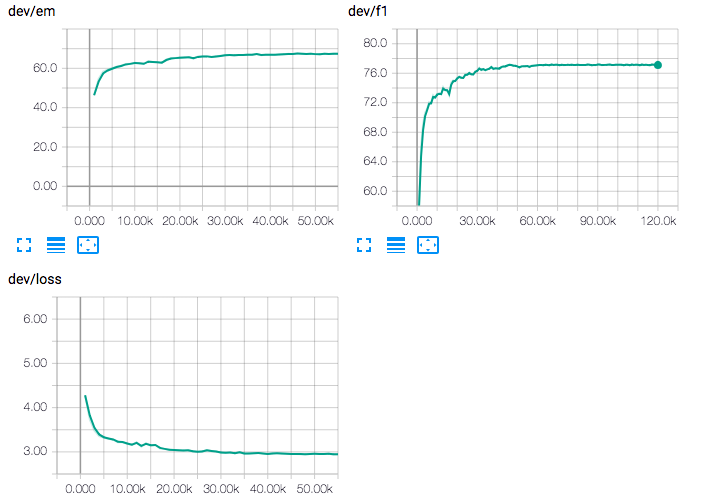

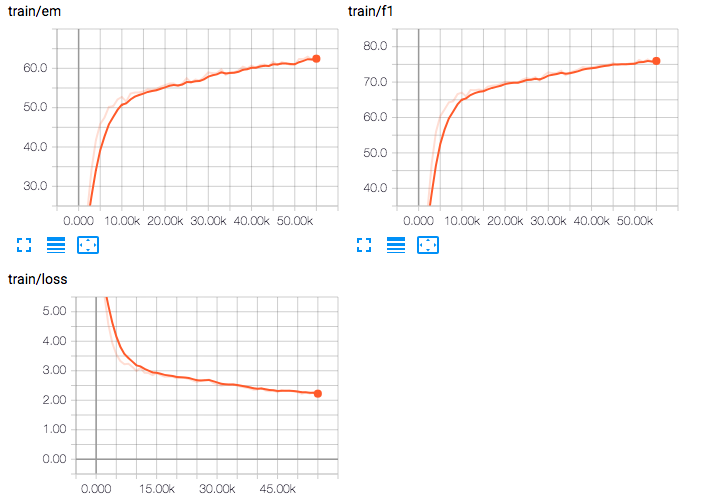

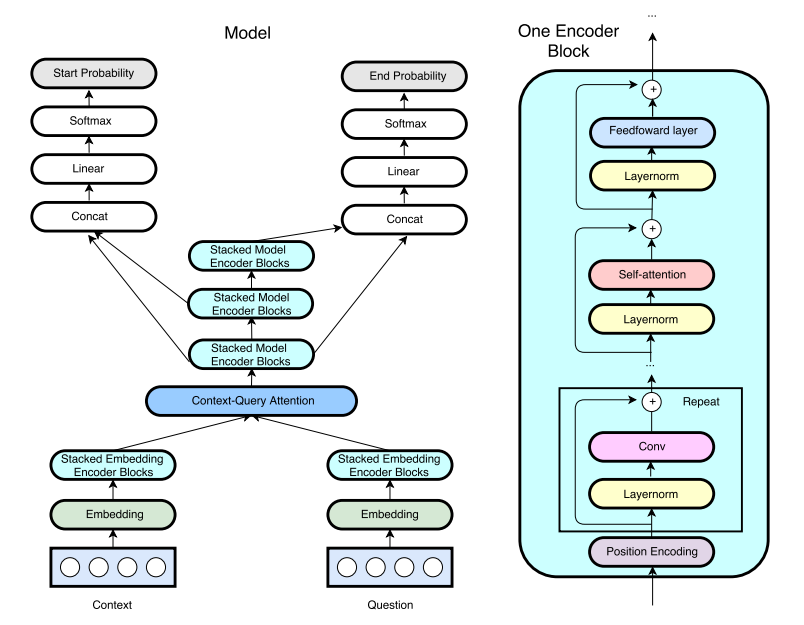

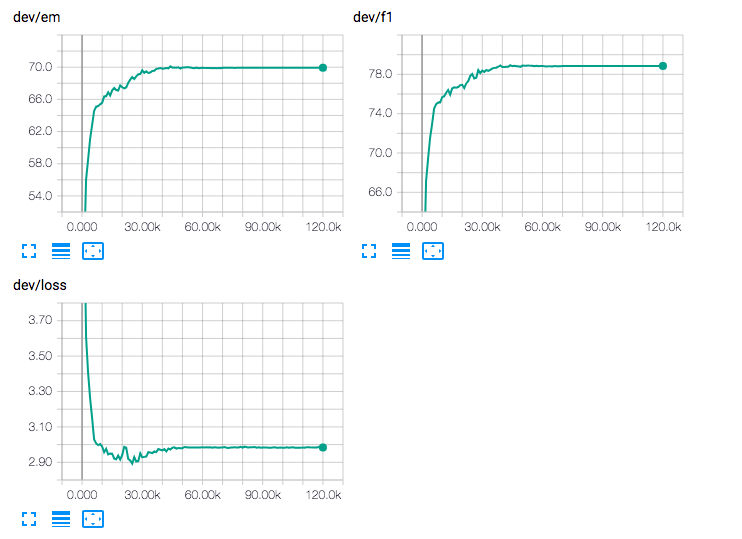

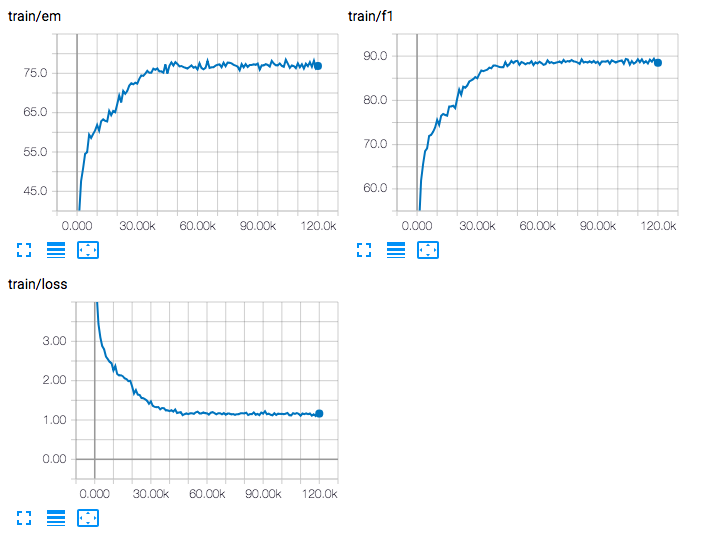

Experimental records for the first experiment:

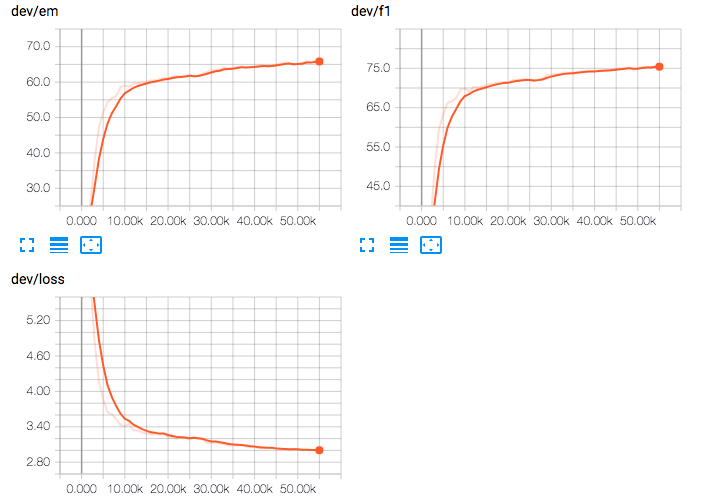

Experimental records for the second experiment (without smoothing):

Refer to:

- QANet: Combining Local Convolution with Global Self-Attention for Reading Comprehension

- github repo of NLPLearn/QANet

This repo contains my experiments and attempts at MRC problems, and I'm still working on it.

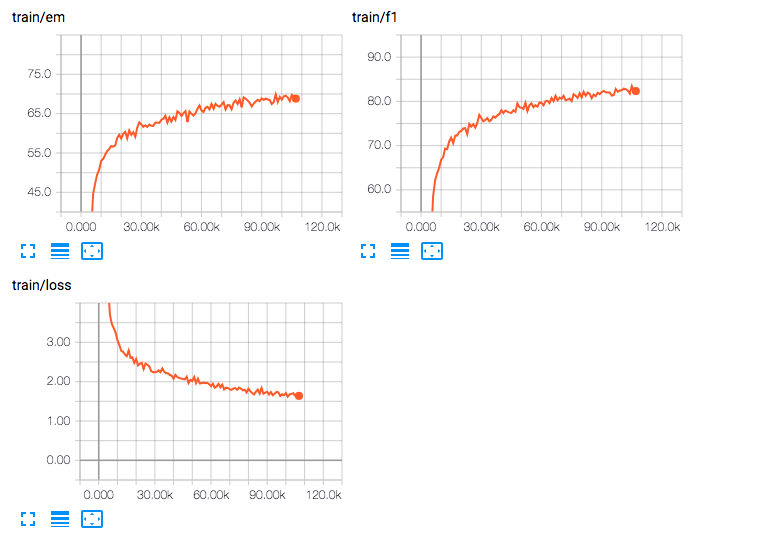

| training steps | batch size | hidden size | EM (%) | F1 (%) | speed | device | description |
|---|---|---|---|---|---|---|---|
| 120k | 32 | 100 | 70.1 | 78.9 | 1.6 it/s | 1 GTX 1080 Ti | \ |
| 120k | 32 | 75 | 70.0 | 79.1 | 1.8 it/s | 1 GTX 1080 Ti | \ |
| 120k | 32 | 75 | 69.5 | 78.8 | 1.8 it/s | 1 GTX 1080 Ti | with spatial dropout on embeddings |

Experimental records for the first experiment (without smoothing):

Experimental records for the second experiment (without smoothing):

For more information, please visit http://skyhigh233.com/blog/2018/04/26/cqa-intro/.