Paper

Please download Refer-KITTI from the official RMOT repository.

Based on Refer-KITTI, download the expression+ JSON files and arrange them to form Refer-KITTI+, structured as below.

├── refer-kitti
│ ├── KITTI
│ ├── training
│ ├── labels_with_ids
│ └── expression+
│ └── seqmap_kittt+

We select 50 videos from the BDD100k tracking set as our training and validation videos. The entire dataset is available on Google Drive. Refer-BDD is structured as below.

├── refer-bdd
│ ├── BDD
│ ├── training
│ ├── labels_with_ids
│ ├── expression
│ ├── refer-bdd.train
│ ├── seqmap_bdd

The seqmap+, refer-bdd.train and seqmap_bdd files are stored in the assets.
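Before training, it can help to confirm that the downloaded data matches the layouts shown above. The following is a minimal sketch, not part of the released code: the helper `find_missing` and the two name lists are hypothetical, with the entry names copied from the directory trees above. It only checks that each name appears somewhere under the dataset root and makes no assumption about nesting depth. The seqmap and `refer-bdd.train` files are left out because their exact file names are not spelled out here.

```python
import os

# Entry names copied from the directory trees above (hypothetical lists, for illustration).
REFER_KITTI_PLUS = ["KITTI", "training", "labels_with_ids", "expression+"]
REFER_BDD = ["BDD", "training", "labels_with_ids", "expression"]


def find_missing(root, names):
    """Return the names that appear nowhere under root, as a file or a directory."""
    seen = set()
    for _dirpath, dirnames, filenames in os.walk(root):
        seen.update(dirnames)
        seen.update(filenames)
    return [name for name in names if name not in seen]


if __name__ == "__main__":
    # Assumed local paths; replace with wherever the datasets were extracted.
    print("Refer-KITTI+ missing:", find_missing("refer-kitti", REFER_KITTI_PLUS))
    print("Refer-BDD missing:", find_missing("refer-bdd", REFER_BDD))
```

An empty list means every listed entry was found. If the root path does not exist, all names are reported as missing.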

- 2024.4.25 Release text-based AR-MOT benchmarks, including Refer-KITTI+ and Refer-BDD.
- 2024.2.29 Init repository.

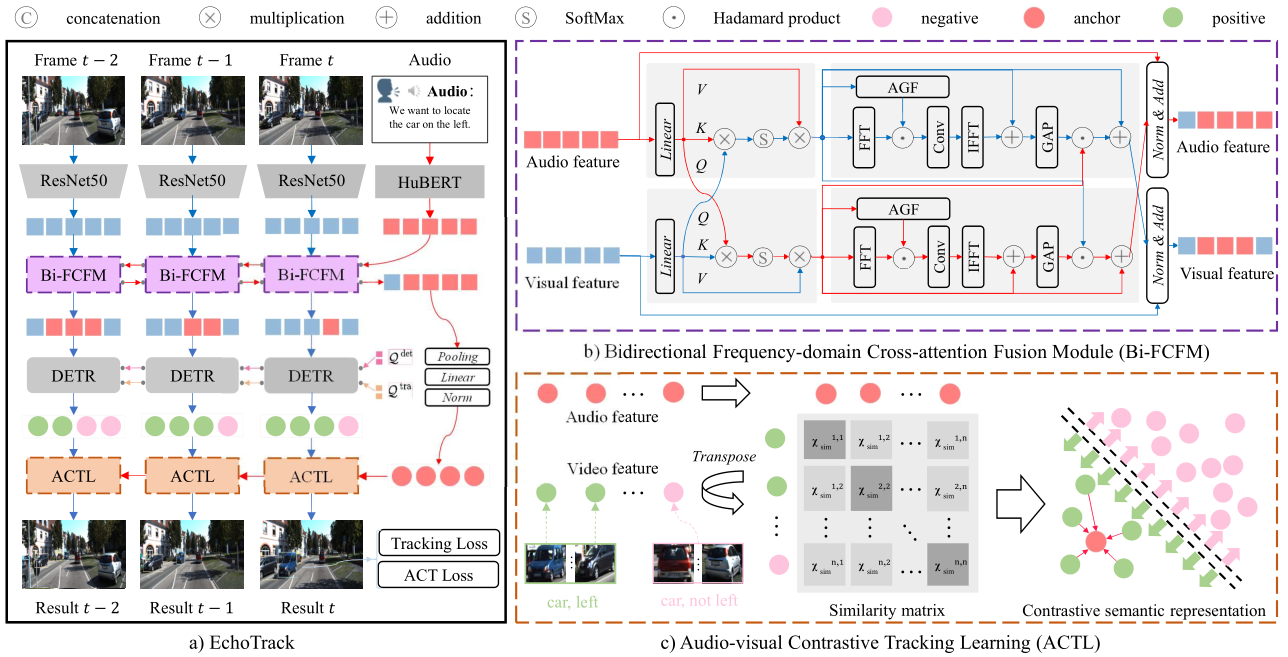

This paper introduces the task of Auditory Referring Multi-Object Tracking (AR-MOT), which dynamically tracks specific objects in a video sequence based on audio expressions and appears as a challenging problem in autonomous driving. Due to the lack of semantic modeling capacity in audio and video, existing works have mainly focused on text-based multi-object tracking, which often comes at the cost of tracking quality, interaction efficiency, and even the safety of assistance systems, limiting the application of such methods in autonomous driving. In this paper, we delve into the problem of AR-MOT from the perspective of audio-video fusion and audio-video tracking. We put forward EchoTrack, an end-to-end AR-MOT framework with dual-stream vision transformers. The dual streams are intertwined with our Bidirectional Frequency-domain Cross-attention Fusion Module (Bi-FCFM), which bidirectionally fuses audio and video features from both frequency- and spatiotemporal domains. Moreover, we propose the Audio-visual Contrastive Tracking Learning (ACTL) regime to extract homogeneous semantic features between expressions and visual objects by learning homogeneous features between different audio and video objects effectively. Aside from the architectural design, we establish the first set of large-scale AR-MOT benchmarks, including Echo-KITTI, Echo-KITTI+, and Echo-BDD. Extensive experiments on the established benchmarks demonstrate the effectiveness of the proposed EchoTrack model and its components.