A Semantic Space is Worth 256 Language Descriptions:

Make Stronger Segmentation Models with Descriptive Properties

Junfei Xiao1,

Ziqi Zhou2,

Wenxuan Li1,

Shiyi Lan3,

Jieru Mei1,

Zhiding Yu3,

Bingchen Zhao4,

Alan Yuille1,

Yuyin Zhou2,

Cihang Xie2

1Johns Hopkins University, 2UCSC, 3NVIDIA, 4University of Edinburgh

- [07/07/24] 🔥 ProLab: Property-level Label Space has been accepted to ECCV 2024. A camera-ready version of the paper is coming in the next 1~2 weeks. Stay tuned.

- [12/21/23] 🔥 ProLab: Property-level Label Space is released. We propose to retrieve descriptive properties grounded in common sense knowledge to build a property-level label space, which yields strong, interpretable segmentation models. Please check out the paper.

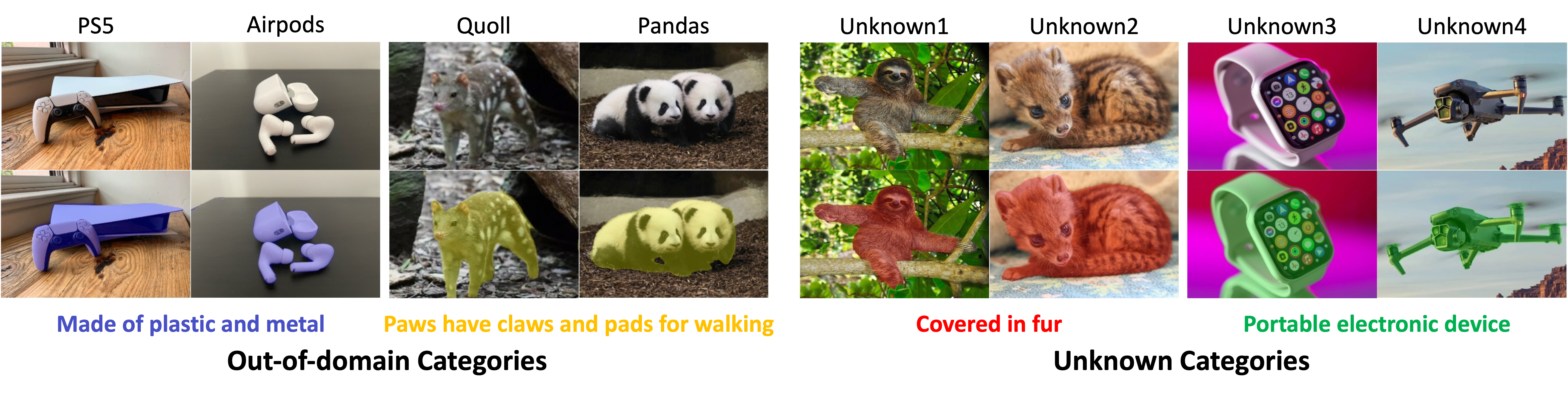

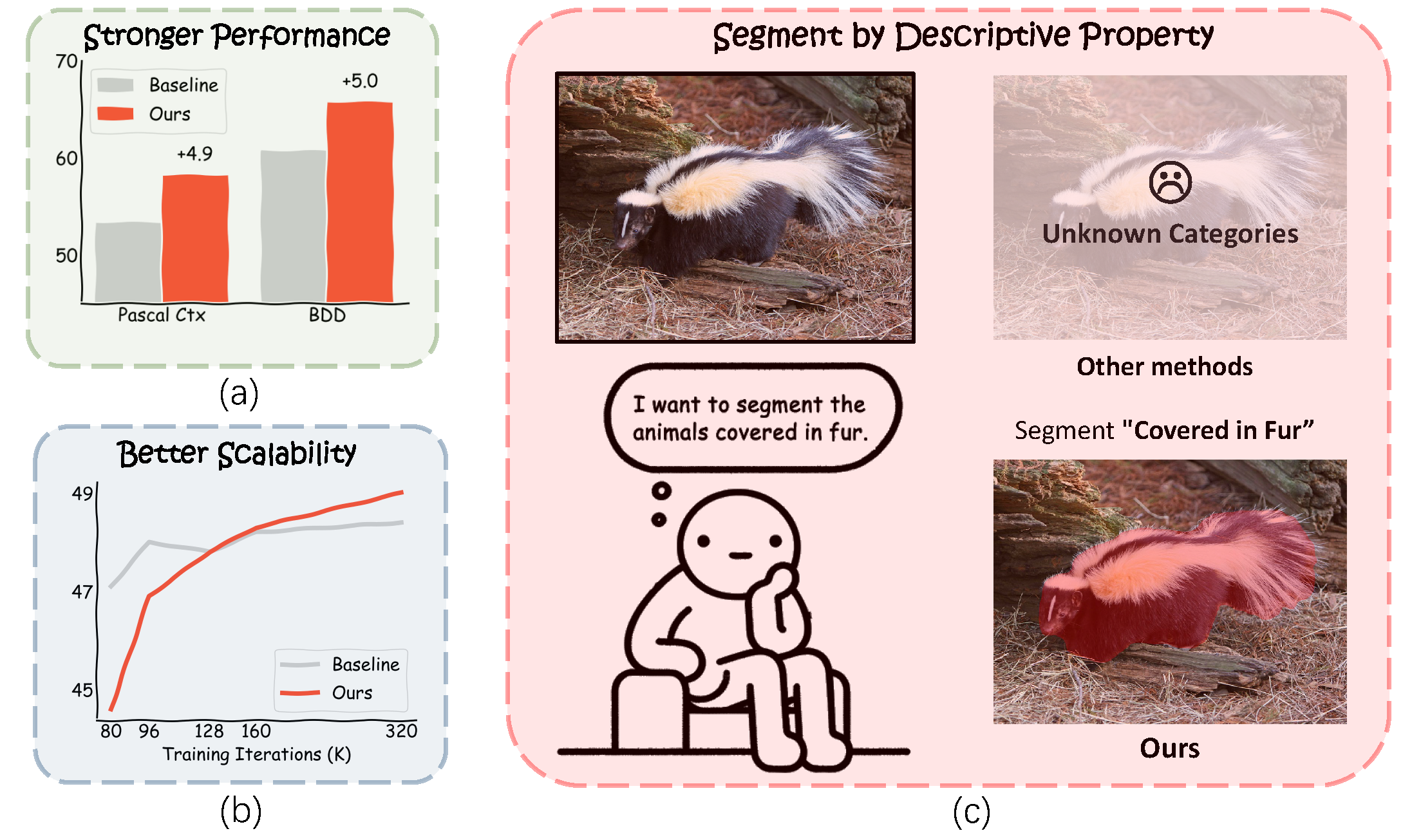

ProLab models show an emergent ability to generalize to out-of-domain categories and even unknown categories.

Our segmentation code is developed on top of MMSegmentation and ViT-Adapter.

We have tested two environments: torch 1.9+cuda 11.1+MMSegmentation v0.20.2 and torch 1.13.1+cuda 11.7+MMSegmentation v0.27.0.

Environment 1 (torch 1.9+cuda 11.1+MMSegmentation v0.20.2)

conda create -n prolab python=3.8

conda activate prolab

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 torchaudio==0.9.0 -f https://download.pytorch.org/whl/torch_stable.html

pip install mmcv-full==1.4.2 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.9.0/index.html

pip install timm==0.4.12

pip install mmdet==2.22.0 # for Mask2Former

pip install mmsegmentation==0.20.2

pip install -r requirements.txt

cd ops && sh make.sh # compile deformable attention

Environment 2 (torch 1.13.1+cuda 11.7+MMSegmentation v0.27.0)

pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117

pip install mmcv-full==1.7.0 -f https://download.openmmlab.com/mmcv/dist/cu117/torch1.13.0/index.html

pip install timm==0.4.12

pip install mmdet==2.22.0 # may require relaxing mmdet's mmcv version limit

pip install mmsegmentation==0.27.0

pip install -r requirements.txt

cd ops && sh make.sh # compile deformable attention
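After installing either environment, it can help to confirm that the pinned versions are the ones actually installed. The following is a minimal sketch, not part of the repository: the `PINS` table is copied from the install commands above, while `installed_version` and `check_env` are hypothetical helper names introduced here for illustration.

```python
from importlib import metadata

# Version pins copied from the two environments listed above.
PINS = {
    "env1": {"torch": "1.9.0", "mmcv-full": "1.4.2", "timm": "0.4.12",
             "mmdet": "2.22.0", "mmsegmentation": "0.20.2"},
    "env2": {"torch": "1.13.1", "mmcv-full": "1.7.0", "timm": "0.4.12",
             "mmdet": "2.22.0", "mmsegmentation": "0.27.0"},
}


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_env(pins, lookup=installed_version):
    """Return {package: (expected, found)} for every pin that does not match."""
    mismatches = {}
    for name, expected in pins.items():
        found = lookup(name)
        # Ignore local build tags such as "+cu111" when comparing.
        if found is None or found.split("+")[0] != expected:
            mismatches[name] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for env, pins in PINS.items():
        print(env, check_env(pins) or "OK")
```

An empty result for one of the two environments means every pinned package matches; any other result lists the expected and installed versions side by side.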

Please follow the guidelines in MMSegmentation to download ADE20K, Cityscapes, COCO Stuff and Pascal Context.

Please visit the official website to download the BDD dataset.

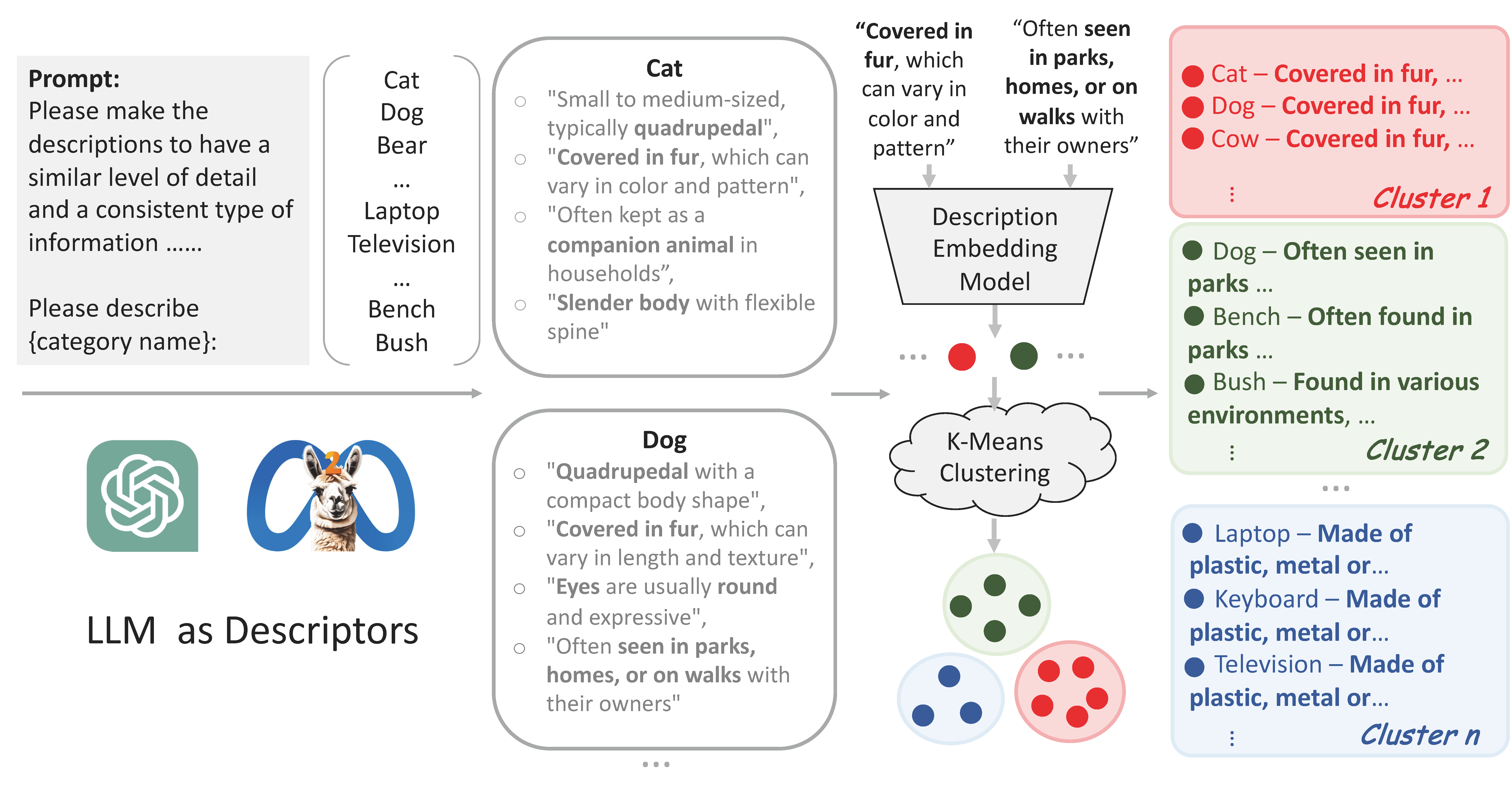

We provide the retrieved descriptive properties (with GPT-3.5) and property-level labels (language embeddings).

We provide generate_descrtiptions.ipynb, which uses GPT-3.5 (API) and Llama-2 (local deployment) to retrieve descriptive properties.
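As a rough illustration of the retrieval step, the sketch below builds a prompt for one category and cleans a line-per-property answer into a list. It makes no API call. The prompt wording and the helper names `build_prompt` and `parse_properties` are assumptions made here; they are not taken from the notebook.

```python
import re


def build_prompt(category, n=10):
    """Build a request for n descriptive properties of one category.

    Hypothetical wording; not the prompt used by the authors.
    """
    return (
        f"List {n} short visual properties that describe a '{category}' "
        "in an image. Answer with one property per line."
    )


def parse_properties(response):
    """Turn a line-per-property LLM answer into a clean, de-duplicated list."""
    seen, props = set(), []
    for line in response.splitlines():
        # Drop leading bullets or numbering such as "- ", "* ", "1. ", "2) ".
        text = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip().rstrip(".")
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            props.append(text)
    return props
```

The string returned by `build_prompt` would be sent to whichever LLM is in use, and the raw text of the reply passed through `parse_properties` before embedding.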

We also provide generate_embeddings.ipynb to encode and cluster the descriptive properties into embeddings with Sentence Transformer (huggingface, paper) and BAAI-BGE models (huggingface, paper) step-by-step.
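The clustering step can be sketched in a few lines. This is a toy illustration under stated assumptions, not the notebook's code: the 2-D vectors stand in for real sentence embeddings, `k=2` is chosen only to fit the toy data, and the deterministic seeding (first `k` points) is a simplification of plain Lloyd's k-means.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so distances reflect direction only."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def kmeans(points, k, iters=20):
    """Lloyd's k-means; centers are seeded with the first k points."""
    centers = [list(p) for p in points[:k]]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center by squared Euclidean distance.
        for i, p in enumerate(points):
            assign[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centers[c])),
            )
        # Update step: mean of members; an empty cluster keeps its old center.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assign


# Toy stand-ins for description embeddings (real ones come from an embedding model).
toy_embeddings = [[1.0, 0.1], [0.1, 1.0], [0.9, 0.2], [0.2, 0.9]]
points = [l2_normalize(v) for v in toy_embeddings]
centers, assign = kmeans(points, k=2)
```

Each cluster center then acts as one shared property embedding, and `assign` records which property each description falls under.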

ADE20K

| Framework | Backbone | Pretrain | Lr schd | Crop Size | mIoU | Config | Checkpoint |
|---|---|---|---|---|---|---|---|
| UperNet | ViT-Adapter-B | DeiT-B | 320k | 512 | 49.0 | config | Google Drive |
| UperNet | ViT-Adapter-L | BEiT-L | 160k | 640 | 58.2 | config | Google Drive |
| UperNet | ViT-Adapter-L | BEiTv2-L | 80K | 896 | 58.7 | config | Google Drive |

COCO-Stuff-164K

| Framework | Backbone | Pretrain | Lr schd | Crop Size | mIoU | Config | Checkpoint |
|---|---|---|---|---|---|---|---|
| UperNet | ViT-Adapter-B | DeiT-B | 160K | 512 | 45.4 | config | Google Drive |

Pascal Context

| Framework | Backbone | Pretrain | Lr schd | Crop Size | mIoU | Config | Checkpoint |
|---|---|---|---|---|---|---|---|
| UperNet | ViT-Adapter-B | DeiT-B | 160K | 512 | 58.2 | config | Google Drive |

Cityscapes

| Framework | Backbone | Pretrain | Lr schd | Crop Size | mIoU | Config | Checkpoint |
|---|---|---|---|---|---|---|---|
| UperNet | ViT-Adapter-B | DeiT-B | 160K | 768 | 81.4 | config | Google Drive |

BDD

| Framework | Backbone | Pretrain | Lr schd | Crop Size | mIoU | Config | Checkpoint |
|---|---|---|---|---|---|---|---|
| UperNet | ViT-Adapter-B | DeiT-B | 160K | 768 | 65.7 | config | Google Drive |
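For readers unfamiliar with the mIoU column in the tables above, the metric is the mean over classes of intersection over union. The sketch below is a simplified illustration on flat label lists. It is an assumption-laden stand-in, not MMSegmentation's implementation, which also handles ignore labels and accumulates statistics across a whole dataset; `mean_iou` is a name chosen here.

```python
def mean_iou(gt, pred, num_classes):
    """mIoU over flat label lists; classes absent from both gt and pred are skipped."""
    inter = [0] * num_classes
    gt_count = [0] * num_classes
    pred_count = [0] * num_classes
    for g, p in zip(gt, pred):
        gt_count[g] += 1
        pred_count[p] += 1
        if g == p:
            inter[g] += 1
    ious = []
    for c in range(num_classes):
        # Union = pixels labeled c in either gt or pred, counted once.
        union = gt_count[c] + pred_count[c] - inter[c]
        if union > 0:
            ious.append(inter[c] / union)
    return sum(ious) / len(ious) if ious else float("nan")


# Class 0: IoU = 1/2, class 1: IoU = 2/3, so mIoU = 7/12.
score = mean_iou(gt=[0, 0, 1, 1], pred=[0, 1, 1, 1], num_classes=2)
```

Multiplying the result by 100 gives the percentage form used in the tables.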

The following example script trains ViT-Adapter-B + UperNet on ADE20K on a single node with 8 GPUs:

sh dist_train.sh configs/ADE20K/upernet_deit_adapter_base_512_320k_ade20k_bge_base.py 8

The following example script evaluates ViT-Adapter-B + UperNet on COCO-Stuff val on a single node with 8 GPUs:

sh dist_test.sh configs/COCO_Stuff/upernet_deit_adapter_base_512_160k_coco_stuff_bge_base.py 8 --eval mIoU

If this paper is useful to your work, please cite:

@article{xiao2023semantic,

author = {Xiao, Junfei and Zhou, Ziqi and Li, Wenxuan and Lan, Shiyi and Mei, Jieru and Yu, Zhiding and Yuille, Alan and Zhou, Yuyin and Xie, Cihang},

title = {A Semantic Space is Worth 256 Language Descriptions: Make Stronger Segmentation Models with Descriptive Properties},

journal = {arXiv preprint arXiv:2312.13764},

year = {2023},

}

GPT-3.5 and Llama-2 are used for retrieving descriptive properties.

Sentence Transformer and BAAI-BGE are used as description embedding models.

MMSegmentation and ViT-Adapter are used as the segmentation codebase.

Many thanks to all these great projects.