This repo provides a simple example of a ReAct-style agent with a tool to save memories. This is an easy way to let an agent persist important information for later reuse. In this case, we save all memories scoped to a configurable `user_id`, which lets the bot learn a user's preferences across conversation threads.
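To make the scoping concrete, here is a minimal, framework-free sketch in Python. It does not use LangGraph's actual `Store` API. The `Memory` and `InMemoryMemoryStore` classes and the `("memories", user_id)` namespace are hypothetical stand-ins chosen for illustration. The sketch shows that the storage key depends only on the user and not on the conversation thread, so a memory saved in one thread is visible in every later thread for the same user.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Memory:
    # Hypothetical record mirroring a simple content/context structure.
    content: str
    context: str


class InMemoryMemoryStore:
    """Toy store keyed by ("memories", user_id); thread IDs play no part in the key."""

    def __init__(self) -> None:
        self._data: Dict[Tuple[str, str], List[Memory]] = {}

    def save(self, user_id: str, memory: Memory) -> None:
        # Every thread for the same user appends to the same namespace.
        self._data.setdefault(("memories", user_id), []).append(memory)

    def list_memories(self, user_id: str) -> List[Memory]:
        # Return a copy so callers cannot mutate stored state.
        return list(self._data.get(("memories", user_id), []))


store = InMemoryMemoryStore()
# "Thread A" for user alice: the bot decides to save a preference.
store.save("alice", Memory("Prefers dark mode", "Mentioned while discussing UI settings"))
# "Thread B" for the same user sees it; a different user does not.
print([m.content for m in store.list_memories("alice")])  # ['Prefers dark mode']
print(store.list_memories("bob"))  # []
```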

This quickstart will get your memory service deployed on LangGraph Cloud. Once it is deployed, you can interact with it from any API client.

Assuming you have already installed LangGraph Studio, set up the project as follows:

- Create a `.env` file by copying the example:

  `cp .env.example .env`

- Define the required API keys in your `.env` file.

The default value for `model` is shown below:

`model: anthropic/claude-3-5-sonnet-20240620`

Follow the instructions below to get set up, or pick one of the additional options.

To use Anthropic's chat models:

- Sign up for an Anthropic API key if you haven't already.

- Once you have your API key, add it to your `.env` file:

  `ANTHROPIC_API_KEY=your-api-key`

To use OpenAI's chat models:

- Sign up for an OpenAI API key.

- Once you have your API key, add it to your `.env` file:

  `OPENAI_API_KEY=your-api-key`
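Before launching, you may want to confirm that the key matching your configured `model` is actually present. The sketch below assumes a simplified `.env` format (plain `KEY=value` lines and `#` comments, with no quoting or inline comments) and a hypothetical `REQUIRED_KEYS` mapping from the provider prefix to the variable names above. In a real project, a dedicated library such as python-dotenv handles the full format.

```python
from typing import Dict, Optional

# Hypothetical mapping from the provider prefix of `model` to the key it needs.
REQUIRED_KEYS = {
    "anthropic": "ANTHROPIC_API_KEY",
    "openai": "OPENAI_API_KEY",
}


def parse_env(text: str) -> Dict[str, str]:
    """Parse plain KEY=value lines, skipping blanks and # comments (no quoting rules)."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_key(model: str, env: Dict[str, str]) -> Optional[str]:
    """Return the required variable name if it is absent or empty, else None."""
    provider = model.split("/", 1)[0]
    key = REQUIRED_KEYS.get(provider)
    if key is None:
        raise ValueError(f"no API key mapping for provider {provider!r}")
    return None if env.get(key) else key


env = parse_env("# copied from .env.example\nANTHROPIC_API_KEY=your-api-key\n")
print(missing_key("anthropic/claude-3-5-sonnet-20240620", env))  # None
print(missing_key("openai/gpt-4", env))  # OPENAI_API_KEY
```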

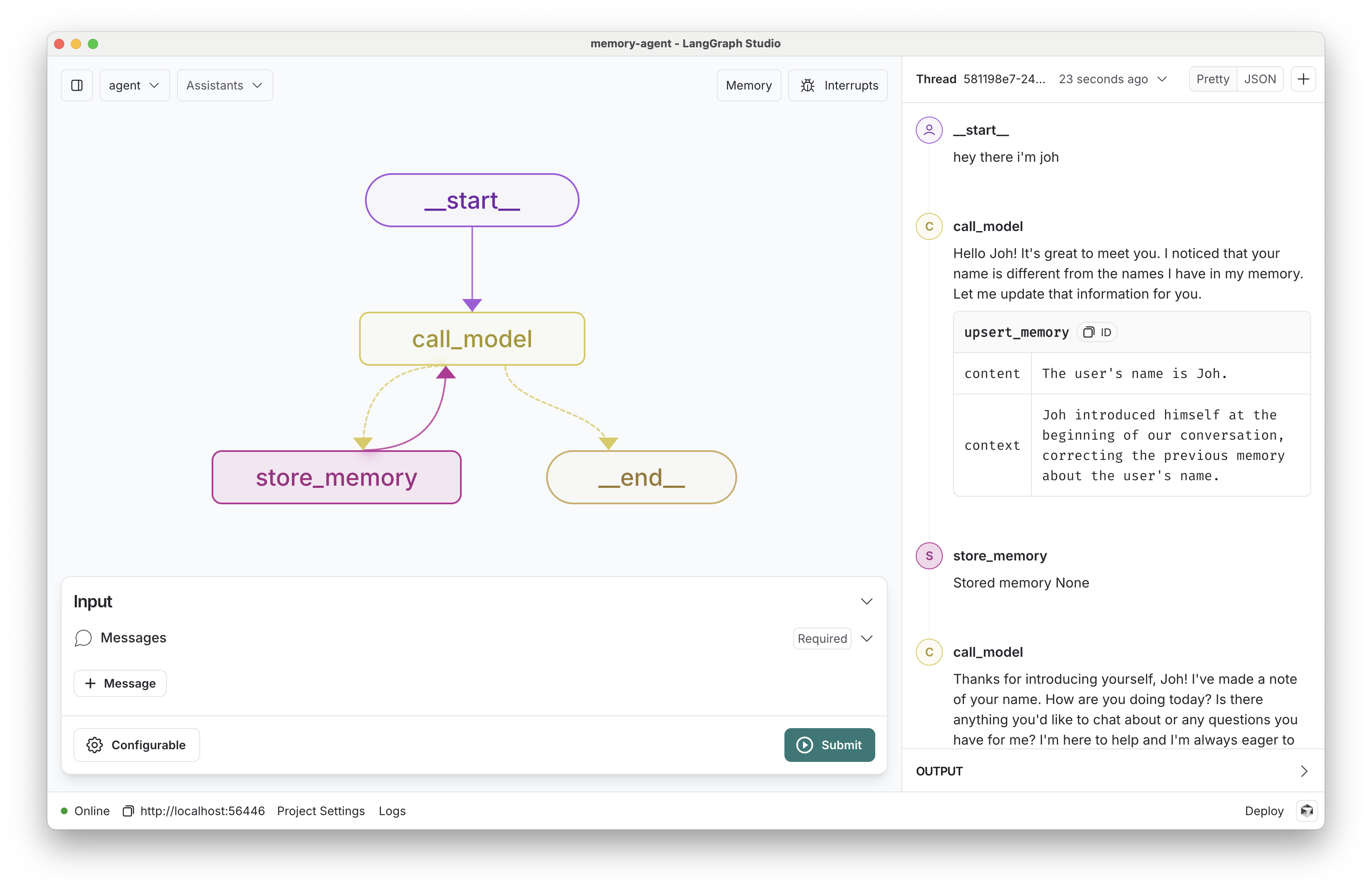

- Open this repository in LangGraph Studio. Navigate to the `memory_agent` graph and have a conversation with it! Try sending some messages that mention your name and other things the bot should remember.

Assuming the bot saved some memories, create a new thread using the `+` icon. Then chat with the bot again. If you've completed your setup correctly, the bot should now have access to the memories you've saved!

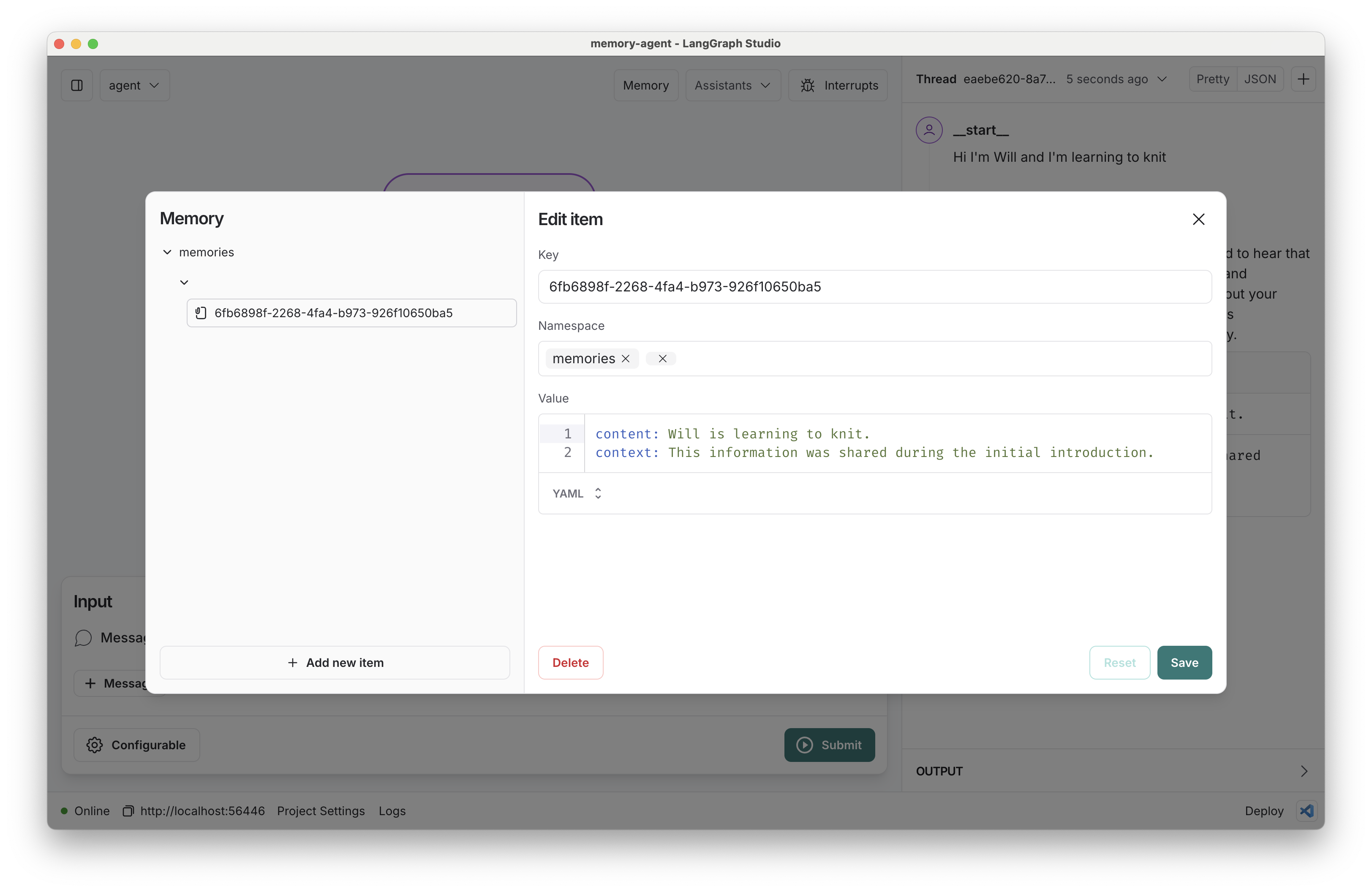

You can review the saved memories by clicking the "memory" button.

This chatbot reads from your memory graph's `Store` to list previously extracted memories. If the model calls a tool, LangGraph routes to the `store_memory` node, which saves the information to the store.
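The routing step can be sketched in plain Python. This is not LangGraph code: `ToolCall`, `AIMessage`, `route_message`, the `save_memory` tool name, the dict-based store, and the `END` string are all hypothetical stand-ins. The sketch shows the decision described above (go to `store_memory` only when the latest model message contains tool calls) and what such a node might do: write each call's arguments under the user's namespace and return one tool result per call so the model can continue.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

END = "__end__"  # stand-in for the graph's end marker in this sketch


@dataclass
class ToolCall:
    id: str
    name: str
    args: Dict[str, Any]


@dataclass
class AIMessage:
    content: str
    tool_calls: List[ToolCall] = field(default_factory=list)


def route_message(messages: List[AIMessage]) -> str:
    """Route to store_memory only if the latest model message requested a tool."""
    return "store_memory" if messages[-1].tool_calls else END


def store_memory(
    messages: List[AIMessage],
    store: Dict[Tuple[str, str], List[Dict[str, Any]]],
    user_id: str,
) -> List[Dict[str, str]]:
    """Save each tool call's arguments under the user's namespace and
    return one tool-result message per call."""
    namespace = ("memories", user_id)
    results = []
    for call in messages[-1].tool_calls:
        store.setdefault(namespace, []).append(dict(call.args))
        results.append({"role": "tool", "tool_call_id": call.id, "content": f"Stored memory {call.id}"})
    return results


msgs = [AIMessage("Got it!", [ToolCall("call_1", "save_memory",
                                        {"content": "Name is Sam", "context": "User introduced themselves"})])]
memories: Dict[Tuple[str, str], List[Dict[str, Any]]] = {}
print(route_message(msgs))  # store_memory
print(store_memory(msgs, memories, "sam")[0]["tool_call_id"])  # call_1
print(memories[("memories", "sam")][0]["content"])  # Name is Sam
print(route_message([AIMessage("Hi Sam!")]))  # __end__
```

Returning a result for every tool call matters because chat model APIs generally expect each tool call to be answered before the conversation continues.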

Memory management can be challenging to get right, especially if you add additional tools for the bot to choose between. To tune the frequency and quality of memories your bot is saving, we recommend starting from an evaluation set, adding to it over time as you find and address common errors in your service.

We have provided a few example evaluation cases in the test file. As you can see, the metrics themselves don't have to be terribly complicated, especially at the outset.
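As an illustration of how simple a starting metric can be, here is a hypothetical recall-style score. It is not necessarily one of the metrics in the test file. It computes the fraction of expected facts that appear, case-insensitively, in the content of at least one saved memory, and it assumes memories are dicts with a `content` field.

```python
from typing import Dict, List


def memory_recall_score(saved: List[Dict[str, str]], expected_facts: List[str]) -> float:
    """Fraction of expected facts found (case-insensitively) in some saved memory's content."""
    if not expected_facts:
        return 1.0  # nothing was expected, so nothing was missed
    contents = [m.get("content", "").lower() for m in saved]
    hits = sum(1 for fact in expected_facts if any(fact.lower() in c for c in contents))
    return hits / len(expected_facts)


# A hypothetical evaluation case: after a short chat, which facts should be stored?
saved = [
    {"content": "User's name is Sam", "context": "Introduced themselves"},
    {"content": "Sam enjoys hiking on weekends", "context": "Talking about hobbies"},
]
score = memory_recall_score(saved, ["Sam", "hiking", "vegetarian"])
print(round(score, 2))  # 0.67
```

A substring check like this is crude (it cannot recognize paraphrases), but it is cheap and easy to extend as you add cases to the evaluation set.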

We use LangSmith's `@unit` decorator to sync all the evaluations to LangSmith, so you can better optimize your system and identify the root cause of any issues that may arise.

- Customize memory content: we've defined a simple memory structure, `content: str, context: str`, for each memory, but you could structure them in other ways.

- Provide additional tools: the bot will be more useful if you connect it to other functions.

- Select a different model: we default to `anthropic/claude-3-5-sonnet-20240620`. You can select a compatible chat model using `provider/model-name` via configuration, for example `openai/gpt-4`.

- Customize the prompts: we provide a default prompt in the `prompts.py` file. You can easily update it via configuration.
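Putting the configurable pieces together, a configuration object might look like the following. This is a hedged sketch, not the repo's actual configuration class: the field names, the `default-user` default, and the placeholder prompt are assumptions, and only the default model string comes from this README. It builds settings from a per-run `configurable` mapping, ignores unknown keys, and splits `provider/model-name` into its two parts.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict, Optional, Tuple

# Hypothetical placeholder; the real default prompt lives in prompts.py.
DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant that remembers user preferences."


@dataclass(frozen=True)
class Configuration:
    user_id: str = "default-user"  # assumed default, for illustration only
    model: str = "anthropic/claude-3-5-sonnet-20240620"  # default named in this README
    system_prompt: str = DEFAULT_SYSTEM_PROMPT

    @classmethod
    def from_configurable(cls, configurable: Optional[Dict[str, Any]] = None) -> "Configuration":
        """Build settings from a per-run mapping, silently ignoring unknown keys."""
        configurable = configurable or {}
        known = {f.name for f in fields(cls)}
        return cls(**{k: v for k, v in configurable.items() if k in known})

    @property
    def provider_and_model(self) -> Tuple[str, str]:
        """Split 'provider/model-name' at the first slash."""
        provider, _, name = self.model.partition("/")
        return provider, name


cfg = Configuration.from_configurable({"user_id": "sam", "model": "openai/gpt-4", "thread_id": "t1"})
print(cfg.provider_and_model)  # ('openai', 'gpt-4')
print(Configuration.from_configurable().model)  # anthropic/claude-3-5-sonnet-20240620
```

Ignoring unknown keys lets the same mapping carry unrelated per-run values (such as a thread ID) without breaking construction.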