This repo contains pre-trained models and a code-switched data generation script for GigaBERT:

@inproceedings{lan2020gigabert,

author = {Lan, Wuwei and Chen, Yang and Xu, Wei and Ritter, Alan},

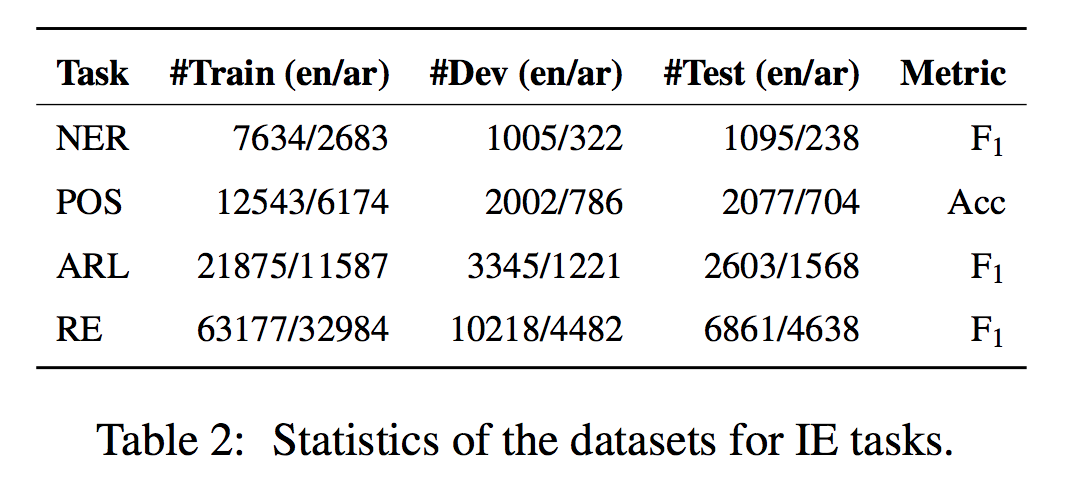

title = {An Empirical Study of Pre-trained Transformers for Arabic Information Extraction},

booktitle = {Proceedings of The 2020 Conference on Empirical Methods on Natural Language Processing (EMNLP)},

year = {2020}

}

Please check Yang Chen's GitHub for code and data.

The pre-trained models can be found here: GigaBERT-v3 and GigaBERT-v4.
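As a minimal sketch of how the checkpoints might be loaded, the snippet below uses the Hugging Face `transformers` library. It assumes the models are published on the Hugging Face Hub under the IDs shown in `MODEL_IDS`; those IDs and the helper name `load_gigabert` are assumptions for illustration, so check them against the model links above before relying on them.

```python
# Assumed Hugging Face Hub IDs for the two checkpoints (not confirmed by this README).
MODEL_IDS = {
    "v3": "lanwuwei/GigaBERT-v3-Arabic-and-English",
    "v4": "lanwuwei/GigaBERT-v4-Arabic-and-English",
}


def load_gigabert(version="v4"):
    """Hypothetical helper: download and return (tokenizer, model) for one checkpoint."""
    # Imported lazily so this file can be read or imported without transformers installed.
    from transformers import AutoModel, AutoTokenizer

    model_id = MODEL_IDS[version]  # raises KeyError for an unknown version
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModel.from_pretrained(model_id)
    return tokenizer, model
```

Calling `load_gigabert("v4")` downloads the weights on first use, so it needs network access. For a downstream task such as NER, a task-specific class like `AutoModelForTokenClassification` could replace `AutoModel`.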

Please contact Wuwei Lan for code-switched GigaBERT with different configurations.

Apache License 2.0

This material is based in part on research sponsored by IARPA via the BETTER program (2019-19051600004).