Original paper: Jiang, Yifan, Shiyu Chang, and Zhangyang Wang. "TransGAN: Two Transformers Can Make One Strong GAN." arXiv preprint arXiv:2102.07074 (2021).

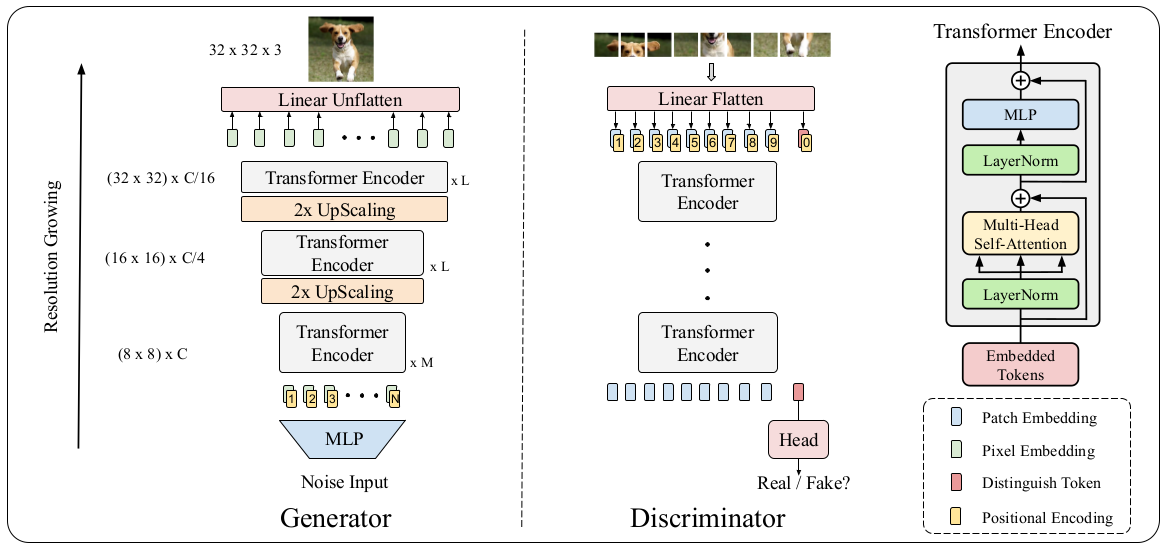

TransGAN: Two Transformers Can Make One Strong GAN

Code inspired by TransGAN.

The GELU activation is approximated rather than computed exactly, to speed up computation on the GPU.
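The exact approximation formula is not reproduced here. The sketch below assumes the widely used tanh form from the original GELU paper, GELU(x) ≈ 0.5·x·(1 + tanh(√(2/π)·(x + 0.044715·x³))), and compares it with the exact definition x·Φ(x), where Φ is the standard normal CDF. The function names `gelu_exact` and `gelu_tanh` are illustrative, not taken from this repository. For reference, PyTorch exposes the same tanh variant as `nn.GELU(approximate="tanh")`.

```python
import math


def gelu_exact(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh-based GELU approximation (assumed form; avoids evaluating erf)."""
    c = math.sqrt(2.0 / math.pi)
    return 0.5 * x * (1.0 + math.tanh(c * (x + 0.044715 * x ** 3)))


if __name__ == "__main__":
    # Print both versions side by side to show how close the approximation is.
    for x in (-3.0, -1.0, 0.0, 0.5, 1.0, 3.0):
        print(f"x={x:+.1f}  exact={gelu_exact(x):+.6f}  tanh={gelu_tanh(x):+.6f}")
```

The two curves agree to within a few ten-thousandths over typical activation ranges, which is why the cheaper form is usually an acceptable substitute.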

The MLP block is formed by Fully Connected => GELU => Fully Connected => GELU.
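A framework-free sketch of that layer ordering follows. It assumes the hidden width is a free parameter (`hidden_dim`) and that the second layer projects back to the input width `dim`, as is typical in transformer blocks so the output can be added to a residual; it also reuses the assumed tanh GELU approximation. The classes `Linear` and `MLP` are hypothetical stand-ins, not this repository's modules, which would normally use a deep learning framework.

```python
import math
import random
from typing import List

Vector = List[float]


def gelu(x: float) -> float:
    """Assumed tanh approximation of GELU."""
    c = math.sqrt(2.0 / math.pi)
    return 0.5 * x * (1.0 + math.tanh(c * (x + 0.044715 * x ** 3)))


class Linear:
    """Hypothetical fully connected layer: y = W x + b."""

    def __init__(self, in_features: int, out_features: int, rng: random.Random):
        bound = 1.0 / math.sqrt(in_features)  # uniform init scaled by fan-in
        self.weight = [[rng.uniform(-bound, bound) for _ in range(in_features)]
                       for _ in range(out_features)]
        self.bias = [0.0] * out_features

    def __call__(self, x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weight, self.bias)]


class MLP:
    """Fully Connected => GELU => Fully Connected => GELU."""

    def __init__(self, dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.fc1 = Linear(dim, hidden_dim, rng)
        self.fc2 = Linear(hidden_dim, dim, rng)  # assumed: project back to dim

    def __call__(self, x: Vector) -> Vector:
        h = [gelu(v) for v in self.fc1(x)]     # FC => GELU
        return [gelu(v) for v in self.fc2(h)]  # FC => GELU


if __name__ == "__main__":
    mlp = MLP(dim=8, hidden_dim=32)
    print(mlp([0.1] * 8))
```

Calling `MLP(dim=8, hidden_dim=32)` on an 8-element vector returns another 8-element vector, with each entry passed through the final GELU.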

Install the dependencies with `pip install -r requirements.txt` (Python version >= 3.6).

Train with `python train.py`. Use `Untitled.ipynb` to easily modify training parameters.