Powered by the Text-To-Text Transfer Transformer (T5) model, heptabot is designed and built to be a practical example of a powerful, user-friendly, open-source error correction engine based on cutting-edge technology.

heptabot (heterogenous error processing transformer architecture-based online tool) is trained on 4 similar but distinct tasks: correction (default), which is general paragraph-wise text correction; jfleg, which is sentence-wise correction based on the JFLEG competition; and conll and bea, based on the CoNLL-2014 and BEA 2019 competitions respectively, which are also sentence-wise correction tasks but focus more on grammar errors. The core model of heptabot is T5, which performs the actual text correction for all of the described tasks. In addition, heptabot provides post-correction error classification for the correction task and uses spaCy's sentence parsing output to enhance performance on the conll and bea tasks. Note that while heptabot should in theory be able to correct English texts of any genre, it was trained specifically on student essays and thus works best on them.
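As a rough illustration of the difference between the paragraph-wise and sentence-wise tasks, the sketch below splits an input text into the units each task would work on. This is not heptabot's actual preprocessing: the helper name `split_for_task` is hypothetical, and a naive regular expression stands in for the spaCy sentence parser mentioned above.

```python
import re
from typing import List

# Task names as listed above; the granularity of each follows the description.
PARAGRAPH_TASKS = {"correction"}
SENTENCE_TASKS = {"jfleg", "conll", "bea"}

# Naive stand-in for a real sentence parser (assumption, not spaCy):
# split on whitespace that follows '.', '!' or '?'.
_SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def split_for_task(text: str, task: str = "correction") -> List[str]:
    """Split text into the units a given task operates on (hypothetical helper)."""
    paragraphs = [p.strip() for p in text.split("\n") if p.strip()]
    if task in PARAGRAPH_TASKS:
        return paragraphs
    if task in SENTENCE_TASKS:
        return [s for p in paragraphs for s in _SENTENCE_BOUNDARY.split(p) if s]
    raise ValueError(f"unknown task: {task!r}")


essay = "I has a dog. He like to play!\nWe goes to park every day."
print(split_for_task(essay, "correction"))  # two paragraphs
print(split_for_task(essay, "bea"))         # three sentences
```

The regular expression will mis-split abbreviations such as "e.g."; a real sentence parser handles such cases, which is presumably part of why the sentence-wise tasks rely on spaCy.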

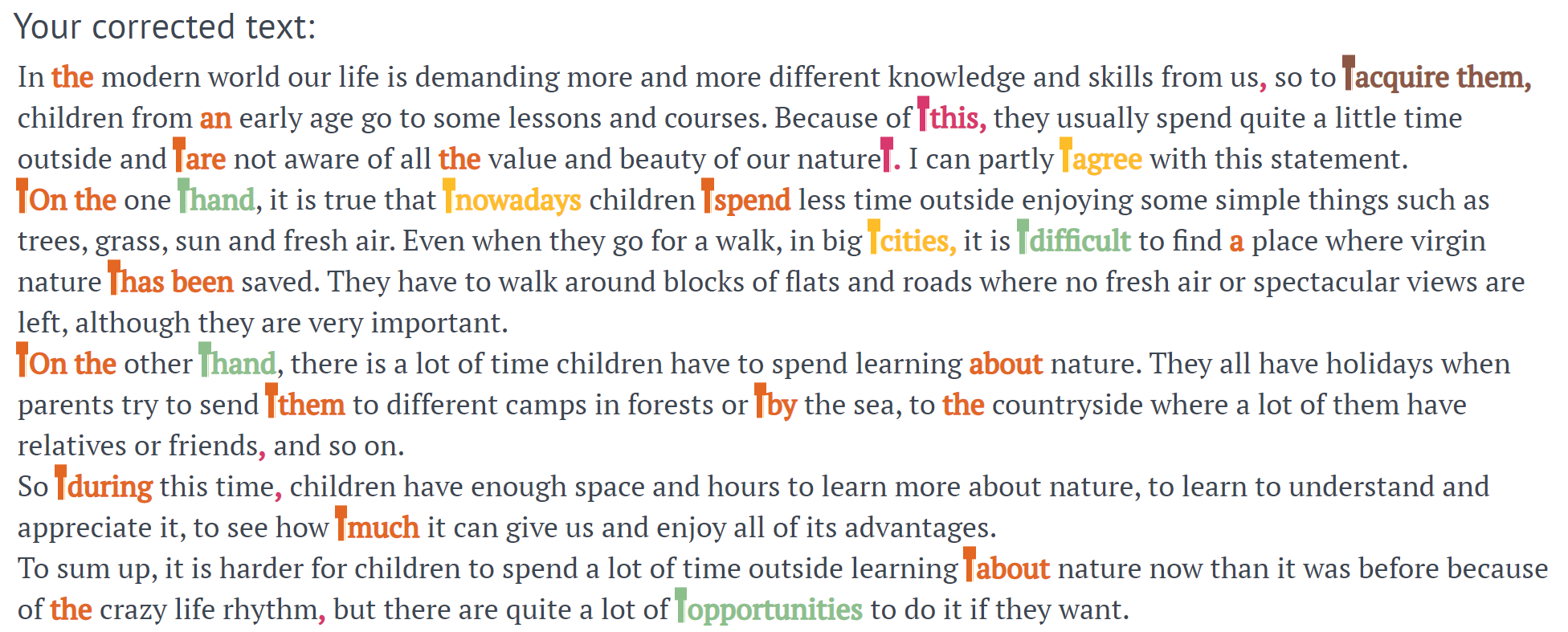

An example of text correction

Choose a convenient way of using heptabot depending on your needs:

- If you want to quickly test basic `heptabot` with a few texts or see our Web version, use Web demo.
- If you want to test `tiny`, CPU version of `heptabot`, use `tiny` version in Colab CPU environment.
- If you want to process a few texts with a more powerful `medium` version, use `medium` version in Colab GPU environment.
- If you want to process a large amount (hundreds) of texts, use `medium` version in Colab TPU environment.
- If you want to use our most powerful version, use `xxl` version in Kaggle TPU environment.
- If you want to reproduce our scores, refer to the Measure performance section at the end of each corresponding notebook (`tiny`, `medium`, `xxl`).

For cloning heptabot onto your hardware we suggest using our Docker images, as our former installation procedures were too complicated to follow and are now deprecated.

- If you want to install our CPU (`tiny`) version/clone our Web demo, pull our `tiny-cpu` Docker image: `docker pull lclhse/heptabot` (our legacy Install procedure is deprecated).
- If you want to install our GPU (`medium`) version/set up a Web version of `medium` model (and you have a GPU), pull our `medium-gpu` Docker image: `docker pull lclhse/heptabot:medium-gpu` (our legacy Install procedure is deprecated).
- To boot the image as a Web service, use `docker run -td -p 80:5000 -p 443:5000 lclhse/heptabot "source activate heptabot; ./start.sh; bash"` and wait for around 75 seconds. In order to stop `heptabot`, just kill the container using `docker container kill $(docker container ps -q -f ancestor=lclhse/heptabot)`.
- To use the image internally, connect to it like `docker run -it lclhse/heptabot bash` or connect to an externally deployed version on e.g. vast.ai. Once connected to the terminal, run `source activate heptabot` and start Jupyter Lab: you will see our example notebook in the root directory. To kill `heptabot` inside the running container, you may use `kill $(lsof | grep -oP '^\S+\s+\K([0-9]+)(?=\s+(?![0-9]).*?9090)' | xargs -n1 | sort -u | xargs)`. In order to restart `heptabot` after that, use `./start.sh` or, if running in Jupyter Lab, use `prompt_run.sh` generated in the notebook.
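Instead of waiting a fixed 75 seconds after booting the Web service, the startup can be polled. The following is a minimal sketch, assuming the container was started with `-p 80:5000` as above and that the Web interface answers a plain HTTP GET on its root path (an assumption, not something the image documents here). The names `http_probe` and `wait_until_ready` are illustrative.

```python
import time
import urllib.request
from typing import Callable


def http_probe(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers an HTTP GET with a 2xx/3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError, HTTPError, refused connections and timeouts are all OSError subclasses.
        return False


def wait_until_ready(
    url: str,
    max_wait: float = 120.0,
    interval: float = 5.0,
    probe: Callable[[str], bool] = http_probe,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `url` until `probe` succeeds or `max_wait` seconds of sleeping have passed."""
    waited = 0.0
    while True:
        if probe(url):
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval
```

Calling `wait_until_ready("http://localhost:80/")` after `docker run` would then return `True` once the service responds, or `False` if it stays silent for two minutes. The `probe` and `sleep` parameters exist only so the loop can be exercised without a running container.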

Here's how heptabot scores against state-of-the-art systems on some of the most common Grammar Error Correction tasks:

| CoNLL-2014 | JFLEG | BEA 2019 |
|---|---|---|

The performance measurements for different heptabot versions are as follows:

| Version | RAM load | GPU memory load | Avg time/text (correction) | Avg time/symbol (correction) |
|---|---|---|---|---|
| `tiny`, CPU | 2.176 GiB | - | 11.475 seconds | 9.18 ms |
| `medium`, GPU | 0.393 GiB | 14.755 GiB | 14.825 seconds | 11.86 ms |
| `medium`, TPU | 2.193 GiB | - | 2.075 seconds | 1.66 ms |
| `xxl`, TPU | 2.563 GiB | - | 6.225 seconds | 4.98 ms |
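The two timing columns are related: dividing the average time per text by the average time per symbol gives the average text length used in the measurements, which works out to about 1250 symbols for every version. The sketch below reproduces that arithmetic and derives a rough time estimate for texts of other lengths. The linear scaling in `estimate_seconds` is an assumption made for illustration; actual timings, especially with batching on TPUs, may not scale this way.

```python
# (avg seconds per text, avg milliseconds per symbol), copied from the table above.
MEASUREMENTS = {
    "tiny, CPU": (11.475, 9.18),
    "medium, GPU": (14.825, 11.86),
    "medium, TPU": (2.075, 1.66),
    "xxl, TPU": (6.225, 4.98),
}


def implied_text_length(seconds_per_text: float, ms_per_symbol: float) -> float:
    """Average number of symbols per text implied by the two timing columns."""
    return seconds_per_text * 1000.0 / ms_per_symbol


def estimate_seconds(version: str, n_symbols: int) -> float:
    """Rough estimate that assumes time grows linearly with text length."""
    _, ms_per_symbol = MEASUREMENTS[version]
    return n_symbols * ms_per_symbol / 1000.0


for version, (sec, ms) in MEASUREMENTS.items():
    print(f"{version}: ~{implied_text_length(sec, ms):.0f} symbols per text")

print(f"{estimate_seconds('medium, TPU', 3000):.2f} s for 3000 symbols on medium, TPU")
```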

- Q: Why do you host the `tiny` version and have its image as default while your other models produce better results?
- A: While it performs worse, our `tiny` model is a working proof of concept, guaranteed to work on virtually any Unix host with 4 GiB free RAM. This version is also the best fit for our hosting capabilities, as we currently cannot afford renting a high-end GPU on-demand 24/7. However, you are more than welcome to set up a working online version of the `medium` model on a GPU (in fact, we will be more than happy to hear from you if you do).
- Q: Why no CPU version of `medium` model, GPU version of `tiny` model etc.?
- A: There are a number of reasons.
  - It is technically possible to run our `medium` version on CPU: you may, for example, change `FROM nvidia/cuda:10.1-cudnn7-devel-ubuntu18.04` to `FROM ubuntu:18.04` at the beginning of the Dockerfile to get a working environment with `medium` version on CPU architecture. However, its running time will be unacceptably slow: in our tests, processing 1 file took somewhere between 1m30s and 5m. As such, we do not support this version.
  - The core of our `tiny` version is a distilled `t5-small` model, which is, more importantly, quantized. The quantization we use is CPU-specific, so the quantized model cannot run on architectures other than CPUs. Hence, no GPU or TPU versions for `tiny`.
  - Likewise, our `xxl` version employs a fine-tuned version of `t5-11B` checkpoint, which is just too big for either CPU or GPU hosts (it is, in fact, too big even for `v2-8` TPU architecture available in Google Colab's instances, so we have to run it on Kaggle's `v3-8` TPUs).
- Q: Are you planning to actively develop this project?
- A: As of now, the project has reached a stage where we are fairly satisfied with its performance, so for now we plan only to maintain the existing functionality and fix whatever errors we may run into. This is not to say that there are no more major updates coming for `heptabot`, or, conversely, that `heptabot` will be actively maintained forever: things may change in the future.
- Q: Can I contribute to this project?
- A: Absolutely! Feel free to open issues and merge requests; we will process them in due time.
- Q: How can I contact you?
- A: Currently you can reach us at istorubarov@edu.hse.ru.
- Q: Why is this section called FAQ if you haven't had so many of these questions prior to publishing it?
- A: Following a fine example, in this case, "FAQ" stands for "Fully Anticipated Questions".

Feel free to reproduce our research: to do so, follow the notebooks from the retrain folder. Please note that you need to get access to some of the datasets we used before you can obtain them, so the code for obtaining them is omitted.