This sample demonstrates how to create a serverless web crawler (or web scraper) using AWS Lambda and AWS Step Functions. It scales to crawl large websites that would time out if a single Lambda function crawled the whole site. The web crawler is written in TypeScript and uses Puppeteer to extract content and URLs from a given webpage.

Additionally, this sample demonstrates an example use case for the crawler by indexing crawled content into Amazon Kendra, providing machine-learning powered search over the crawled content. The CloudFormation stack for the Kendra resources is optional; you can deploy just the web crawler if you like. Make sure to review Kendra's pricing and free tier before deploying the Kendra part of the sample.

The AWS Cloud Development Kit (CDK) is used to define the infrastructure for this sample as code.

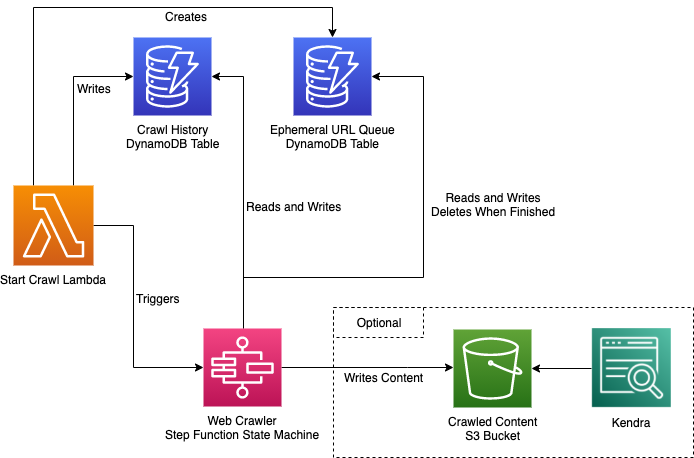

- The Start Crawl Lambda is invoked with details of the website to crawl.

- The Start Crawl Lambda creates a DynamoDB table which will be used as the URL queue for the crawl.

- The Start Crawl Lambda writes the initial URLs to the queue.

- The Start Crawl Lambda triggers an execution of the web crawler state machine (see the section below).

- The Web Crawler State Machine crawls the website, visiting URLs it discovers and optionally writing content to S3.

- Kendra provides us with the ability to search our crawled content in S3.

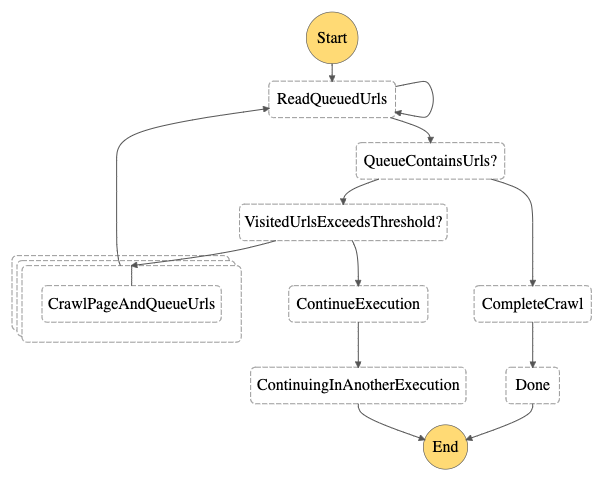

The web crawler is best explained by the AWS Step Functions State Machine diagram:

- Read Queued Urls: Reads a batch of non-visited URLs from the URL queue DynamoDB table. The default batch size is configured in `src/constructs/webcrawler/constants.ts`. You can also update the environment variable `PARALLEL_URLS_TO_SYNC` to change the batch size after the CDK stack is deployed.

- Crawl Page And Queue Urls: Visits a single webpage, extracts its content, and writes new URLs to the URL queue. This step is executed in parallel across the batch of URLs that is passed on from the `Read Queued Urls` step.

- Continue Execution: Spawns a new state machine execution as we approach the execution history limit.

- Complete Crawl: Deletes the URL queue DynamoDB table and triggers a sync of the Kendra data source if applicable.
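
The loop formed by these steps can be sketched in a few lines of TypeScript. This is a simplified, synchronous simulation and not the sample's actual code: the `UrlQueue` map stands in for the DynamoDB table, `extractLinks` is a hypothetical stand-in for the Puppeteer-based page visit, and all function names are assumptions made for illustration. The real state machine processes each batch in parallel and hands over to a new execution near the history limit; neither behaviour is modelled here.

```typescript
// Hypothetical in-memory stand-in for the URL queue table: URL -> visited flag.
type UrlQueue = Map<string, boolean>;

// Hypothetical stand-in for visiting a page: returns the links found on it.
type ExtractLinks = (url: string) => string[];

// "Read Queued Urls": take up to batchSize URLs that have not been visited yet.
function readQueuedUrls(queue: UrlQueue, batchSize: number): string[] {
  const batch: string[] = [];
  queue.forEach((visited, url) => {
    if (!visited && batch.length < batchSize) {
      batch.push(url);
    }
  });
  return batch;
}

// "Crawl Page And Queue Urls": visit one page, mark it visited,
// and queue any links that are not already in the queue.
function crawlPageAndQueueUrls(queue: UrlQueue, url: string, extractLinks: ExtractLinks): void {
  const links = extractLinks(url);
  queue.set(url, true);
  links.forEach((link) => {
    if (!queue.has(link)) {
      queue.set(link, false);
    }
  });
}

// Repeats read-batch / crawl-batch until no unvisited URLs remain,
// which corresponds to reaching "Complete Crawl".
// Returns the URLs in the order they were visited.
function crawl(startUrls: string[], batchSize: number, extractLinks: ExtractLinks): string[] {
  const queue: UrlQueue = new Map<string, boolean>();
  startUrls.forEach((url) => queue.set(url, false));

  const visitedOrder: string[] = [];
  let batch = readQueuedUrls(queue, batchSize);
  while (batch.length > 0) {
    batch.forEach((url) => {
      crawlPageAndQueueUrls(queue, url, extractLinks);
      visitedOrder.push(url);
    });
    batch = readQueuedUrls(queue, batchSize);
  }
  return visitedOrder;
}
```

Marking a URL as visited rather than removing it from the queue is what keeps the crawl from revisiting pages that link back to each other.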

- The aws-cli must be installed and configured with an AWS account with a profile (see https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-install.html for instructions on how to do this on your preferred development platform). Please ensure your profile is configured with a default AWS region.

- This project requires Node.js ≥ 16 and NPM ≥ 8.3.0. To make sure you have them available on your machine, run `npm -v && node -v`.

- Install or update the AWS CDK CLI from npm by running `npm i -g aws-cdk`. This uses CDK v2.

- Bootstrap your AWS account for CDK if you haven't done so already.

This repository provides a utility script to build and deploy the sample.

To deploy the web crawler on its own, run:

./deploy --profile <YOUR_AWS_PROFILE>

Or you can deploy the web crawler with Kendra too:

./deploy --profile <YOUR_AWS_PROFILE> --with-kendra

If you deploy with Kendra, ensure your profile is configured with one of the AWS regions that support Kendra. See the AWS Regional Services List for details.

When the infrastructure has been deployed, you can trigger a run of the crawler with the included utility script:

./crawl --profile <YOUR_AWS_PROFILE> --name lambda-docs --base-url https://docs.aws.amazon.com/ --start-paths /lambda --keywords lambda/latest/dg

You can play with the arguments above to try different websites.

- `--base-url` specifies the target website to crawl. Only URLs starting with the base URL will be queued.

- `--start-paths` specifies one or more paths in the website to start at.

- `--keywords` filters the queued URLs to only those containing one or more of the given keywords (in the example above, only URLs containing `lambda/latest/dg` are added to the queue).

- `--name` is optional, and is used to help identify which Step Functions execution or DynamoDB table corresponds to which crawl.
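
To make the effect of these arguments concrete, here is a minimal sketch of how the start URLs and the queueing filter could be derived from them. The names `CrawlOptions`, `buildStartUrls` and `shouldQueueUrl` are hypothetical and are not taken from the sample's source. The behaviour when no keywords are given (queue every URL under the base URL) is also an assumption.

```typescript
// Hypothetical shape mirroring the crawl script arguments.
interface CrawlOptions {
  baseUrl: string;      // --base-url
  startPaths: string[]; // --start-paths
  keywords: string[];   // --keywords
}

// Join each start path onto the base URL, avoiding doubled slashes.
function buildStartUrls(options: CrawlOptions): string[] {
  const base = options.baseUrl.replace(/\/+$/, "");
  return options.startPaths.map((path) => base + "/" + path.replace(/^\/+/, ""));
}

// Decide whether a discovered URL should be added to the queue.
function shouldQueueUrl(url: string, options: CrawlOptions): boolean {
  // Only URLs starting with the base URL are queued.
  if (!url.startsWith(options.baseUrl)) {
    return false;
  }
  // Assumption: with no keywords, nothing further is filtered out.
  if (options.keywords.length === 0) {
    return true;
  }
  // Otherwise the URL must contain at least one of the keywords.
  return options.keywords.some((keyword) => url.includes(keyword));
}
```

With the arguments from the example command, a URL such as `https://docs.aws.amazon.com/lambda/latest/dg/welcome.html` passes the filter, while a URL under `https://docs.aws.amazon.com/` that does not contain `lambda/latest/dg` is skipped.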

The crawl script will print a link to the AWS console so you can watch your Step Function State Machine execution in action.

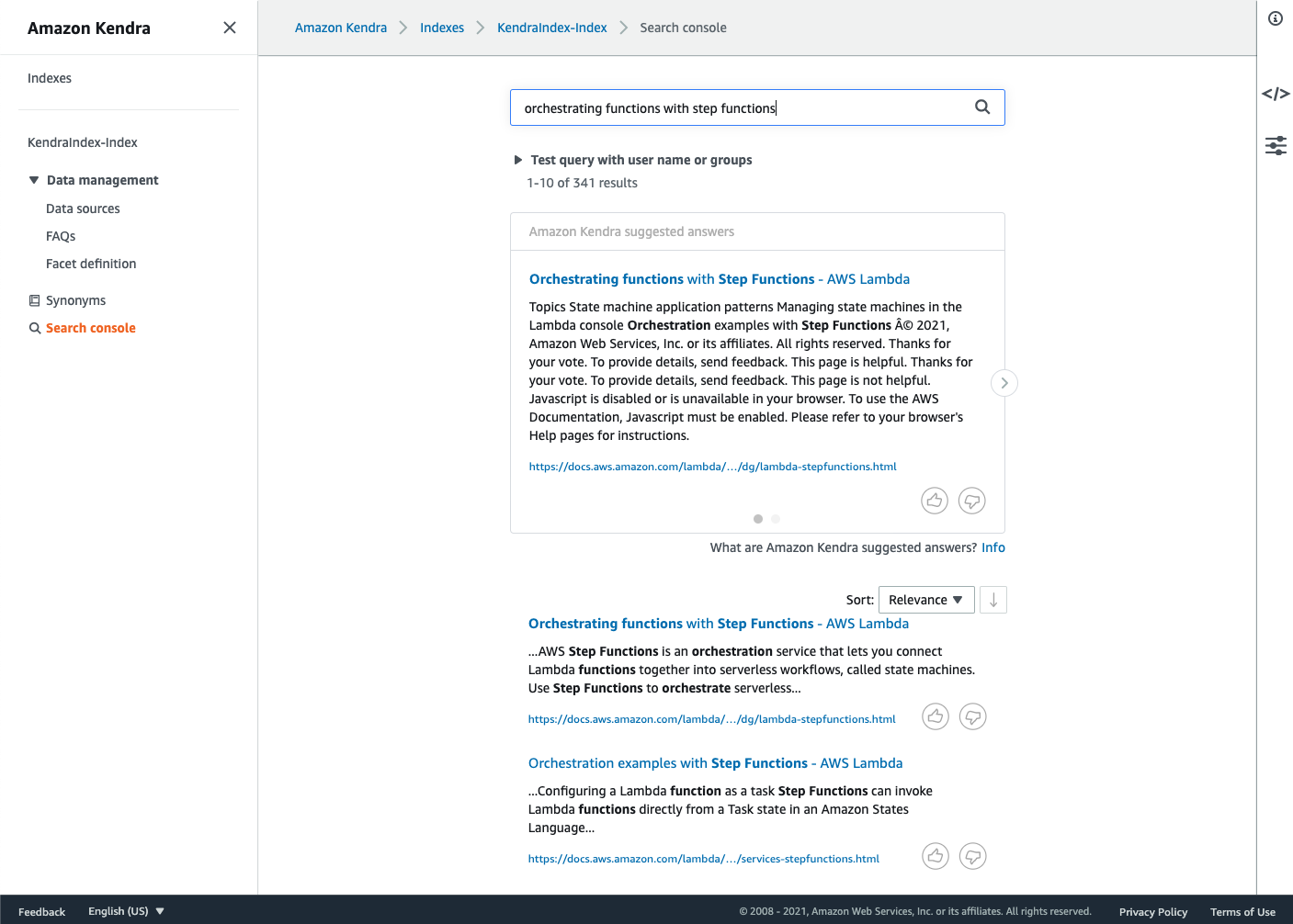

If you also deployed the Kendra stack (`--with-kendra`), you can visit the Kendra console to see an example search page for the Kendra index. The crawl script will print a link to this page if you deployed Kendra. Note that it will take a few minutes once the crawler has completed for Kendra to index the newly stored content.

If you're playing with the core crawler logic, it might be handy to test it out locally.

You can run the crawler locally with:

./local-crawl --base-url https://docs.aws.amazon.com/ --start-paths /lambda --keywords lambda/latest/dg

You can clean up all your resources when you're done via the destroy script.

If you deployed just the web crawler:

./destroy --profile <YOUR_AWS_PROFILE>

Or if you deployed the web crawler with Kendra too:

./destroy --profile <YOUR_AWS_PROFILE> --with-kendra

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.