Ruibin Li1 | Ruihuang Li1 | Song Guo2 | Lei Zhang1*

1The Hong Kong Polytechnic University, 2The Hong Kong University of Science and Technology.

In ECCV 2024

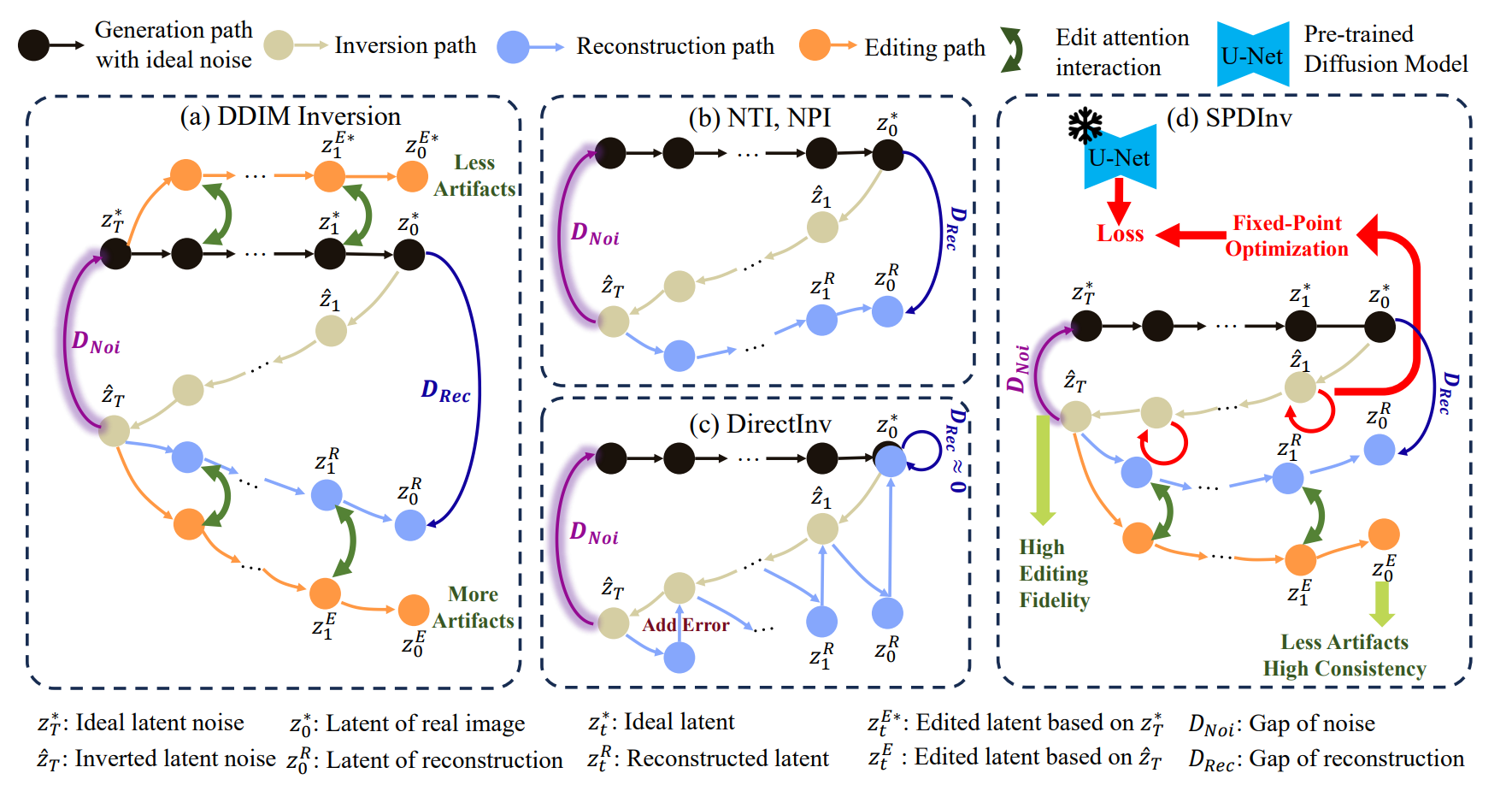

Pipelines of different inversion methods in text-driven editing. (a) DDIM inversion inverts a real image to a latent noise code, but the inverted noise code often results in a large reconstruction gap.

Clone this repository:

git clone https://github.com/leeruibin/SPDInv.git

cd SPDInv

Create an environment with Python >= 3.8:

conda env create -f environment.yaml

conda activate SPDInv

python run_SPDInv_P2P.py --input xxx --source [source prompt] --target [target prompt] --blended_word "word1 word2"

python run_SPDInv_MasaCtrl.py --input xxx --source [source prompt] --target [target prompt]
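Prompts usually contain spaces, so they are easy to mis-quote on the command line. The sketch below assembles the `run_SPDInv_P2P.py` invocation shown earlier as an argument list, using only the flags listed in this README. The helper name `build_p2p_command`, the file name `cat.png`, and the example prompts are hypothetical, and the sketch assumes the script accepts each prompt as a single argument.

```python
import shlex
from typing import List, Optional


def build_p2p_command(
    image: str,
    source: str,
    target: str,
    blended_words: Optional[List[str]] = None,
) -> List[str]:
    """Assemble the argument list for run_SPDInv_P2P.py (hypothetical helper)."""
    cmd = [
        "python", "run_SPDInv_P2P.py",
        "--input", image,
        "--source", source,   # whole prompt passed as one argument
        "--target", target,
    ]
    if blended_words:
        # The README passes blended words as one space-separated string.
        cmd += ["--blended_word", " ".join(blended_words)]
    return cmd


if __name__ == "__main__":
    # cat.png and both prompts are placeholder values for illustration.
    cmd = build_p2p_command(
        "cat.png",
        "a cat sitting on a bench",
        "a dog sitting on a bench",
        ["cat", "dog"],
    )
    # Print a shell-safe version of the command for copy and paste.
    print(shlex.join(cmd))
```

To launch the script directly instead of printing the command, the list can be passed to `subprocess.run(cmd, check=True)` from the repository root.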

To run PNP, first upgrade diffusers to 0.17.1:

pip install diffusers==0.17.1

Then run:

python run_SPDInv_PNP.py --input xxx --source [source prompt] --target [target prompt]
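Before launching the PNP script, it can help to confirm which diffusers version is active in the environment. The following is a minimal sketch using the standard-library `importlib.metadata`; the function names are hypothetical, and treating only an exact match with 0.17.1 as ready is an assumption based on the pinned `pip install` command above.

```python
from importlib import metadata
from typing import Optional

REQUIRED_DIFFUSERS = "0.17.1"  # version named in this README for PNP


def installed_diffusers_version() -> Optional[str]:
    """Return the installed diffusers version, or None if it is not installed."""
    try:
        return metadata.version("diffusers")
    except metadata.PackageNotFoundError:
        return None


def is_pnp_ready(installed: Optional[str], required: str = REQUIRED_DIFFUSERS) -> bool:
    """Assumption: PNP expects exactly the pinned version."""
    return installed == required


if __name__ == "__main__":
    found = installed_diffusers_version()
    if is_pnp_ready(found):
        print(f"diffusers {found} matches the version required for PNP")
    else:
        print(f"diffusers is {found!r}; run: pip install diffusers=={REQUIRED_DIFFUSERS}")
```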

For ELITE, first download the pre-trained global_mapper.pt checkpoint provided by ELITE and put it into the checkpoints folder.

python run_SPDInv_ELITE.py --input xxx --source [source prompt] --target [target prompt]
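A missing checkpoint is an easy mistake to make with the ELITE setup, so a quick check before running can save a failed launch. The sketch below tests for `global_mapper.pt` inside `checkpoints`; the function names are hypothetical, and it assumes the `checkpoints` folder sits at the repository root.

```python
from pathlib import Path


def elite_checkpoint_path(repo_root: str = ".") -> Path:
    """Expected checkpoint location (assumes checkpoints/ is at the repo root)."""
    return Path(repo_root) / "checkpoints" / "global_mapper.pt"


def has_elite_checkpoint(repo_root: str = ".") -> bool:
    """True if global_mapper.pt exists as a regular file in checkpoints/."""
    return elite_checkpoint_path(repo_root).is_file()


if __name__ == "__main__":
    if has_elite_checkpoint():
        print("Found", elite_checkpoint_path())
    else:
        print("Missing", elite_checkpoint_path(), "- download it from ELITE first")
```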

@inproceedings{li2024source,
  title={Source Prompt Disentangled Inversion for Boosting Image Editability with Diffusion Models},
  author={Li, Ruibin and Li, Ruihuang and Guo, Song and Zhang, Lei},
  booktitle={European Conference on Computer Vision},
  year={2024}
}

This code is built on the diffusers version of Stable Diffusion.

The code also draws heavily on Prompt-to-Prompt, Null-Text Inversion, MasaCtrl, ProxEdit, ELITE, Plug-and-Play, and DirectInversion. Thanks to all the contributors!