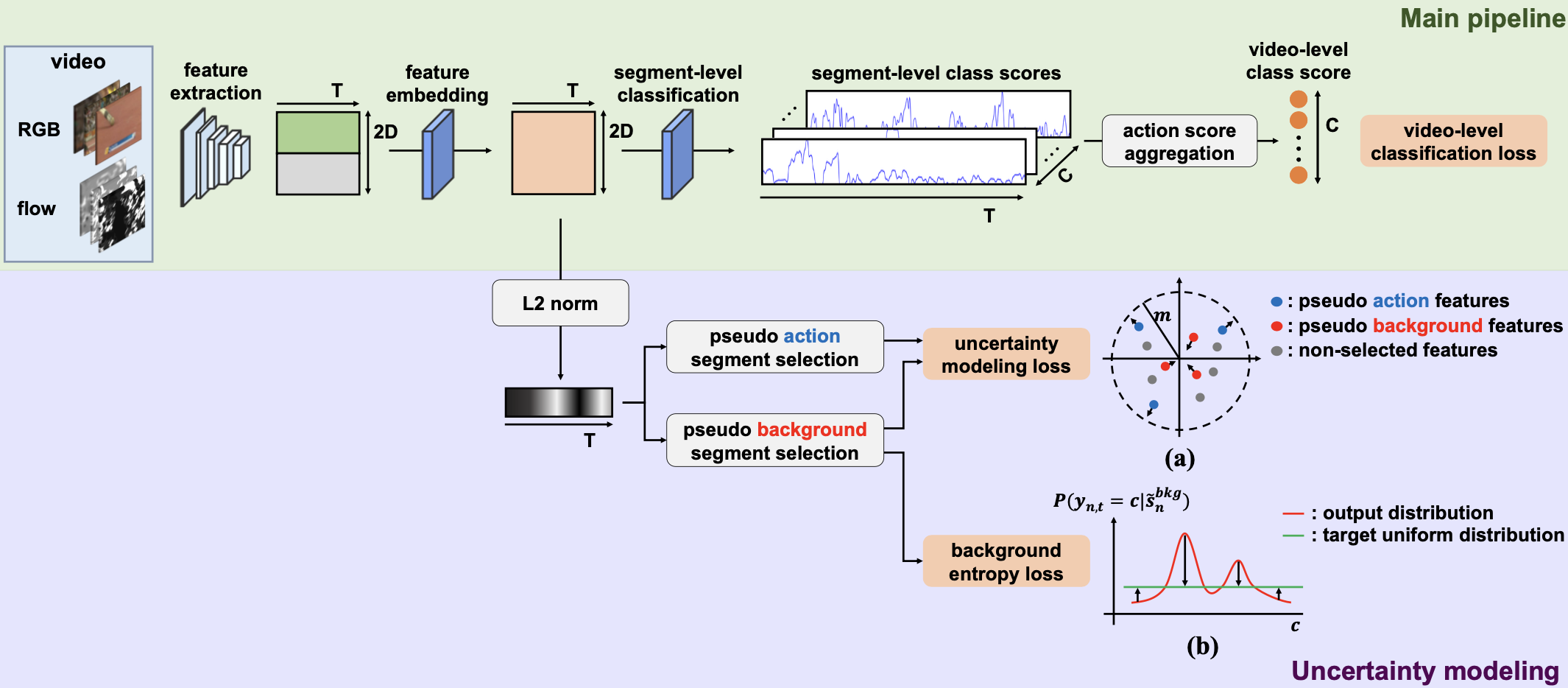

A PyTorch implementation of UM, based on the AAAI 2021 paper "Weakly-supervised Temporal Action Localization by Uncertainty Modeling".

Install the dependencies:

```bash
conda install pytorch=1.10.0 torchvision cudatoolkit=11.3 -c pytorch
pip install git+https://github.com/open-mmlab/mim.git
mim install mmaction2
```

The THUMOS 14 and ActivityNet datasets are used in this repo; you can download them from their official websites. The I3D features of THUMOS 14 can be downloaded from Google Drive, the I3D features of ActivityNet 1.2 from OneDrive, and the I3D features of ActivityNet 1.3 from Google Drive. The data directory structure is as follows:

```
thumos14
├── features
│   ├── val
│   │   ├── flow
│   │   │   ├── video_validation_0000051.npy
│   │   │   └── ...
│   │   └── rgb (same structure as flow)
│   └── test
│       ├── flow
│       │   ├── video_test_0000004.npy
│       │   └── ...
│       └── rgb (same structure as flow)
├── videos
│   ├── val
│   │   ├── video_validation_0000051.mp4
│   │   └── ...
│   └── test
│       ├── video_test_0000004.mp4
│       └── ...
└── annotations.json

activitynet
├── features_1.2
│   ├── train
│   │   ├── flow
│   │   │   ├── v___dXUJsj3yo.npy
│   │   │   └── ...
│   │   └── rgb
│   │       ├── v___dXUJsj3yo.npy
│   │       └── ...
│   └── val (same structure as train)
├── features_1.3 (same structure as features_1.2)
├── videos
│   ├── train
│   │   ├── v___c8enCfzqw.mp4
│   │   └── ...
│   └── val
│       ├── v__1vYKA7mNLI.mp4
│       └── ...
├── annotations_1.2.json
└── annotations_1.3.json
```
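A minimal sketch of reading one video's features from this layout. It assumes that each `.npy` file stores a `(T, D)` array of per-snippet I3D features, that the RGB and flow files of a video have the same number of snippets `T`, and that the two streams are concatenated along the feature axis. These are common conventions in weakly-supervised localization code but are not confirmed by this README, and `load_video_features` is a hypothetical helper, not the repo's own loader.

```python
import os

import numpy as np


def load_video_features(root, split, video_name):
    """Hypothetical helper: load and fuse the RGB and flow features of one video.

    Assumes <root>/<split>/rgb/<video_name>.npy and
    <root>/<split>/flow/<video_name>.npy each hold a (T, D) array.
    """
    rgb = np.load(os.path.join(root, split, "rgb", video_name + ".npy"))
    flow = np.load(os.path.join(root, split, "flow", video_name + ".npy"))
    # Both streams must describe the same sequence of snippets.
    if rgb.shape[0] != flow.shape[0]:
        raise ValueError(f"stream length mismatch: {rgb.shape[0]} vs {flow.shape[0]}")
    # Concatenate along the feature axis: (T, D_rgb + D_flow).
    return np.concatenate([rgb, flow], axis=-1)
```

For example, `load_video_features("thumos14/features", "val", "video_validation_0000051")` would read the two files of that video, with paths taken relative to the directory that contains `thumos14`.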

You can train and test the model by running the commands below. For other options, please refer to `utils.py`.

```bash
python train.py --data_name activitynet1.2 --num_segments 50 --seed 0 --scale 16
python test.py --data_name thumos14 --model_file result/thumos14_model.pth
```
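The `--num_segments 50` flag in the training command suggests that each video's variable-length feature sequence is reduced to a fixed number of segments. Below is a sketch of one common way to do that: split the sequence into equal-width bins and take one snippet per bin, optionally at a random position inside the bin as training-time augmentation. This is an assumption for illustration only; the repo's actual sampling logic may differ, and `sample_segment_indices` is a hypothetical name.

```python
import random


def sample_segment_indices(num_snippets, num_segments, jitter=False, rng=None):
    """Hypothetical helper: choose `num_segments` snippet indices out of `num_snippets`.

    The sequence is split into `num_segments` equal-width bins. Each bin
    contributes its first index, or a random index inside the bin when
    `jitter` is True. Short videos (fewer snippets than segments) yield
    repeated indices, so the output length is always `num_segments`.
    """
    if num_snippets <= 0 or num_segments <= 0:
        raise ValueError("num_snippets and num_segments must be positive")
    rng = rng or random.Random()
    indices = []
    for i in range(num_segments):
        lo = (i * num_snippets) // num_segments  # bin start (inclusive)
        hi = ((i + 1) * num_snippets) // num_segments  # bin end (exclusive)
        if jitter and hi > lo + 1:
            indices.append(rng.randrange(lo, hi))
        else:
            indices.append(lo)
    return indices
```

Under this scheme, the returned indices would select rows of the `(T, D)` feature array (e.g. `features[indices]` with NumPy), giving a fixed-size `(num_segments, D)` input per video.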

The models are trained on a single NVIDIA GeForce GTX 1080 Ti GPU (11 GB). All hyper-parameters use the default values from the paper.

THUMOS14 results:

| Method | mAP@0.1 | mAP@0.2 | mAP@0.3 | mAP@0.4 | mAP@0.5 | mAP@0.6 | mAP@0.7 | mAP@AVG | Download |
|---|---|---|---|---|---|---|---|---|---|
| Ours | 60.3 | 54.3 | 45.7 | 37.2 | 27.8 | 18.2 | 9.2 | 36.1 | kb79 |
| Official | 67.5 | 61.2 | 52.3 | 43.4 | 33.7 | 22.9 | 12.1 | 41.9 | - |

mAP@AVG is the average mAP over the tIoU thresholds 0.1:0.1:0.7 (i.e., 0.1, 0.2, ..., 0.7).
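As a quick check of this definition, averaging the seven per-threshold values of the "Ours" row reproduces its reported mAP@AVG of 36.1. The function name `average_map` is illustrative only.

```python
def average_map(map_by_threshold):
    """Mean of per-threshold mAP values (in %), keyed by tIoU threshold."""
    if not map_by_threshold:
        raise ValueError("need at least one threshold")
    return sum(map_by_threshold.values()) / len(map_by_threshold)


# Per-threshold mAP (%) of the "Ours" row on THUMOS14, tIoU 0.1:0.1:0.7.
ours_thumos14 = {0.1: 60.3, 0.2: 54.3, 0.3: 45.7, 0.4: 37.2, 0.5: 27.8, 0.6: 18.2, 0.7: 9.2}
print(round(average_map(ours_thumos14), 1))  # 36.1
```

The same computation on the "Official" row gives 41.9, matching the table.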

ActivityNet results (v1.2 and v1.3):

| Method | v1.2 mAP@0.5 | v1.2 mAP@0.75 | v1.2 mAP@0.95 | v1.2 mAP@AVG | v1.3 mAP@0.5 | v1.3 mAP@0.75 | v1.3 mAP@0.95 | v1.3 mAP@AVG | Download |
|---|---|---|---|---|---|---|---|---|---|
| Ours | 1.7 | 0.5 | 0.0 | 0.7 | 0.1 | 0.1 | 0.0 | 0.1 | wexe |
| Official | 41.2 | 25.6 | 6.0 | 25.9 | 37.0 | 23.9 | 5.7 | 23.7 | - |

mAP@AVG is the average mAP over the tIoU thresholds 0.5:0.05:0.95 (i.e., 0.5, 0.55, ..., 0.95).
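The `start:step:stop` notation includes both ends, so 0.5:0.05:0.95 denotes ten tIoU thresholds. A small sketch for enumerating such a grid follows; it derives each value from an integer index and rounds away floating-point noise rather than repeatedly adding 0.05, which accumulates error. The helper name `threshold_grid` is hypothetical.

```python
def threshold_grid(start, step, stop, ndigits=4):
    """Inclusive grid start:step:stop (MATLAB-style), e.g. 0.5:0.05:0.95."""
    count = int(round((stop - start) / step)) + 1
    # Compute each value from its index, then round off float noise.
    return [round(start + i * step, ndigits) for i in range(count)]


print(threshold_grid(0.5, 0.05, 0.95))
# [0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]
```

The same helper gives the seven THUMOS14 thresholds with `threshold_grid(0.1, 0.1, 0.7)`.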

This repo is built upon the WTAL-Uncertainty-Modeling repo.