This repository contains the implementation of the paper Network Pruning that Matters: A Case Study on Retraining Variants.

Duong H. Le, Binh-Son Hua (ICLR 2021)

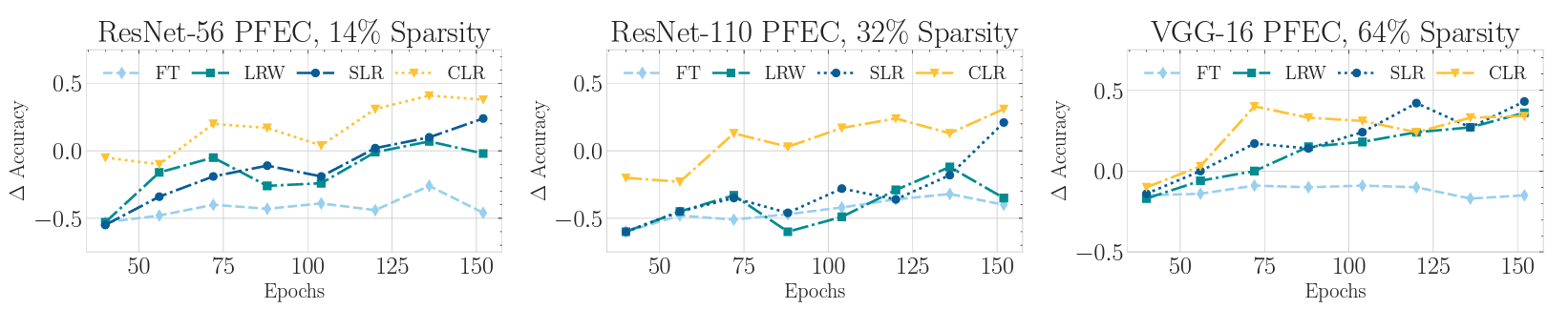

In this work, we study the behavior of pruned networks under different retraining settings. By using the right learning rate schedule during retraining, we demonstrate a counter-intuitive phenomenon: in many scenarios, randomly pruned networks can even outperform methodically pruned networks that are fine-tuned with the conventional approach. Our results emphasize the crucial role of the learning rate schedule in retraining pruned networks, a detail often overlooked by practitioners when implementing network pruning.
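The contrast between conventional fine-tuning and retraining with a re-raised learning rate, and between random and methodical filter selection, can be sketched in plain Python. This is a minimal illustration under stated assumptions: the helper names (`step_lr`, `fine_tuning_lr`, `rewinding_lr`, `cosine_restart_lr`, `l1_norm_keep`, `random_keep`), the step schedule with milestones at 50% and 75% of training, the 0.1 and 0.001 learning rates, and the cosine restart shape are all hypothetical choices. They are not the exact schedules or code used in this repository. Learning rate rewinding here means replaying the tail of the original training schedule; the L1-norm criterion stands in for a generic "methodical" pruning criterion.

```python
import math
import random

# Hypothetical helpers for illustration only; names, default values, and
# schedule shapes are assumptions, not this repository's actual code.

def step_lr(epoch, total_epochs, base_lr=0.1, gamma=0.1, milestones=(0.5, 0.75)):
    """Step decay: multiply base_lr by gamma at each milestone (fractions of total_epochs)."""
    lr = base_lr
    for frac in milestones:
        if epoch >= int(frac * total_epochs):
            lr *= gamma
    return lr

def fine_tuning_lr(epoch, final_lr=0.001):
    """Conventional fine-tuning: keep a small constant learning rate for every epoch."""
    return final_lr

def rewinding_lr(epoch, retrain_epochs, original_epochs):
    """Learning rate rewinding: replay the last `retrain_epochs` epochs of the original schedule."""
    return step_lr(original_epochs - retrain_epochs + epoch, original_epochs)

def cosine_restart_lr(epoch, retrain_epochs, max_lr=0.1):
    """Restart from a large learning rate and anneal it towards zero along a cosine curve."""
    return 0.5 * max_lr * (1 + math.cos(math.pi * epoch / retrain_epochs))

def l1_norm_keep(filter_norms, keep_ratio):
    """Methodical criterion (assumed): keep the filters with the largest L1 norms."""
    k = max(1, int(len(filter_norms) * keep_ratio))
    ranked = sorted(range(len(filter_norms)), key=lambda i: filter_norms[i], reverse=True)
    return sorted(ranked[:k])

def random_keep(num_filters, keep_ratio, seed=0):
    """Random pruning baseline: keep a uniformly random subset of filters."""
    k = max(1, int(num_filters * keep_ratio))
    return sorted(random.Random(seed).sample(range(num_filters), k))

if __name__ == "__main__":
    retrain, original = 80, 160  # assumed retraining and original training budgets (epochs)
    for epoch in (0, 40, 79):
        print(epoch,
              fine_tuning_lr(epoch),
              round(rewinding_lr(epoch, retrain, original), 4),
              round(cosine_restart_lr(epoch, retrain), 4))
    print(l1_norm_keep([0.5, 2.0, 1.0, 0.1], keep_ratio=0.5))  # [1, 2]
    print(random_keep(10, keep_ratio=0.3))
```

The point of the comparison is that the two "restarting" schedules begin retraining at a much larger learning rate than fine-tuning does, which is the kind of difference the paper argues matters more than how the pruned filters were chosen.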

If you find the paper/code helpful, please cite our paper:

    @inproceedings{le2021network,
      title={Network Pruning That Matters: A Case Study on Retraining Variants},
      author={Duong Hoang Le and Binh-Son Hua},
      booktitle={International Conference on Learning Representations},
      year={2021},
      url={https://openreview.net/forum?id=Cb54AMqHQFP}
    }

To run the code:

- Copy the ImageNet/CIFAR-10 dataset to the `./data` folder.
- Run `init.sh`.
- Download the checkpoints here, then uncompress them here.
- Run the desired script in each subfolder.

Our implementation is based on the official code of HRank, Taylor Pruning, Soft Filter Pruning, and Rethinking.