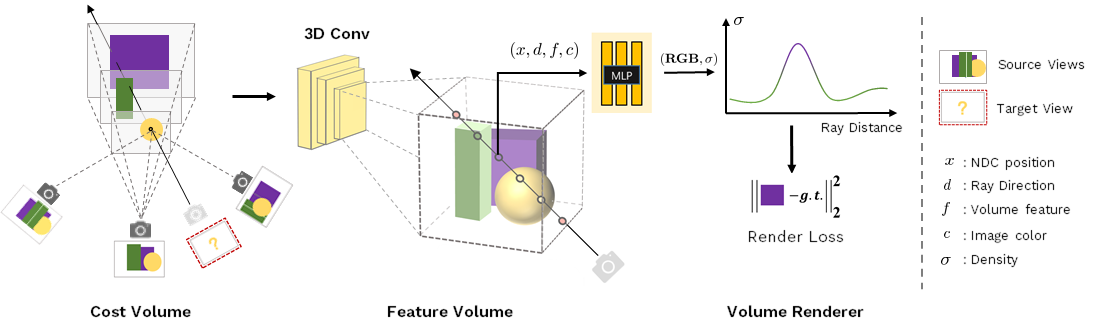

This repository contains a PyTorch Lightning implementation of the ICCV 2021 paper MVSNeRF: Fast Generalizable Radiance Field Reconstruction from Multi-View Stereo. Our work presents a novel neural rendering approach that can efficiently reconstruct geometric and neural radiance fields for view synthesis. Moreover, if dense images are captured, our estimated radiance field representation can be easily fine-tuned; this leads to fast per-scene reconstruction.

Install environment:

conda create -n mvsnerf python=3.8

conda activate mvsnerf

pip install torch==1.10.1+cu113 torchvision==0.11.2+cu113 torchaudio==0.10.1+cu113 -f https://download.pytorch.org/whl/cu113/torch_stable.html

pip install pytorch-lightning==1.3.5 imageio pillow scikit-image opencv-python configargparse lpips kornia warmup_scheduler matplotlib test-tube imageio-ffmpeg
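After installing, a quick import check can confirm that the packages resolved. The sketch below is not part of the repository; it assumes the usual import names for the pip packages listed above (for example, `pillow` imports as `PIL` and `opencv-python` as `cv2`), and the helper name `missing_modules` is hypothetical.

```python
import importlib.util

# Import names assumed for the pip packages listed in the install step.
REQUIRED_MODULES = [
    "torch", "torchvision", "torchaudio", "pytorch_lightning",
    "imageio", "PIL", "skimage", "cv2", "configargparse", "lpips",
    "kornia", "warmup_scheduler", "matplotlib", "test_tube",
    "imageio_ffmpeg",
]


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

Run it inside the activated `mvsnerf` environment; any name it reports points at a package that still needs to be installed.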

Please see each subsection for training on different datasets. Available training datasets:

- DTU

- Blender (Realistic Synthetic)

- LLFF (Real Forward-Facing)

- Your own data (images/intrinsic/extrinsic/near-far bounds)
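For your own data, each view needs the four pieces named in the last item. The following is only an illustrative sketch of such a record, not the repository's loader format: the class name `ViewRecord`, its field names, and the 3x3 / 4x4 matrix shapes are assumptions based on common pinhole-camera conventions.

```python
from dataclasses import dataclass
from typing import List

Matrix = List[List[float]]


def _has_shape(matrix, rows, cols):
    """Check that a nested list has the given number of rows and columns."""
    return len(matrix) == rows and all(len(row) == cols for row in matrix)


@dataclass
class ViewRecord:
    """Hypothetical per-view record; not the repository's data format."""
    image_path: str
    intrinsic: Matrix  # assumed 3x3 camera matrix
    extrinsic: Matrix  # assumed 4x4 camera pose matrix
    near: float        # near depth bound
    far: float         # far depth bound

    def validate(self):
        """Raise ValueError if shapes or depth bounds are inconsistent."""
        if not _has_shape(self.intrinsic, 3, 3):
            raise ValueError("intrinsic must be 3x3")
        if not _has_shape(self.extrinsic, 4, 4):
            raise ValueError("extrinsic must be 4x4")
        if not 0 < self.near < self.far:
            raise ValueError("expected 0 < near < far")
```

Checking each view this way before training can catch swapped near/far values or transposed matrices early.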

Download the preprocessed DTU training data and Depth_raw from the original MVSNet repo and unzip them. We provide a DTU example; please follow the example's folder structure.

Run

CUDA_VISIBLE_DEVICES=$cuda python train_mvs_nerf_pl.py \
    --expname $exp_name \
    --num_epochs 6 \
    --use_viewdirs \
    --dataset_name dtu \
    --datadir $DTU_DIR
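The same run can also be assembled from Python, which sidesteps shell line-continuation mistakes. This is a minimal sketch using only the flags shown in the command; the helper names `build_train_command` and `build_env` are hypothetical and not part of the repository.

```python
import os
import shlex


def build_train_command(exp_name, dtu_dir, num_epochs=6):
    """Return the argument list for the DTU training run."""
    return [
        "python", "train_mvs_nerf_pl.py",
        "--expname", exp_name,
        "--num_epochs", str(num_epochs),
        "--use_viewdirs",
        "--dataset_name", "dtu",
        "--datadir", dtu_dir,
    ]


def build_env(cuda_device):
    """Copy the current environment and pin the visible GPU."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(cuda_device)
    return env


if __name__ == "__main__":
    cmd = build_train_command("my_experiment", "path/to/dtu/data")
    # Print the command for inspection instead of launching it.
    print(shlex.join(cmd))
    # To launch: subprocess.run(cmd, env=build_env(0), check=True)
```

Passing the arguments as a list means each flag and value stays a separate token, so paths containing spaces need no extra quoting.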

For more options, refer to opt.py. Training command example:

CUDA_VISIBLE_DEVICES=0 python train_mvs_nerf_pl.py \
    --with_depth --imgScale_test 1.0 \
    --expname mvs-nerf-is-all-your-need \
    --num_epochs 6 --N_samples 128 --use_viewdirs --batch_size 1024 \
    --dataset_name dtu \
    --datadir path/to/dtu/data \
    --N_vis 6

You may need to add --with_depth if you want to quantify depth during training. --N_vis denotes the validation frequency, and --imgScale_test is the downsample ratio used during validation (for example, 0.5). Training takes about 30 hours on a single RTX 2080 Ti for 6 epochs.
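As a rough worked example of these numbers: 30 hours over 6 epochs is about 5 hours per epoch, and a ratio of 0.5 halves each image dimension. The sketch below assumes the ratio multiplies both width and height and rounds to the nearest pixel; the repository's exact rounding is not stated here, and the 640x512 resolution is only an illustrative input.

```python
def scaled_size(width, height, scale):
    """Apply a downsample ratio to both image dimensions (assumed behavior)."""
    return int(round(width * scale)), int(round(height * scale))


def hours_per_epoch(total_hours, num_epochs):
    """Average training time per epoch."""
    return total_hours / num_epochs


if __name__ == "__main__":
    print(scaled_size(640, 512, 0.5))   # (320, 256)
    print(hours_per_epoch(30, 6))       # 5.0
```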

Important: always set batch_size to 1 when training a generalizable model; you can increase it when fine-tuning.

Checkpoint: a pre-trained checkpoint is included in ckpts/mvsnerf-v0.tar.

Evaluation: We also provide a rendering and quantitative evaluation script in renderer.ipynb, and you can use run_batch.py if you want to test or fine-tune on different datasets. More results can be found here. Please check your configuration if your rendering results look abnormal.

Rendering from the trained model should produce results like this:

CUDA_VISIBLE_DEVICES=0 python train_mvs_nerf_finetuning_pl.py --dataset_name blender --datadir objaverse/0d251b65dfa04e3e88cd8774b77c3a27 --expname objaverse --with_rgb_loss --batch_size 1024 --num_epochs 1 --imgScale_test 1.0 --white_bkgd --pad 0 --ckpt ./ckpts/mvsnerf-v0.tar --N_vis 1