A fork of so-vits-svc with realtime support and a greatly improved interface. Based on branch 4.0 (v1); models are compatible.

Windows:

py -3.10 -m venv venv

venv\Scripts\activate

Linux/MacOS:

python3.10 -m venv venv

source venv/bin/activate

Anaconda:

conda create -n so-vits-svc-fork python=3.10 pip

conda activate so-vits-svc-fork

Install this via pip (or your favourite package manager that uses pip):

pip install -U torch torchaudio --index-url https://download.pytorch.org/whl/cu117

pip install -U so-vits-svc-fork

Please update this package regularly to get the latest features and bug fixes.

pip install -U so-vits-svc-fork

Features:

- Realtime voice conversion (enhanced in v1.1.0)

- More accurate pitch estimation using CREPE

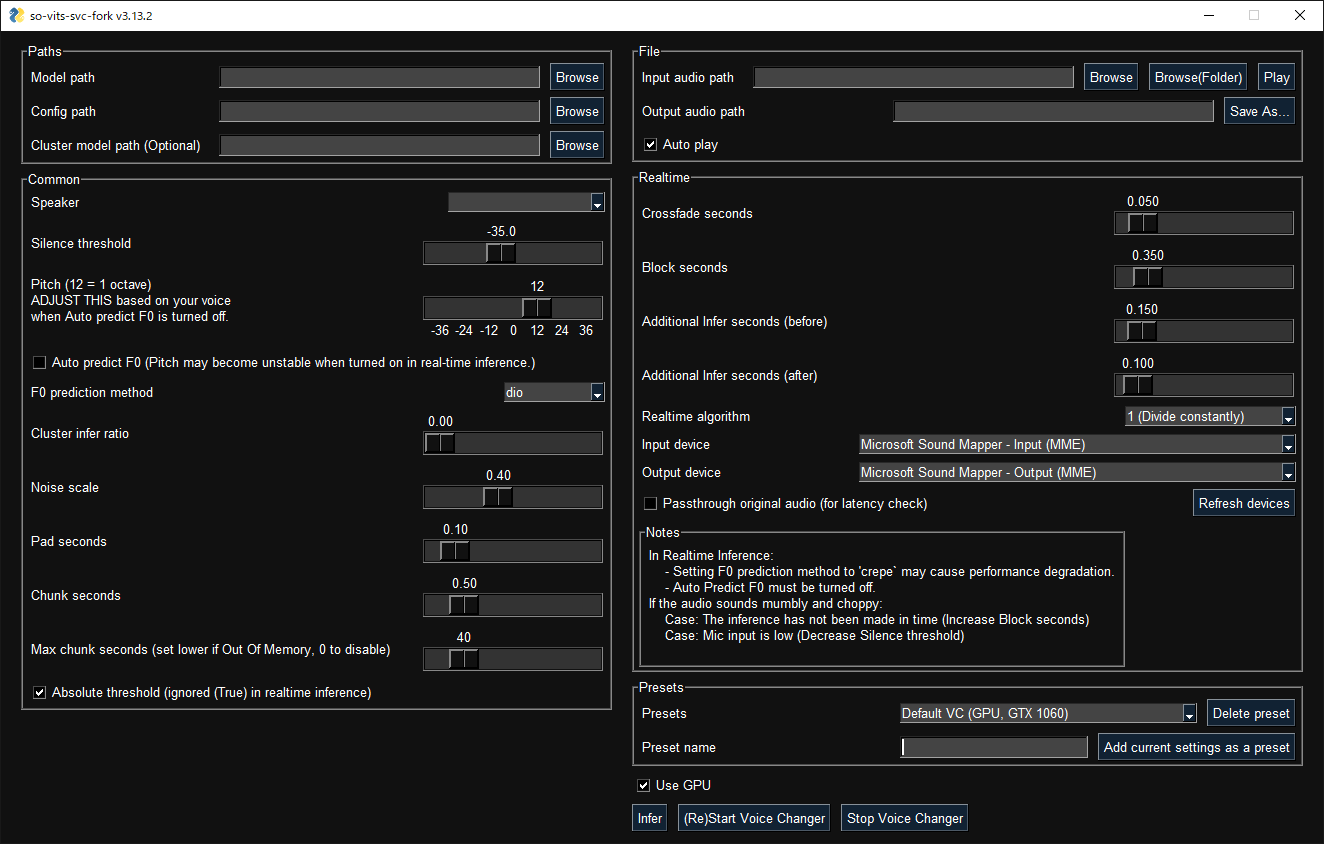

- GUI available

- Unified command-line interface (no need to run Python scripts)

- Ready to use just by installing with pip.

- Automatically downloads the pretrained base model and the HuBERT model

- Code completely formatted with black, isort, autoflake etc.

- Volume normalization in preprocessing

- Other minor differences

GUI launches with the following command:

svcg

CLI:

- Realtime (from microphone)

svc vc --model-path <model-path>

- File

svc infer --model-path <model-path> source.wav

Pretrained models are available on HuggingFace.
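To convert a whole folder of files, the single-file `svc infer --model-path <model-path> <file>` command can be wrapped in a loop. The sketch below is an illustration, not part of so-vits-svc-fork: the helper names (`latest_generator`, `build_infer_commands`, `run_all`, `convert_folder`) are hypothetical, and only the `infer` subcommand and `--model-path` option come from this README. `latest_generator` assumes the `logs/44k/G_XXXX.pth` naming given in the `svc -h` output and picks the checkpoint with the highest step number. When that layout is followed, the CLI already loads the latest model on its own, so passing the path explicitly is optional.

```python
from __future__ import annotations

import re
import subprocess
from pathlib import Path

# Assumed checkpoint naming from the `svc -h` output: G_<step>.pth
_GEN_PATTERN = re.compile(r"^G_(\d+)\.pth$")


def latest_generator(logs_dir: Path) -> Path | None:
    """Return the G_<step>.pth with the highest numeric step, or None."""
    best: tuple[int, Path] | None = None
    for path in logs_dir.glob("G_*.pth"):
        match = _GEN_PATTERN.match(path.name)
        if match is None:  # skip names like G_latest.pth
            continue
        step = int(match.group(1))
        if best is None or step > best[0]:
            best = (step, path)
    return None if best is None else best[1]


def build_infer_commands(model_path: Path, sources: list[Path]) -> list[list[str]]:
    """Build one `svc infer --model-path <model> <file>` command per source file."""
    return [
        ["svc", "infer", "--model-path", str(model_path), str(src)]
        for src in sorted(sources)
    ]


def run_all(commands: list[list[str]]) -> None:
    """Run the commands in order; check=True stops at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


def convert_folder(logs_dir: Path, input_dir: Path) -> None:
    """Convert every .wav in input_dir with the newest generator in logs_dir."""
    model = latest_generator(logs_dir)
    if model is None:
        raise FileNotFoundError(f"no G_<step>.pth found in {logs_dir}")
    run_all(build_infer_commands(model, list(input_dir.glob("*.wav"))))
```

For example, `convert_folder(Path("logs/44k"), Path("input"))` would convert every `.wav` in a hypothetical `input` folder. The step number is compared as an integer on purpose: a plain string sort would rank `G_800.pth` above `G_1000.pth`.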

- If using WSL, note that WSL requires additional setup to handle audio; the GUI will not work if no audio device is found.

- In realtime inference, if the input contains noise, the HuBERT model will react to it as well. In this case, consider using a realtime noise reduction application such as RTX Voice.

Place your dataset as dataset_raw/{speaker_id}/**/{wav_file}.{any_format} (subfolders are acceptable) and run:

svc pre-resample

svc pre-config

svc pre-hubert

svc train

- Each audio file in the dataset should be shorter than about 10 s, or VRAM will run out.

- It is recommended to change the batch_size in config.json before running the train command to match your VRAM capacity. As tested, the default requires about 14 GB.
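Both recommendations can be checked before training. The sketch below is illustrative and not part of so-vits-svc-fork; the function names are hypothetical. `find_long_files` uses Python's standard `wave` module, so it only inspects uncompressed `.wav` files (other formats accepted by the dataset layout are not checked), and the 10 s limit mirrors the guideline on clip length. `set_batch_size` assumes the generated config keeps the value under a `train` section as `train.batch_size` and lives at `configs/44k/config.json` (the path named in the `svc -h` output); check your own file before relying on that key layout.

```python
from __future__ import annotations

import json
import wave
from pathlib import Path

MAX_SECONDS = 10.0  # guideline from this README: keep clips under ~10 s


def wav_duration(path: Path) -> float:
    """Duration of a PCM .wav file in seconds, read from its header."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def find_long_files(dataset_dir: Path, limit: float = MAX_SECONDS) -> list[Path]:
    """List .wav files under dataset_dir (any subfolder depth) longer than limit."""
    return sorted(
        path for path in dataset_dir.rglob("*.wav") if wav_duration(path) > limit
    )


def set_batch_size(config_path: Path, batch_size: int) -> None:
    """Rewrite train.batch_size in config.json, keeping all other settings."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    # Assumption: the config is shaped like {"train": {"batch_size": ...}, ...}
    config["train"]["batch_size"] = batch_size
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
```

A possible workflow is to run `find_long_files(Path("dataset_raw"))` before `svc pre-resample` and split or trim anything it reports, then call `set_batch_size(Path("configs/44k/config.json"), 4)` after `svc pre-config` has generated the config. The value 4 is only an example; pick whatever fits your GPU.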

For more details, run svc -h or svc <subcommand> -h.

> svc -h

Usage: svc [OPTIONS] COMMAND [ARGS]...

so-vits-svc allows any folder structure for training data.

However, the following folder structure is recommended.

When training: dataset_raw/{speaker_name}/{wav_name}.wav

When inference: configs/44k/config.json, logs/44k/G_XXXX.pth

If the folder structure is followed, you DO NOT NEED TO SPECIFY model path, config path, etc.

(The latest model will be automatically loaded.)

To train a model, run pre-resample, pre-config, pre-hubert, train.

To infer a model, run infer.

Options:

-h, --help Show this message and exit.

Commands:

clean Clean up files, only useful if you are using the default file structure

infer Inference

onnx Export model to onnx

pre-config Preprocessing part 2: config

pre-hubert Preprocessing part 3: hubert If the HuBERT model is not found, it will be...

pre-resample Preprocessing part 1: resample

train Train model If D_0.pth or G_0.pth not found, automatically download from hub.

train-cluster Train k-means clustering

vc Realtime inference from microphone

Thanks go to these wonderful people (emoji key):

34j 💻 🤔 📖 💡 🚇 🚧 👀

GarrettConway 💻 🐛 📖

BlueAmulet 🤔 💬

ThrowawayAccount01 🐛

This project follows the all-contributors specification. Contributions of any kind welcome!