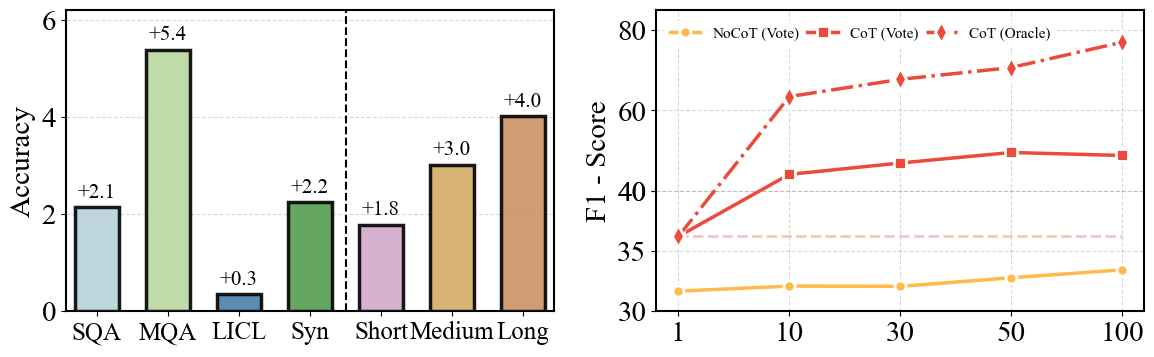

LongRePS tackles quality bottlenecks in CoT reasoning for extended contexts by integrating process supervision. As shown in the figure, we find that chain-of-thought prompting consistently improves model performance in complex task scenarios. We also find that while vanilla CoT improves with context length, self-sampled reasoning paths exhibit significant inconsistency and hallucination risks, especially in multi-hop QA and other complex scenarios.

The framework operates in two phases: (1) Self-sampling generates diverse CoT candidates to capture reasoning variability, and (2) Context-aware assessment enforces answer correctness, grounding via text matching, and intrinsic consistency via LLM-based scoring.
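The assessment phase can be pictured as a chain of three filters. The sketch below is illustrative only and is not the repository's implementation: the `Candidate` structure, the exact-match answer check, the substring-based grounding check, the `score_fn` callback standing in for an LLM judge, and the `min_score` threshold are all assumptions made for this example.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Candidate:
    """One self-sampled reasoning path (hypothetical structure)."""
    reasoning: str                                      # chain-of-thought text
    answer: str                                         # final answer extracted from the sample
    excerpts: List[str] = field(default_factory=list)   # passages the path claims to quote


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so matching ignores formatting."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def is_grounded(candidate: Candidate, context: str) -> bool:
    """Text-matching check: every quoted excerpt must occur in the context.

    A candidate that quotes nothing passes vacuously.
    """
    ctx = normalize(context)
    return all(normalize(e) in ctx for e in candidate.excerpts)


def select_paths(
    candidates: List[Candidate],
    context: str,
    gold_answer: str,
    score_fn: Callable[[str], float],
    min_score: float = 0.5,
) -> List[Candidate]:
    """Keep candidates that pass all three checks, in order of cost."""
    kept = []
    for cand in candidates:
        if normalize(cand.answer) != normalize(gold_answer):
            continue  # answer correctness (exact match is an assumption)
        if not is_grounded(cand, context):
            continue  # grounding via text matching
        if score_fn(cand.reasoning) < min_score:
            continue  # intrinsic consistency, scored by an external judge
        kept.append(cand)
    return kept


context = "Marie Curie was born in Warsaw. Warsaw is the capital of Poland."
candidates = [
    Candidate("Curie was born in Warsaw, the capital of Poland.", "Poland",
              ["Marie Curie was born in Warsaw"]),
    Candidate("Curie was born in Paris, so the answer is France.", "France",
              ["Marie Curie was born in Paris"]),
    Candidate("The text says she was born in Krakow, in Poland.", "Poland",
              ["Marie Curie was born in Krakow"]),
]
# Stand-in scorer: a real setup would query an LLM judge here.
kept = select_paths(candidates, context, "Poland", score_fn=lambda r: 1.0)
print([c.reasoning for c in kept])
```

Running the cheap string checks before the scorer means the expensive LLM call is only made for candidates that are already correct and grounded.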

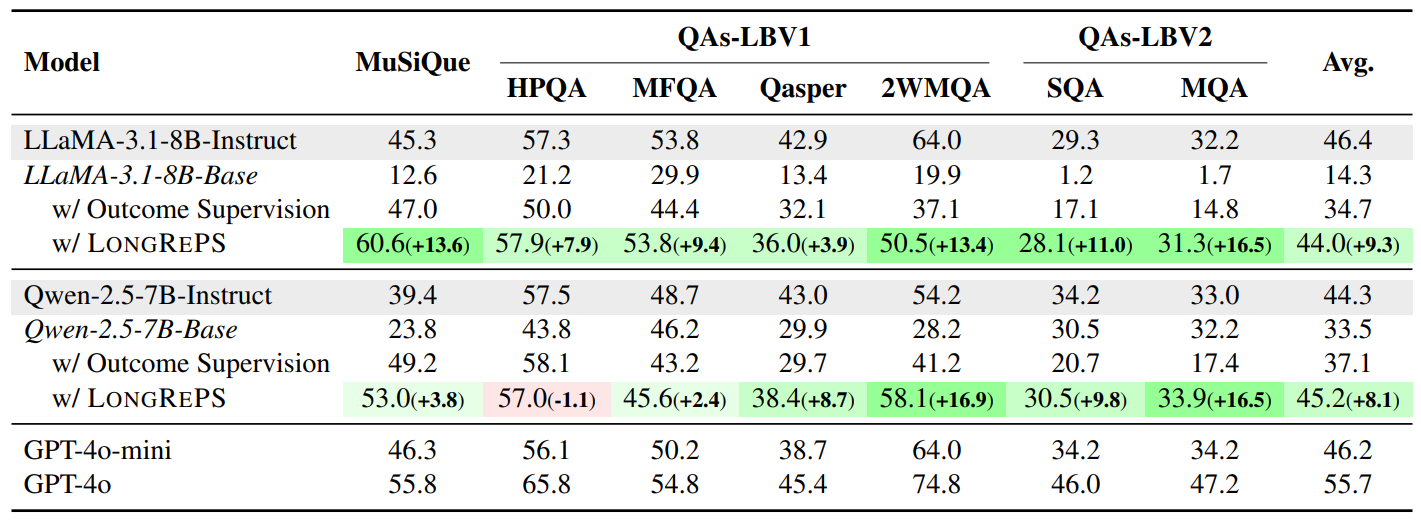

Evaluations on long-context tasks show that LongRePS achieves gains of 13.6 and 3.8 points on MuSiQue for LLaMA and Qwen respectively, remains robust across tasks, and outperforms outcome supervision. The results validate process supervision as pivotal for scalable long-context reasoning, and the open-source code enables community adoption.

[2025/03/03] Released training and evaluation data for LongRePS. The model parameters and complete code will be available soon.

- 🔨 Requirements

- ⚙️ How to Prepare Data for Training

- 🖥️ How to Prepare Data for Evaluating

- 🍧 Training

- 📊 Evaluation

- 📄 Acknowledgement

Install LLaMA-Factory

Please refer to this tutorial for installation, or use the following commands:

```bash
git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git
cd LLaMA-Factory
pip install -e ".[torch,metrics]"
```

Install Other Supporting Libraries

```bash
cd ..
git clone https://github.com/lemon-prog123/LongRePS.git
cd LongRePS
pip install -r requirements.txt
```

Llama-3.1-8B:

```python
from datasets import load_dataset
import jsonlines

model = "Llama-3.1-8B"
dataset = load_dataset("Lemon123prog/Llama-3.1-8B-LongRePS")

# Each split becomes a list of dicts, one per training example.
warmup_data = dataset['warmup'].to_list()
orm_data = dataset['train_orm'].to_list()
prm_data = dataset['train_prm'].to_list()

# Write one JSONL file per split into the data directory.
with jsonlines.open(f"data/{model}_warmup.jsonl", 'w') as writer:
    writer.write_all(warmup_data)
with jsonlines.open(f"data/{model}_orm.jsonl", 'w') as writer:
    writer.write_all(orm_data)
with jsonlines.open(f"data/{model}_prm.jsonl", 'w') as writer:
    writer.write_all(prm_data)
```

Qwen-2.5-7B:

```python
from datasets import load_dataset
import jsonlines

model = "Qwen-2.5-7B"
dataset = load_dataset("Lemon123prog/Qwen-2.5-7B-LongRePS")

# Each split becomes a list of dicts, one per training example.
warmup_data = dataset['warmup'].to_list()
orm_data = dataset['train_orm'].to_list()
prm_data = dataset['train_prm'].to_list()

# Write one JSONL file per split into the data directory.
with jsonlines.open(f"data/{model}_warmup.jsonl", 'w') as writer:
    writer.write_all(warmup_data)
with jsonlines.open(f"data/{model}_orm.jsonl", 'w') as writer:
    writer.write_all(orm_data)
with jsonlines.open(f"data/{model}_prm.jsonl", 'w') as writer:
    writer.write_all(prm_data)
```

Or you can simply run the preprocessing script:

```bash
python preprocess_train.py
```

To prepare the evaluation data, run:

```bash
bash scripts/preprocess_lb.sh
```

Then you will obtain the processed evaluation data in the dataset directory.

```python
from huggingface_hub import snapshot_download
from pathlib import Path

# Download Qwen2.5-7B into <root_dir>/Qwen/Qwen2.5-7B
repo_id = "Qwen/Qwen2.5-7B"
root_dir = Path("Your own path for Qwen")
snapshot_download(repo_id=repo_id, local_dir=root_dir/repo_id, repo_type="model")

# Download Llama-3.1-8B into <root_dir>/meta-llama/Llama-3.1-8B
repo_id = "meta-llama/Llama-3.1-8B"
root_dir = Path("Your own path for Llama")
snapshot_download(repo_id=repo_id, local_dir=root_dir/repo_id, repo_type="model")
```

Set Model_Path in the scripts before training.

Llama-3.1-8B

```bash
bash scripts/llama_warmup.sh
```

Qwen-2.5-7B

```bash
bash scripts/qwen_warmup.sh
```

Set Model-Name & Model-Path & File-Name in the scripts before sampling.

```bash
cd evaltoolkits
bash loop_sample.sh
```

After the sampling process, you can use filter_data.py to launch the filtering framework.

```bash
cd evaltoolkits
python filter_data.py \
    --path_to_src_file [Sampling Data] \
    --path_to_stage1_file [Output Data Path]
```

To make the filtered dataset available for training, add it to the file list in dataset_info.json.
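A minimal sketch of registering the filtered file, assuming dataset_info.json is a JSON object that maps each dataset name to an entry with a `file_name` field, as in LLaMA-Factory's data directory. The helper name `register_dataset` is hypothetical, and depending on the data format the entry may also need a column mapping, which is not shown here.

```python
import json
from pathlib import Path


def register_dataset(info_path: str, name: str, file_name: str) -> dict:
    """Add or overwrite one dataset entry and write the file back.

    Assumes the file holds a single JSON object keyed by dataset name.
    """
    path = Path(info_path)
    # Start from the existing registry if there is one, else from an empty one.
    info = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    info[name] = {"file_name": file_name}
    path.write_text(json.dumps(info, indent=2, ensure_ascii=False), encoding="utf-8")
    return info
```

For example, `register_dataset("data/dataset_info.json", "my_filtered_set", "my_filtered_set.jsonl")` would add one entry, where both the dataset name and the file name are placeholders to replace with your own.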

Finally, after setting the warm-up model path and dataset_name in the scripts, you can launch the fine-tuning process.

Llama-3.1-8B

```bash
bash scripts/llama_sft.sh
```

Qwen-2.5-7B

```bash
bash scripts/qwen_sft.sh
```

LongBench v1

```bash
cd evaltoolkits
bash launch_lbv1.sh
```

LongBench v2

```bash
cd evaltoolkits
bash launch_lbv2.sh
```

Note: Set model_path and mode to the desired target model.

```bibtex
@article{zhu2025chain,
  title={Chain-of-Thought Matters: Improving Long-Context Language Models with Reasoning Path Supervision},
  author={Zhu, Dawei and Wei, Xiyu and Zhao, Guangxiang and Wu, Wenhao and Zou, Haosheng and Ran, Junfeng and Wang, Xun and Sun, Lin and Zhang, Xiangzheng and Li, Sujian},
  journal={arXiv preprint arXiv:2502.20790},
  year={2025}
}
```

We are deeply thankful for the following projects that serve as the foundation for LongRePS: