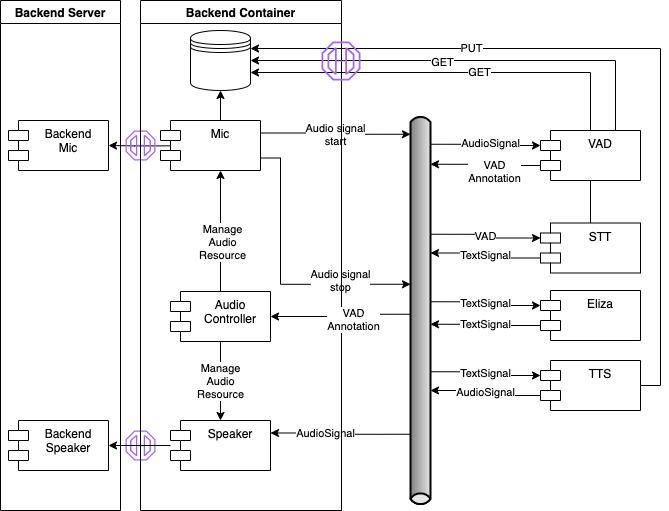

Leolani implementation using the combot framework.

This application also serves as a blueprint for applications in the combot framework.

The application is composed of the following components:

- Backend Server

- Backend Container

- Voice Activity Detection (VAD)

- Automatic Speech Recognition (ASR)

- Leolani module

- Text To Speech (TTS)

- Chat UI

You will find more details on the components in the dedicated README.

The event payloads used to communicate between the individual modules follow the EMISSOR framework. To be continued...

The application is set up to run on multiple runtime systems.

The simplest is a local Python installation. This uses Python packages built for each module of the application and has a main application script that configures and starts the modules from Python code.

The advantage is that communication between the modules can happen directly within the application, without the need to set up external infrastructure components such as a messaging bus. Debugging can also be easier, as everything runs in a single process.

The disadvantage is that this limits the application in two ways: first, all modules must be written in Python, and second, all modules must have compatible requirements (Python version, package versions, hardware requirements, etc.). As desirable as the latter is, it is not always possible to fulfill.
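To illustrate the single-process setup described above, here is a minimal sketch of a main script that wires modules together through an in-memory event bus. The `EventBus` class, the topic names, and the stand-in handlers are all hypothetical and chosen for illustration; they are not the combot API, which is documented in the cltl-combot project.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class EventBus:
    """Hypothetical in-memory event bus (illustration only, not the combot API)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> None:
        # Handlers run synchronously in the caller's thread, in subscription order.
        for handler in list(self._handlers[topic]):
            handler(payload)


def run_demo() -> List[str]:
    """Wire three stand-in modules together and push one event through them."""
    bus = EventBus()
    spoken: List[str] = []

    # Stand-in for ASR: turns an "audio" event into a text event.
    bus.subscribe("audio", lambda audio: bus.publish("text_in", audio.decode("utf-8")))
    # Stand-in for the Leolani module: produces a reply to the text.
    bus.subscribe("text_in", lambda text: bus.publish("text_out", f"You said: {text}"))
    # Stand-in for TTS: collects the replies instead of speaking them.
    bus.subscribe("text_out", spoken.append)

    bus.publish("audio", b"hello")
    return spoken


if __name__ == "__main__":
    print(run_demo())
```

Because every handler is a plain Python callable in the same process, no external broker is needed and a debugger can step from one module into the next. The trade-off is the one named above: every module has to be importable into the same Python environment.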

The local Python application is set up in the py-app/ folder and has the following structure:

    py-app
    ├── app.py
    ├── requirements.txt
    ├── config
    │   ├── default.config
    │   └── logging.config
    └── storage
        ├── audio
        └── video
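As a rough illustration of how a script like app.py could pick up the files in config/, the sketch below loads them with the Python standard library. It assumes that default.config is INI-style and that logging.config follows the `logging.config.fileConfig` format; this README confirms neither, and the `load_config` helper is hypothetical.

```python
import configparser
import logging.config
from pathlib import Path


def load_config(config_dir: Path) -> configparser.ConfigParser:
    """Load application and logging settings (assumed INI-style files)."""
    parser = configparser.ConfigParser()
    # read() silently skips files that do not exist.
    parser.read([config_dir / "default.config"])

    log_conf = config_dir / "logging.config"
    if log_conf.is_file():
        # Assumes the file uses the fileConfig format of the standard library.
        logging.config.fileConfig(str(log_conf), disable_existing_loggers=False)
    return parser
```

With such a helper, the main script could call `load_config(Path("config"))` once at startup and pass the resulting values to each module it creates.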

The entry point of the application is the app.py script. After running make build from the leolani-mmai-parent, it can be run from the py-app/ directory via

    source venv/bin/activate
    python app.py

The Python application provides a Chat UI and monitoring pages through a web browser.

Please look at the dedicated README for instructions on how to run the application in Docker.

For the development workflow see the cltl-combot project.