🌈 Implementation of Named Entity Recognition using Python.

nerpy实现了BertSoftmax、BertCrf、BertSpan等多种命名实体识别模型,并在标准数据集上比较了各模型的效果。

Guide

- BertSoftmax:BertSoftmax基于BERT预训练模型实现实体识别,本项目基于PyTorch实现了BertSoftmax模型的训练和预测

- BertSpan:BertSpan基于BERT训练span边界的表示,模型结构更适配实体边界识别,本项目基于PyTorch实现了BertSpan模型的训练和预测

- 英文实体识别数据集的评测结果:

| Arch | Backbone | Model Name | CoNLL-2003 | QPS |

|---|---|---|---|---|

| BertSoftmax | bert-base-uncased | bert4ner-base-uncased | 90.43 | 235 |

| BertSoftmax | bert-base-cased | bert4ner-base-cased | 91.17 | 235 |

| BertSpan | bert-base-uncased | bertspan4ner-base-uncased | 90.61 | 210 |

| BertSpan | bert-base-cased | bertspan4ner-base-cased | 91.90 | 224 |

- 中文实体识别数据集的评测结果:

| Arch | Backbone | Model Name | CNER | PEOPLE | MSRA-NER | QPS |

|---|---|---|---|---|---|---|

| BertSoftmax | bert-base-chinese | bert4ner-base-chinese | 94.98 | 95.25 | 94.65 | 222 |

| BertSpan | bert-base-chinese | bertspan4ner-base-chinese | 96.03 | 96.06 | 95.03 | 254 |

- 本项目release模型的实体识别评测结果:

| Arch | Backbone | Model Name | CNER(zh) | PEOPLE(zh) | CoNLL-2003(en) | QPS |

|---|---|---|---|---|---|---|

| BertSpan | bert-base-chinese | shibing624/bertspan4ner-base-chinese | 96.03 | 96.06 | - | 254 |

| BertSoftmax | bert-base-chinese | shibing624/bert4ner-base-chinese | 94.98 | 95.25 | - | 222 |

| BertSoftmax | bert-base-uncased | shibing624/bert4ner-base-uncased | - | - | 90.43 | 243 |

说明:

- 结果值均使用F1

- 结果均只用该数据集的train训练,在test上评估得到的表现,没用外部数据

shibing624/bertspan4ner-base-chinese模型达到base级别里SOTA效果,是用BertSpan方法训练的, 运行examples/training_bertspan_zh_demo.py代码可在各中文数据集复现结果shibing624/bert4ner-base-chinese模型达到base级别里较好效果,是用BertSoftmax方法训练的, 运行examples/training_ner_model_zh_demo.py代码可在各中文数据集复现结果shibing624/bert4ner-base-uncased模型是用BertSoftmax方法训练的, 运行examples/training_ner_model_en_demo.py代码可在CoNLL-2003英文数据集复现结果- 各预训练模型均可以通过transformers调用,如中文BERT模型:

--model_name bert-base-chinese - 中文实体识别数据集下载链接见下方

- QPS的GPU测试环境是Tesla V100,显存32GB

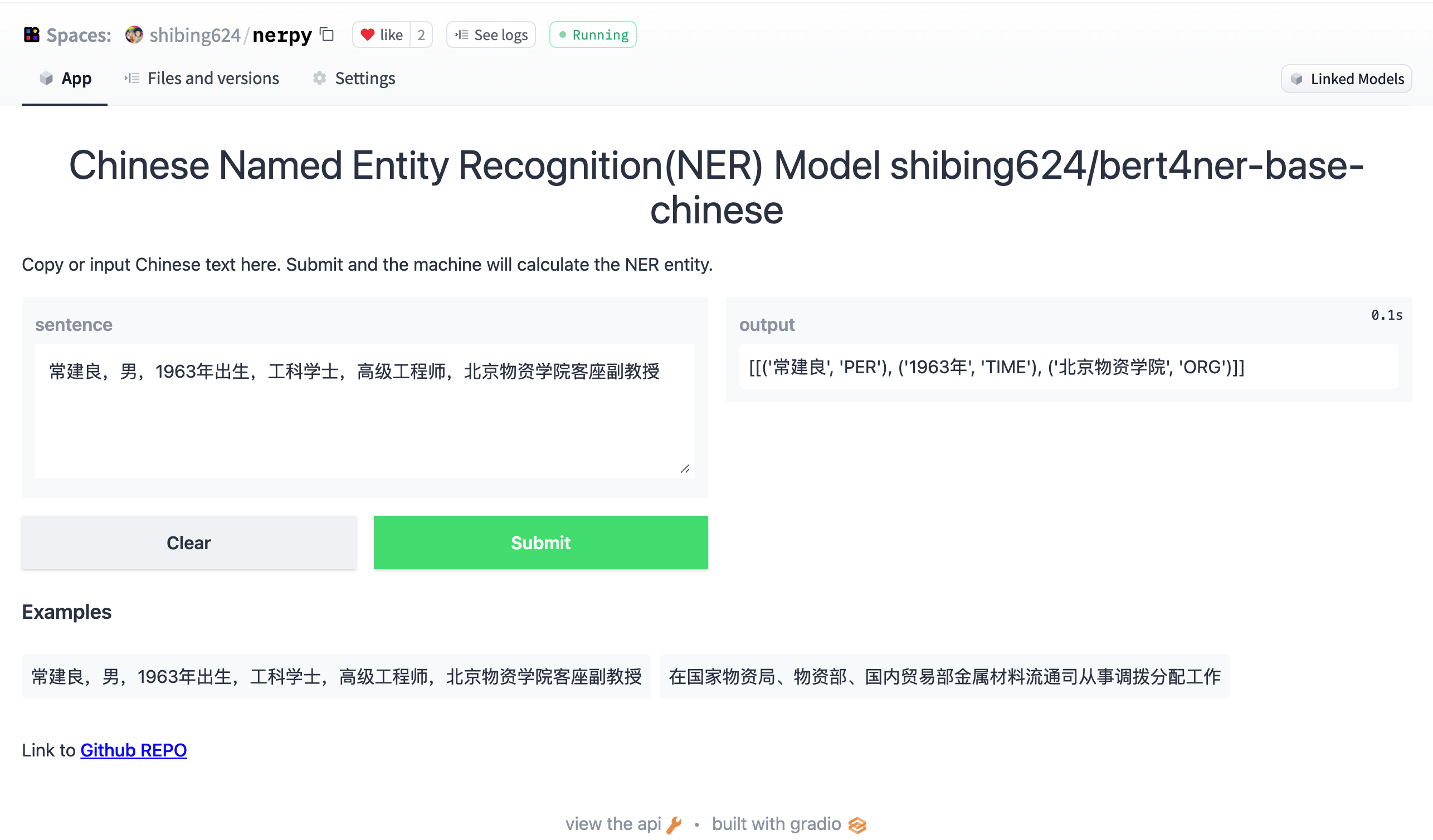

Demo: https://huggingface.co/spaces/shibing624/nerpy

run example: examples/gradio_demo.py to see the demo:

python examples/gradio_demo.pypython 3.8+

pip install torch # conda install pytorch

pip install -U nerpyor

pip install torch # conda install pytorch

pip install -r requirements.txt

git clone https://github.com/shibing624/nerpy.git

cd nerpy

pip install --no-deps .>>> from nerpy import NERModel

>>> model = NERModel("bert", "shibing624/bert4ner-base-uncased")

>>> predictions, raw_outputs, entities = model.predict(["AL-AIN, United Arab Emirates 1996-12-06"], split_on_space=True)

entities: [('AL-AIN,', 'LOC'), ('United Arab Emirates', 'LOC')]>>> from nerpy import NERModel

>>> model = NERModel("bert", "shibing624/bert4ner-base-chinese")

>>> predictions, raw_outputs, entities = model.predict(["常建良,男,1963年出生,工科学士,高级工程师"], split_on_space=False)

entities: [('常建良', 'PER'), ('1963年', 'TIME')]example: examples/base_zh_demo.py

import sys

sys.path.append('..')

from nerpy import NERModel

if __name__ == '__main__':

# BertSoftmax中文实体识别模型: NERModel("bert", "shibing624/bert4ner-base-chinese")

# BertSpan中文实体识别模型: NERModel("bertspan", "shibing624/bertspan4ner-base-chinese")

model = NERModel("bert", "shibing624/bert4ner-base-chinese")

sentences = [

"常建良,男,1963年出生,工科学士,高级工程师,北京物资学院客座副教授",

"1985年8月-1993年在国家物资局、物资部、国内贸易部金属材料流通司从事国家统配钢材中特种钢材品种的调拨分配工作,先后任科员、主任科员。"

]

predictions, raw_outputs, entities = model.predict(sentences)

print(entities)output:

[('常建良', 'PER'), ('1963年', 'TIME'), ('北京物资学院', 'ORG')]

[('1985年', 'TIME'), ('8月', 'TIME'), ('1993年', 'TIME'), ('国家物资局', 'ORG'), ('物资部', 'ORG'), ('国内贸易部金属材料流通司', 'ORG')]

shibing624/bert4ner-base-chinese模型是BertSoftmax方法在中文PEOPLE(人民日报)数据集训练得到的,模型已经上传到huggingface的 模型库shibing624/bert4ner-base-chinese, 是nerpy.NERModel指定的默认模型,可以通过上面示例调用,或者如下所示用transformers库调用, 模型自动下载到本机路径:~/.cache/huggingface/transformersshibing624/bertspan4ner-base-chinese模型是BertSpan方法在中文PEOPLE(人民日报)数据集训练得到的,模型已经上传到huggingface的 模型库shibing624/bertspan4ner-base-chinese

Without nerpy, you can use the model like this:

First, you pass your input through the transformer model, then you have to apply the bio tag to get the entity words.

example: examples/predict_use_origin_transformers_zh_demo.py

import os

import torch

from transformers import AutoTokenizer, AutoModelForTokenClassification

from seqeval.metrics.sequence_labeling import get_entities

os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained("shibing624/bert4ner-base-chinese")

model = AutoModelForTokenClassification.from_pretrained("shibing624/bert4ner-base-chinese")

label_list = ['I-ORG', 'B-LOC', 'O', 'B-ORG', 'I-LOC', 'I-PER', 'B-TIME', 'I-TIME', 'B-PER']

sentence = "王宏伟来自北京,是个警察,喜欢去王府井游玩儿。"

def get_entity(sentence):

tokens = tokenizer.tokenize(sentence)

inputs = tokenizer.encode(sentence, return_tensors="pt")

with torch.no_grad():

outputs = model(inputs).logits

predictions = torch.argmax(outputs, dim=2)

char_tags = [(token, label_list[prediction]) for token, prediction in zip(tokens, predictions[0].numpy())][1:-1]

print(sentence)

print(char_tags)

pred_labels = [i[1] for i in char_tags]

entities = []

line_entities = get_entities(pred_labels)

for i in line_entities:

word = sentence[i[1]: i[2] + 1]

entity_type = i[0]

entities.append((word, entity_type))

print("Sentence entity:")

print(entities)

get_entity(sentence)output:

王宏伟来自北京,是个警察,喜欢去王府井游玩儿。

[('王', 'B-PER'), ('宏', 'I-PER'), ('伟', 'I-PER'), ('来', 'O'), ('自', 'O'), ('北', 'B-LOC'), ('京', 'I-LOC'), (',', 'O'), ('是', 'O'), ('个', 'O'), ('警', 'O'), ('察', 'O'), (',', 'O'), ('喜', 'O'), ('欢', 'O'), ('去', 'O'), ('王', 'B-LOC'), ('府', 'I-LOC'), ('井', 'I-LOC'), ('游', 'O'), ('玩', 'O'), ('儿', 'O'), ('。', 'O')]

Sentence entity:

[('王宏伟', 'PER'), ('北京', 'LOC'), ('王府井', 'LOC')]| 数据集 | 语料 | 下载链接 | 文件大小 |

|---|---|---|---|

CNER中文实体识别数据集 |

CNER(12万字) | CNER github | 1.1MB |

PEOPLE中文实体识别数据集 |

人民日报数据集(200万字) | PEOPLE github | 12.8MB |

MSRA-NER中文实体识别数据集 |

MSRA-NER数据集(4.6万条,221.6万字) | MSRA-NER github | 3.6MB |

CoNLL03英文实体识别数据集 |

CoNLL-2003数据集(22万字) | CoNLL03 github | 1.7MB |

CNER中文实体识别数据集,数据格式:

美 B-LOC

国 I-LOC

的 O

华 B-PER

莱 I-PER

士 I-PER

我 O

跟 O

他 O

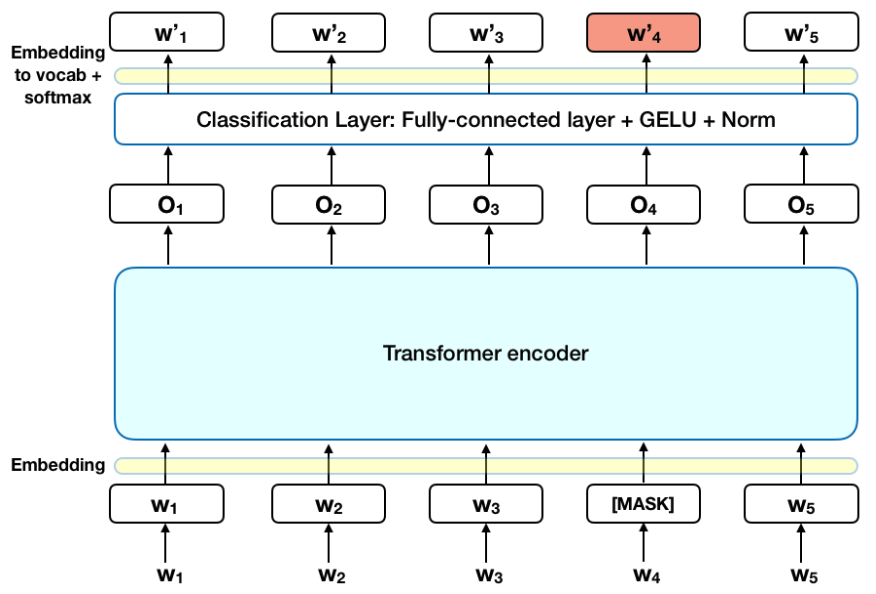

BertSoftmax实体识别模型,基于BERT的标准序列标注方法:

Network structure:

模型文件组成:

shibing624/bert4ner-base-chinese

├── config.json

├── model_args.json

├── pytorch_model.bin

├── special_tokens_map.json

├── tokenizer_config.json

└── vocab.txt

training example: examples/training_ner_model_toy_demo.py

import sys

import pandas as pd

sys.path.append('..')

from nerpy.ner_model import NERModel

# Creating samples

train_samples = [

[0, "HuggingFace", "B-MISC"],

[0, "Transformers", "I-MISC"],

[0, "started", "O"],

[0, "with", "O"],

[0, "text", "O"],

[0, "classification", "B-MISC"],

[1, "Nerpy", "B-MISC"],

[1, "Model", "I-MISC"],

[1, "can", "O"],

[1, "now", "O"],

[1, "perform", "O"],

[1, "NER", "B-MISC"],

]

train_data = pd.DataFrame(train_samples, columns=["sentence_id", "words", "labels"])

test_samples = [

[0, "HuggingFace", "B-MISC"],

[0, "Transformers", "I-MISC"],

[0, "was", "O"],

[0, "built", "O"],

[0, "for", "O"],

[0, "text", "O"],

[0, "classification", "B-MISC"],

[1, "Nerpy", "B-MISC"],

[1, "Model", "I-MISC"],

[1, "then", "O"],

[1, "expanded", "O"],

[1, "to", "O"],

[1, "perform", "O"],

[1, "NER", "B-MISC"],

]

test_data = pd.DataFrame(test_samples, columns=["sentence_id", "words", "labels"])

# Create a NERModel

model = NERModel(

"bert",

"bert-base-uncased",

args={"overwrite_output_dir": True, "reprocess_input_data": True, "num_train_epochs": 1},

use_cuda=False,

)

# Train the model

model.train_model(train_data)

# Evaluate the model

result, model_outputs, predictions = model.eval_model(test_data)

print(result, model_outputs, predictions)

# Predictions on text strings

sentences = ["Nerpy Model perform sentence NER", "HuggingFace Transformers build for text"]

predictions, raw_outputs, entities = model.predict(sentences, split_on_space=True)

print(predictions, entities)- 在中文CNER数据集训练和评估

BertSoftmax模型

example: examples/training_ner_model_zh_demo.py

cd examples

python training_ner_model_zh_demo.py --do_train --do_predict --num_epochs 5 --task_name cner- 在英文CoNLL-2003数据集训练和评估

BertSoftmax模型

example: examples/training_ner_model_en_demo.py

cd examples

python training_ner_model_en_demo.py --do_train --do_predict --num_epochs 5- 在中文CNER数据集训练和评估

BertSpan模型

example: examples/training_bertspan_zh_demo.py

cd examples

python training_bertspan_zh_demo.py --do_train --do_predict --num_epochs 5 --task_name cner- Issue(建议):

- 邮件我:xuming: xuming624@qq.com

- 微信我: 加我微信号:xuming624, 备注:姓名-公司-NLP 进NLP交流群。

如果你在研究中使用了nerpy,请按如下格式引用:

APA:

Xu, M. nerpy: Named Entity Recognition Toolkit (Version 0.0.2) [Computer software]. https://github.com/shibing624/nerpyBibTeX:

@software{Xu_nerpy_Text_to,

author = {Xu, Ming},

title = {{nerpy: Named Entity Recognition Toolkit}},

url = {https://github.com/shibing624/nerpy},

version = {0.0.2}

}授权协议为 The Apache License 2.0,可免费用做商业用途。请在产品说明中附加nerpy的链接和授权协议。

项目代码还很粗糙,如果大家对代码有所改进,欢迎提交回本项目,在提交之前,注意以下两点:

- 在

tests添加相应的单元测试 - 使用

python -m pytest来运行所有单元测试,确保所有单测都是通过的

之后即可提交PR。