In this repository I delve into three different types of regression.

This is a collection of end-to-end regression problems. Topics are introduced theoretically in the README.md and tested practically in the notebooks linked below.

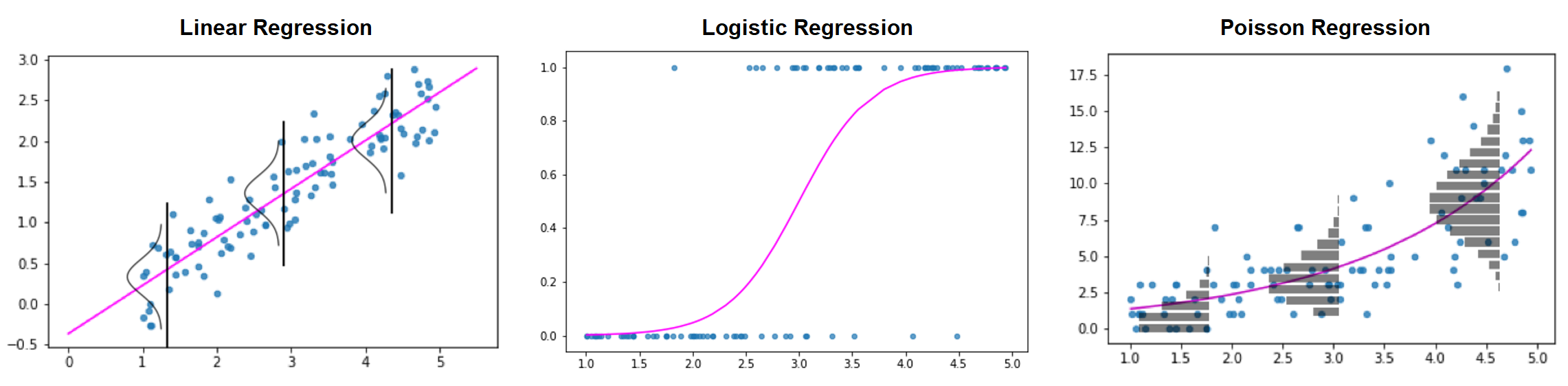

First, I tested the theory on toy simulations. I built four simulations in Python using the sklearn and statsmodels libraries:

- GLM Simulation pt. 1 - Linear Regression

- GLM Simulation pt. 2 - Logistic Regression

- GLM Simulation pt. 3 - Poisson Regression

- GLM Simulation pt. 4 - Customized Regression

After that, I moved on to real-world data, developing three end-to-end projects:

- Linear Regression - Human brain weights

- Logistic Regression - HR dataset

- Poisson Regression - Smoking and lung cancer

Further details can be found in the 'Practical Examples' section below in this README.md.

Note. A good dataset resource for linear, logistic, and Poisson regression, multinomial responses, and survival data.

Note. To further explore feature selection: source 1, source 2, source 3, source 4, source 5.

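A generalized linear model (GLM) is a flexible generalization of ordinary linear regression: it allows the linear model to be related to the response variable via a link function. In a generalized linear model, the outcome $\boldsymbol{Y}$ is assumed to follow a distribution from the exponential family whose mean $\boldsymbol{\mu}$ depends on the predictors $\boldsymbol{X}$ through

$$\mathbb{E}[\boldsymbol{Y}|\boldsymbol{X}] = \boldsymbol{\mu} = g^{-1}(\boldsymbol{X}\boldsymbol{\beta})$$

where $\boldsymbol{X}\boldsymbol{\beta}$ is the linear predictor, $\boldsymbol{\beta}$ is the vector of unknown coefficients, and $g(\cdot)$ is the link function.

🟥 For the sake of clarity, from now on we consider the case of the scalar outcome, $Y$.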

Every GLM consists of three elements:

- a distribution (from the exponential family) for modeling $Y$

- a linear predictor $\boldsymbol{X}\boldsymbol{\beta}$

- a link function $g(\cdot)$ such that $\mathbb{E}[\boldsymbol{Y}|\boldsymbol{X}] = \boldsymbol{\mu} = g^{-1}(\boldsymbol{X}\boldsymbol{\beta})$
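The three elements can be made concrete with a small simulation. The following is a minimal sketch, assuming a Poisson outcome with a log link; the design matrix, the coefficient values, and the variable names are illustrative assumptions, not taken from the notebooks.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical design matrix: an intercept column plus two predictors.
n = 1000
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
beta = np.array([0.5, 0.3, -0.2])  # illustrative coefficients

# 1. Linear predictor: eta = X beta
eta = X @ beta

# 2. Link function g = log, so the mean is mu = g^{-1}(eta) = exp(eta)
mu = np.exp(eta)

# 3. Distribution from the exponential family: Poisson with mean mu
y = rng.poisson(mu)

print(y[:10], mu[:3])
```

Swapping the inverse link and the sampling distribution (for example a sigmoid with `rng.binomial`) gives the other GLMs discussed in this README.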

The following are the most commonly used examples.

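| Distribution | Support | Typical uses | Link name | Link function | Mean function |
|---|---|---|---|---|---|
| Normal | real: $(-\infty, +\infty)$ | Linear-response data | Identity | $\boldsymbol{X}\boldsymbol{\beta} = \mu$ | $\mu = \boldsymbol{X}\boldsymbol{\beta}$ |
| Gamma | real: $(0, +\infty)$ | Exponential-response data | Negative inverse | $\boldsymbol{X}\boldsymbol{\beta} = -\mu^{-1}$ | $\mu = -(\boldsymbol{X}\boldsymbol{\beta})^{-1}$ |
| Inverse-Gaussian | real: $(0, +\infty)$ | | Inverse squared | $\boldsymbol{X}\boldsymbol{\beta} = \mu^{-2}$ | $\mu = (\boldsymbol{X}\boldsymbol{\beta})^{-1/2}$ |
| Poisson | integer: $0, 1, 2, \ldots$ | Count of occurrences in a fixed amount of time/space | Log | $\boldsymbol{X}\boldsymbol{\beta} = \ln(\mu)$ | $\mu = \exp(\boldsymbol{X}\boldsymbol{\beta})$ |
| Bernoulli | integer: $\{0, 1\}$ | Outcome of single yes/no occurrence | Logit | $\boldsymbol{X}\boldsymbol{\beta} = \ln\left(\frac{\mu}{1-\mu}\right)$ | $\mu = \frac{1}{1+\exp(-\boldsymbol{X}\boldsymbol{\beta})}$ |
| Binomial | integer: $0, 1, \ldots, N$ | Count of yes outcomes in $N$ yes/no occurrences | Logit | $\boldsymbol{X}\boldsymbol{\beta} = \ln\left(\frac{\mu}{N-\mu}\right)$ | $\mu = \frac{N}{1+\exp(-\boldsymbol{X}\boldsymbol{\beta})}$ |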
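To check that each link in the table above and its mean function are inverses of each other, here is a small sketch for the scalar case. The dictionary `LINKS` and its keys are hypothetical names chosen for this illustration.

```python
import numpy as np

# (link g, inverse link g^{-1}) pairs for a scalar mean mu.
# The dictionary and its keys are illustrative names only.
LINKS = {
    "identity": (lambda mu: mu, lambda eta: eta),
    "negative_inverse": (lambda mu: -1.0 / mu, lambda eta: -1.0 / eta),
    "inverse_squared": (lambda mu: mu ** -2.0, lambda eta: eta ** -0.5),
    "log": (np.log, np.exp),
    "logit": (
        lambda mu: np.log(mu / (1.0 - mu)),
        lambda eta: 1.0 / (1.0 + np.exp(-eta)),
    ),
}

# Means in (0, 1) are valid inputs for every link above.
mu = np.array([0.1, 0.25, 0.5, 0.9])

for name, (g, g_inv) in LINKS.items():
    # Applying the link and then the mean function should return mu.
    roundtrip = g_inv(g(mu))
    print(name, np.allclose(roundtrip, mu))
```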

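As already mentioned, let $Y$ be the scalar outcome and $\boldsymbol{X}$ the predictors.

In the case of linear regression, $Y$ is assumed to follow a Normal distribution and the link function is the identity, so that $\mathbb{E}[Y|\boldsymbol{X}] = \mu = \boldsymbol{X}\boldsymbol{\beta}$.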

As a case study for linear regression, I analyzed a dataset of human brain weights.

- Exploratory Data Analysis (EDA)

- Feature Selection

- Linear Regression with `sklearn`

- Linear Regression with `statsmodels`

- Advanced Regression techniques with `sklearn`

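In the case of logistic regression, $Y$ is assumed to follow a Bernoulli distribution and the link function is the logit, so that $\mathbb{E}[Y|\boldsymbol{X}] = \mu = \frac{1}{1+\exp(-\boldsymbol{X}\boldsymbol{\beta})}$.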

As a case study for logistic regression, I analyzed an HR dataset.

- Exploratory Data Analysis (EDA)

- Feature Selection

- Logistic Regression with `sklearn`

- Logistic Regression with `statsmodels`

For Advanced Classification techniques with Scikit-Learn check out Breast Cancer: End-to-End Machine Learning Project.

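In the case of Poisson regression, $Y$ is assumed to follow a Poisson distribution and the link function is the log, so that $\mathbb{E}[Y|\boldsymbol{X}] = \mu = \exp(\boldsymbol{X}\boldsymbol{\beta})$.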

As a case study for Poisson regression, I analyzed a dataset of smoking and lung cancer.

- Exploratory Data Analysis (EDA)

- Feature Selection

- Poisson Regression with `sklearn`

- Poisson Regression with `statsmodels`

What libraries should be used? In general, scikit-learn is designed for machine learning, while statsmodels is made for rigorous statistics. Both libraries have their uses. Before selecting one over the other, it is best to consider the purpose of the model. A model designed for prediction is best fit using scikit-learn, while statsmodels is best employed for explanatory models. To completely disregard one for the other would do a great disservice to an excellent Python library.

To summarize some key differences:

- OLS efficiency: scikit-learn is faster at linear regression, and the difference is more apparent for larger datasets

- Logistic regression efficiency: employing only a single core, statsmodels is faster at logistic regression

- Visualization: statsmodels provides a summary table

- Solvers/methods: in general, statsmodels provides a greater variety

- Logistic Regression: scikit-learn regularizes by default while statsmodels does not

- Additional linear models: scikit-learn provides more models for regularization, while statsmodels helps correct for broken OLS assumptions