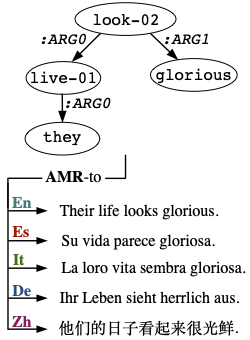

This repository contains the code for the EMNLP 2021 paper "Smelting Gold and Silver for Improved Multilingual AMR-to-Text Generation".

In our experiments, we use the following datasets: LDC2017T10 (AMR 2.0) and LDC2020T07 (AMR 2.0 - Four Translations).

The easiest way to proceed is to create a conda environment:

```bash
conda create -n structadapt python=3.6
```

Further, install PyTorch:

```bash
conda install -c pytorch pytorch=1.7.0
```

Finally, install the required packages:

```bash
pip install -r requirements.txt
```

To train mT5 on SilverAMR and SilverSent, run:

```bash
./finetune.sh <SILVER_SENT_FILE> <SILVER_AMR_FILE> <DEV_FILE> <MODEL_DIR>
```

where `<SILVER_SENT_FILE>` and `<SILVER_AMR_FILE>` point to the JSON files used for training, `<DEV_FILE>` points to the development file, and `<MODEL_DIR>` is the folder where the checkpoint will be saved.
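Before launching a long training run, it may be worth checking the input files. The sketch below assumes, as the example line in the next paragraph suggests, that each file holds one JSON object per line with string `"source"` and `"target"` fields. The helper names `validate_lines` and `validate_jsonl` are hypothetical and not part of this repository.

```python
import json

# Assumed schema: one JSON object per line with these string fields.
REQUIRED_KEYS = ("source", "target")


def validate_lines(lines, name="<input>"):
    """Check an iterable of JSON Lines; return the number of examples.

    Blank lines are skipped. Raises ValueError with the offending line number
    if a line is not valid JSON, is not an object, or lacks a string field.
    """
    count = 0
    for lineno, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        try:
            record = json.loads(line)
        except ValueError as err:  # json.JSONDecodeError subclasses ValueError
            raise ValueError("{}:{}: invalid JSON ({})".format(name, lineno, err))
        if not isinstance(record, dict):
            raise ValueError("{}:{}: expected a JSON object".format(name, lineno))
        for key in REQUIRED_KEYS:
            if not isinstance(record.get(key), str):
                raise ValueError(
                    "{}:{}: missing or non-string {!r}".format(name, lineno, key)
                )
        count += 1
    return count


def validate_jsonl(path):
    """Validate a JSON Lines file on disk (read as UTF-8)."""
    with open(path, encoding="utf-8") as f:
        return validate_lines(f, name=path)
```

For instance, `validate_jsonl("silver_sent.json")` (a hypothetical file name) would return the number of training examples or stop at the first malformed line.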

This is an example of a line in the JSON file:

```json
{"source": "translate AMR to Spanish: ( relevant :polarity - :ARG1 ( or :op1 ( involve :ARG1 ( and :op1 ( face :ARG1-of ( black ) ) :op2 ( noose ) ) :ARG2 ( thing :ARG2-of ( costume :ARG1 ( you ) ) ) ) :op2 ( costume :ARG1 you :ARG2 ( sandwich :ARG1-of ( grill ) :mod ( cheese :ARG1-of ( drip :degree ( too :degree ( little ) ) ) :ARG1-of ( think :ARG0 ( involve-01 ) ) ) ) ) ) )", "target": "Si tu traje tiene un rostro negro y un nausea, o si se trata de un sándwich fritado en el que creo que el queso es un poco demasiado ardiente es irrelevante."}
```
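The `source` field combines a task prefix with the linearized graph, and `target` holds the sentence. A minimal sketch for producing such lines follows. Only the `"translate AMR to Spanish: "` prefix is confirmed by the example above; the names used for Italian, German and Chinese in `LANGUAGE_NAMES` are assumptions, as are the helper names `make_example` and `to_json_line`.

```python
import json

# Assumed mapping from language codes to the name used in the task prefix.
# Only "Spanish" is confirmed by the README's example; the others are guesses.
LANGUAGE_NAMES = {"es": "Spanish", "it": "Italian", "de": "German", "zh": "Chinese"}


def make_example(linearized_amr, sentence, lang):
    """Build one training record from an already-linearized AMR graph."""
    prefix = "translate AMR to {}: ".format(LANGUAGE_NAMES[lang])
    return {"source": prefix + linearized_amr, "target": sentence}


def to_json_line(example):
    """Serialize a record as one JSON line.

    ensure_ascii=False keeps accented and CJK characters readable instead of
    escaping them as \\uXXXX sequences.
    """
    return json.dumps(example, ensure_ascii=False)
```

Writing one `to_json_line(...)` result per line of a UTF-8 file should yield data in the layout shown above, assuming the training scripts expect exactly these two keys.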

The AMR graphs need to be linearized before they are fed into the model. We follow the linearization method of Ribeiro et al. (2021): https://github.com/UKPLab/plms-graph2text.
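To illustrate what the linearized input looks like, here is a simplified sketch that maps a PENMAN-notation AMR to the space-separated format of the example line above: it drops variables and the `/` separator, strips sense suffixes such as `-01`, puts spaces around parentheses, and replaces re-entrant variables with their concept. This is an approximation written only to match that example, not the plms-graph2text code; the function name `linearize` is hypothetical, and well-formed PENMAN input is assumed.

```python
import re

# Tokens: parentheses, the "/" separator, quoted strings, or any other run of
# non-space characters (roles, variables, concepts, constants).
TOKEN_RE = re.compile(r'\(|\)|/|"[^"]*"|[^\s()/]+')
# Sense suffix such as "-01" or "-91" at the end of a concept.
SENSE_RE = re.compile(r"-\d\d$")


def strip_sense(concept):
    return SENSE_RE.sub("", concept)


def linearize(penman):
    """Approximate linearization of a PENMAN string (simplified sketch)."""
    tokens = TOKEN_RE.findall(penman)
    # First pass: map every variable to its (sense-stripped) concept, so that
    # re-entrant references can be resolved even if defined later.
    concepts = {}
    for i, tok in enumerate(tokens):
        if tok == "/":
            concepts[tokens[i - 1]] = strip_sense(tokens[i + 1])
    out = []
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == "(":
            # "( var / concept" becomes "( concept"
            out.append("(")
            out.append(strip_sense(tokens[i + 3]))
            i += 4
            continue
        if tok == ")" or tok.startswith(":"):
            out.append(tok)  # closing bracket or role label
        elif tok in concepts:
            out.append(concepts[tok])  # re-entrant variable -> its concept
        else:
            out.append(tok)  # constant, e.g. "-" or a quoted name
        i += 1
    return " ".join(out)
```

For example, `(c / costume-01 :ARG1 (y / you))` becomes `( costume :ARG1 ( you ) )`.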

For decoding, run:

```bash
./test.sh <MODEL_DIR> <TEST_FILE> <GPU_ID>
```

A checkpoint trained on SilverAMR and SilverSent can be found here. This model achieves BLEU scores of 30.7 (ES), 26.4 (IT), 20.6 (DE), and 24.2 (ZH). The outputs can be downloaded here.

For more details regarding hyperparameters, please refer to HuggingFace.

Contact person: Leonardo Ribeiro, ribeiro@aiphes.tu-darmstadt.de

```bibtex
@inproceedings{ribeiro-etal-2021-smelting,
    title = "Smelting Gold and Silver for Improved Multilingual AMR-to-Text Generation",
    author = "Ribeiro, Leonardo F. R. and
      Pfeiffer, Jonas and
      Zhang, Yue and
      Gurevych, Iryna",
    booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP)",
    month = nov,
    year = "2021",
    address = "Punta Cana, Dominican Republic",
    publisher = "Association for Computational Linguistics",
}
```