Extracts the card number from bank card images, based on deep learning with Keras.

Includes automatic and manual location, number identification, and a GUI.

Chinese blog: click this link

- cnn_blstm_ctc

- EAST/manual locate

- GUI

Python == 3.6

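Install the dependencies with pip, for example `pip install -r requirements.txt` (assuming the requirements file uses the conventional name).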

Windows10 x64, Anaconda, PyCharm 2018.3, NVIDIA GTX 1050.

- Download the trained models. CRNN extraction code: `6eqw`; EAST extraction code: `qiw5`.

- Put the CRNN model into `crnn/model` and the EAST model into `east/model`.

- Run `python demo.py`.

- In the GUI, press the `Load` button to load a bank card image, or load one from `dataset/test/`.

- Press the `Identify` button to start location and identification.

- Activate manual location by double-clicking the image view, then draw the region of interest and press `Identify`.

Download my dataset (CRNN extraction code: `1jax`; EAST extraction code: `pqba`) and unzip it into `./dataset`.

The structure of the dataset looks like this:

- dataset
  - /card # for east
  - /crad_nbr # for crnn
  - /test
  - ...
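Before preprocessing, it can help to confirm that the archive was unzipped into the expected place. The following is a minimal sketch, not part of the project: the helper name `missing_subdirs` is hypothetical, and the sub-directory names are copied verbatim from the structure listed above.

```python
from pathlib import Path

# Sub-directory names copied from the dataset structure listed above.
EXPECTED_SUBDIRS = ("card", "crad_nbr", "test")


def missing_subdirs(root, expected=EXPECTED_SUBDIRS):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_subdirs("./dataset")
    if missing:
        print("Missing under ./dataset:", ", ".join(missing))
    else:
        print("Dataset layout looks complete.")
```

If your copy of the dataset uses different folder names, pass them through the `expected` argument instead of editing the constant.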

- Run `python crnn/preprocess.py`.

- Run `python crnn/run.py` to train. You can change some parameters in `crnn/cfg.py`.

- My dataset is collecting from Internet: Baidu, Google, and thanks Kesci.

It has been labeld with ICDAR 2015 format, you can see it in

dataset/card/txt/. This tiny dataset is unable to cover all the situation, if you have rich one, it may perform better. - If you would like to get more data, make sure data has been labeled, or you can take

dataset/tagger.pyto label it. - Modify

east/cfg.py, see default values. - Run

python east/preprocess.py. If process goes well, you'll see generated data like this:

- Finally,

python east/run.py.

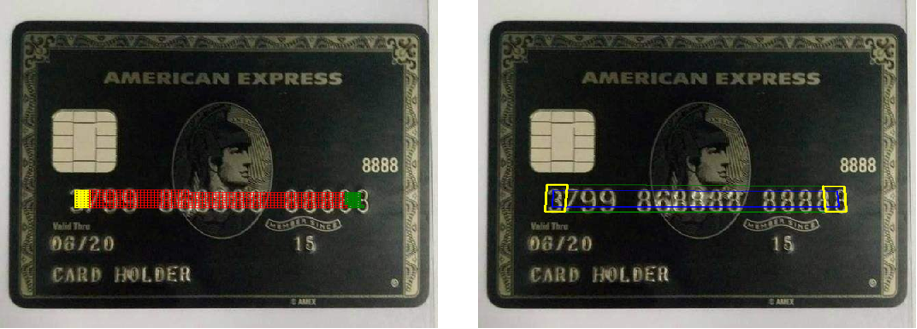
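For readers unfamiliar with the ICDAR 2015 label format mentioned above: each line of a label file holds the eight coordinates of a quadrilateral followed by the transcription, all comma-separated. The sketch below shows one way to read such a line. The function name `parse_icdar2015_line` and the sample line are hypothetical, and the files written by `dataset/tagger.py` may differ in detail.

```python
def parse_icdar2015_line(line):
    """Parse 'x1,y1,x2,y2,x3,y3,x4,y4,transcription' into (points, text)."""
    # Some ICDAR 2015 files start with a UTF-8 byte-order mark; drop it.
    # Split at most 8 times so commas inside the transcription survive.
    fields = line.strip().lstrip("\ufeff").split(",", 8)
    if len(fields) < 9:
        raise ValueError("expected 8 coordinates and a transcription: %r" % line)
    coords = [int(float(value)) for value in fields[:8]]
    # Group the flat coordinate list into four (x, y) corner points.
    points = [(coords[i], coords[i + 1]) for i in range(0, 8, 2)]
    return points, fields[8]


if __name__ == "__main__":
    # Made-up sample line, for illustration only.
    points, text = parse_icdar2015_line("10,20,110,20,110,50,10,50,6222 0000")
    print(points, text)
```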

The model I used follows CNN_RNN_CTC: the CNN part is VGG, the RNN part is a BLSTM, and training uses CTC loss.

The model's preview:

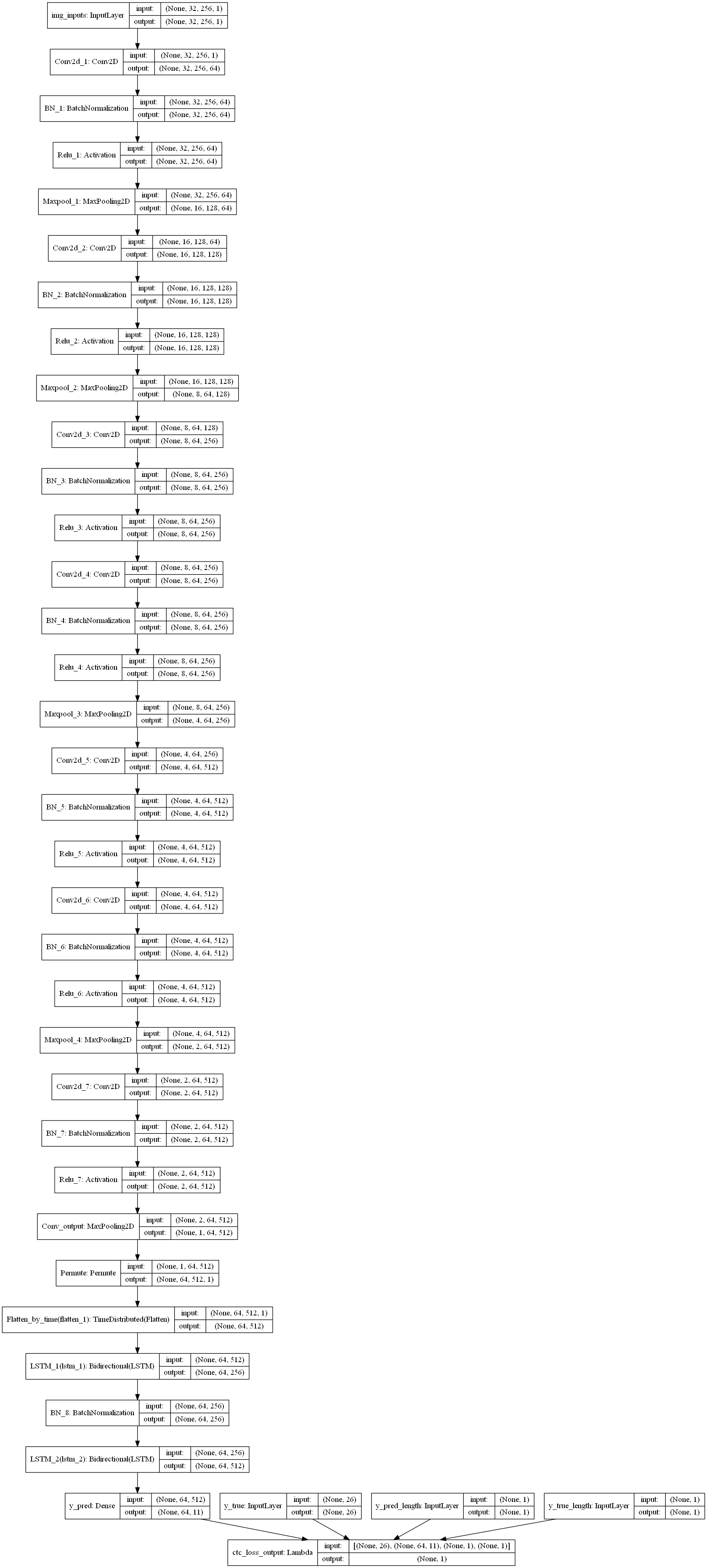
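A network trained with CTC loss outputs one score vector per time step, including a blank class, so the card number has to be recovered by collapsing repeated labels and dropping blanks. The following is a minimal sketch of that best-path (greedy) decoding in plain Python, not the project's own decoding code. It assumes a digit-only alphabet and that the blank is the last class index; both are illustrative assumptions.

```python
def ctc_greedy_decode(frame_scores, alphabet="0123456789"):
    """Collapse per-frame scores into a string using CTC best-path decoding.

    frame_scores: one list of scores per time step, with len(alphabet) + 1
    entries; the last index is assumed to be the CTC blank.
    """
    blank = len(alphabet)
    decoded = []
    previous = blank
    for scores in frame_scores:
        # Pick the highest-scoring class for this time step.
        best = max(range(len(scores)), key=scores.__getitem__)
        # Emit a character only when the label changes and is not blank.
        if best != previous and best != blank:
            decoded.append(alphabet[best])
        previous = best
    return "".join(decoded)


def one_hot(index, size=11):
    """Build a toy score vector with all weight on one class."""
    return [1.0 if i == index else 0.0 for i in range(size)]


if __name__ == "__main__":
    # Frames predict 6, 6, blank, 2, 2, blank, 2, blank.
    frames = [one_hot(i) for i in [6, 6, 10, 2, 2, 10, 2, 10]]
    print(ctc_greedy_decode(frames))  # 622
```

The blank between the two runs of `2` is what allows a repeated digit to survive the collapse.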

Automatic location uses a well-known text detection algorithm, EAST. See more details.

In this project, I prefer to use AdvancedEAST. It is an algorithm for scene image text detection that is primarily based on EAST, with significant improvements that make long-text predictions more accurate. For the original repo, see Reference 1.

Training is also quite quick. In practice, `img_size` works best at 384. `epoch_nbr` matters little: with an `img_size` of 384, training usually stops early at epoch 20-22. If you have a large dataset, try adjusting these parameters.

This model's preview:

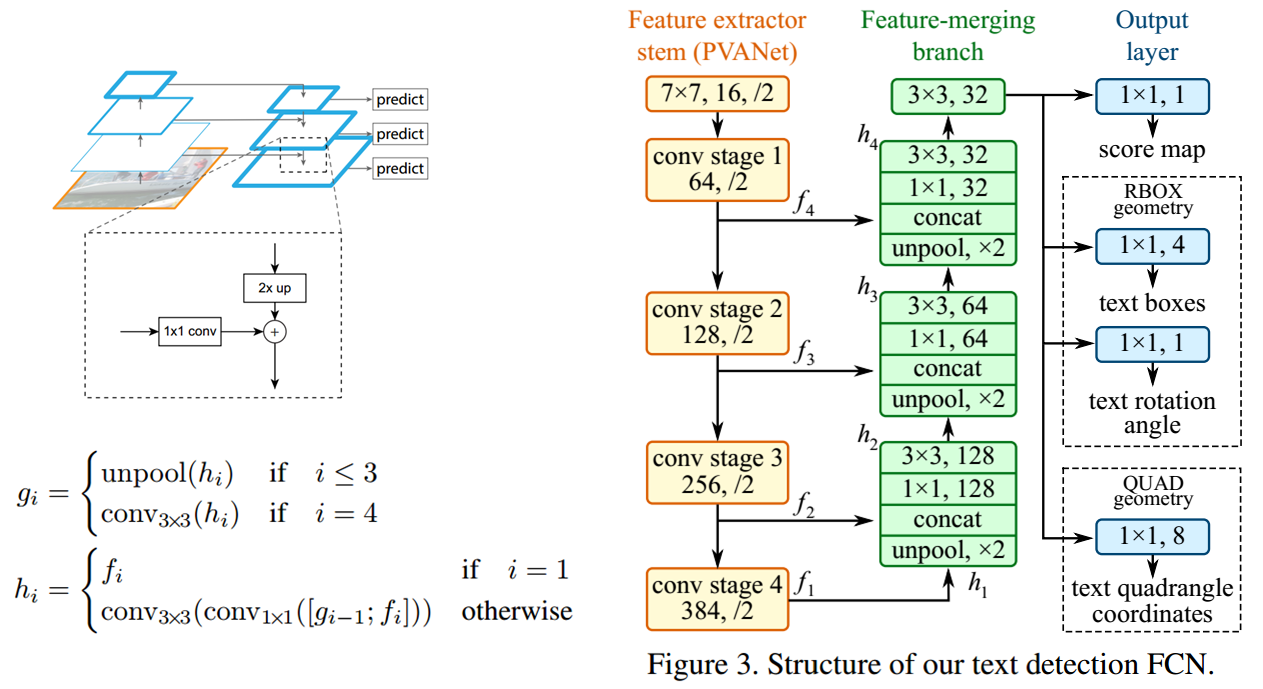
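The reason the configured epoch count matters little is that early stopping ends training once the validation loss stops improving. The sketch below illustrates a patience-based rule in plain Python, similar in spirit to the Keras `EarlyStopping` callback. The function name, the `patience` and `min_delta` values, and the loss values are all illustrative assumptions, not settings taken from `east/cfg.py`.

```python
def early_stop_epoch(val_losses, patience=5, min_delta=0.0):
    """Return the 1-based epoch at which patience-based early stopping halts.

    Returns None if training would run through every recorded epoch.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            # Validation loss improved: remember it and reset the counter.
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None


if __name__ == "__main__":
    # Made-up validation losses: the last improvement is at epoch 3.
    losses = [5, 4, 3, 3, 3, 3, 2]
    print(early_stop_epoch(losses, patience=3))  # 6
```

With a rule like this, a large epoch limit only acts as an upper bound; the stopping point is decided by the loss curve.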

Manual location is only available in the GUI. Here are some demonstrations in .gif form:

The UI is designed with QtDesigner, and the rest is implemented with PyQt5.
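For manual location, the region drawn in the image view has to become a crop box before it can be passed to identification. The sketch below shows that step in plain Python, independent of PyQt5. It is not the project's GUI code: the function name is hypothetical, and it assumes the drag points are already expressed in image pixel coordinates.

```python
def drag_to_crop_box(start, end, image_width, image_height):
    """Convert two drag points (x, y) into a (left, top, right, bottom) box."""
    (x0, y0), (x1, y1) = start, end
    # The user may drag in any direction, so order the coordinates first.
    left, right = sorted((x0, x1))
    top, bottom = sorted((y0, y1))
    # Clamp to the image so a drag that leaves the view still gives a valid crop.
    left = max(0, min(left, image_width))
    right = max(0, min(right, image_width))
    top = max(0, min(top, image_height))
    bottom = max(0, min(bottom, image_height))
    if left == right or top == bottom:
        raise ValueError("selection is empty")
    return left, top, right, bottom


if __name__ == "__main__":
    # Dragging from bottom-right to top-left gives the same box as the reverse.
    print(drag_to_crop_box((300, 200), (50, 120), 400, 250))  # (50, 120, 300, 200)
```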

- The bank card images were collected from the Internet. If any image makes you uncomfortable, please contact me.

- If you have any issues, post them in Issues.

- Advanced EAST - https://github.com/huoyijie/AdvancedEAST

- EAST model - https://www.cnblogs.com/lillylin/p/9954981.html