This repository is the official PyTorch implementation of MO-VLN.

MO-VLN: A Multi-Task Benchmark for Open-set Zero-Shot Vision-and-Language Navigation

Xiwen Liang*,

Liang Ma*,

Shanshan Guo,

Jianhua Han,

Hang Xu,

Shikui Ma,

Xiaodan Liang

*Equal contribution

🚀🚀 [8/17/2023] v0.2.0: More assets! 2 new scenes, 50 new walkers, 954 new objects, 1k+ new instructions

We have released:

- Support for grabbing and navigation tasks.

- Added a variety of walker states: 50 unique walkers spanning different genders, skin colors, and age groups, with smooth walking or running motions.

- Added a walker control interface, which supports:
  - selecting the walker type to generate;
  - specifying where walkers are generated;
  - setting whether they move freely;
  - controlling their movement speed.

- Added 1k+ instructions to our four tasks.

- We modeled 954 additional object classes to construct the indoor scenes.

- Two new scenes have been added, bringing the total to five.
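The walker control interface exposes four settings: walker type, spawn location, free movement, and speed. Its actual calls are not documented in this README, so the sketch below is a hypothetical request object only: the class `WalkerSpawnRequest`, the helper `to_message`, and all field names and units are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


# Hypothetical spawn request mirroring the four interface settings.
# Field names and units are illustrative assumptions, not the simulator's API.
@dataclass
class WalkerSpawnRequest:
    walker_type: int                      # index into the 50 walkers (assumed 0-based)
    location: Tuple[float, float, float]  # spawn position (assumed x, y, z scene coordinates)
    move_freely: bool = True              # whether the walker wanders on its own
    speed: float = 1.0                    # movement speed (assumed metres per second)

    def validate(self, num_walker_types: int = 50) -> None:
        """Reject walker indices outside the available range and negative speeds."""
        if not 0 <= self.walker_type < num_walker_types:
            raise ValueError(f"walker_type must be in [0, {num_walker_types})")
        if self.speed < 0:
            raise ValueError("speed must be non-negative")


def to_message(req: WalkerSpawnRequest) -> dict:
    """Validate a request and serialize it to a plain dict (hypothetical wire format)."""
    req.validate()
    return {
        "walker_type": req.walker_type,
        "location": list(req.location),
        "move_freely": req.move_freely,
        "speed": req.speed,
    }
```

For example, `to_message(WalkerSpawnRequest(walker_type=7, location=(1.0, 2.0, 0.0), speed=1.5))` yields a dict with `move_freely` left at its default of `True`. Validating before serializing keeps malformed requests on the client side.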

[6/18/2023] v0.1.0: 3 scenes, 2,165 objects, realistic lighting and shadows, and support for four instruction-following tasks

We have released:

- Built on UE5.

- 3 scene types:

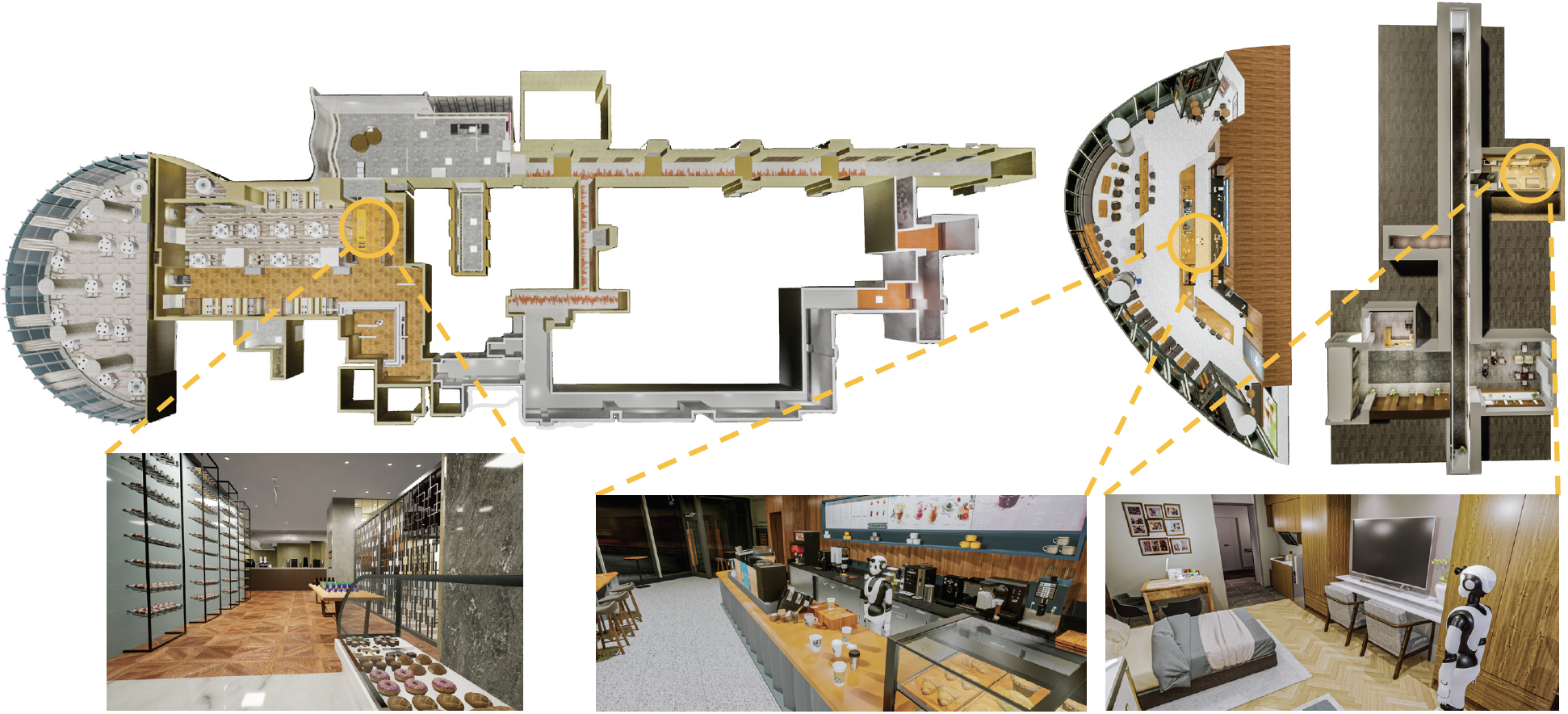

- Café -- Modelled on a 1:1 ratio to a Café

- Restaurant -- Modelled on a 1:1 ratio to a restaurant

- Nursing Room -- Modelled on a 1:1 ratio to a Nursing Room

- We handcrafted 2,165 classes of models at 1:1 real-world scale to construct these three scenes, which together comprise 4,230 models.

- From these models, we selected 129 representative classes that support navigation testing. Of these, 54 classes are fixed in the environment, while 73 classes can be customized by users.

- Realistic lighting and shadows.

- Support instruction tasks with four tasks:

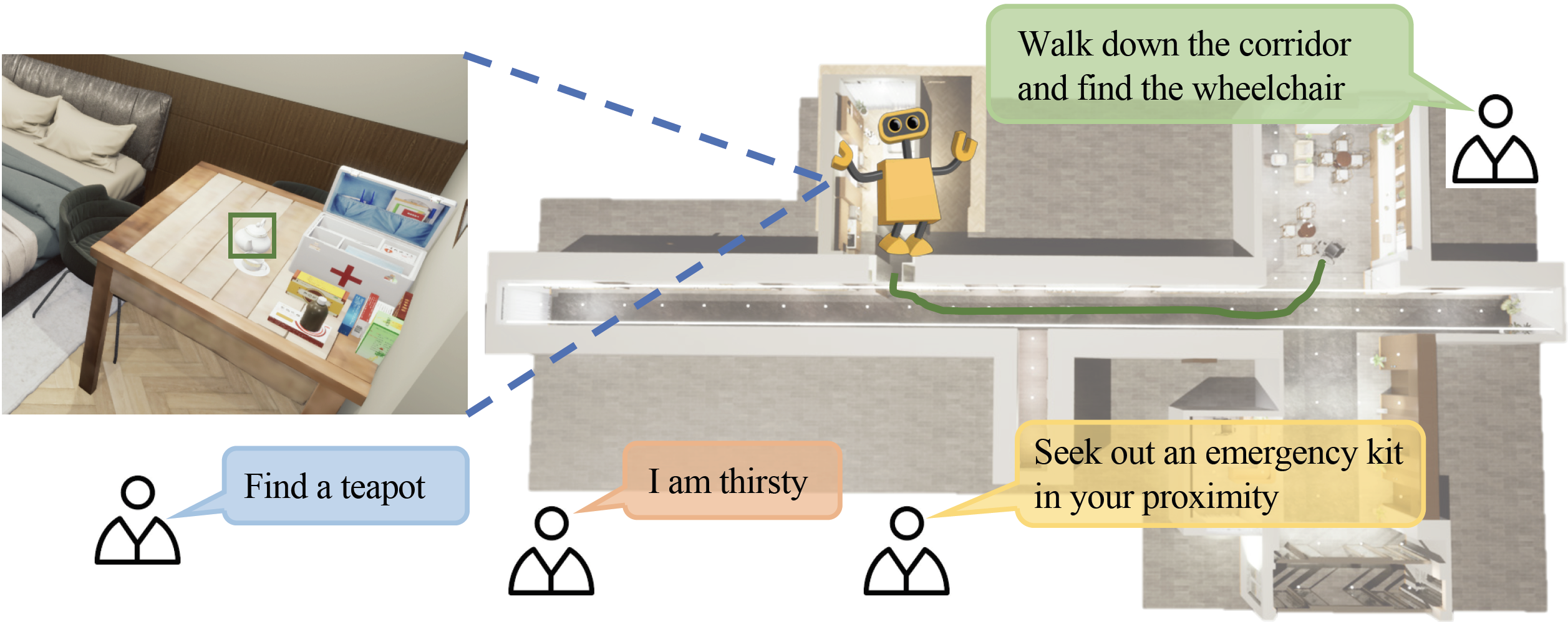

- goal-conditioned navigation given a specific object category (e.g., "fork");

- goal-conditioned navigation given simple instructions (e.g., "Search for and move towards a tennis ball");

- step-by-step instructions following;

- finding abstract objects based on high-level instruction (e.g., "I am thirsty").

MO-VLN provides four tasks: 1) goal-conditioned navigation given a specific object category (e.g., "fork"); 2) goal-conditioned navigation given simple instructions (e.g., "Search for and move towards a tennis ball"); 3) step-by-step instruction following; 4) finding abstract objects based on high-level instructions (e.g., "I am thirsty"). The earlier version of our simulator covers three high-quality scenes: cafe, restaurant, and nursing house.
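The four tasks differ mainly in how the goal is specified, from a bare category name to an abstract need. As a minimal sketch, assuming a simple enum is enough to tag episodes by task, they could be represented as below; `MOVLNTask`, `EXAMPLES`, and `describe` are hypothetical names, not identifiers from this codebase, and the example inputs are the ones quoted above.

```python
from enum import Enum


class MOVLNTask(Enum):
    # Illustrative labels for the four tasks; not names used by the repository.
    OBJECT_NAV = "goal-conditioned navigation given an object category"
    SIMPLE_INSTRUCTION = "goal-conditioned navigation given a simple instruction"
    STEP_BY_STEP = "step-by-step instruction following"
    ABSTRACT_OBJECT = "finding abstract objects from a high-level instruction"


# Example goal specifications taken from the task descriptions.
EXAMPLES = {
    MOVLNTask.OBJECT_NAV: "fork",
    MOVLNTask.SIMPLE_INSTRUCTION: "Search for and move towards a tennis ball",
    MOVLNTask.ABSTRACT_OBJECT: "I am thirsty",
}


def describe(task: MOVLNTask, instruction: str) -> str:
    """Format a one-line summary of an episode's task and goal input."""
    return f"[{task.name}] {task.value}: {instruction!r}"
```

For instance, `describe(MOVLNTask.ABSTRACT_OBJECT, EXAMPLES[MOVLNTask.ABSTRACT_OBJECT])` labels the "I am thirsty" goal with its task.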

- Install the simulator by following the instructions here.

- Install GLIP.

- Install Grounded-SAM.

Clone the repository and install the other requirements:

```bash
git clone https://github.com/liangcici/MO-VLN.git
cd MO-VLN/
pip install -r requirements.txt
```
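After installing the dependencies, a quick import check can confirm the environment is ready before running anything heavier. This is a minimal sketch: the module names `maskrcnn_benchmark` (for GLIP) and `groundingdino` and `segment_anything` (for Grounded-SAM) are assumptions about how those projects install themselves and may differ in your setup.

```python
import importlib.util

# Assumed top-level module names; adjust to match your actual installation.
REQUIRED_MODULES = ["torch", "maskrcnn_benchmark", "groundingdino", "segment_anything"]


def check_modules(names):
    """Return a dict mapping each top-level module name to whether it is importable."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_modules(REQUIRED_MODULES).items():
        print(f"{name:20s} {'ok' if ok else 'MISSING'}")
```

`find_spec` only locates the module without importing it, so the check is fast and does not trigger CUDA initialization.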

- Download the original datasets from here.

- Generate data for ObjectNav (goal-conditioned navigation given a specific object category):

  ```bash
  python data_preprocess/gen_objectnav.py --map_id 3
  ```

`map_id` selects the scene: 3 = Starbucks, 4 = TG, 5 = NursingRoom.
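When scripting runs across several scenes, validating `map_id` up front avoids launching a job with an unsupported scene. The sketch below assumes only the three IDs listed above; `SCENES` and `parse_args` are illustrative helpers, not part of the repository.

```python
import argparse

# Scene IDs as documented in this README.
SCENES = {3: "Starbucks", 4: "TG", 5: "NursingRoom"}


def parse_args(argv=None):
    """Parse --map_id and reject any value that is not a known scene."""
    parser = argparse.ArgumentParser(description="Select an MO-VLN scene by map_id")
    parser.add_argument(
        "--map_id",
        type=int,
        choices=sorted(SCENES),
        required=True,
        help="3 = Starbucks, 4 = TG, 5 = NursingRoom",
    )
    return parser.parse_args(argv)
```

For example, `SCENES[parse_args(["--map_id", "5"]).map_id]` gives `"NursingRoom"`, while `--map_id 7` makes argparse print a usage error and exit.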

The released implementation uses frontier-based exploration (FBE). The commonsense-knowledge exploration described in our paper is based on ESC, which we are not permitted to release. The files `dataset/objectnav/*.npy` contain knowledge extracted from LLMs and can be used to reproduce exploration with commonsense knowledge.
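Frontier-based exploration repeatedly drives the agent toward the boundary between explored free space and unexplored space. The sketch below shows that core step on a 2D occupancy grid. It is a generic illustration of FBE, not this repository's implementation; the grid encoding (`-1` unknown, `0` free, `1` occupied), 4-connectivity, and nearest-by-BFS goal selection are all assumptions.

```python
from collections import deque

UNKNOWN, FREE, OCCUPIED = -1, 0, 1
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def find_frontiers(grid):
    """Return free cells that have at least one unknown 4-neighbour, in row-major order."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def nearest_frontier(grid, start):
    """Breadth-first search through free cells; return the closest frontier cell or None."""
    frontier_set = set(find_frontiers(grid))
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    seen = {start}
    while queue:
        cell = queue.popleft()
        if cell in frontier_set:
            return cell
        r, c = cell
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in seen and grid[nr][nc] == FREE:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return None  # no reachable frontier: exploration is finished
```

For example, on the grid `[[0, 0, 0, -1], [0, 1, 0, -1], [0, 0, 0, 0]]` starting from `(0, 0)`, the frontiers are `(0, 2)`, `(1, 2)` and `(2, 3)`, and BFS selects `(0, 2)` as the next exploration goal. In a full agent this goal would be re-selected as the map is updated after each move.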

Run models with FBE:

- For ObjectNav:

  ```bash
  python zero_shot_eval.py --sem_seg_model_type glip --map_id 3
  ```
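To cover every scene, the two documented commands can be chained per `map_id`, assuming data generation should precede evaluation for each scene. The driver below is a hypothetical convenience script: it only uses the flags shown in this README (`--map_id`, `--sem_seg_model_type glip`), prints the commands by default, and executes them only when invoked with an extra `--run` argument, which is this sketch's own convention rather than an option of the repository.

```python
import subprocess
import sys

MAP_IDS = (3, 4, 5)  # Starbucks, TG, NursingRoom


def objectnav_commands(map_id, model_type="glip"):
    """Return the two documented commands for one scene: data generation, then FBE evaluation."""
    return [
        [sys.executable, "data_preprocess/gen_objectnav.py", "--map_id", str(map_id)],
        [sys.executable, "zero_shot_eval.py", "--sem_seg_model_type", model_type, "--map_id", str(map_id)],
    ]


def run_all(dry_run=True):
    """Print every command; when dry_run is False, also execute them in order."""
    for map_id in MAP_IDS:
        for cmd in objectnav_commands(map_id):
            print(" ".join(cmd))
            if not dry_run:
                subprocess.run(cmd, check=True)  # stop on the first failing step


if __name__ == "__main__":
    run_all(dry_run="--run" not in sys.argv)
```

Using `sys.executable` keeps both steps in the same Python environment that launched the driver.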

- The Semantic Mapping module is based on SemExp.

- Added more walker states.

- Added walker control interface.

- Provide more classes of objects that can be generated in the scenes.

- Construct complex tasks involving combined navigation and grasping.

- 10+ scenes are under construction and will be released progressively.

- Generate high-quality instruction/ground-truth pairs for the newly constructed scenes.

- Continue improving the simulator's physics to achieve more realistic dexterous-hand grasping.

- Add more interactive properties to objects in the environment, such as a coffee machine that can be operated to make coffee.

```bibtex
@article{liang2023mo,
  title={MO-VLN: A Multi-Task Benchmark for Open-set Zero-Shot Vision-and-Language Navigation},
  author={Liang, Xiwen and Ma, Liang and Guo, Shanshan and Han, Jianhua and Xu, Hang and Ma, Shikui and Liang, Xiaodan},
  journal={arXiv preprint arXiv:2306.10322},
  year={2023}
}
```