TorchFWI is an elastic full-waveform inversion (FWI) package integrated with the deep-learning framework PyTorch. This integration makes it straightforward to couple FWI with neural networks and to build complex inversion workflows. The multi-GPU-accelerated FWI component uses a boundary-saving method and offers high computational efficiency. For inversion, one can use the built-in optimizers in PyTorch or those in SciPy (e.g., L-BFGS-B).
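
To illustrate how a SciPy optimizer can drive a PyTorch-differentiated misfit, here is a minimal sketch. It does not use TorchFWI itself: the matrix `G` is a hypothetical linear stand-in for the wave simulation, and the names `misfit_and_grad`, `m_true`, and `d_obs` are assumptions made for this example only. The idea is that autograd supplies the gradient and `scipy.optimize.minimize` with `method="L-BFGS-B"` consumes it.

```python
import numpy as np
import torch
from scipy.optimize import minimize

torch.manual_seed(0)

# Hypothetical linear "forward operator" standing in for the wave simulation.
G = torch.randn(20, 5, dtype=torch.float64)
m_true = torch.tensor([2.0, 2.5, 3.0, 3.5, 4.0], dtype=torch.float64)
d_obs = G @ m_true  # synthetic "observed" data


def misfit_and_grad(x):
    """Return the L2 data misfit and its gradient as float64 NumPy values."""
    m = torch.tensor(x, dtype=torch.float64, requires_grad=True)
    loss = 0.5 * torch.sum((G @ m - d_obs) ** 2)
    loss.backward()  # autograd computes d(loss)/dm
    return loss.item(), m.grad.numpy().copy()


x0 = np.full(5, 3.0)  # initial model
result = minimize(
    misfit_and_grad,
    x0,
    jac=True,  # the objective returns (value, gradient)
    method="L-BFGS-B",
    bounds=[(1.0, 5.0)] * 5,  # box constraints on the model parameters
)
m_inverted = result.x
print(m_inverted)
```

SciPy's L-BFGS-B expects float64 arrays, which is why the sketch converts between NumPy and PyTorch inside the objective rather than keeping the model as a float32 tensor.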

For a quick start, go to the src directory and type

python main.py --generate_data

Then, type

python main.py

to start the inversion. To use multiple GPUs for forward simulation or inversion, add the --ngpu flag. For example,

python main.py --ngpu=4
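
If you are unsure how many GPUs are available, the following sketch asks PyTorch for the number of visible CUDA devices and builds the matching command line. The helper `inversion_command` is hypothetical and not part of this package, and the check that at least one device is present is an assumption made for this example.

```python
import torch


def inversion_command(n_gpu):
    """Build the quick-start command line for n_gpu devices (hypothetical helper)."""
    if n_gpu < 1:
        # Assumption for this sketch: at least one CUDA device is expected.
        raise ValueError("at least one CUDA device is required")
    return ["python", "main.py", f"--ngpu={n_gpu}"]


n_available = torch.cuda.device_count()  # 0 when no CUDA device is visible
if n_available > 0:
    print(" ".join(inversion_command(n_available)))
else:
    print("No CUDA device is visible to PyTorch.")
```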

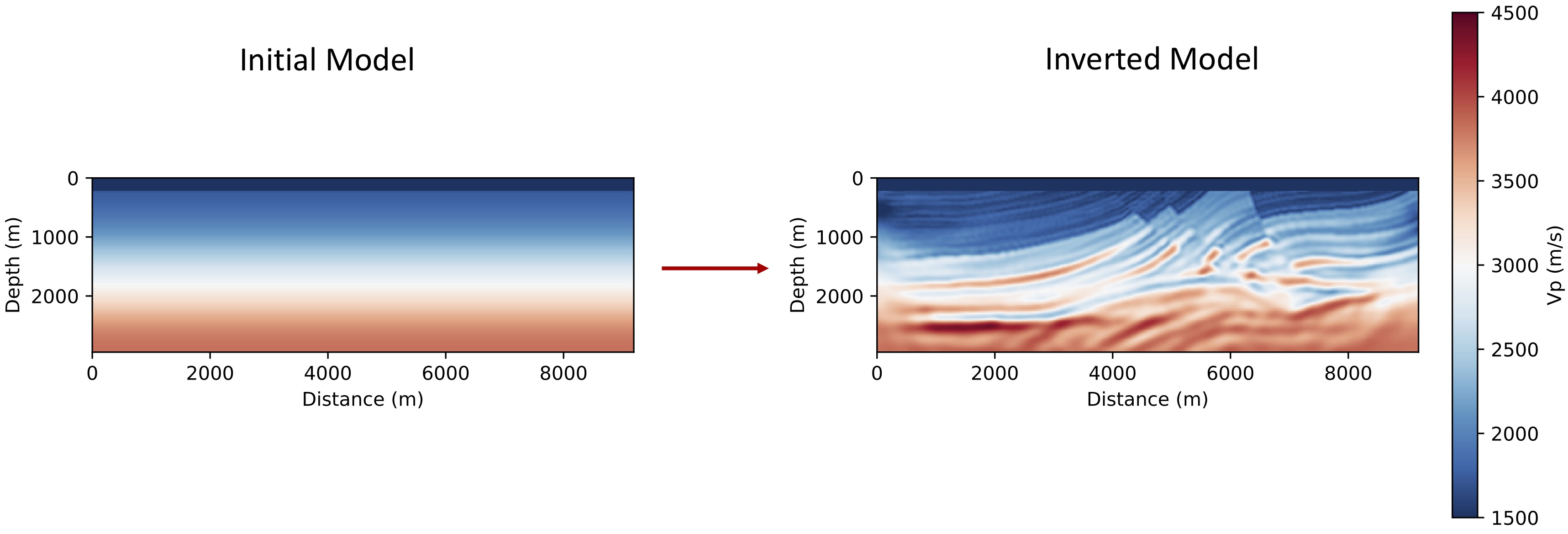

The initial model and the inverted model after 80 iterations are shown below.

This package uses just-in-time (JIT) compilation for the FWI code. The code is compiled only the first time you run it, so no explicit "make" is required. An NVIDIA CUDA compiler (nvcc) is needed.
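
Because the first run needs the compiler, it can help to confirm that `nvcc` is reachable before starting. The sketch below uses only the Python standard library. The helper names `find_nvcc` and `nvcc_version` are made up for this example and are not part of the package.

```python
import shutil
import subprocess


def find_nvcc():
    """Return the full path to nvcc if it is on PATH, otherwise None."""
    return shutil.which("nvcc")


def nvcc_version(nvcc_path):
    """Return whatever `nvcc --version` prints to standard output."""
    completed = subprocess.run(
        [nvcc_path, "--version"], capture_output=True, text=True, check=False
    )
    return completed.stdout.strip()


nvcc_path = find_nvcc()
if nvcc_path is None:
    print("nvcc was not found on PATH; install the CUDA toolkit or update PATH.")
else:
    print(nvcc_version(nvcc_path))
```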

If you find this package helpful in your research, please cite:

- Dongzhuo Li, Kailai Xu, Jerry M. Harris, and Eric Darve. Time-lapse Full-waveform Inversion for Subsurface Flow Problems with Intelligent Automatic Differentiation.

- Dongzhuo Li, Jerry M. Harris, Biondo Biondi, and Tapan Mukerji. 2019. Seismic full waveform inversion: nonlocal similarity, sparse dictionary learning, and time-lapse inversion for subsurface flow.

- Kailai Xu, Dongzhuo Li, Eric Darve, and Jerry M. Harris. Learning Hidden Dynamics using Intelligent Automatic Differentiation.

The FwiFlow package, which uses TensorFlow as the backend with additional subsurface flow inversion capabilities, is here.