pip install tensorflow-gpu==1.13.1

# If you are in China, install from the Tsinghua PyPI mirror instead

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple tensorflow-gpu==1.13.1

python lookahead_optimizer_test.py
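For orientation, the update rule that a Lookahead test would exercise can be sketched in plain Python. This is a minimal sketch of the published Lookahead rule (fast weights take `k` inner steps, then the slow weights move a fraction `alpha` toward them), not the code in this repository: the names `lookahead_step` and `minimize`, the plain-SGD inner optimizer, and the default values are all assumptions made for illustration.

```python
def lookahead_step(slow, fast, alpha=0.5):
    """Move the slow weights a fraction alpha toward the fast weights,
    then reset the fast weights to the new slow weights."""
    new_slow = [s + alpha * (f - s) for s, f in zip(slow, fast)]
    return new_slow, list(new_slow)


def minimize(grad_fn, weights, lr=0.1, k=5, alpha=0.5, steps=20):
    """Run plain SGD as the inner (fast) optimizer and apply a
    Lookahead synchronization every k steps (hypothetical helper)."""
    slow = list(weights)
    fast = list(weights)
    for step in range(1, steps + 1):
        grads = grad_fn(fast)
        fast = [w - lr * g for w, g in zip(fast, grads)]
        if step % k == 0:
            slow, fast = lookahead_step(slow, fast, alpha)
    return slow


if __name__ == "__main__":
    # Minimize f(w) = w^2, whose gradient is 2w.
    print(minimize(lambda w: [2.0 * x for x in w], [1.0]))
```

The slow weights change only once every `k` steps, which is why the wrapper can sit on top of any inner optimizer, RAdam included.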

python radam_optimizer_test.py
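What distinguishes RAdam from Adam is a variance rectification term applied to the adaptive step. The sketch below computes that term as defined in the RAdam paper, under the assumption that the threshold is `rho_t > 4`; the implementation in this repository may use a different threshold or structure, and the function name `radam_rectification` is hypothetical.

```python
import math


def radam_rectification(step, beta2=0.999):
    """Return the RAdam rectification factor r_t for a 1-based step,
    or None while the variance estimate is not yet tractable
    (in that case RAdam falls back to an unadapted momentum update)."""
    rho_inf = 2.0 / (1.0 - beta2) - 1.0
    beta2_t = beta2 ** step
    rho_t = rho_inf - 2.0 * step * beta2_t / (1.0 - beta2_t)
    if rho_t <= 4.0:
        return None
    numerator = (rho_t - 4.0) * (rho_t - 2.0) * rho_inf
    denominator = (rho_inf - 4.0) * (rho_inf - 2.0) * rho_t
    return math.sqrt(numerator / denominator)


if __name__ == "__main__":
    for step in (1, 10, 100, 1000, 10000):
        print(step, radam_rectification(step))
```

The factor is absent for the first few steps and then grows toward 1, which is how RAdam gets a warmup-like effect without a hand-tuned warmup schedule.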

pip install tensorflow_datasets

# If you are in China, install from the Tsinghua PyPI mirror instead

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple tensorflow_datasets

dir=exp/cifar10

mkdir -p $dir

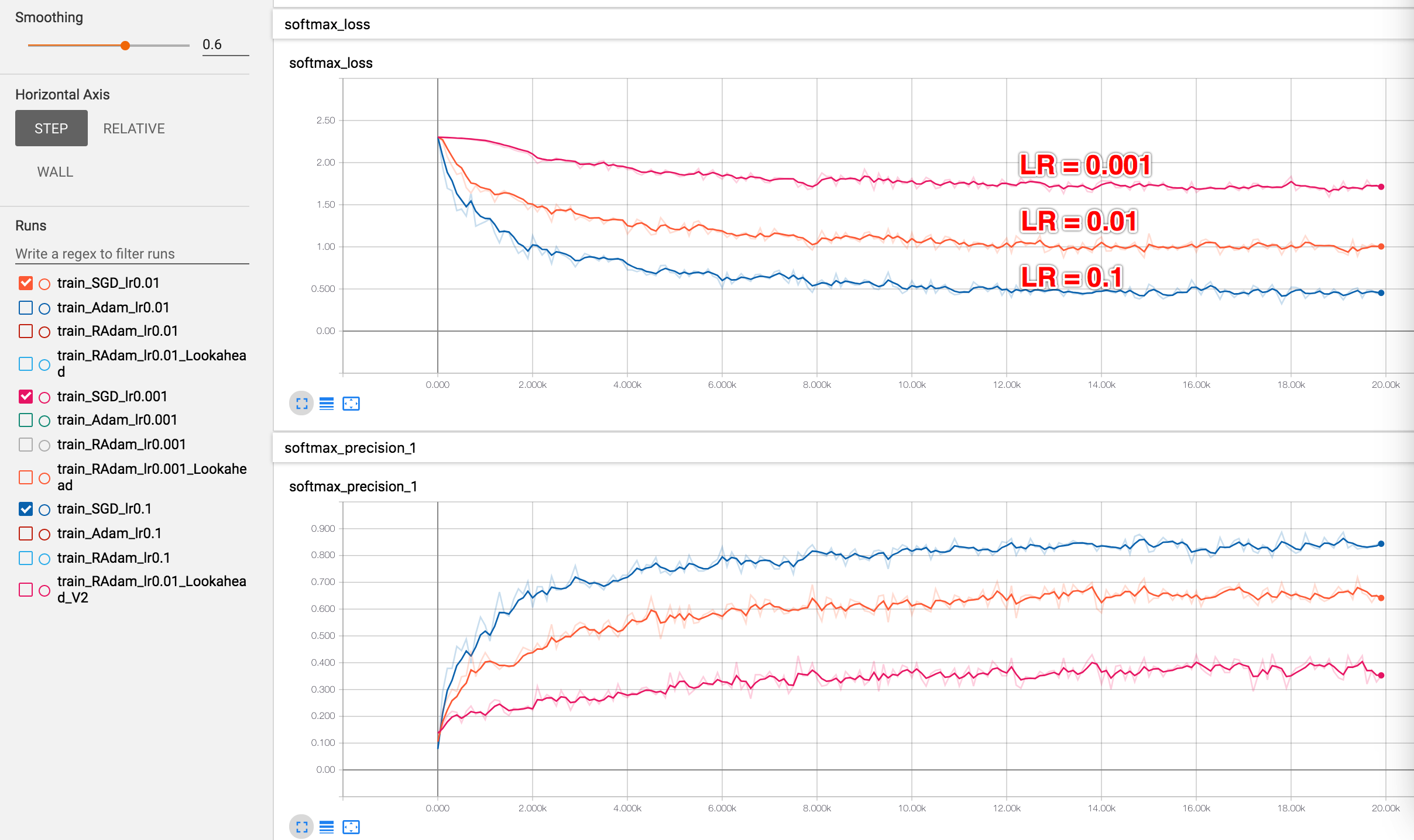

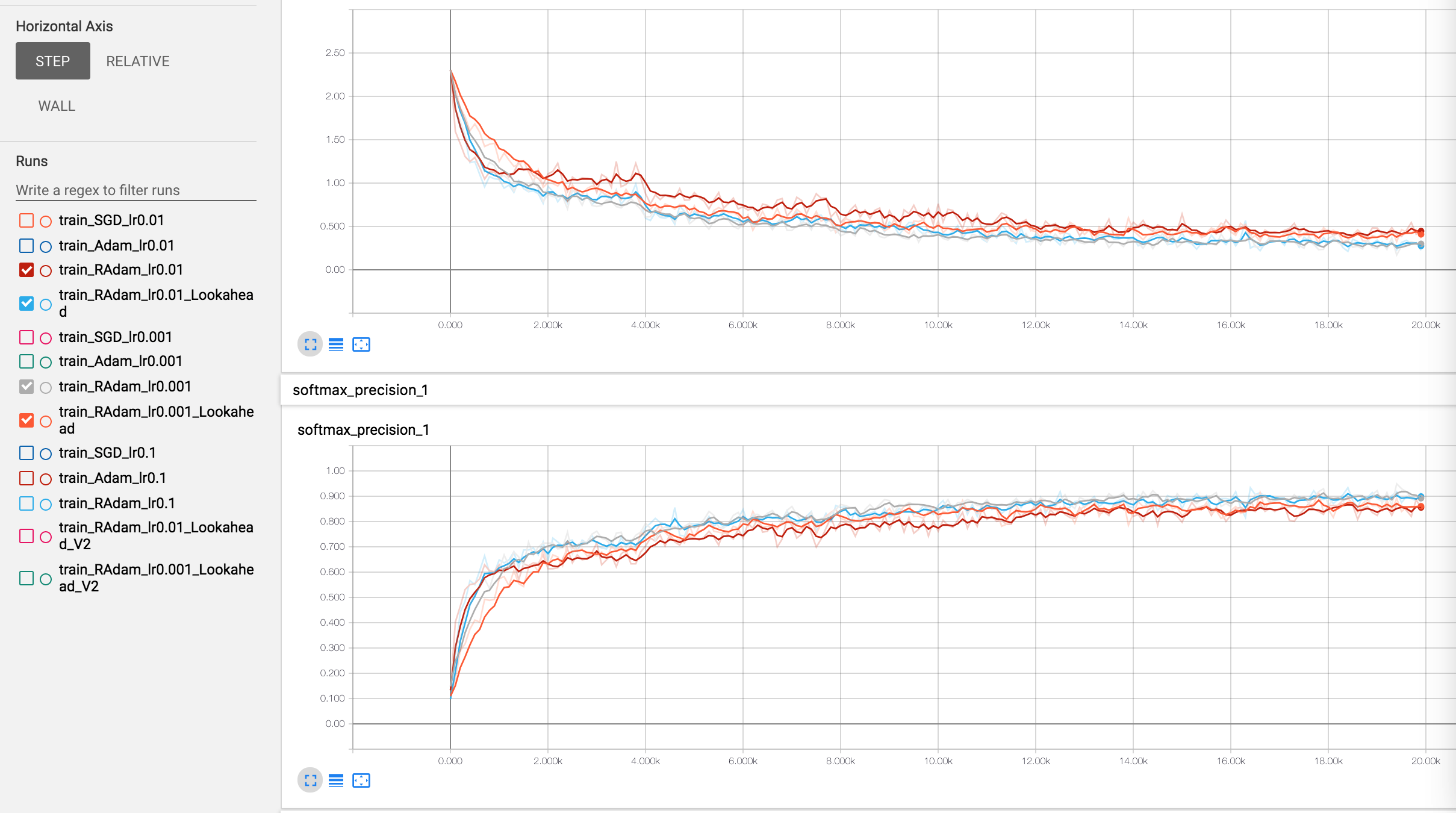

for lr in "0.01" "0.001" "0.1";do

python cifar10_train.py --learning_rate $lr --optimizer sgd --train_dir $dir/train_SGD_lr${lr} || exit 1

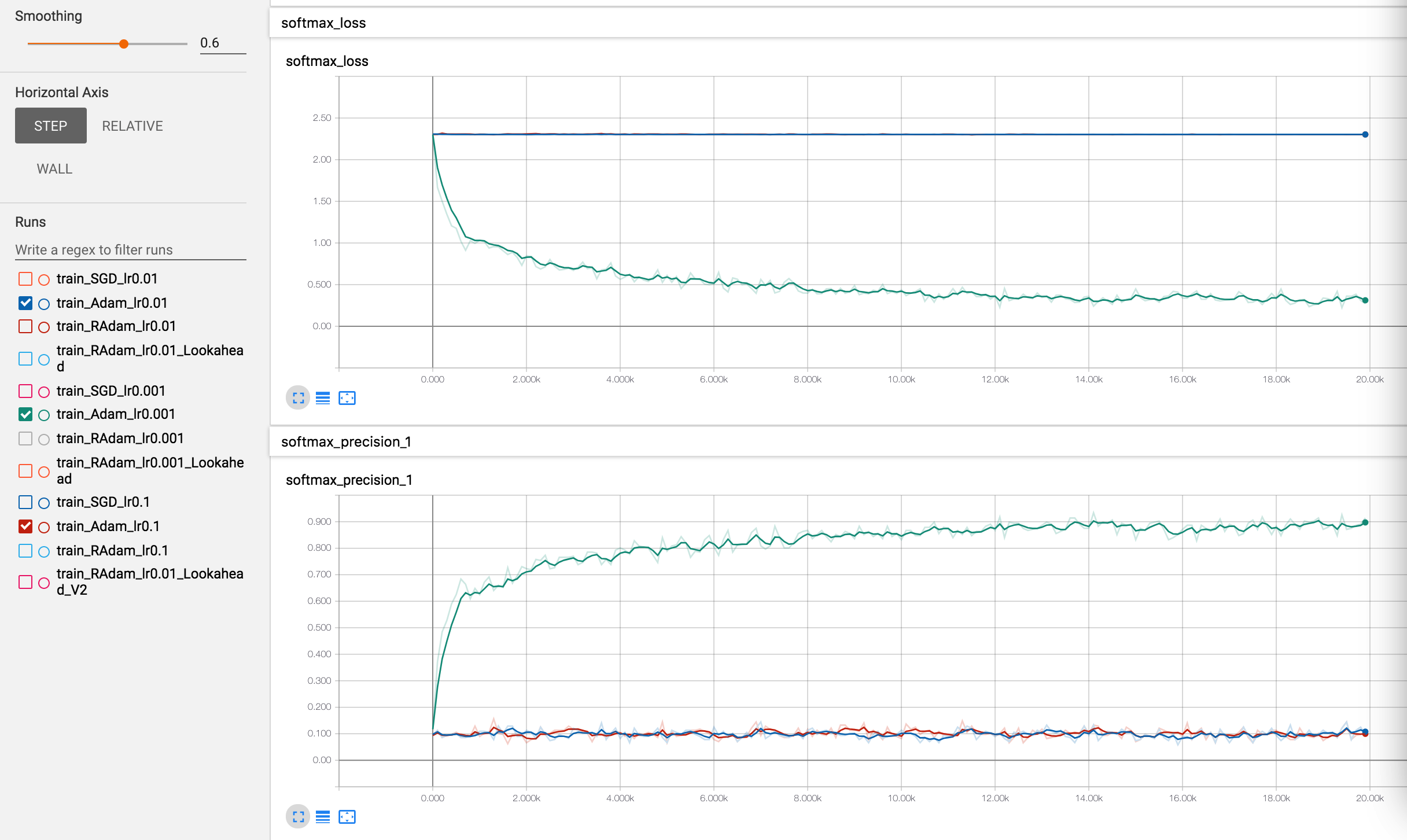

python cifar10_train.py --learning_rate $lr --optimizer adam --train_dir $dir/train_Adam_lr${lr}

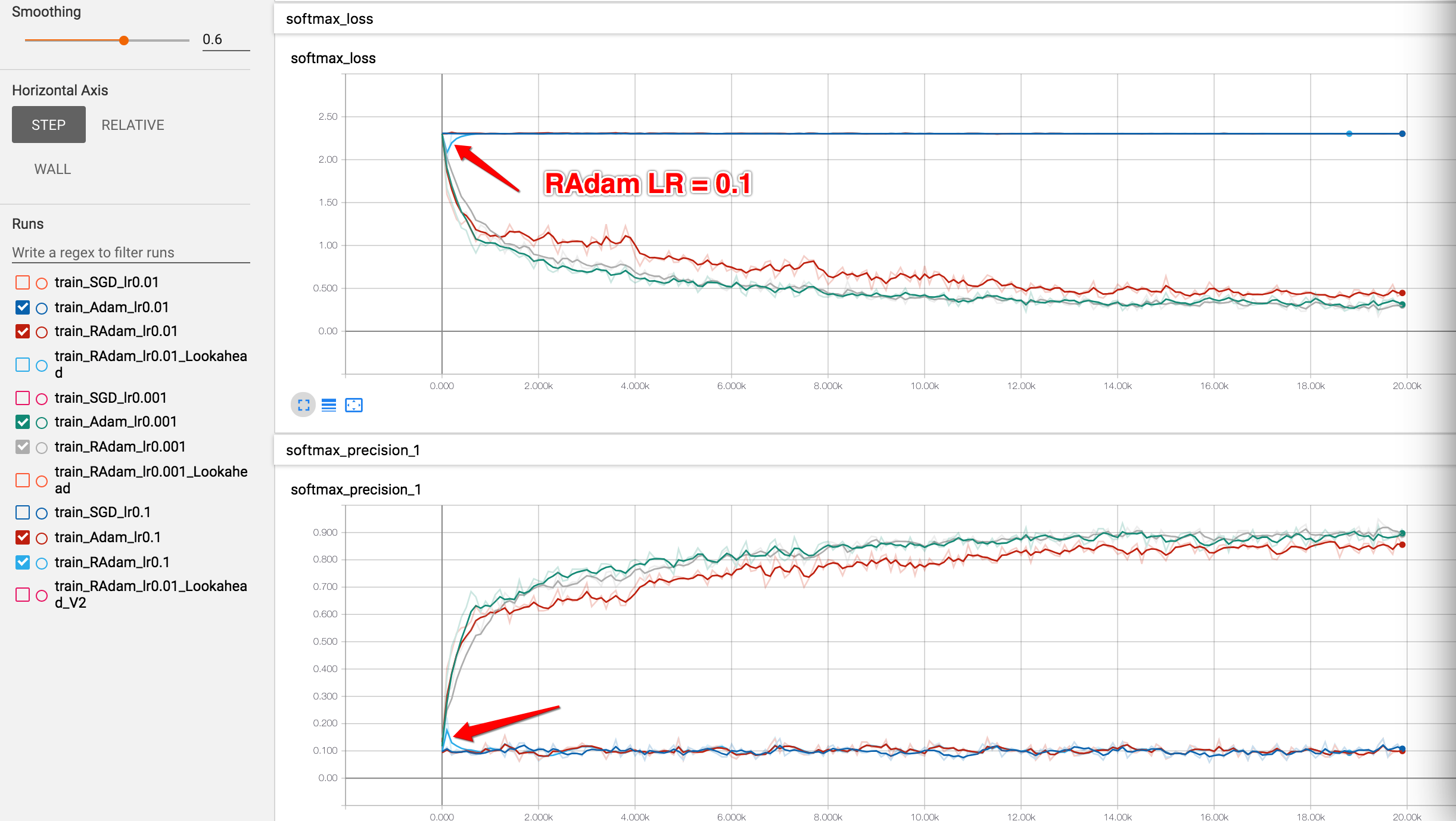

python cifar10_train.py --learning_rate $lr --optimizer radam --train_dir $dir/train_RAdam_lr${lr}

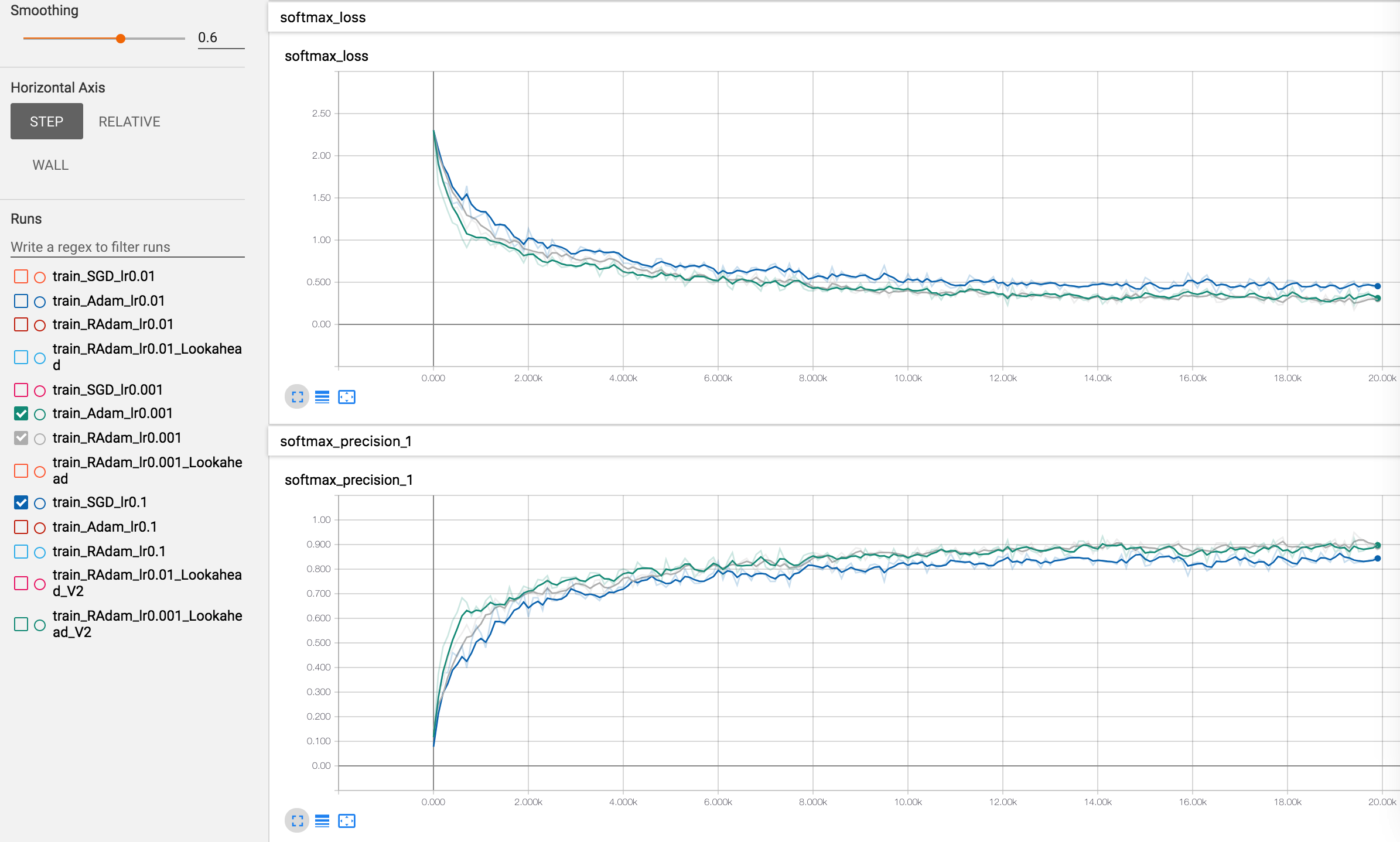

python cifar10_train.py --learning_rate $lr --optimizer lookaheadradam --train_dir $dir/train_RAdam_lr${lr}_Lookahead

done
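The sweep above launches 12 runs (3 learning rates × 4 optimizers), each writing to its own training directory. As a rough aid for locating those directories afterwards, the following sketch rebuilds the same command list in Python without running anything. The script name, flags, and directory patterns are copied from the loop; the function name `sweep_commands` is hypothetical.

```python
def sweep_commands(base_dir="exp/cifar10",
                   learning_rates=("0.01", "0.001", "0.1")):
    """Rebuild the argument lists used by the shell loop.
    Nothing is executed; the commands are only returned."""
    runs = [
        ("sgd", "train_SGD_lr{lr}"),
        ("adam", "train_Adam_lr{lr}"),
        ("radam", "train_RAdam_lr{lr}"),
        ("lookaheadradam", "train_RAdam_lr{lr}_Lookahead"),
    ]
    commands = []
    for lr in learning_rates:
        for optimizer, pattern in runs:
            train_dir = f"{base_dir}/{pattern.format(lr=lr)}"
            commands.append([
                "python", "cifar10_train.py",
                "--learning_rate", lr,
                "--optimizer", optimizer,
                "--train_dir", train_dir,
            ])
    return commands


if __name__ == "__main__":
    for cmd in sweep_commands():
        print(" ".join(cmd))
```

Printing the list first is a cheap way to check the directory names before committing GPU time to the full sweep.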