This is the implementation for the CVPR 2023 paper:

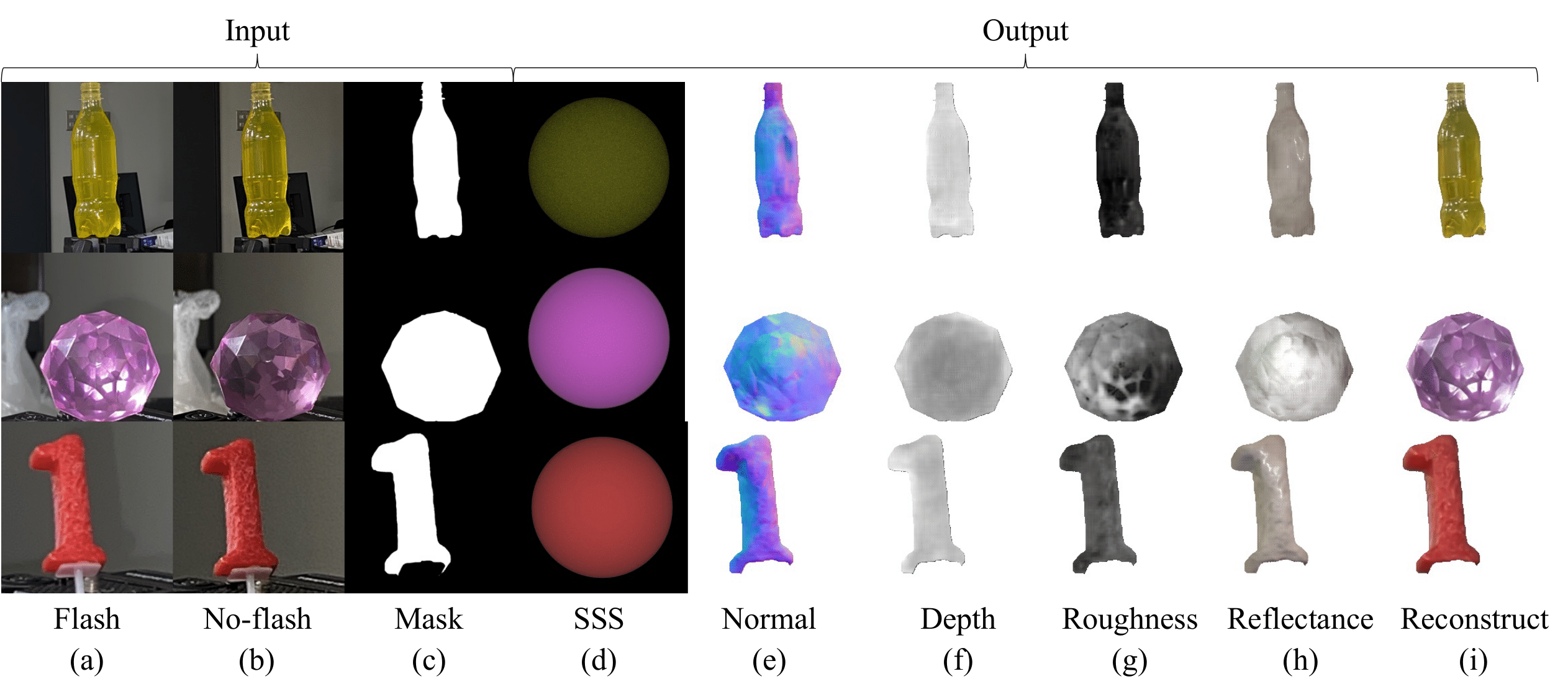

"Inverse Rendering of Translucent Objects using Physical and Neural Renderers"

By Chenhao Li, Trung Thanh Ngo, Hajime Nagahara

Project page | arXiv | Dataset | Video

- Linux

- NVIDIA GPU with CUDA and cuDNN

- Python 3

- torch

- torchvision

- dominate

- visdom

- pandas

- scipy

- pillow
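A quick way to confirm the environment before running any of the scripts is sketched below. It assumes the packages listed above use their usual import names (pillow is imported as PIL). The helper names `find_missing` and `cuda_available` are illustrative and are not part of this repository.

```python
import importlib.util

# Import names for the packages listed above. pillow is imported as "PIL";
# the other import names match the package names.
REQUIRED_MODULES = ["torch", "torchvision", "dominate", "visdom", "pandas", "scipy", "PIL"]


def find_missing(modules=REQUIRED_MODULES):
    """Return the names in `modules` that cannot be found in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


def cuda_available():
    """Return True if torch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch  # imported lazily so the check also runs without torch
    return torch.cuda.is_available()


if __name__ == "__main__":
    missing = find_missing()
    print("Missing packages:", ", ".join(missing) if missing else "none")
    print("CUDA available:", cuda_available())
```

If any package is reported missing, install it before running the training or test commands. If CUDA is unavailable, the multi-GPU `--gpu_ids` setting used for training will likely need to be changed.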

python ./inference_real.py --dataroot "./datasets/real" --dataset_mode "real" --name "edit_twoshot" --model "edit_twoshot" --eval

- Download the dataset.

- Unzip it to ./datasets/

- To view training results and loss plots, run

python -m visdom.server

and click the URL http://localhost:8097.

- Train the model (change gpu_ids according to your device)

python ./train.py --dataroot "./datasets/translucent" --name "edit_twoshot" --model "edit_twoshot" --gpu_ids 0,1,2,3

python ./test.py --dataroot "./datasets/translucent" --name "edit_twoshot" --model "edit_twoshot" --eval

bash ./scripts.sh

@inproceedings{li2023inverse,
  title={Inverse Rendering of Translucent Objects using Physical and Neural Renderers},
  author={Li, Chenhao and Ngo, Trung Thanh and Nagahara, Hajime},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={12510--12520},
  year={2023}
}

Code derived and modified from: