This is a PyTorch implementation of 6-DoF GraspNet. The original TensorFlow implementation can be found at https://github.com/NVlabs/6dof-graspnet.

The source code is released under the MIT License, and the trained weights are released under CC-BY-NC-SA 2.0.

This code has been tested with Python 3.6, PyTorch 1.4, and CUDA 10.0 on Ubuntu 18.04. To install, first install PyTorch:

pip3 install torch==1.4.0+cu100 torchvision==0.5.0+cu100 -f https://download.pytorch.org/whl/torch_stable.html

Clone this repository:

git clone git@github.com:jsll/pytorch_6dof-graspnet.git

Clone PointNet++:

git clone git@github.com:erikwijmans/Pointnet2_PyTorch.git

Install the PointNet++ requirements:

cd Pointnet2_PyTorch && pip3 install -r requirements.txt

Change into this repository's directory:

cd pytorch_6dof-graspnet

Install the necessary Python libraries:

pip3 install -r requirements.txt

(Optional) Download the trained models, either by running

sh checkpoints/download_models.sh

or manually from here. The trained models are released under CC-BY-NC-SA 2.0.
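Once the installation steps above are done, the local setup can be compared with the tested versions. The following is a minimal sketch, not part of this repository: the function name `check_environment` and the report keys are illustrative assumptions, and a mismatch does not necessarily mean the code will fail.

```python
import importlib.util
import sys

# Versions the README reports as tested; the repository itself does not enforce them.
TESTED_PYTHON = (3, 6)
TESTED_TORCH_PREFIX = "1.4"


def check_environment():
    """Return a small report comparing the local setup with the tested versions."""
    report = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "python_matches": sys.version_info[:2] == TESTED_PYTHON,
        "torch": None,
        "torch_matches": False,
        "cuda_available": False,
    }
    # Import torch only if it is installed, so the check also runs before installation.
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["torch"] = str(torch.__version__)
        report["torch_matches"] = report["torch"].startswith(TESTED_TORCH_PREFIX)
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```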

The pre-trained models released in this repo were retrained from scratch; they are not converted from the original TensorFlow models at https://github.com/NVlabs/6dof-graspnet. I tried to convert the TensorFlow models but had no luck. Although I trained the new models for a substantial amount of time on the same training data, I cannot guarantee that their performance matches the original work.

In the paper, the authors only used gradient-based refinement. Recently, they released a Metropolis-Hastings sampling method which they found to give better results in shorter time. As a result, I keep the Metropolis-Hastings sampling as the default for the demo.
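To illustrate the idea behind sampling-based refinement, here is a minimal Metropolis-Hastings sketch in plain Python. It rests on loud assumptions: the pose is a flat list of six numbers rather than a proper rigid transform, `toy_success` is a hypothetical stand-in for the learned grasp evaluator, and the step size and step count are arbitrary. It is not the code used by the demo.

```python
import math
import random


def toy_success(pose, target):
    """Hypothetical stand-in for the grasp evaluator: a score in (0, 1]."""
    sq_dist = sum((p - t) ** 2 for p, t in zip(pose, target))
    return math.exp(-sq_dist)


def metropolis_hastings_refine(pose, score_fn, steps=50, step_size=0.05, rng=None):
    """Refine a pose by sampling; return (final_pose, best_pose, best_score)."""
    rng = rng or random.Random(0)
    current = list(pose)
    current_score = score_fn(current)
    best, best_score = list(current), current_score
    for _ in range(steps):
        # Symmetric Gaussian proposal around the current pose.
        proposal = [p + rng.gauss(0.0, step_size) for p in current]
        proposal_score = score_fn(proposal)
        # Accept with probability min(1, new / max(old, 1e-4));
        # the small floor only guards against division by zero.
        ratio = proposal_score / max(current_score, 1e-4)
        if rng.random() <= ratio:
            current, current_score = proposal, proposal_score
            if current_score > best_score:
                best, best_score = list(current), current_score
    return current, best, best_score


if __name__ == "__main__":
    target = [0.0] * 6
    start = [0.5] * 6
    _, _, score = metropolis_hastings_refine(start, lambda p: toy_success(p, target))
    print("start score:", toy_success(start, target), "best score:", score)
```

Unlike gradient-based refinement, this loop only needs score evaluations, not gradients of the evaluator; worse proposals are still accepted occasionally, which lets the chain leave poor local optima.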

This repository also includes an improved grasp sampling network which was proposed here https://github.com/NVlabs/6dof-graspnet. The new grasp sampling network is trained with Implicit Maximum Likelihood Estimation.
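The core of Implicit Maximum Likelihood Estimation can be sketched as a loss: generate several samples, then pull the nearest sample toward each data point. The snippet below is a dependency-free illustration under assumptions; `imle_loss`, the toy generator, and the two-dimensional data are hypothetical and unrelated to the network or training code in this repository. A real training loop would minimize this quantity with respect to the generator's parameters.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def imle_loss(data, generator, latent_dim=2, num_samples=16, rng=None):
    """Mean squared distance from each data point to its nearest generated sample."""
    rng = rng or random.Random(0)
    # Draw latent codes and push them through the generator.
    latents = [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)] for _ in range(num_samples)]
    samples = [generator(z) for z in latents]
    # For every data point keep only the closest sample; IMLE minimizes these distances.
    nearest = [min(squared_distance(x, s) for s in samples) for x in data]
    return sum(nearest) / len(nearest)


if __name__ == "__main__":
    data = [[0.0, 0.0], [1.0, 1.0]]
    toy_generator = lambda z: [0.5 * z[0], 0.5 * z[1]]  # hypothetical generator
    print("IMLE loss:", imle_loss(data, toy_generator))
```

Because every data point is matched to some sample, the objective penalizes a generator that ignores parts of the data, which is the usual motivation for using it in place of an adversarial loss.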

I have since uploaded new models that were trained for longer, until the test loss flattened. They can be downloaded in the same way as described in the optional model download step above.

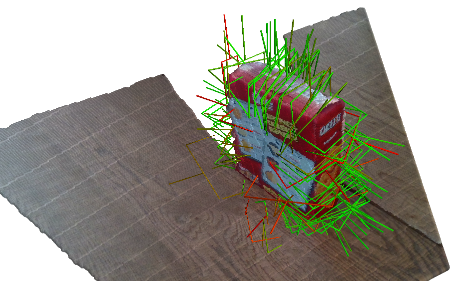

Run the demo using the command below:

python -m demo.main

By default, the demo script runs the GAN sampler with sampling-based refinement. To use the VAE sampler and/or gradient refinement, run:

python -m demo.main --grasp_sampler_folder checkpoints/vae_pretrained/ --refinement_method gradient

- Download the meshes with the IDs listed in shapenet_ids.txt from https://www.shapenet.org/. Some of the objects are in ShapeNetCore and some are in ShapeNetSem.

- Clone and build: https://github.com/hjwdzh/Manifold

- Create a watertight mesh version assuming the object path is model.obj:

manifold model.obj temp.watertight.obj -s

- Simplify it:

simplify -i temp.watertight.obj -o model.obj -m -r 0.02
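When many meshes need processing, the two commands above can be generated per file. This is a minimal sketch: `watertight_commands` is a hypothetical helper, and it assumes the `manifold` and `simplify` binaries built from the Manifold repository are on the `PATH`. It only builds the argument lists; running them is left to the caller.

```python
from pathlib import Path


def watertight_commands(obj_path, ratio=0.02):
    """Return the manifold and simplify argument lists for one mesh."""
    obj_path = Path(obj_path)
    # The intermediate watertight mesh is written next to the input file.
    temp_path = obj_path.with_name("temp.watertight.obj")
    make_watertight = ["manifold", str(obj_path), str(temp_path), "-s"]
    # The simplified mesh overwrites the original path, as in the commands above.
    simplify = ["simplify", "-i", str(temp_path), "-o", str(obj_path), "-m", "-r", str(ratio)]
    return [make_watertight, simplify]


if __name__ == "__main__":
    for command in watertight_commands("model.obj"):
        print(" ".join(command))
```

Each returned list can be passed to `subprocess.run(command, check=True)` so that a failed step stops the pipeline before the original mesh is overwritten.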

The dataset can be downloaded from here. The dataset has 3 folders:

- grasps folder: contains all the grasps for each object.
- meshes folder: has a folder for each category of meshes used. Except for cylinder and box, these folders are empty and need to be populated with the meshes downloaded from ShapeNet.
- splits folder: contains the train/test split for each of the categories.
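Before training, it can help to confirm that the dataset root has the layout described above and to see which mesh folders still need to be populated. The helpers below are a hypothetical sketch based only on the three folder names listed here; they are not part of the repository.

```python
from pathlib import Path

# Top-level folders described above.
EXPECTED_FOLDERS = ("grasps", "meshes", "splits")


def missing_dataset_folders(dataset_root):
    """Return the expected top-level folders that are absent under dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_FOLDERS if not (root / name).is_dir()]


def empty_mesh_folders(dataset_root):
    """Return the names of mesh category folders that contain no entries yet."""
    meshes = Path(dataset_root) / "meshes"
    if not meshes.is_dir():
        return []
    return sorted(p.name for p in meshes.iterdir() if p.is_dir() and not any(p.iterdir()))


if __name__ == "__main__":
    root = "path/to/dataset"  # placeholder: replace with the downloaded dataset path
    print("missing folders:", missing_dataset_folders(root))
    print("mesh folders still empty:", empty_mesh_folders(root))
```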

Verify the dataset by running python grasp_data_reader.py to visualize the evaluator data and python grasp_data_reader.py --vae-mode to visualize only the positive grasps.

To train the grasp sampler (vae or gan) or the evaluator with bare minimum configurations run:

python3 train.py --arch {vae,gan,evaluator} --dataset_root_folder $DATASET_ROOT_FOLDER

where $DATASET_ROOT_FOLDER is the path to the dataset you downloaded.
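To train all three networks in sequence, the minimal command can be generated per architecture. This sketch uses only the two flags shown above; `train_command` is a hypothetical helper, and any further options should be taken from `python3 train.py --help` rather than guessed.

```python
# Architectures accepted by --arch according to the command above.
ARCHS = ("vae", "gan", "evaluator")


def train_command(arch, dataset_root_folder):
    """Build the minimal train.py argument list for one architecture."""
    if arch not in ARCHS:
        raise ValueError(f"arch must be one of {ARCHS}, got {arch!r}")
    return ["python3", "train.py", "--arch", arch,
            "--dataset_root_folder", str(dataset_root_folder)]


if __name__ == "__main__":
    for arch in ARCHS:
        print(" ".join(train_command(arch, "$DATASET_ROOT_FOLDER")))
```

Validating the architecture name up front avoids launching a run that would fail only after the dataset has been loaded.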

To monitor the training, run tensorboard --logdir checkpoints/ and open http://localhost:6006/ in a browser.

For more training options, run:

python3 train.py --help

I have not converted the quantitative evaluation code at https://github.com/NVlabs/6dof-graspnet/blob/master/eval.py to PyTorch. I would appreciate it if someone could convert it and send in a pull request.

If you find this work useful in your research, please consider citing the original authors' work:

@inproceedings{mousavian2019graspnet,

title={6-DOF GraspNet: Variational Grasp Generation for Object Manipulation},

author={Arsalan Mousavian and Clemens Eppner and Dieter Fox},

booktitle={International Conference on Computer Vision (ICCV)},

year={2019}

}

as well as my implementation

@article{pytorch_6dof-graspnet,

Author = {Jens Lundell},

Title = {6-DOF GraspNet Pytorch},

Journal = {https://github.com/jsll/pytorch_6dof-graspnet},

Year = {2020}

}