
A TensorFlow implementation of our CVPR 2021 work on deep flash denoising.

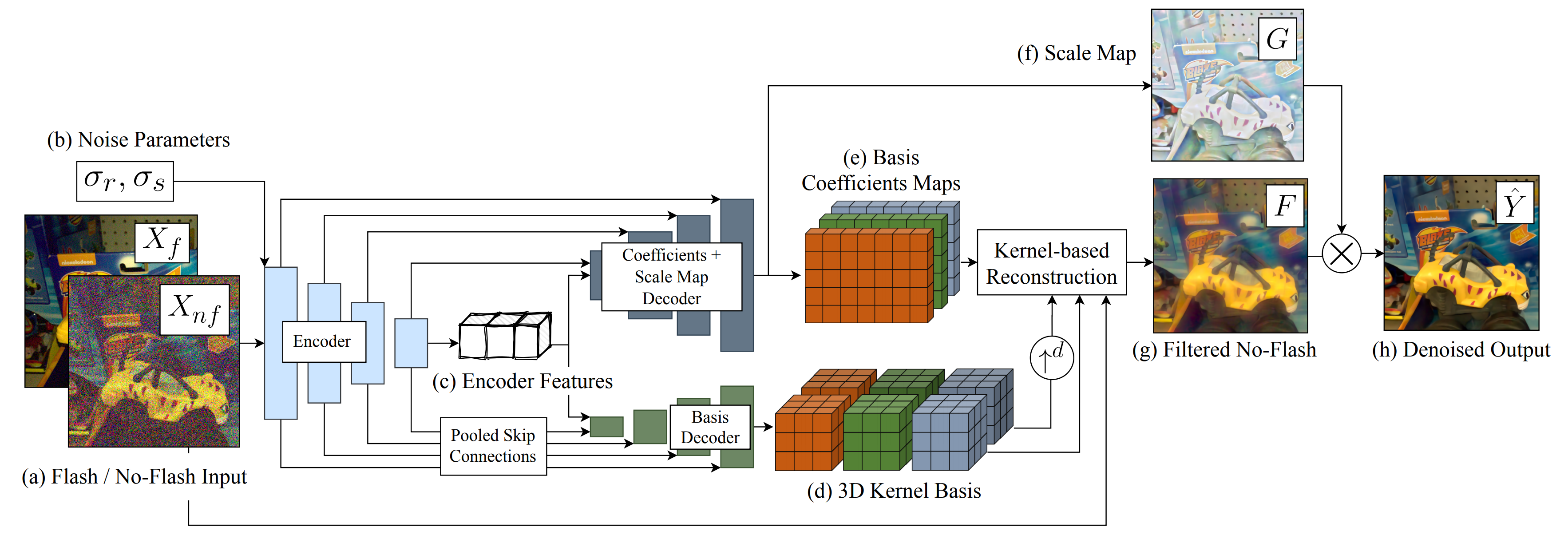

Deep Denoising of Flash and No-Flash Pairs for Photography in Low-Light Environments (CVPR 2021)

Zhihao Xia¹, Michael Gharbi², Federico Perazzi³, Kalyan Sunkavalli², Ayan Chakrabarti¹

¹WUSTL, ²Adobe Research, ³Facebook

Requirements: Python 3 and TensorFlow 1.14.

Our test set contains 128 images (filenames listed in data/test.txt), generated from the raw images of the Flash and Ambient Illuminations Dataset. The noisy and clean flash/no-flash pairs can be found here. You can also download the test set by running

bash ./scripts/download_testset.sh

Our pre-trained model for flash denoising can be found here. You can run

bash ./scripts/download_models.sh

to download it.

Run

python test.py [--wts path_to_model]

to test flash denoising with the pre-trained model on our test set.

Our model is trained on the raw images of the Flash and Ambient Illuminations Dataset. To train your own model, download the dataset and update data/train.txt and data/val.txt with the path to each image. Note that you must exclude the images used in our test set (filenames listed in data/test.txt) from both the training and validation sets.
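The exclusion step above can be scripted. The following is a minimal sketch, not part of this repository: it assumes that data/test.txt holds one filename per line, that test images can be matched by base filename, and that the train and val lists are plain text files with one image path per line. The helper names and the 10% validation fraction are hypothetical; check them against the list files shipped with the code before relying on this.

```python
import os


def read_names(list_path):
    """Return the set of base filenames listed in a text file (one entry per line)."""
    with open(list_path) as f:
        return {os.path.basename(line.strip()) for line in f if line.strip()}


def split_excluding_test(image_paths, test_names, val_fraction=0.1):
    """Drop test images, then split the remainder into (train, val) lists.

    Matching is by base filename (an assumption). The split is deterministic:
    paths are sorted and the first val_fraction of them become the val set.
    """
    kept = sorted(p for p in image_paths if os.path.basename(p) not in test_names)
    n_val = int(len(kept) * val_fraction)
    return kept[n_val:], kept[:n_val]


def write_list(paths, list_path):
    """Write one path per line."""
    with open(list_path, "w") as f:
        for p in paths:
            f.write(p + "\n")
```

A typical use would be to collect the dataset's PNG paths (for example with `glob`), call `split_excluding_test(paths, read_names("data/test.txt"))`, and write the two results to data/train.txt and data/val.txt with `write_list`.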

The raw images of the Flash and Ambient Illuminations Dataset are stored as 16-bit PNG files. Exif information, including the color matrix and calibration illuminant needed for the color mapping, is attached to the PNGs. To save this information in pickle files for later use in training and testing, run

python dump_exif.py

After that, run

python gen_valset.py

to generate a validation dataset.

Finally, run

python train.py

to train the model. You can press Ctrl-C at any time to stop training and save a checkpoint (model weights and optimizer state). When restarted, the training script resumes from the latest checkpoint (if any) in the model directory and continues training.

If you find the code useful for your research, we request that you cite the paper. Please contact zhihao.xia@wustl.edu with any questions.

@InProceedings{deepfnf2021,

author = {Zhihao Xia and Micha{\"e}l Gharbi and Federico Perazzi and Kalyan Sunkavalli and Ayan Chakrabarti},

title = {Deep Denoising of Flash and No-Flash Pairs for Photography in Low-Light Environments},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021},

pages = {2063-2072}

}

This work was supported by the National Science Foundation under award no. IIS-1820693. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors, and do not necessarily reflect the views of the National Science Foundation.

This implementation is licensed under the MIT License.