This project is based on https://github.com/confluentinc/kafka-streams-examples and implements ideas from the book Designing Event-Driven Systems (https://www.confluent.io/designing-event-driven-systems/).


The 2 hats of events:

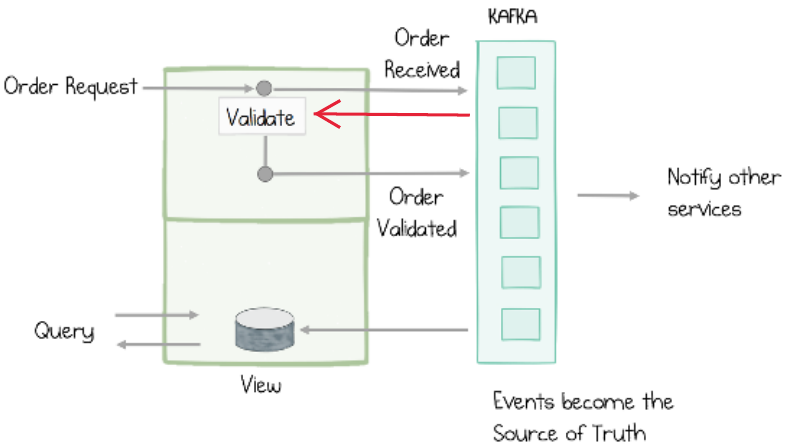

Event sourcing:

Every state change in the system is recorded as an event (similar to a Git history).

'+' avoids the object-relational mismatch

'+' provides a history of changes

'+' makes efficient use of exactly-once delivery
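The idea can be sketched in a few lines of plain Java. This is only an illustration under stated assumptions: the `OrderEvent` type, its fields, and the in-memory list standing in for a Kafka topic are hypothetical and not taken from the project. State is never stored directly; it is derived by replaying the log, which also makes historical state available.

```java
import java.util.ArrayList;
import java.util.List;

class EventSourcingSketch {
    // Hypothetical event types, for illustration only.
    enum Type { CREATED, ITEM_ADDED, ITEM_REMOVED }

    static final class OrderEvent {
        final Type type;
        final int quantity;

        OrderEvent(Type type, int quantity) {
            this.type = type;
            this.quantity = quantity;
        }
    }

    // Append-only log (stand-in for a Kafka topic): events are never updated or deleted.
    private final List<OrderEvent> log = new ArrayList<>();

    void append(OrderEvent event) {
        log.add(event);
    }

    int size() {
        return log.size();
    }

    // State is derived by folding over the first `upTo` events of the log.
    int itemCount(int upTo) {
        int count = 0;
        for (OrderEvent e : log.subList(0, upTo)) {
            switch (e.type) {
                case ITEM_ADDED:
                    count += e.quantity;
                    break;
                case ITEM_REMOVED:
                    count -= e.quantity;
                    break;
                default:
                    break; // CREATED does not change the item count
            }
        }
        return count;
    }

    public static void main(String[] args) {
        EventSourcingSketch orders = new EventSourcingSketch();
        orders.append(new OrderEvent(Type.CREATED, 0));
        orders.append(new OrderEvent(Type.ITEM_ADDED, 3));
        orders.append(new OrderEvent(Type.ITEM_REMOVED, 1));

        System.out.println(orders.itemCount(orders.size())); // current state: 2
        System.out.println(orders.itemCount(2));             // state after two events: 3
    }
}
```

Replaying only a prefix of the log is what gives the "history of changes" for free.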


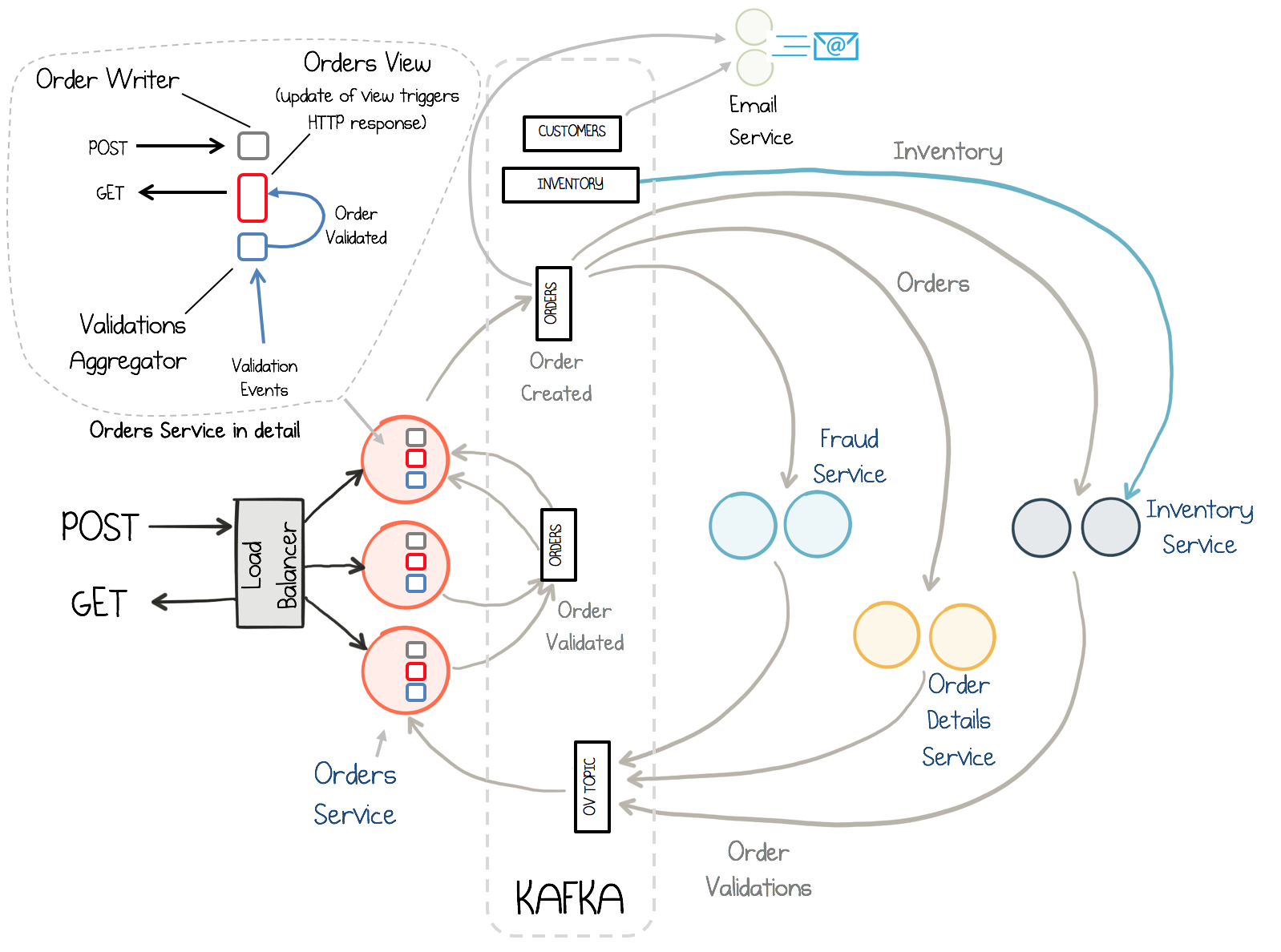

CQRS

Separate the write path from the read path.

Events can be sent via Kafka connectors to external sinks, e.g. MongoDB or Elasticsearch (https://docs.confluent.io/home/connect/self-managed/kafka_connectors.html). Thanks to this, we can read from whichever store suits a given query best. In CQRS, a single write model can push data into many read models.
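A minimal in-memory sketch of one write model feeding several read models is shown below. All names (`OrderPlaced`, `WriteModel`, `SpendByCustomer`, `OrdersById`) are hypothetical; in the project the fan-out would happen through a Kafka topic and connectors rather than direct method calls.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

class CqrsSketch {
    // Hypothetical event emitted by the write side.
    static final class OrderPlaced {
        final String orderId;
        final String customer;
        final int amount;

        OrderPlaced(String orderId, String customer, int amount) {
            this.orderId = orderId;
            this.customer = customer;
            this.amount = amount;
        }
    }

    // Write side: validates commands and publishes events; it is never queried.
    static final class WriteModel {
        private final List<Consumer<OrderPlaced>> readModels = new ArrayList<>();

        void subscribe(Consumer<OrderPlaced> readModel) {
            readModels.add(readModel);
        }

        void placeOrder(String orderId, String customer, int amount) {
            if (amount <= 0) {
                throw new IllegalArgumentException("amount must be positive");
            }
            OrderPlaced event = new OrderPlaced(orderId, customer, amount);
            for (Consumer<OrderPlaced> readModel : readModels) {
                readModel.accept(event);
            }
        }
    }

    // Read model 1: total amount per customer.
    static final class SpendByCustomer implements Consumer<OrderPlaced> {
        final Map<String, Integer> totals = new HashMap<>();

        @Override
        public void accept(OrderPlaced e) {
            totals.merge(e.customer, e.amount, Integer::sum);
        }
    }

    // Read model 2: lookup of orders by id.
    static final class OrdersById implements Consumer<OrderPlaced> {
        final Map<String, OrderPlaced> byId = new HashMap<>();

        @Override
        public void accept(OrderPlaced e) {
            byId.put(e.orderId, e);
        }
    }

    public static void main(String[] args) {
        WriteModel write = new WriteModel();
        SpendByCustomer spend = new SpendByCustomer();
        OrdersById orders = new OrdersById();
        write.subscribe(spend);
        write.subscribe(orders);

        write.placeOrder("o-1", "alice", 30);
        write.placeOrder("o-2", "bob", 20);
        write.placeOrder("o-3", "alice", 15);

        System.out.println(spend.totals.get("alice"));        // 45
        System.out.println(orders.byId.get("o-2").customer);  // bob
    }
}
```

Each read model is shaped for one kind of query, and new ones can be added without touching the write side.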


Handling errors while processing events

- DLQ (dead letter queue)

- Retry topics

- Handling poison pills

- Handling unexpected errors
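How these four cases can fit together is sketched below with in-memory queues standing in for Kafka topics. Everything here is an assumption made for illustration: the `Envelope` wrapper, the `TransientException` marker, and the retry budget of three attempts are hypothetical and not taken from the project. A record that cannot be parsed (a poison pill) goes straight to the DLQ, a transient failure goes to the retry topic until the budget is used up, and any other unexpected error is dead-lettered without a retry.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.IntUnaryOperator;

class ErrorHandlingSketch {
    static final int MAX_ATTEMPTS = 3; // assumed retry budget

    // Hypothetical marker for failures that are worth retrying.
    static final class TransientException extends RuntimeException {
        TransientException(String message) {
            super(message);
        }
    }

    // Raw payload plus the number of failed attempts so far.
    static final class Envelope {
        final String payload;
        final int attempts;

        Envelope(String payload, int attempts) {
            this.payload = payload;
            this.attempts = attempts;
        }
    }

    final Deque<Envelope> retryTopic = new ArrayDeque<>();
    final List<String> deadLetterQueue = new ArrayList<>();
    final List<Integer> results = new ArrayList<>();
    private final IntUnaryOperator processor;

    ErrorHandlingSketch(IntUnaryOperator processor) {
        this.processor = processor;
    }

    void consume(Envelope record) {
        int value;
        try {
            value = Integer.parseInt(record.payload); // stands in for deserialization
        } catch (NumberFormatException e) {
            deadLetterQueue.add(record.payload); // poison pill: retrying cannot help
            return;
        }
        try {
            results.add(processor.applyAsInt(value));
        } catch (TransientException e) {
            int attempts = record.attempts + 1;
            if (attempts < MAX_ATTEMPTS) {
                retryTopic.add(new Envelope(record.payload, attempts));
            } else {
                deadLetterQueue.add(record.payload); // retry budget exhausted
            }
        } catch (RuntimeException e) {
            deadLetterQueue.add(record.payload); // unexpected error: no retry
        }
    }

    // Re-consume records from the retry topic until it is empty.
    void drainRetries() {
        while (!retryTopic.isEmpty()) {
            consume(retryTopic.poll());
        }
    }

    public static void main(String[] args) {
        int[] failures = {0};
        ErrorHandlingSketch handler = new ErrorHandlingSketch(v -> {
            if (v == 7 && failures[0]++ < 2) throw new TransientException("try again");
            if (v == 0) throw new IllegalStateException("unexpected");
            return v * 2;
        });
        for (String raw : new String[] {"5", "oops", "7", "0"}) {
            handler.consume(new Envelope(raw, 0));
        }
        handler.drainRetries();
        System.out.println(handler.results);         // [10, 14]
        System.out.println(handler.deadLetterQueue); // [oops, 0]
    }
}
```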


Write ahead:

- write input data as soon as possible, without redundant operations (databases take a similar approach with write-ahead logs)
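A rough sketch of the write-ahead idea follows, assuming an in-memory list as a stand-in for the input topic. The class and method names are hypothetical. The accepting path only appends the raw input and returns its offset; validation and normalisation run later, reading from the log.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

class WriteAheadSketch {
    // Stand-in for an input topic: raw records in arrival order.
    private final List<String> inputLog = new ArrayList<>();
    private int nextOffset = 0; // first record not yet processed

    // Hot path: store the raw input untouched and return its offset.
    int accept(String rawInput) {
        inputLog.add(rawInput);
        return inputLog.size() - 1;
    }

    // Off the hot path: validate and normalise everything not yet processed.
    List<String> processPending() {
        List<String> validated = new ArrayList<>();
        while (nextOffset < inputLog.size()) {
            String trimmed = inputLog.get(nextOffset++).trim();
            if (!trimmed.isEmpty()) {
                validated.add(trimmed.toLowerCase(Locale.ROOT));
            }
        }
        return validated;
    }

    public static void main(String[] args) {
        WriteAheadSketch wal = new WriteAheadSketch();
        wal.accept("  Order-1 ");
        wal.accept("");
        wal.accept("ORDER-2");
        System.out.println(wal.processPending()); // [order-1, order-2]
        System.out.println(wal.processPending()); // []
    }
}
```

Because the raw input is kept, the processing step can be changed and re-run later without asking the producer to resend anything.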

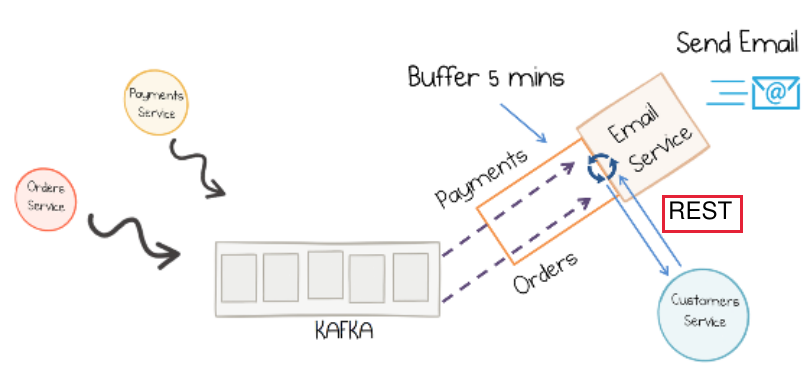

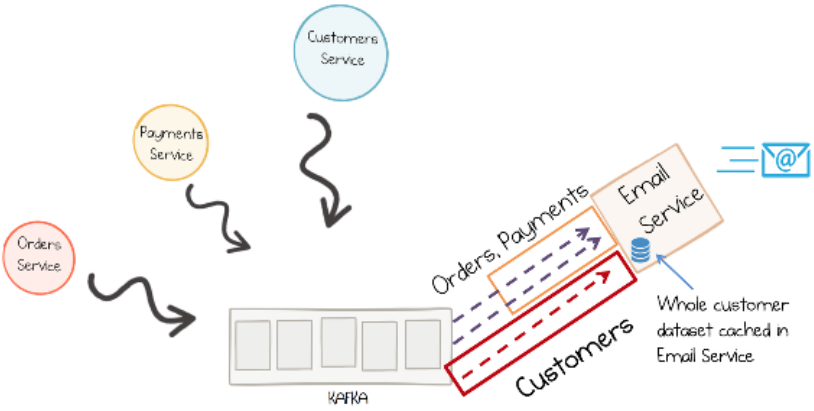

Sharing Data across services via events

The project can be deployed on k8s (see the k8s folder).

- Create the infrastructure on GKE via Terraform: https://github.com/mgumieniak/gke-template-cluster

- Deploy objects on k8s via /k8s/dep.sh

Use port-forwarding or create an ingress:

- Reserve a static IP

- Buy a domain: https://www.namecheap.com/. Point the purchased domain at the reserved IP address.

- Deploy the objects in ingress_stuff to k8s