Peeking into occluded joints: A novel framework for crowd pose estimation (ECCV 2020)

- PyTorch (>=0.4 and <=1.1)

- mmcv

- OpenCV

- visdom

- pycocotools

This code was tested on Ubuntu 18.04 with CUDA 10.1 and cuDNN 7.1, using two NVIDIA 1080Ti GPUs.

Python 3.6.5 was used for development.
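As a quick sanity check before installing the other dependencies, the PyTorch version constraint listed above can be tested with a few lines of Python. This is only a sketch: the helper names `parse_version` and `torch_version_supported` are hypothetical and not part of this repository, and it assumes the range is compared on the major.minor components only, so that any 1.1.x release counts as supported.

```python
import re


def parse_version(text):
    """Return the (major, minor) components of a version string as ints."""
    match = re.match(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("unrecognised version string: {!r}".format(text))
    return tuple(int(part) for part in match.groups())


def torch_version_supported(text, low=(0, 4), high=(1, 1)):
    """Check that major.minor lies in the stated range (assumed inclusive)."""
    return low <= parse_version(text) <= high


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        status = "supported" if torch_version_supported(torch.__version__) else "outside the tested range"
        print("PyTorch {} is {}".format(torch.__version__, status))
```

Local build suffixes such as `+cu101` are ignored because only the leading numeric components are read.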

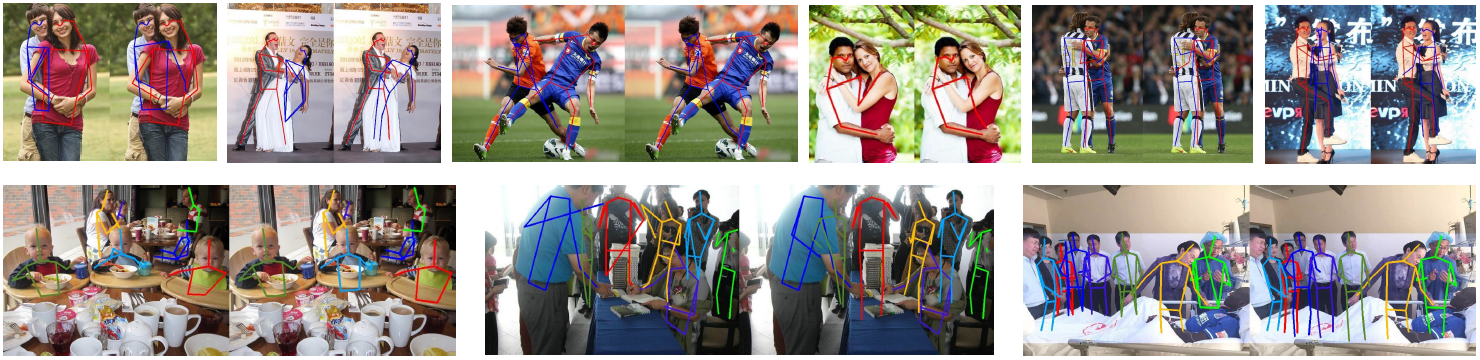

We build a new dataset, called Occluded Pose (OCPose), that contains heavier occlusions for evaluating multi-person pose estimation (MPPE). It includes challenging invisible joints and complex intertwined human poses.

| Dataset | Total | IoU>0.3 | IoU>0.5 | IoU>0.75 | Avg IoU |
|---|---|---|---|---|---|
| CrowdPose | 20000 | 8704(44%) | 2909(15%) | 309(2%) | 0.27 |
| COCO2017 | 118287 | 6504(5%) | 1209(1%) | 106(<1%) | 0.06 |
| MPII | 24987 | 0 | 0 | 0 | 0.11 |
| OCHuman | 4473 | 3264(68%) | 3244(68%) | 1082(23%) | 0.46 |
| OCPose | 9000 | 8105(90%) | 6843(76%) | 2442(27%) | 0.47 |
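To make the IoU columns concrete, here is a minimal sketch of bounding-box IoU. It assumes the table measures overlap between person bounding boxes and that boxes are given in COCO-style `(x, y, width, height)` form; the exact counting rule behind the table is defined in the paper and is not reproduced here. The function names `bbox_iou` and `max_pairwise_iou` are illustrative and do not come from this repository.

```python
def bbox_iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping rectangle (zero if disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def max_pairwise_iou(boxes):
    """Largest IoU over all pairs of boxes in one image (0.0 if fewer than two)."""
    best = 0.0
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            best = max(best, bbox_iou(boxes[i], boxes[j]))
    return best
```

Under this reading, an image would count toward the `IoU>0.5` column when `max_pairwise_iou` of its person boxes exceeds 0.5, though that thresholding rule is an assumption.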

Image code: euq6

Annotations code: 3xgr

Please download the annotations processed with the sampling rules described in our paper:

train_process_datasets

test_process_datasets

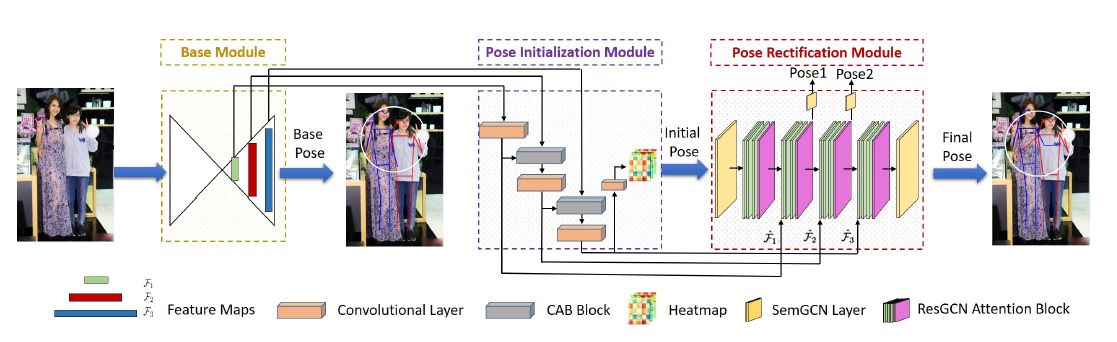

Here, we employ a top-down module (AlphaPose+, based on PyTorch) as our initial module.

The pretrained checkpoints, trained with the official code, can be downloaded as follows:

SPPE

yolov3

Instead of using the pretrained module on COCO2017, we provide a quick-start version in which you only need to train OPEC-Net on the processed data, which includes both COCO and CrowdPose.

Before training, the project structure should look like this:

    coco
    └── train2017
        └── xxxxx.jpg
    crowdpose
    └── images
        └── xxxxx.jpg
    project
    ├── test_process_datasets    (download from "Download processed annotations")
    ├── weights
    │   ├── sppe                 (SPPE weights)
    │   ├── ssd
    │   └── yolo                 (YOLO weights)
    └── train_process_datasets   (download from "Download processed annotations")
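The layout above can be verified before launching training with a small helper like the following. It is a sketch under stated assumptions: `coco`, `crowdpose`, and `project` are taken to be siblings under one root directory, the `ssd` folder is treated as optional because no contents are listed for it, and `EXPECTED_DIRS` and `missing_dirs` are hypothetical names that do not exist in this repository.

```python
from pathlib import Path

# Directories taken from the layout described in this README (assumed
# to sit under a common root; adjust if your checkout differs).
EXPECTED_DIRS = [
    "coco/train2017",
    "crowdpose/images",
    "project/train_process_datasets",
    "project/test_process_datasets",
    "project/weights/sppe",
    "project/weights/yolo",
]


def missing_dirs(root):
    """Return the expected directories that do not exist under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs(".")
    if missing:
        print("Missing directories:")
        for rel in missing:
            print("  " + rel)
    else:
        print("Directory layout looks complete.")
```

Run it from the directory that contains `coco`, `crowdpose`, and `project`; an empty result means every listed folder is present.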

For example, to train:

    TRAIN_BATCH_SIZE=14
    CONFIG_FILES=./configs/OPEC_GCN_GrowdPose_Test_FC.py
    bash train.sh ${TRAIN_BATCH_SIZE} ${CONFIG_FILES}

After training, the CrowdPose results are saved to checkpoints/name/mAP.txt.

Each result line has the following format:

epoch (without best match) (use best match)
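A results file in this format can be read back to find the best epoch. The sketch below assumes each line holds three whitespace-separated numbers (epoch, mAP without best match, mAP with best match); the exact delimiter is not specified here, so adjust the split if your file differs. `parse_map_lines` and `best_epoch` are hypothetical helper names, not functions from this repository.

```python
def parse_map_lines(lines):
    """Parse lines of the form: epoch  mAP_without_best_match  mAP_with_best_match."""
    records = []
    for line in lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        try:
            epoch = int(float(parts[0]))
            without_bm = float(parts[1])
            with_bm = float(parts[2])
        except ValueError:
            continue  # skip lines that are not purely numeric
        records.append({
            "epoch": epoch,
            "without_best_match": without_bm,
            "with_best_match": with_bm,
        })
    return records


def best_epoch(records, key="with_best_match"):
    """Return the record with the highest score for key, or None if empty."""
    if not records:
        return None
    return max(records, key=lambda record: record[key])
```

Typical usage would be `best_epoch(parse_map_lines(open(path)))`, where `path` points at the `mAP.txt` file of your run.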

For example, to test:

    CHECKPOINTS_DIRS='path to your checkpoints files'
    CONFIG_FILES=./configs/OPEC_GCN_GrowdPose_Test_FC.py
    bash test.sh ${CHECKPOINTS_DIRS} ${CONFIG_FILES}

Results on CrowdPose-test:

| Method | mAP@50:95 | AP50 | AP75 | AP80 | AP90 |
|---|---|---|---|---|---|
| Mask RCNN | 57.2 | 83.5 | 60.3 | - | - |
| Simple Pose | 60.8 | 81.4 | 65.7 | - | - |
| AlphaPose+ | 68.5 | 86.7 | 73.2 | 66.9 | 45.9 |
| OPEC-Net | 70.6 | 86.8 | 75.6 | 70.1 | 48.8 |

Results on OCHuman:

| Method | mAP@50:95 | AP50 | AP75 | AP80 | AP90 |
|---|---|---|---|---|---|
| AlphaPose+ | 27.5 | 40.8 | 29.9 | 24.8 | 9.5 |
| OPEC-Net | 29.1 | 41.3 | 31.4 | 27.0 | 12.8 |

Results on OCPose:

| Method | mAP@50:95 | AP50 | AP75 | AP80 | AP90 |
|---|---|---|---|---|---|
| Simple Pose | 27.1 | 54.3 | 24.2 | 16.8 | 4.7 |
| AlphaPose+ | 30.8 | 58.4 | 28.5 | 22.4 | 8.2 |
| OPEC-Net | 32.8 | 60.5 | 31.1 | 24.0 | 9.2 |

If you find our work useful in your research, please consider citing:

    @inproceedings{qiu2020peeking,
      title={Peeking into occluded joints: A novel framework for crowd pose estimation},
      author={Qiu, Lingteng and Zhang, Xuanye and Li, Yanran and Li, Guanbin and Wu, Xiaojun and Xiong, Zixiang and Han, Xiaoguang and Cui, Shuguang},
      booktitle={European Conference on Computer Vision},
      pages={488--504},
      year={2020},
      organization={Springer}
    }