- Author: Jason Paul

- Email: jasonpa@gmail.com

For the companion article, visit linuxtek.ca.

This tech challenge was described as follows:

URL lookup service

We have an HTTP proxy that is scanning traffic looking for malware URLs. Before allowing HTTP connections to be made, this proxy asks a service that maintains several databases of malware URLs if the resource being requested is known to contain malware.

Write a small web service, in the language/framework of your choice, that responds to GET requests where the caller passes in a URL and the service responds with some information about that URL. The GET requests look like this:

GET /urlinfo/1/{hostname_and_port}/{original_path_and_query_string}

The caller wants to know if it is safe to access that URL or not. As the implementer you get to choose the response format and structure. These lookups are blocking users from accessing the URL until the caller receives a response from your service.
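A minimal sketch of parsing that request format with Python's standard library follows. It is illustrative only: the function name `parse_urlinfo_path` and the choice to lowercase the hostname (DNS names are case-insensitive, while paths may not be) are assumptions, not part of the challenge text or of this implementation, which passes the URL as a `MalwareURL` query parameter instead.

```python
def parse_urlinfo_path(request_target: str) -> tuple[str, str]:
    """Split '/urlinfo/1/{hostname_and_port}/{original_path_and_query_string}'.

    Returns (hostname_and_port, original_path_and_query_string).
    """
    prefix = "/urlinfo/1/"
    if not request_target.startswith(prefix):
        raise ValueError(f"unexpected path: {request_target!r}")
    rest = request_target[len(prefix):]
    # Everything before the first "/" is the hostname and optional port.
    host, sep, remainder = rest.partition("/")
    if not host:
        raise ValueError("missing hostname_and_port")
    # Hostnames are case-insensitive, so normalise them; keep the path as-is.
    original = "/" + remainder if sep else "/"
    return host.lower(), original


print(parse_urlinfo_path("/urlinfo/1/Example.com:8080/login.php?user=a"))
# ('example.com:8080', '/login.php?user=a')
```

Splitting on the first "/" works because a hostname and port cannot contain a slash; everything after it belongs to the original path and query string.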

Give some thought to the following:

- The size of the URL list could grow infinitely; how might you scale this beyond the memory capacity of this VM? Bonus if you implement this.

- The number of requests may exceed the capacity of this VM; how might you solve that? Bonus if you implement this.

- What are some strategies you might use to update the service with new URLs? Updates may be as much as 5 thousand URLs a day with updates arriving every 10 minutes.

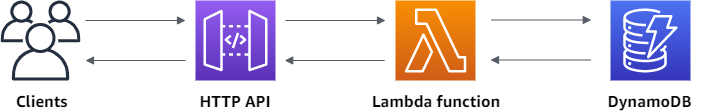

This implementation of the challenge uses a serverless model, with an HTTP API Gateway, Lambda functions, and a DynamoDB table as the backend database.

The inspiration for this configuration was this AWS tutorial. Unlike the linked tutorial, the Lambda functions are written in Python 3.

The infrastructure for running this application is implemented using Terraform.

- This implementation can be run inexpensively, as there are no persistent servers or containers that need to be running. The resources used fit within the AWS free tier.

- There was no need to implement a VPC, subnets, or other networking, which allowed the work to concentrate on implementing the required functionality.

- A NoSQL database was chosen for performance and ease of scalability. This is especially important considering the requirement that the URL list could grow infinitely.

- There was no need for multiple tables or relational queries, so NoSQL made sense from this perspective as well.

- DynamoDB offers built-in security, continuous backups, automated multi-Region replication, in-memory caching, and data export tools.

- The HTTP API Gateway provides an easy way to route HTTP GET and POST requests to the Lambda functions without having to stand up a web server.

- This implementation scales well as the number of requests grows, which addresses the challenge's concern that request volume may exceed the capacity of a single VM.

- Typical solutions would use a load balancer and VM autoscaling groups, or multiple containers.

- While it is possible to use a load balancer with Lambda, the API Gateway was a simpler option.

- There are a number of quota limitations for Lambda functions that may cause scalability issues.

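- There is a concurrency limit on the number of executions that can run at the same time (by default, 1,000 concurrent executions per account per Region, which can be raised through a quota increase request). Excessive requests could result in throttling.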

- The scenario indicates that web requests are blocked until the service provides a response. A slow lookup could therefore add seconds of delay to every user request, so the service needs to have low latency and be responsive. There are a number of considerations for latency:

- First, when the Lambda function is invoked and no warm execution environment is available, its code has to be downloaded, a new environment started, and the function initialized before it can be executed. This is referred to as a "cold-start", and it adds latency to the first request handled by each new environment. Subsequent requests to that environment are faster.

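- Keeping the Lambda function "warm" will limit the number of cold-starts. This could be done by adding a scheduled ping or health check that periodically invokes the function so that its execution environment stays loaded. Note that 900 seconds (15 minutes) is the maximum execution timeout for a single invocation, not the time an idle environment is kept; AWS does not document that idle period, so pings every few minutes are a common choice. A single ping only keeps one environment warm, so bursts of concurrent requests can still trigger cold-starts.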

- Another option to look into would be Provisioned Concurrency, which targets both causes of cold-start latency. First, the execution environment set-up happens during the provisioning process rather than during execution, which eliminates that delay. Second, because functions are kept initialized, including initialization actions and code dependencies, subsequent invocations do not repeat that initialization.
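The scheduled keep-warm ping could be handled by short-circuiting warm-up events in the handler so that they never reach DynamoDB. This sketch assumes an EventBridge scheduled rule as the pinger (its events carry `"source": "aws.events"` and `"detail-type": "Scheduled Event"`); the `is_warmup_event` helper and the response bodies are hypothetical, not taken from this repository.

```python
import json


def is_warmup_event(event: dict) -> bool:
    """Return True for EventBridge scheduled-rule events used only as pings."""
    # API Gateway events carry neither of these keys, so real requests pass through.
    return (
        event.get("source") == "aws.events"
        and event.get("detail-type") == "Scheduled Event"
    )


def lambda_handler(event, context):
    if is_warmup_event(event):
        # Return immediately: the invocation itself keeps the environment loaded.
        return {"warmup": True}
    # Hypothetical placeholder for the normal URL lookup logic.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": "handled request"}),
    }
```

Expensive set-up, such as creating the boto3 DynamoDB resource, belongs at module level outside `lambda_handler`, so that warm invocations reuse it instead of repeating it.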

The API Gateway was defined in Terraform, which deploys all of the needed resources:

- The API Gateway "lambdagateway"

- The `$default` stage was used for the deployment. The access log settings were configured to capture as much information as possible for troubleshooting incoming requests to the API Gateway.

- Two API Gateway routes, ensuring that GET requests are sent to the GET integration and POST requests are sent to the POST integration.

- The API Gateway integrations were configured to invoke the Lambda function and pass the data in the HTTP request to it.

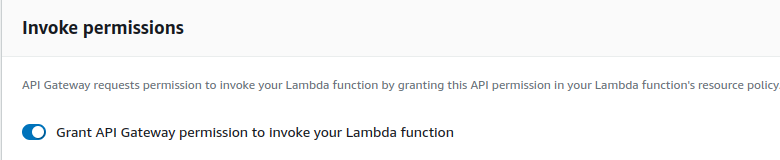

- Both of the integrations were also configured with permission to invoke the Lambda functions. This was first attempted by explicitly setting permissions, but using the option that can be enabled in the console was a better fit.

Usage

The API Gateway is designed to take in an HTTP GET or POST with parameters in the URL.

This can be built and submitted with a tool like Postman, or can be done on command line with a tool like curl.

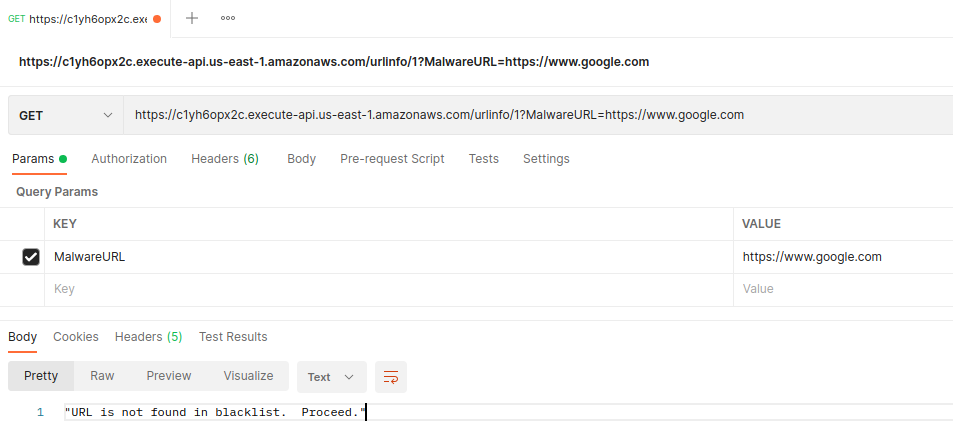

Example #1 - HTTP GET: To check if the URL is in the blacklist or not:

Curl Command:

curl -v -X GET "https://apiid.execute-api.us-east-1.amazonaws.com/urlinfo/1?MalwareURL=https://www.google.com"

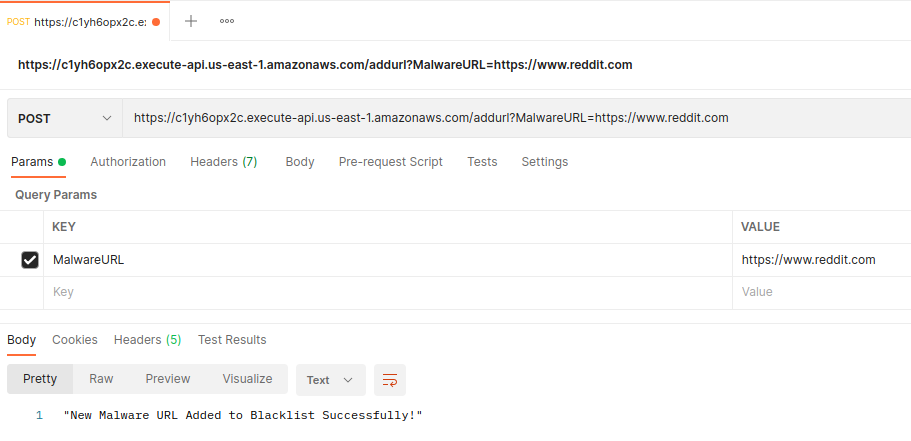

Example #2 - HTTP POST: To add a URL to the blacklist:

Curl Command:

curl -v -X POST "https://apiid.execute-api.us-east-1.amazonaws.com/addurl?MalwareURL=https://www.reddit.com"
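The same requests can also be built programmatically. The curl examples pass the target URL unencoded; percent-encoding the `MalwareURL` value is safer, because a URL containing its own `?` or `&` would otherwise be split into separate query parameters. This sketch uses only the standard library; `apiid` remains the placeholder API ID from the curl examples, and `build_request` is a hypothetical helper.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Placeholder invoke URL copied from the curl examples; substitute your API ID.
BASE_URL = "https://apiid.execute-api.us-east-1.amazonaws.com"


def build_request(method: str, route: str, malware_url: str) -> Request:
    """Build a GET or POST request with the MalwareURL value percent-encoded."""
    query = urlencode({"MalwareURL": malware_url})
    return Request(f"{BASE_URL}{route}?{query}", method=method)


check = build_request("GET", "/urlinfo/1", "https://www.google.com")
add = build_request("POST", "/addurl", "https://www.reddit.com")
print(check.get_method(), check.full_url)
# GET https://apiid.execute-api.us-east-1.amazonaws.com/urlinfo/1?MalwareURL=https%3A%2F%2Fwww.google.com
```

Sending either request is then `urllib.request.urlopen(check)`; an HTTP 400 from the service surfaces as `urllib.error.HTTPError`.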

A Lambda layer is an archive containing additional code, such as libraries, dependencies, or even custom runtimes. In this case, using a Lambda layer made sense as it could be reused for multiple Lambda functions.

The lambdalayer folder includes a generated zip file which is uploaded and linked to the Lambda functions to provide access to the needed modules, such as validators.

This page was used as a resource for creating the zip file.

A similar practice would be to create a virtual environment for each Lambda function and install the required modules. I've blogged about this here.
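For Python runtimes, Lambda extracts layers to `/opt` and adds `/opt/python` to the module search path, so the installed packages need to sit under a top-level `python/` folder in the zip. The sketch below packages a directory populated beforehand (for example with `pip install validators -t build`); the `build_layer_zip` helper and the `build` directory name are assumptions, not the repository's own build steps.

```python
import os
import zipfile


def build_layer_zip(site_packages_dir: str, zip_path: str) -> list[str]:
    """Zip site_packages_dir so that its contents sit under python/ in the archive."""
    names = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(site_packages_dir):
            for filename in files:
                full_path = os.path.join(root, filename)
                rel_path = os.path.relpath(full_path, site_packages_dir)
                # Prefix with python/ so the layer lands on /opt/python.
                arcname = os.path.join("python", rel_path).replace(os.sep, "/")
                zf.write(full_path, arcname)
                names.append(arcname)
    return sorted(names)
```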

The python folder contains the code for the Lambda functions. The challenge requirements were to be able to query for a URL to see if it is part of the existing blacklist, and to provide a method for updating the blacklist with new URLs.

- getMalwareCheck.py - Allows for a query with a URL, to see if it is part of the existing blacklist. It returns an HTTP 200 with a response indicating whether the URL was found. If there is an exception, or the URL is not valid, it returns an HTTP 400 with an appropriate response.

- gettest.json - Sample input for the getMalwareCheck.py function to test with.

- postMalwareCheck.py - Allows for a URL to be submitted to be added to the blacklist. It responds with an HTTP 200 and a message if the URL is added successfully. Otherwise, an HTTP 400 is returned with an error message.

- posttest.json - Sample input for the postMalwareCheck.py function to test with.
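The behaviour described for the two functions can be sketched without AWS dependencies by passing the storage in as a parameter. This is a minimal illustration, not the repository's code: it assumes API Gateway HTTP API (payload format 2.0) events with a `MalwareURL` query parameter, as in the curl examples, and it swaps the `validators` module for a simple `urllib.parse` check so that it runs with the standard library alone. The function names and the `malware` response field are hypothetical; in the deployed functions, the `store` set would be replaced by DynamoDB reads and writes.

```python
import json
from urllib.parse import urlparse


def _response(status: int, payload: dict) -> dict:
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def _extract_url(event: dict):
    """Return the MalwareURL query parameter if it looks like an http(s) URL."""
    # HTTP API omits queryStringParameters entirely when there is no query.
    params = event.get("queryStringParameters") or {}
    url = params.get("MalwareURL", "")
    parsed = urlparse(url)
    # Simplified stand-in for validators.url(): require a scheme and a host.
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return url
    return None


def get_malware_check(event: dict, store: set) -> dict:
    url = _extract_url(event)
    if url is None:
        return _response(400, {"error": "Invalid or missing MalwareURL"})
    return _response(200, {"url": url, "malware": url in store})


def post_malware_check(event: dict, store: set) -> dict:
    url = _extract_url(event)
    if url is None:
        return _response(400, {"error": "Invalid or missing MalwareURL"})
    store.add(url)
    return _response(200, {"message": f"{url} added to blacklist"})
```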

Usage:

The python-lambda-local package was used to test the Python functions locally before uploading them to AWS. Test JSON files were used with simple URL strings.

python-lambda-local -f lambda_handler postMalwareCheck.py posttest.json

python-lambda-local -f lambda_handler getMalwareCheck.py gettest.json

Once uploaded to AWS, the Lambda functions can also be tested in the AWS console. On the "Test" tab, an event JSON such as the contents of gettest.json or posttest.json can be entered. The test reports whether it succeeded or failed and provides some logging data.
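The contents of gettest.json and posttest.json are not reproduced here. As an assumption, a test event that mimics what the HTTP API sends (payload format version 2.0) could be generated as below; only the fields a handler is likely to read are included, and the route keys and `MalwareURL` parameter follow the curl examples rather than the actual test files. The `make_http_api_event` helper is hypothetical.

```python
import json
from urllib.parse import urlencode


def make_http_api_event(method: str, route: str, malware_url: str) -> dict:
    """Build a trimmed HTTP API (payload format 2.0) event for local tests."""
    return {
        "version": "2.0",
        "routeKey": f"{method} {route}",
        "rawPath": route,
        "rawQueryString": urlencode({"MalwareURL": malware_url}),
        "queryStringParameters": {"MalwareURL": malware_url},
        "requestContext": {"http": {"method": method, "path": route}},
        "isBase64Encoded": False,
    }


# Print one event; redirect the output to a file to use it as test input.
print(json.dumps(make_http_api_event("GET", "/urlinfo/1", "https://www.google.com"), indent=2))
```

The printed JSON can be saved to a new file and passed to python-lambda-local in place of gettest.json, provided the function reads its input from `queryStringParameters`.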

The terraform folder contains all of the resources to create the infrastructure needed for this test. The resources are split out into separate files.

The base invoke URL for the HTTP API Gateway will be displayed as an output once the resources are built.

Future Optimizations:

- It would be ideal to create a module for all of the resources, to make it more portable. This could be incorporated in a future build.

- Many of the values are hard coded and could be customized as variables. This could be incorporated in a future build.

- The Terraform state files are being managed locally for this example. To support multiple users, the state could instead be stored in Terraform Cloud, or in an S3 backend with a DynamoDB table for state locking.

- A few of the resources, for example the DynamoDB table, were created using pre-built Terraform modules. This was done to speed up bringing up the infrastructure so that testing and writing the Python scripts could start.

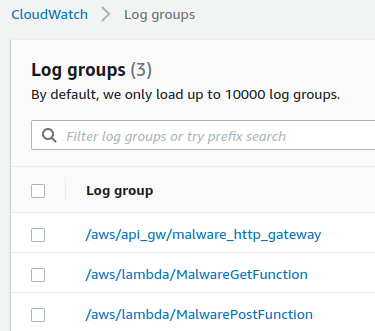

While debugging and troubleshooting to ensure the correct data was being passed from the HTTP API Gateway to the Lambda functions, it was helpful to have log groups set up to capture the HTTP requests coming in to the API Gateway, as well as viewing any errors or messages generated by the Lambda functions. Three log groups were set up via Terraform to capture this information:

To help increase the amount of data coming into the API Gateway logs, a number of parameters were enabled. This article was very helpful.

To increase the amount of data coming into the Lambda function logs, the entire event was printed to standard output (which would log to CloudWatch) in the Lambda functions. This would mean that all of the parameters passed to the Lambda function when invoked would be available in the CloudWatch logs.
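Printing the whole event works, but a single structured log line is easier to search in CloudWatch Logs Insights. The sketch below, an assumption rather than the repository's code, logs a few top-level HTTP API fields plus the full event as one JSON line using the standard `logging` module, whose output the Lambda Python runtime forwards to CloudWatch. The `log_event` helper is hypothetical. Logging full events can also capture query parameters and headers, so it is worth checking that nothing sensitive is recorded.

```python
import json
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def log_event(event: dict) -> str:
    """Log the incoming event as one JSON line and return that line."""
    http = event.get("requestContext", {}).get("http", {})
    record = {
        "routeKey": event.get("routeKey"),
        "method": http.get("method"),
        "sourceIp": http.get("sourceIp"),
        "query": event.get("queryStringParameters"),
        "event": event,  # full event, as the original functions print it
    }
    line = json.dumps(record, default=str)
    logger.info(line)
    return line


def lambda_handler(event, context):
    log_event(event)
    return {"statusCode": 200, "body": "ok"}
```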

Prerequisites:

- Git

- AWS CLI v2 with a valid `~/.aws/credentials` file containing an access key and secret.

- Terraform (currently 1.2.4).

Deployment:

- Clone this repository.

- Access the terraform folder.

- Ensure the terraform.tfvars file is customized for the environment you want to create. Note that this file is typically listed in .gitignore to prevent sensitive data from being stored in GitHub.

- Run `terraform init` to initialize the working directory.

- Run `terraform plan` to preview the changes. Review all of the resources to be created.

- Run `terraform apply --auto-approve` to deploy the resources.

- Once the resources are all created, test the Lambda functions or HTTP API Gateway using the methods described in their sections.

- Once testing is complete, run `terraform destroy --auto-approve` to destroy all of the resources and avoid running costs.

Other changes to implementation that would be recommended:

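- Use AWS WAF (Web Application Firewall) to limit the number of requests, to avoid runaway costs and block malicious activity. Note that AWS WAF attaches to API Gateway REST APIs rather than HTTP APIs, so this would require switching to a REST API or placing a CloudFront distribution in front of the HTTP API.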

- AWS Shield could be used to mitigate DDoS attacks. Separately, throttling on the POST route could put a cap on the rate of service updates described in the challenge (5,000 URLs/day, with updates every 10 minutes).

- WAF rate-based rules can limit the number of requests from a single source within a 5-minute period, providing throttling.

- Add additional commenting and debugging to code.

- The code currently uses basic exception handling. This could be more descriptive, with more logic added to handle edge cases.

- Add additional Lambda functions to have full CRUD (Create Read Update Delete) functionality, allowing various HTTP commands to be able to add, delete, update, or query the URL Blacklist.

- Add additional metadata to the DynamoDB table to have more descriptive feedback on queries.

- Add functionality to update the URL blacklist automatically from existing malware URL databases, such as URLhaus.

- Originally I tried to prepopulate DynamoDB using the Terraform aws_dynamodb_table_item resource, and a list of keys.

- This worked; however, a separate resource is created in the state file for each record, which is not ideal as the list grows.

- I would prefer to find a better way to do this, such as prepopulating the table with a separate Python script called as a provisioner.
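A minimal sketch of such a prepopulation script follows, assuming a table whose partition key is a string attribute named `MalwareURL` (an assumption; the actual key name is set in the Terraform code) and a plain-text input with one URL per line, such as a URLhaus export. DynamoDB's `BatchWriteItem` accepts at most 25 requests per call, rejects duplicate keys within one call, and may return `UnprocessedItems` when throttled, so the sketch de-duplicates, chunks, and retries with exponential backoff. The client is passed in so that the logic can be tested without AWS; in practice it would be `boto3.client("dynamodb")`. The helper names are hypothetical.

```python
import time

MAX_BATCH = 25  # BatchWriteItem accepts at most 25 requests per call


def load_urls(lines):
    """Strip whitespace, skip blanks and '#' comments, de-duplicate in order."""
    seen = set()
    urls = []
    for line in lines:
        url = line.strip()
        if url and not url.startswith("#") and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


def to_batches(urls, key_name="MalwareURL"):
    """Yield lists of PutRequests in DynamoDB's low-level attribute format."""
    for start in range(0, len(urls), MAX_BATCH):
        chunk = urls[start:start + MAX_BATCH]
        yield [{"PutRequest": {"Item": {key_name: {"S": url}}}} for url in chunk]


def write_batches(client, table_name, batches, max_retries=5, base_delay=0.1):
    """Send each batch, retrying UnprocessedItems with exponential backoff."""
    for batch in batches:
        request = {table_name: batch}
        for attempt in range(max_retries + 1):
            response = client.batch_write_item(RequestItems=request)
            request = response.get("UnprocessedItems") or {}
            if not request:
                break
            if attempt < max_retries:
                time.sleep(base_delay * 2 ** attempt)
        else:
            raise RuntimeError(f"items still unprocessed after {max_retries} retries")
```

boto3's higher-level `Table.batch_writer()` context manager also handles the chunking and resending of unprocessed items, and would be a reasonable alternative to the hand-written retry loop.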