This repository is for RCGAN, presented in the following paper:

Q. Li, L. Lu, Z. Li, et al., "Coupled GAN with Relativistic Discriminators for Infrared and Visible Images Fusion", IEEE SENSORS JOURNAL, 2019. [Link]

This code has been tested in an Ubuntu 16.04 environment (Python 3.6, PyTorch 1.1.0, CUDA 9.0, cuDNN 5.1) with NVIDIA 1080Ti/2080Ti GPUs.
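To compare your own setup with the tested one, you could use a small check like the sketch below. It is not part of the repository. It reads the Python version from the standard library and, if PyTorch is installed, `torch.__version__`, `torch.version.cuda` and `torch.cuda.is_available()`. A mismatch is only a hint, because other versions may also work.

```python
import platform

# Versions this README reports as tested; they are not hard requirements.
TESTED = {"python": "3.6", "torch": "1.1.0", "cuda": "9.0"}


def environment_report():
    """Collect the versions that matter for running RCGAN."""
    report = {"python": platform.python_version(), "torch": None, "cuda": None}
    try:
        import torch  # optional: only reported if installed
        report["torch"] = torch.__version__
        report["cuda"] = torch.version.cuda  # None on CPU-only builds
        report["gpu"] = torch.cuda.is_available()
    except ImportError:
        pass
    return report


def mismatches(report, tested=TESTED):
    """Return the keys whose installed version does not start with the tested one."""
    return [key for key, want in tested.items()
            if not str(report.get(key) or "").startswith(want)]


if __name__ == "__main__":
    rep = environment_report()
    print(rep)
    print("differs from the tested setup:", mismatches(rep))
```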

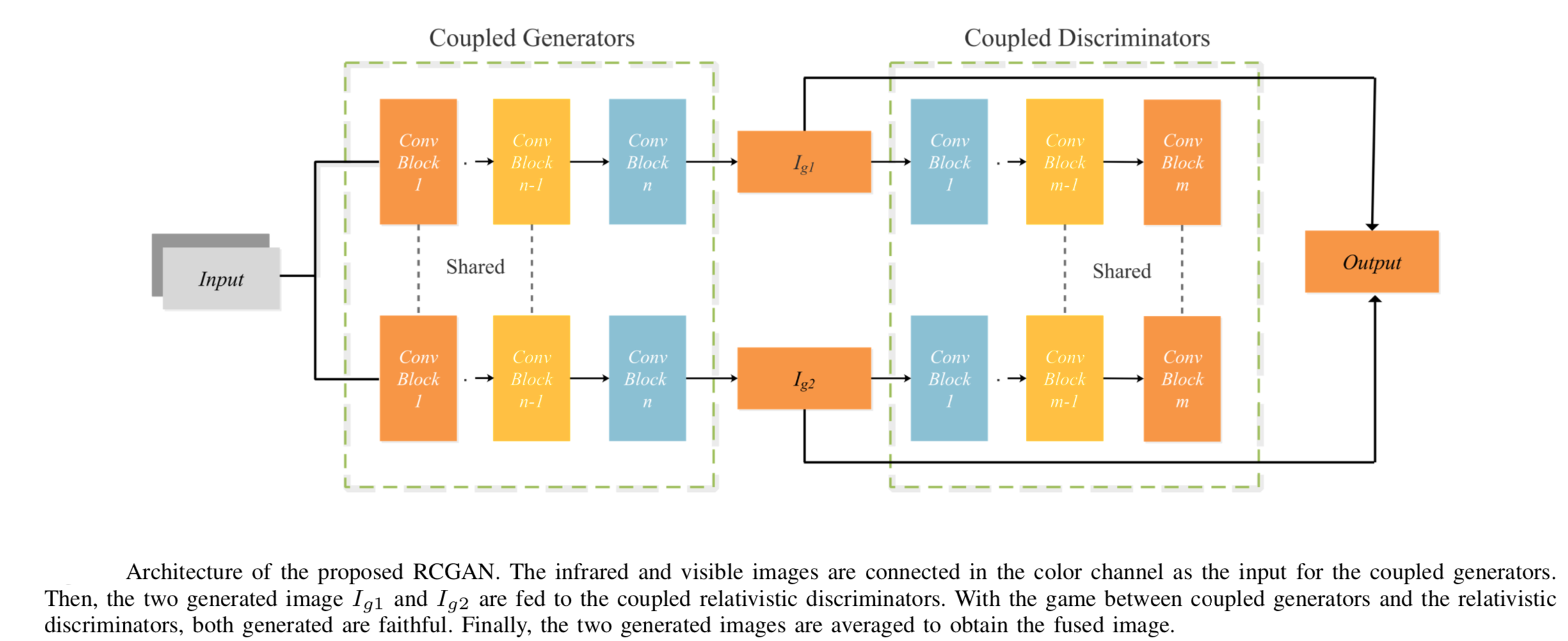

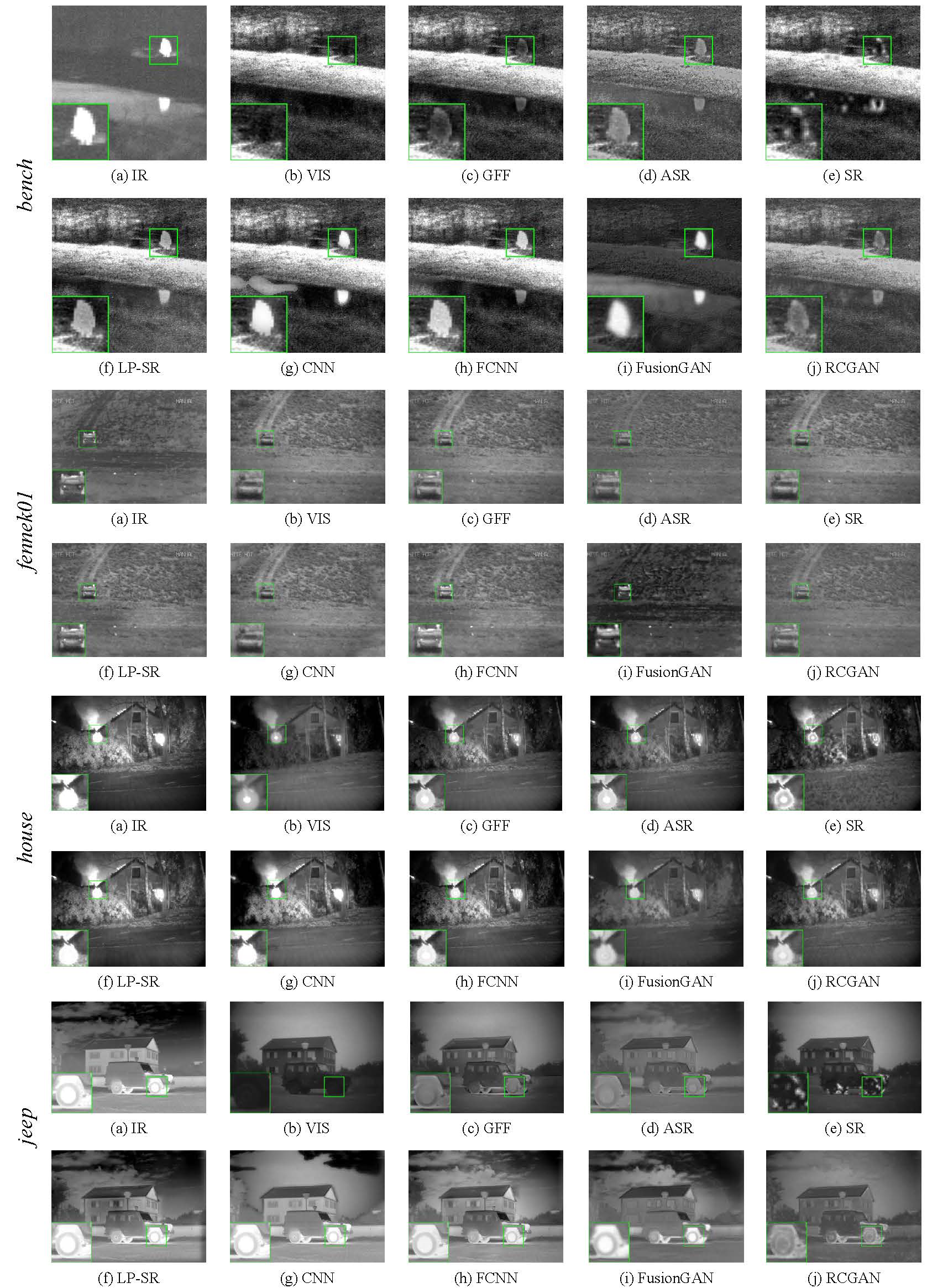

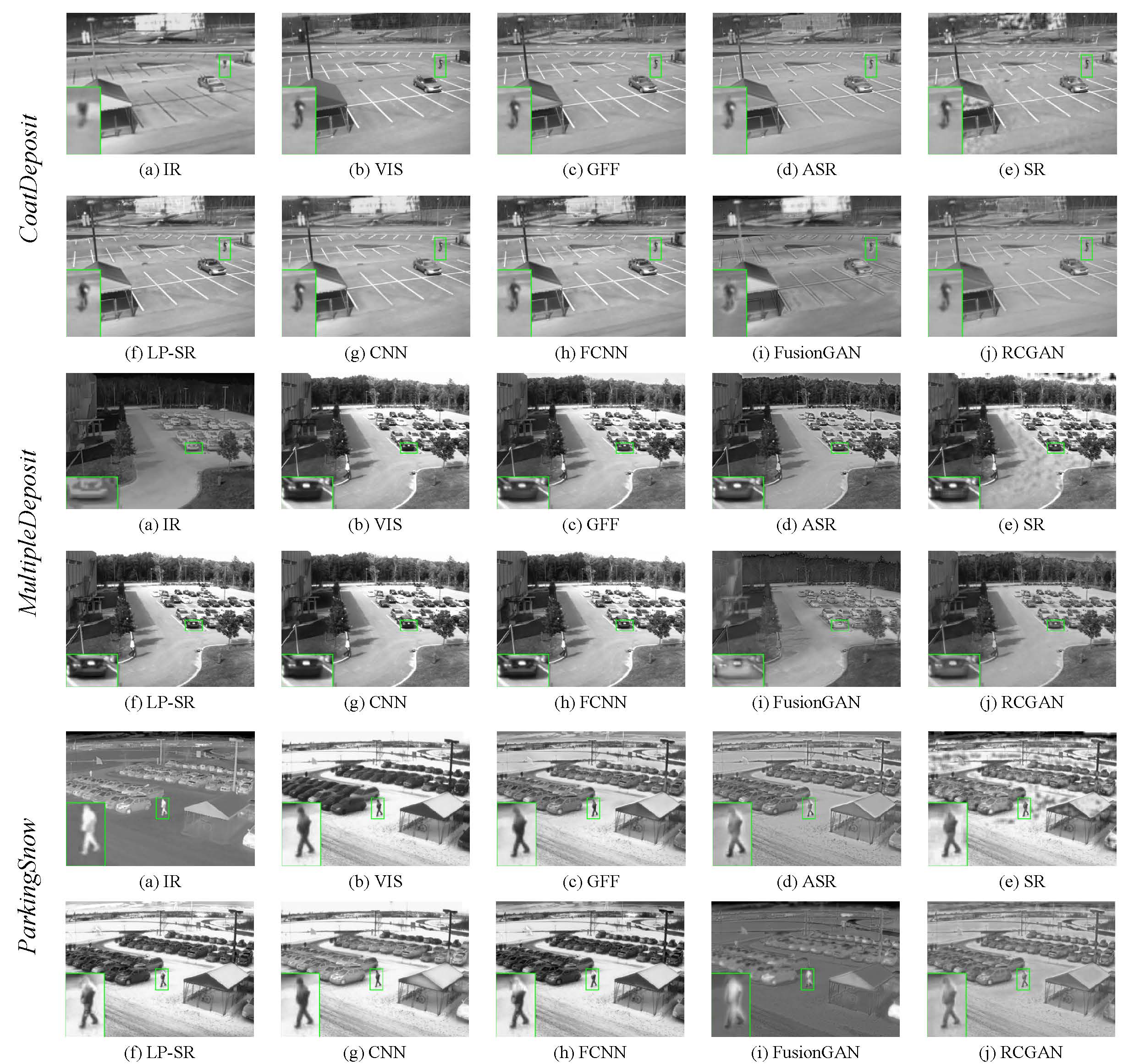

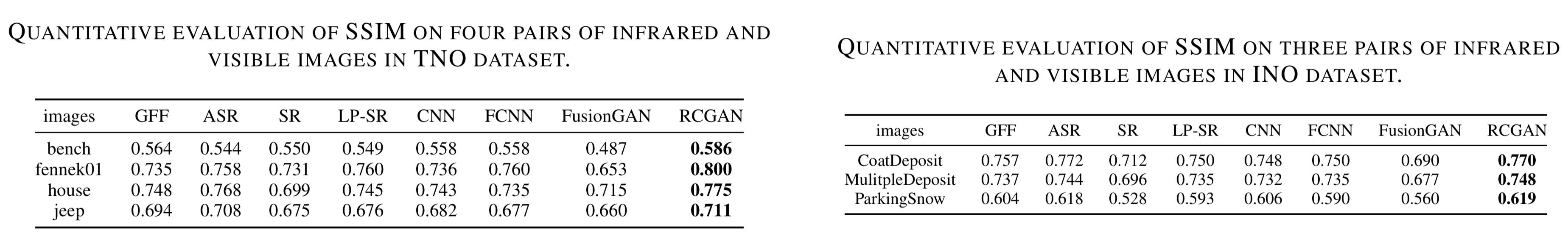

Infrared and visible images form a pair of multi-source, multi-sensor images. However, infrared images lack structural details, and visible images are sensitive to the imaging environment. To fully utilize the meaningful information in infrared and visible images, this paper proposes a practical fusion method termed RCGAN. In RCGAN, we introduce the coupled generative adversarial network into the field of image fusion for the first time. We also apply the simple yet efficient relativistic discriminator to our network, which makes it converge faster. More importantly, previous works use either the infrared image or the visible image as the label for the generator. We instead propose a strategy that uses a pre-fused image as the label. With this strategy, the generator no longer produces fused images out of thin air. It refines an existing fused image into a better one, going from ‘existence’ to ‘excellent’. Extensive experiments demonstrate the effectiveness of our network compared with other state-of-the-art methods.
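A relativistic discriminator does not judge whether a single image is real. It estimates whether a real image is more realistic than a fake one. The sketch below illustrates this with the relativistic *average* GAN (RaGAN) losses of Jolicoeur-Martineau, the variant used by the ESRGAN model in BasicSR. This README does not say which variant RCGAN uses, and the function names are hypothetical. The sketch works on plain lists of discriminator logits so the arithmetic is easy to follow. A PyTorch version would apply `BCEWithLogitsLoss` to the same logit differences.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mean(values):
    return sum(values) / len(values)


def ragan_d_loss(real_logits, fake_logits):
    """Relativistic average discriminator loss.

    Real samples should look more realistic than the average fake sample,
    and fake samples less realistic than the average real sample.
    Uses -log(sigmoid(z)) == softplus(-z) and -log(1 - sigmoid(z)) == softplus(z).
    """
    mean_real, mean_fake = mean(real_logits), mean(fake_logits)
    real_term = mean([softplus(-(r - mean_fake)) for r in real_logits])
    fake_term = mean([softplus(f - mean_real) for f in fake_logits])
    return real_term + fake_term


def ragan_g_loss(real_logits, fake_logits):
    """Generator loss: the same objective with the roles of real and fake swapped."""
    return ragan_d_loss(fake_logits, real_logits)
```

Unlike a standard GAN, the generator loss here also depends on the real logits, so the generator receives gradients from real images too. Some implementations, including BasicSR's, average the two terms instead of summing them. That only rescales the loss.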

- The pre-trained model is placed in the `release` folder. Modify `options/test/test_RCGAN.json` to specify your own test options. Pay particular attention to the following options:
  - `dataroot_VI`: the path of the visible dataset for testing.
  - `dataroot_IR`: the path of the infrared dataset for testing.

- `cd` to the root folder and run the following command:

  `python test.py -opt options/test/test_RCGAN.json`

  The fused images are then written to the `./release/results` folder. In addition, the `./release/cmp` folder contains the source images and the fused images for easier comparison.
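To build comparison strips for your own images, similar in spirit to the `./release/cmp` folder, you could use a minimal NumPy sketch like the one below. It assumes grayscale images of equal height that are already loaded as arrays. The function name and the `gap` parameter are hypothetical, and the layout of the repository's own `cmp` images may differ.

```python
import numpy as np


def side_by_side(*images, gap=4, fill=255):
    """Join equally tall 2-D (grayscale) images left to right with a blank gap."""
    if not images:
        raise ValueError("need at least one image")
    height = images[0].shape[0]
    if any(img.ndim != 2 or img.shape[0] != height for img in images):
        raise ValueError("expected 2-D images with the same height")
    spacer = np.full((height, gap), fill, dtype=images[0].dtype)
    parts = [images[0]]
    for img in images[1:]:
        parts.extend([spacer, img])  # gap column(s), then the next image
    return np.hstack(parts)


# Example: visible | infrared | fused, all loaded as uint8 arrays
# strip = side_by_side(vi, ir, fused)
```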

- Pre-fuse the infrared and visible images using GFF in `./preparation/1. GFF`. The pre-fused images will then appear in `./Demo_dataset/train/ori_img/pf`.
- Modify and run `preparation/2. generate_train_data.py` to crop the training data into patches. By default, the patch size is 120 × 120 and the stride is 14. After it runs, a folder named `./Demo_dataset/train/sub_img` is created.
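Patch extraction can be pictured as a sliding window. The sketch below is not the code of `generate_train_data.py`. It assumes the visible, infrared, and pre-fused images of a scene are already loaded as NumPy arrays of the same size. It crops all three at identical positions so that every training triplet stays aligned. The border handling is also an assumption: windows that would cross the image edge are skipped.

```python
import numpy as np


def patch_origins(height, width, patch_size=120, stride=14):
    """Top-left corners of every full window that fits inside the image."""
    return [(top, left)
            for top in range(0, height - patch_size + 1, stride)
            for left in range(0, width - patch_size + 1, stride)]


def crop_aligned(vi, ir, pf, patch_size=120, stride=14):
    """Crop visible, infrared and pre-fused images at identical positions.

    Returns a list of (vi_patch, ir_patch, pf_patch) tuples.
    """
    if not (vi.shape[:2] == ir.shape[:2] == pf.shape[:2]):
        raise ValueError("source images must have the same height and width")
    height, width = vi.shape[:2]
    triplets = []
    for top, left in patch_origins(height, width, patch_size, stride):
        # Slice only the two spatial axes; a channel axis, if any, is kept whole.
        window = (slice(top, top + patch_size), slice(left, left + patch_size))
        triplets.append((vi[window], ir[window], pf[window]))
    return triplets
```

With the defaults, a 134 × 148 image yields 2 × 3 = 6 patches. The window fits at rows 0 and 14 and at columns 0, 14, and 28. In general, the count is (⌊(H − 120)/14⌋ + 1) × (⌊(W − 120)/14⌋ + 1).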

Modify `options/train/train_RCGAN.json` to specify your own training options. Pay particular attention to the following options:

- `dataroot_VI`: the path of the visible dataset for training or validation.
- `dataroot_IR`: the path of the infrared dataset for training or validation.
- `dataroot_PF`: the path of the pre-fused dataset for training or validation.

Other settings, such as `n_workers` (the number of data-loading threads), `batch_size`, and the remaining hyper-parameters, are left at their default values. You may modify them to suit your needs.
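Editing the option files by hand works well. If you switch datasets often, a small script can do the same job. The hypothetical sketch below is not part of the repository. It loads an option file and overwrites selected keys, such as `dataroot_VI`, `dataroot_IR`, `dataroot_PF` or `batch_size`, wherever they appear in the nested structure. It then reports how many values it replaced. The sketch makes two assumptions. First, the file is JSON, possibly with `//` line comments, which BasicSR-style option files may contain and `json.loads` rejects. Second, no value itself contains `//`.

```python
import json


def strip_line_comments(text):
    """Drop everything after '//' on each line (assumes no value contains '//')."""
    return "\n".join(line.split("//")[0] for line in text.splitlines())


def load_options(path):
    """Read a JSON option file into a nested dict."""
    with open(path, "r", encoding="utf-8") as f:
        return json.loads(strip_line_comments(f.read()))


def set_keys(node, updates):
    """Overwrite every key listed in `updates`, at any depth.

    Returns the number of replaced values; a misspelled key shows up
    as a lower count than expected.
    """
    count = 0
    if isinstance(node, dict):
        for key, value in node.items():
            if key in updates:
                node[key] = updates[key]  # replacing a value keeps the dict size unchanged
                count += 1
            else:
                count += set_keys(value, updates)
    elif isinstance(node, list):
        for item in node:
            count += set_keys(item, updates)
    return count


# Example (paths and the output file name are placeholders):
# opt = load_options("options/train/train_RCGAN.json")
# n = set_keys(opt, {"dataroot_VI": "/data/VI", "dataroot_IR": "/data/IR",
#                    "dataroot_PF": "/data/PF"})
# with open("options/train/my_RCGAN.json", "w", encoding="utf-8") as f:
#     json.dump(opt, f, indent=2)
```

A key that appears in both the training and the validation sections is replaced in both. Use separate calls on the sub-dictionaries if they should point to different folders.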

Tips:

- The `resume` option allows you to continue training your model after an interruption. If you set `resume` to `true`, the program reads the last saved checkpoint located at `resume_path`.
- We set `"data_type": "npy_reset"` to speed up data reading during training. Reading a NumPy file is faster than reading an image file, so we first convert the PNG files to NumPy files. This conversion is performed only once, the first time the data is accessed.
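The caching idea behind `npy_reset` can be illustrated with a small helper. This is only a sketch of the general technique, not the repository's implementation. It stores the decoded array as a `.npy` file next to the image, whereas the real option may use a different location and naming. `decode` is any function that turns an image path into an array. For example, if OpenCV is installed, you could pass `lambda p: cv2.imread(p, cv2.IMREAD_UNCHANGED)`.

```python
import os

import numpy as np


def load_with_npy_cache(image_path, decode):
    """Return the image as an array, caching it as a .npy file beside the source.

    The first call decodes the image with `decode` and saves the result;
    later calls load the .npy file directly and skip image decoding.
    """
    cache_path = os.path.splitext(image_path)[0] + ".npy"
    if os.path.exists(cache_path):
        return np.load(cache_path)
    array = np.asarray(decode(image_path))
    np.save(cache_path, array)
    return array
```

This sketch never checks whether the source image has changed. Delete the `.npy` files after replacing the images so that they are decoded again.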

After making the above modifications, start the training process by running

`python train.py -opt options/train/train_RCGAN.json`

The trained model is then saved in `experiment/*****/epoch/checkpoint.pth`. You can also visualize the training and validation losses using `results/results.csv`.
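Two small helpers can make the training outputs easier to use. In the hypothetical sketch below, `latest_checkpoint` finds the most recently written `checkpoint.pth` by matching the experiment folder with a glob wildcard, which is handy as a value for `resume_path`. `best_row` returns the row of a CSV log with the lowest value in a given column. The column name `val_loss` is only a placeholder. Check the header of your `results/results.csv` for the actual names.

```python
import csv
import glob
import os


def latest_checkpoint(pattern="experiment/*/epoch/checkpoint.pth"):
    """Return the most recently modified file matching `pattern`, or None."""
    candidates = glob.glob(pattern)
    return max(candidates, key=os.path.getmtime) if candidates else None


def best_row(lines, column):
    """Return the CSV row (a dict) with the smallest numeric value in `column`.

    `lines` may be an open file or any iterable of CSV text lines.
    """
    rows = [row for row in csv.DictReader(lines) if row.get(column)]
    if not rows:
        raise ValueError("no values found in column %r" % column)
    return min(rows, key=lambda row: float(row[column]))


# Example (the column name is a placeholder):
# print(latest_checkpoint())
# with open("results/results.csv", newline="") as f:
#     print(best_row(f, "val_loss"))
```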

For more results, please refer to our paper.

If you find the code helpful in your research or work, please cite our paper:

Q. Li et al., "Coupled GAN with Relativistic Discriminators for Infrared and Visible Images Fusion," in IEEE Sensors Journal.

This code is built on BasicSR. We thank the authors for sharing their code.