[Project Page] [Paper]

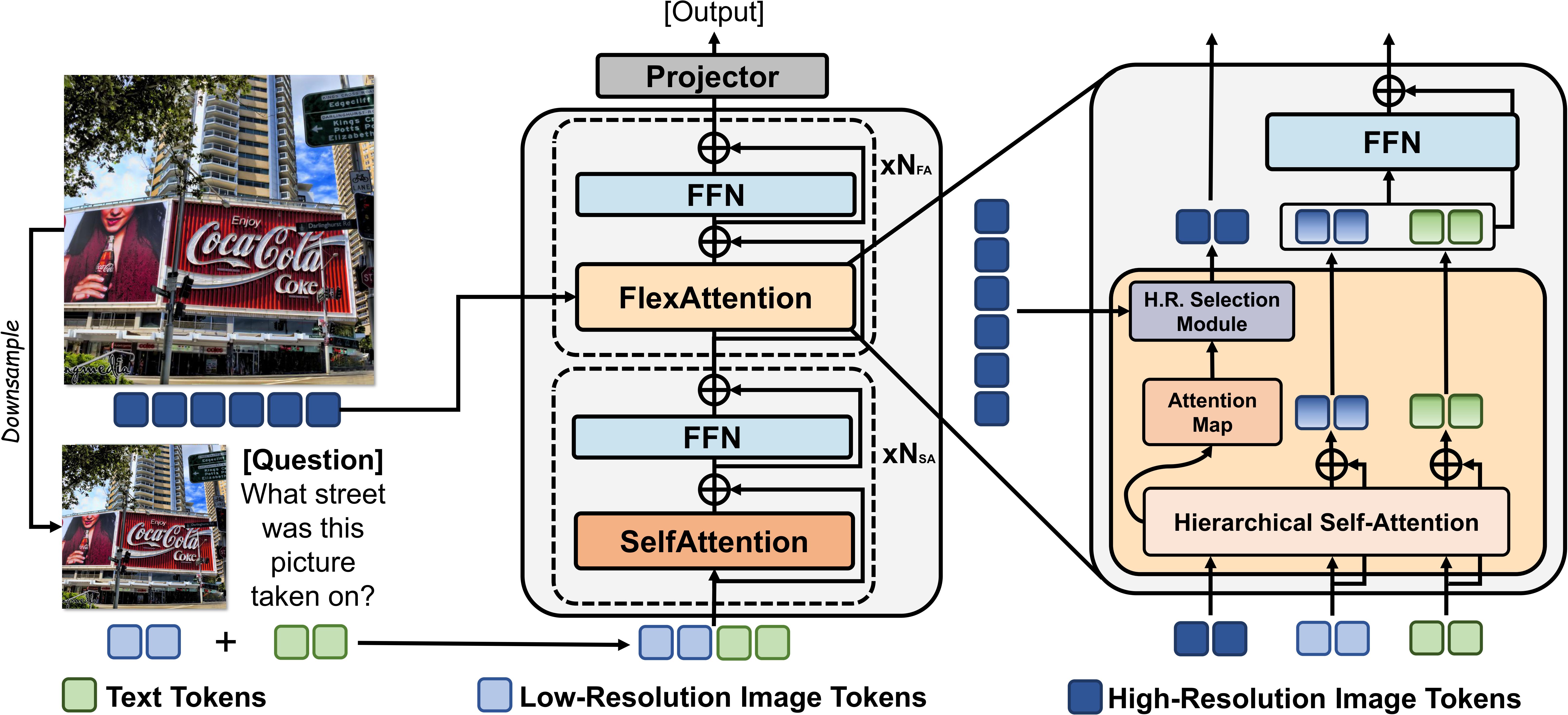

This repository contains the official code for FlexAttention for Efficient High-Resolution Vision-Language Models.

- July 2024: Open-source codebase and evaluation.

- July 2024: Accepted by ECCV'2024!

conda create -n flexattention python=3.9

conda activate flexattention

pip install -e .

pip install -e ".[train]"

pip install -e ./transformers

You can download our 7B model checkpoint from Hugging Face and put it into the checkpoints folder.
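Before launching an evaluation, it can help to confirm that the checkpoint landed where the commands in this README expect it. The following is a minimal sketch, not part of the repository: the helper name `missing_checkpoint_files` is hypothetical, the path is taken from the `--model-path` argument used in this README, and the assumption that a `config.json` should be present is based on how Hugging Face model repositories are usually laid out.

```python
from pathlib import Path

# Path taken from the --model-path argument used by the evaluation commands.
DEFAULT_CHECKPOINT = Path("checkpoints/llava-v1.5-7b-flexattn")


def missing_checkpoint_files(checkpoint_dir=DEFAULT_CHECKPOINT, required=("config.json",)):
    """Return the names in `required` that are absent from `checkpoint_dir`.

    `required` is an assumption: Hugging Face model repositories normally ship
    a config.json, but adjust the tuple to match the files you downloaded.
    """
    checkpoint_dir = Path(checkpoint_dir)
    if not checkpoint_dir.is_dir():
        # The folder does not exist yet, so every required file is missing.
        return list(required)
    return [name for name in required if not (checkpoint_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoint_files()
    if missing:
        print(f"Missing from {DEFAULT_CHECKPOINT}: {', '.join(missing)}")
    else:
        print(f"{DEFAULT_CHECKPOINT} contains the expected files.")
```

An empty list means every file named in `required` was found; extend the tuple if you also want to check for weight or tokenizer files.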

- Follow these instructions to download the TextVQA evaluation images and annotations, and extract them to datasets/eval/textvqa.

- Run the multi-GPU inference:

torchrun --nproc_per_node 3 scripts/evaluation/eval_textvqa.py --dist --model-path checkpoints/llava-v1.5-7b-flexattn --id llava-v1.5-7b-flexattn

It will generate a file similar to answer_textvqa_llava-v1.5-7b-flexattn_xxx.jsonl in the root folder.
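Because the trailing part of the answer file name differs between runs, it may be convenient to look the file up rather than type it. The sketch below assumes the file name follows the pattern shown above, with an arbitrary suffix in place of `xxx`; the helper name `latest_answer_file` is hypothetical and not part of the repository.

```python
from pathlib import Path


def latest_answer_file(root=".", model_id="llava-v1.5-7b-flexattn"):
    """Return the most recently modified TextVQA answer file in `root`, or None.

    Assumes the naming pattern answer_textvqa_<model_id>_<suffix>.jsonl.
    """
    pattern = f"answer_textvqa_{model_id}_*.jsonl"
    # Sort by modification time so the newest run comes last.
    candidates = sorted(Path(root).glob(pattern), key=lambda p: p.stat().st_mtime)
    return candidates[-1] if candidates else None


if __name__ == "__main__":
    answer_file = latest_answer_file()
    print(answer_file if answer_file else "No answer file found in the current folder.")
```

The returned path is what the `ANSWER_FILE` placeholder in the scoring command stands for.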

- Run the evaluation script:

bash scripts/evaluation/get_textvqa_score.sh ANSWER_FILE

- Download the dataset from Hugging Face.

git lfs install

git clone https://huggingface.co/datasets/craigwu/vstar_bench

- Run the multi-GPU inference:

# Attribute

torchrun --nproc_per_node 3 scripts/evaluation/eval_vbench.py --dist --model-path checkpoints/llava-v1.5-7b-flexattn --id llava-v1.5-7b-flexattn --subset direct_attributes

# Spatial

torchrun --nproc_per_node 3 scripts/evaluation/eval_vbench.py --dist --model-path checkpoints/llava-v1.5-7b-flexattn --id llava-v1.5-7b-flexattn --subset relative_position
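The two commands above differ only in the `--subset` value, so they can be generated in a loop, which also makes it easy to change the GPU count in one place. This is a rough sketch under the assumption that the flags are exactly those shown above; the helper name `vstar_commands` is hypothetical and not part of the repository.

```python
import shlex

# The two subsets used by the commands in this README.
SUBSETS = ("direct_attributes", "relative_position")


def vstar_commands(
    num_gpus=3,
    model_path="checkpoints/llava-v1.5-7b-flexattn",
    run_id="llava-v1.5-7b-flexattn",
):
    """Build one torchrun argument list per subset."""
    commands = []
    for subset in SUBSETS:
        commands.append([
            "torchrun", "--nproc_per_node", str(num_gpus),
            "scripts/evaluation/eval_vbench.py", "--dist",
            "--model-path", model_path,
            "--id", run_id,
            "--subset", subset,
        ])
    return commands


if __name__ == "__main__":
    # Print the commands for inspection instead of running them.
    for cmd in vstar_commands():
        print(shlex.join(cmd))
```

To execute the commands rather than print them, each argument list can be passed to `subprocess.run(cmd, check=True)` from the repository root.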

- Download the dataset from here, and extract it to datasets/eval/.

- Run the multi-GPU inference:

torchrun --nproc_per_node 3 scripts/evaluation/eval_magnifier.py --dist --model-path checkpoints/llava-v1.5-7b-flexattn --id llava-v1.5-7b-flexattn

Coming soon.

LLaVA: the codebase that our project builds on. Thanks to its authors for their amazing code and models.

If our work is useful or relevant to your research, please kindly recognize our contributions by citing our paper:

@misc{li2024flexattention,

title={FlexAttention for Efficient High-Resolution Vision-Language Models},

author={Junyan Li and Delin Chen and Tianle Cai and Peihao Chen and Yining Hong and Zhenfang Chen and Yikang Shen and Chuang Gan},

year={2024},

eprint={2407.20228},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2407.20228},

}